Dataset Viewer

url: string (lengths 58-61) | repository_url: string (1 class) | labels_url: string (lengths 72-75) | comments_url: string (lengths 67-70) | events_url: string (lengths 65-68) | html_url: string (lengths 46-51) | id: int64 (600M-2.05B) | node_id: string (lengths 18-32) | number: int64 (2-6.51k) | title: string (lengths 1-290) | user: dict | labels: list (lengths 0-4) | state: string (2 classes) | locked: bool (1 class) | assignee: dict | assignees: list (lengths 0-4) | milestone: dict | comments: sequence (lengths 0-30) | created_at: unknown | updated_at: unknown | closed_at: unknown | author_association: string (3 classes) | active_lock_reason: float64 | draft: float64 (0-1) ⌀ | pull_request: dict | body: string (lengths 0-228k) ⌀ | reactions: dict | timeline_url: string (lengths 67-70) | performed_via_github_app: float64 | state_reason: string (3 classes) | is_pull_request: bool (2 classes) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/2469 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2469/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2469/comments | https://api.github.com/repos/huggingface/datasets/issues/2469/events | https://github.com/huggingface/datasets/pull/2469 | 916,440,418 | MDExOlB1bGxSZXF1ZXN0NjY2MTA1OTk1 | 2,469 | Bump tqdm version | {

"avatar_url": "https://avatars.githubusercontent.com/u/26859204?v=4",

"events_url": "https://api.github.com/users/lewtun/events{/privacy}",

"followers_url": "https://api.github.com/users/lewtun/followers",

"following_url": "https://api.github.com/users/lewtun/following{/other_user}",

"gists_url": "https://api.github.com/users/lewtun/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lewtun",

"id": 26859204,

"login": "lewtun",

"node_id": "MDQ6VXNlcjI2ODU5MjA0",

"organizations_url": "https://api.github.com/users/lewtun/orgs",

"received_events_url": "https://api.github.com/users/lewtun/received_events",

"repos_url": "https://api.github.com/users/lewtun/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lewtun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lewtun/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lewtun"

} | [] | closed | false | null | [] | null | [

"i tried both the latest version of `tqdm` and the version required by `autonlp` - no luck with windows 😞 \r\n\r\nit's very weird that a progress bar would trigger these kind of errors, so i'll have a look to see if it's something unique to `datasets`",

"Closing since this is now fixed in #2482 "

] | "2021-06-09T17:24:40" | "2021-06-11T15:03:42" | "2021-06-11T15:03:36" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/2469.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2469",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/2469.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2469"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2469/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2469/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/1373 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1373/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1373/comments | https://api.github.com/repos/huggingface/datasets/issues/1373/events | https://github.com/huggingface/datasets/pull/1373 | 760,280,869 | MDExOlB1bGxSZXF1ZXN0NTM1MTM5MTY0 | 1,373 | Add OPUS ECB Dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/1183441?v=4",

"events_url": "https://api.github.com/users/abhishekkrthakur/events{/privacy}",

"followers_url": "https://api.github.com/users/abhishekkrthakur/followers",

"following_url": "https://api.github.com/users/abhishekkrthakur/following{/other_user}",

"gists_url": "https://api.github.com/users/abhishekkrthakur/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/abhishekkrthakur",

"id": 1183441,

"login": "abhishekkrthakur",

"node_id": "MDQ6VXNlcjExODM0NDE=",

"organizations_url": "https://api.github.com/users/abhishekkrthakur/orgs",

"received_events_url": "https://api.github.com/users/abhishekkrthakur/received_events",

"repos_url": "https://api.github.com/users/abhishekkrthakur/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/abhishekkrthakur/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/abhishekkrthakur/subscriptions",

"type": "User",

"url": "https://api.github.com/users/abhishekkrthakur"

} | [] | closed | false | null | [] | null | [] | "2020-12-09T12:18:22" | "2020-12-10T15:25:55" | "2020-12-10T15:25:54" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/1373.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1373",

"merged_at": "2020-12-10T15:25:54Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1373.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1373"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1373/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1373/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/964 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/964/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/964/comments | https://api.github.com/repos/huggingface/datasets/issues/964/events | https://github.com/huggingface/datasets/pull/964 | 754,474,660 | MDExOlB1bGxSZXF1ZXN0NTMwMzY4OTAy | 964 | Adding the WebNLG dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

} | [] | closed | false | null | [] | null | [

"This is task is part of the GEM suite so will actually need a more complete dataset card. I'm taking a break for now though and will get back to it before merging :) "

] | "2020-12-01T15:05:23" | "2020-12-02T17:34:05" | "2020-12-02T17:34:05" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/964.diff",

"html_url": "https://github.com/huggingface/datasets/pull/964",

"merged_at": "2020-12-02T17:34:05Z",

"patch_url": "https://github.com/huggingface/datasets/pull/964.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/964"

} | This PR adds data from the WebNLG challenge, with one configuration per release and challenge iteration.

More information can be found [here](https://webnlg-challenge.loria.fr/)

Unfortunately, the data itself comes from a pretty large number of small XML files, so the dummy data ends up being quite large (8.4 MB even keeping only one example per file). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/964/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/964/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3804 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3804/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3804/comments | https://api.github.com/repos/huggingface/datasets/issues/3804/events | https://github.com/huggingface/datasets/issues/3804 | 1,157,297,278 | I_kwDODunzps5E-vR- | 3,804 | Text builder with custom separator line boundaries | {

"avatar_url": "https://avatars.githubusercontent.com/u/18630848?v=4",

"events_url": "https://api.github.com/users/cronoik/events{/privacy}",

"followers_url": "https://api.github.com/users/cronoik/followers",

"following_url": "https://api.github.com/users/cronoik/following{/other_user}",

"gists_url": "https://api.github.com/users/cronoik/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/cronoik",

"id": 18630848,

"login": "cronoik",

"node_id": "MDQ6VXNlcjE4NjMwODQ4",

"organizations_url": "https://api.github.com/users/cronoik/orgs",

"received_events_url": "https://api.github.com/users/cronoik/received_events",

"repos_url": "https://api.github.com/users/cronoik/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/cronoik/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cronoik/subscriptions",

"type": "User",

"url": "https://api.github.com/users/cronoik"

} | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | open | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

] | null | [

"Gently pinging @lhoestq",

"Hi ! Interresting :)\r\n\r\nCould you give more details on what kind of separators you would like to use instead ?",

"In my case, I just want to use `\\n` but not `U+2028`.",

"Ok I see, maybe there can be a `sep` parameter to allow users to specify what line/paragraph separator they'd like to use",

"Related to:\r\n- #3729 \r\n- #3910",

"Thanks for requesting this enhancement. We have recently found a somehow related issue with another dataset:\r\n- #3704\r\n\r\nLet me make a PR proposal."

] | "2022-03-02T14:50:16" | "2022-03-16T15:53:59" | null | NONE | null | null | null | **Is your feature request related to a problem? Please describe.**

The current [Text](https://github.com/huggingface/datasets/blob/207be676bffe9d164740a41a883af6125edef135/src/datasets/packaged_modules/text/text.py#L23) builder implementation splits texts with `splitlines()` which splits the text on several line boundaries. Not all of them are always wanted.

**Describe the solution you'd like**

```python

if self.config.sample_by == "line":
    batch_idx = 0
    while True:
        batch = f.read(self.config.chunksize)
        if not batch:
            break
        batch += f.readline()  # finish current line
        if self.config.custom_newline is None:
            batch = batch.splitlines(keepends=self.config.keep_linebreaks)
        else:
            batch = batch.split(self.config.custom_newline)[:-1]
        pa_table = pa.Table.from_arrays([pa.array(batch)], schema=schema)
        # Uncomment for debugging (will print the Arrow table size and elements)
        # logger.warning(f"pa_table: {pa_table} num rows: {pa_table.num_rows}")
        # logger.warning('\n'.join(str(pa_table.slice(i, 1).to_pydict()) for i in range(pa_table.num_rows)))
        yield (file_idx, batch_idx), pa_table
        batch_idx += 1

```

**A clear and concise description of what you want to happen.**

Creating the dataset rows with a subset of the `splitlines()` line boundaries. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3804/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3804/timeline | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1643 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1643/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1643/comments | https://api.github.com/repos/huggingface/datasets/issues/1643/events | https://github.com/huggingface/datasets/issues/1643 | 775,280,046 | MDU6SXNzdWU3NzUyODAwNDY= | 1,643 | Dataset social_bias_frames 404 | {

"avatar_url": "https://avatars.githubusercontent.com/u/7501517?v=4",

"events_url": "https://api.github.com/users/atemate/events{/privacy}",

"followers_url": "https://api.github.com/users/atemate/followers",

"following_url": "https://api.github.com/users/atemate/following{/other_user}",

"gists_url": "https://api.github.com/users/atemate/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/atemate",

"id": 7501517,

"login": "atemate",

"node_id": "MDQ6VXNlcjc1MDE1MTc=",

"organizations_url": "https://api.github.com/users/atemate/orgs",

"received_events_url": "https://api.github.com/users/atemate/received_events",

"repos_url": "https://api.github.com/users/atemate/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/atemate/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/atemate/subscriptions",

"type": "User",

"url": "https://api.github.com/users/atemate"

} | [] | closed | false | null | [] | null | [

"I see, master is already fixed in https://github.com/huggingface/datasets/commit/9e058f098a0919efd03a136b9b9c3dec5076f626"

] | "2020-12-28T08:35:34" | "2020-12-28T08:38:07" | "2020-12-28T08:38:07" | NONE | null | null | null | ```

>>> from datasets import load_dataset

>>> dataset = load_dataset("social_bias_frames")

...

Downloading and preparing dataset social_bias_frames/default

...

~/.pyenv/versions/3.7.6/lib/python3.7/site-packages/datasets/utils/file_utils.py in get_from_cache(url, cache_dir, force_download, proxies, etag_timeout, resume_download, user_agent, local_files_only, use_etag)

484 )

485 elif response is not None and response.status_code == 404:

--> 486 raise FileNotFoundError("Couldn't find file at {}".format(url))

487 raise ConnectionError("Couldn't reach {}".format(url))

488

FileNotFoundError: Couldn't find file at https://homes.cs.washington.edu/~msap/social-bias-frames/SocialBiasFrames_v2.tgz

```

[Here](https://homes.cs.washington.edu/~msap/social-bias-frames/) we find button `Download data` with the correct URL for the data: https://homes.cs.washington.edu/~msap/social-bias-frames/SBIC.v2.tgz | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1643/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1643/timeline | null | completed | false |

https://api.github.com/repos/huggingface/datasets/issues/5655 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5655/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5655/comments | https://api.github.com/repos/huggingface/datasets/issues/5655/events | https://github.com/huggingface/datasets/pull/5655 | 1,634,030,017 | PR_kwDODunzps5MjWYy | 5,655 | Improve features decoding in to_iterable_dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009691 / 0.011353 (-0.001662) | 0.006160 / 0.011008 (-0.004848) | 0.127528 / 0.038508 (0.089020) | 0.034445 / 0.023109 (0.011335) | 0.391483 / 0.275898 (0.115585) | 0.425922 / 0.323480 (0.102442) | 0.006621 / 0.007986 (-0.001365) | 0.004550 / 0.004328 (0.000221) | 0.099134 / 0.004250 (0.094884) | 0.051089 / 0.037052 (0.014037) | 0.398675 / 0.258489 (0.140186) | 0.456740 / 0.293841 (0.162899) | 0.052279 / 0.128546 (-0.076267) | 0.020878 / 0.075646 (-0.054768) | 0.414954 / 0.419271 (-0.004317) | 0.061903 / 0.043533 (0.018370) | 0.393088 / 0.255139 (0.137949) | 0.410289 / 0.283200 (0.127089) | 0.101684 / 0.141683 (-0.039998) | 1.747102 / 1.452155 (0.294947) | 1.896976 / 1.492716 (0.404260) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.203193 / 0.018006 (0.185187) | 0.495011 / 0.000490 (0.494521) | 0.006290 / 0.000200 (0.006090) | 0.000098 / 0.000054 (0.000043) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.034840 / 0.037411 (-0.002571) | 0.122529 / 0.014526 (0.108003) | 0.133870 / 0.176557 (-0.042686) | 0.207771 / 0.737135 (-0.529364) | 0.141441 / 0.296338 (-0.154897) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.604190 / 0.215209 (0.388981) | 6.040295 / 2.077655 (3.962641) | 2.405703 / 1.504120 (0.901583) | 2.062767 / 1.541195 (0.521572) | 2.079313 / 1.468490 (0.610823) | 1.240107 / 4.584777 (-3.344670) | 5.316583 / 3.745712 (1.570871) | 3.104758 / 5.269862 (-2.165103) | 2.056489 / 4.565676 (-2.509187) | 0.149060 / 0.424275 (-0.275215) | 0.014467 / 0.007607 (0.006860) | 0.736882 / 0.226044 (0.510838) | 7.324142 / 2.268929 (5.055213) | 3.048752 / 55.444624 (-52.395872) | 2.385013 / 6.876477 (-4.491463) | 2.457478 / 2.142072 (0.315405) | 1.459276 / 4.805227 (-3.345951) | 0.253882 / 6.500664 
(-6.246782) | 0.076756 / 0.075469 (0.001287) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.499166 / 1.841788 (-0.342622) | 17.294165 / 8.074308 (9.219857) | 20.385668 / 10.191392 (10.194276) | 0.254633 / 0.680424 (-0.425791) | 0.026253 / 0.534201 (-0.507948) | 0.532928 / 0.579283 (-0.046355) | 0.606095 / 0.434364 (0.171731) | 0.615025 / 0.540337 (0.074687) | 0.728651 / 1.386936 (-0.658285) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009376 / 0.011353 (-0.001977) | 0.005981 / 
0.011008 (-0.005027) | 0.109898 / 0.038508 (0.071390) | 0.033746 / 0.023109 (0.010637) | 0.410226 / 0.275898 (0.134328) | 0.470606 / 0.323480 (0.147126) | 0.006706 / 0.007986 (-0.001279) | 0.004482 / 0.004328 (0.000153) | 0.092280 / 0.004250 (0.088030) | 0.047988 / 0.037052 (0.010935) | 0.430628 / 0.258489 (0.172139) | 0.480668 / 0.293841 (0.186827) | 0.052099 / 0.128546 (-0.076447) | 0.018743 / 0.075646 (-0.056903) | 0.112204 / 0.419271 (-0.307068) | 0.059838 / 0.043533 (0.016305) | 0.418230 / 0.255139 (0.163091) | 0.451568 / 0.283200 (0.168368) | 0.107026 / 0.141683 (-0.034657) | 1.708111 / 1.452155 (0.255956) | 1.839268 / 1.492716 (0.346552) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.229558 / 0.018006 (0.211552) | 0.488099 / 0.000490 (0.487609) | 0.004643 / 0.000200 (0.004443) | 0.000107 / 0.000054 (0.000053) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.030461 / 0.037411 (-0.006951) | 0.120993 / 0.014526 (0.106467) | 0.130874 / 0.176557 (-0.045682) | 0.193550 / 0.737135 (-0.543585) | 0.138164 / 0.296338 (-0.158174) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.635709 / 0.215209 (0.420500) | 6.225112 / 2.077655 (4.147457) | 2.639584 / 1.504120 (1.135465) | 2.254487 / 1.541195 (0.713293) | 2.280478 / 1.468490 (0.811988) | 1.205712 / 4.584777 (-3.379065) | 5.367845 / 3.745712 (1.622133) | 3.020207 / 5.269862 (-2.249655) | 2.001897 / 4.565676 (-2.563779) | 0.149582 / 0.424275 (-0.274693) | 0.014867 / 0.007607 (0.007260) | 0.759050 / 0.226044 (0.533006) | 7.692969 / 2.268929 (5.424041) | 3.274009 / 55.444624 (-52.170615) | 2.635529 / 6.876477 (-4.240948) | 2.672960 / 2.142072 (0.530888) | 1.426487 / 4.805227 (-3.378740) | 0.253368 / 6.500664 (-6.247296) | 0.078650 / 0.075469 (0.003181) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.620265 / 1.841788 (-0.221523) | 17.674168 / 8.074308 (9.599860) | 21.120528 / 10.191392 (10.929136) | 0.244205 / 0.680424 (-0.436218) | 0.029646 / 0.534201 (-0.504555) | 0.510948 / 0.579283 (-0.068335) | 0.586255 / 0.434364 (0.151891) | 0.589286 / 0.540337 (0.048949) | 0.736561 / 1.386936 (-0.650375) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007778 / 0.011353 (-0.003575) | 0.005432 / 0.011008 (-0.005577) | 0.098776 / 0.038508 (0.060268) | 0.035196 / 0.023109 (0.012087) | 0.305646 / 0.275898 (0.029748) | 0.342661 / 0.323480 (0.019181) | 0.006513 / 0.007986 (-0.001472) | 0.005897 / 0.004328 (0.001568) | 0.075797 / 0.004250 (0.071547) | 0.056060 / 0.037052 (0.019007) | 0.306645 / 0.258489 (0.048156) | 0.352447 / 0.293841 (0.058606) | 0.037304 / 0.128546 (-0.091242) | 0.012514 / 0.075646 (-0.063132) | 0.334949 / 0.419271 (-0.084323) | 0.051600 / 0.043533 (0.008067) | 0.302302 / 0.255139 (0.047163) | 0.322238 / 0.283200 (0.039038) | 0.106896 / 0.141683 (-0.034787) | 1.483163 / 1.452155 (0.031008) | 1.587483 / 1.492716 (0.094767) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.292318 / 0.018006 (0.274312) | 0.541541 / 0.000490 (0.541051) | 0.008342 / 0.000200 (0.008142) | 0.000339 / 0.000054 (0.000285) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028287 / 0.037411 (-0.009124) | 0.107775 / 0.014526 (0.093250) | 0.119112 / 0.176557 (-0.057445) | 0.174002 / 0.737135 (-0.563134) | 0.126531 / 0.296338 (-0.169808) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.401684 / 0.215209 (0.186475) | 4.024708 / 2.077655 (1.947053) | 1.812763 / 1.504120 (0.308643) | 1.629540 / 1.541195 (0.088345) | 1.731733 / 1.468490 (0.263243) | 0.711066 / 4.584777 (-3.873711) | 3.867499 / 3.745712 (0.121786) | 3.615968 / 5.269862 (-1.653893) | 1.876077 / 4.565676 (-2.689600) | 0.087003 / 0.424275 (-0.337272) | 0.012445 / 0.007607 (0.004838) | 0.499106 / 0.226044 (0.273061) | 4.975920 / 2.268929 (2.706992) | 2.279074 / 55.444624 (-53.165550) | 1.952311 / 6.876477 (-4.924166) | 2.167480 / 2.142072 (0.025408) | 0.855882 / 4.805227 (-3.949346) | 0.171378 / 6.500664 
(-6.329287) | 0.066731 / 0.075469 (-0.008738) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.184226 / 1.841788 (-0.657561) | 15.383396 / 8.074308 (7.309088) | 15.069783 / 10.191392 (4.878391) | 0.161489 / 0.680424 (-0.518935) | 0.017763 / 0.534201 (-0.516438) | 0.427103 / 0.579283 (-0.152180) | 0.434295 / 0.434364 (-0.000069) | 0.496848 / 0.540337 (-0.043489) | 0.592572 / 1.386936 (-0.794364) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.008014 / 0.011353 (-0.003339) | 0.005607 / 
0.011008 (-0.005401) | 0.076826 / 0.038508 (0.038318) | 0.035283 / 0.023109 (0.012174) | 0.347809 / 0.275898 (0.071911) | 0.382482 / 0.323480 (0.059003) | 0.006276 / 0.007986 (-0.001709) | 0.005978 / 0.004328 (0.001650) | 0.074938 / 0.004250 (0.070687) | 0.054323 / 0.037052 (0.017271) | 0.344027 / 0.258489 (0.085538) | 0.397623 / 0.293841 (0.103783) | 0.037851 / 0.128546 (-0.090695) | 0.012649 / 0.075646 (-0.062997) | 0.086169 / 0.419271 (-0.333103) | 0.051510 / 0.043533 (0.007977) | 0.341112 / 0.255139 (0.085973) | 0.357957 / 0.283200 (0.074757) | 0.110949 / 0.141683 (-0.030734) | 1.479573 / 1.452155 (0.027419) | 1.578572 / 1.492716 (0.085855) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.310678 / 0.018006 (0.292672) | 0.525504 / 0.000490 (0.525015) | 0.000447 / 0.000200 (0.000247) | 0.000060 / 0.000054 (0.000006) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.031262 / 0.037411 (-0.006149) | 0.113801 / 0.014526 (0.099275) | 0.124967 / 0.176557 (-0.051590) | 0.175226 / 0.737135 (-0.561909) | 0.129377 / 0.296338 (-0.166962) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.420672 / 0.215209 (0.205463) | 4.181337 / 2.077655 (2.103682) | 1.985524 / 1.504120 (0.481404) | 1.803468 / 1.541195 (0.262273) | 1.952915 / 1.468490 (0.484425) | 0.710928 / 4.584777 (-3.873849) | 3.886245 / 3.745712 (0.140533) | 3.737837 / 5.269862 (-1.532024) | 1.806859 / 4.565676 (-2.758818) | 0.088461 / 0.424275 (-0.335814) | 0.013125 / 0.007607 (0.005518) | 0.522410 / 0.226044 (0.296365) | 5.232591 / 2.268929 (2.963663) | 2.451188 / 55.444624 (-52.993437) | 2.127725 / 6.876477 (-4.748751) | 2.232859 / 2.142072 (0.090786) | 0.854257 / 4.805227 (-3.950970) | 0.171004 / 6.500664 (-6.329661) | 0.066724 / 0.075469 (-0.008746) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.257700 / 1.841788 (-0.584088) | 15.738605 / 8.074308 (7.664297) | 15.021698 / 10.191392 (4.830306) | 0.147422 / 0.680424 (-0.533002) | 0.017928 / 0.534201 (-0.516273) | 0.428121 / 0.579283 (-0.151162) | 0.432056 / 0.434364 (-0.002308) | 0.498318 / 0.540337 (-0.042020) | 0.591040 / 1.386936 (-0.795896) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007014 / 0.011353 (-0.004339) | 0.004792 / 0.011008 (-0.006216) | 0.099822 / 0.038508 (0.061314) | 0.029333 / 0.023109 (0.006224) | 0.306453 / 0.275898 (0.030555) | 0.344598 / 0.323480 (0.021118) | 0.005121 / 0.007986 (-0.002865) | 0.004850 / 0.004328 (0.000522) | 0.076668 / 0.004250 (0.072417) | 0.039980 / 0.037052 (0.002927) | 0.312276 / 0.258489 (0.053787) | 0.354722 / 0.293841 (0.060881) | 0.031653 / 0.128546 (-0.096893) | 0.011743 / 0.075646 (-0.063903) | 0.322998 / 0.419271 (-0.096274) | 0.042813 / 0.043533 (-0.000720) | 0.308855 / 0.255139 (0.053716) | 0.332650 / 0.283200 (0.049451) | 0.087155 / 0.141683 (-0.054528) | 1.454946 / 1.452155 (0.002791) | 1.550589 / 1.492716 (0.057873) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.192921 / 0.018006 (0.174914) | 0.411155 / 0.000490 (0.410666) | 0.004779 / 0.000200 (0.004579) | 0.000071 / 0.000054 (0.000017) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.024462 / 0.037411 (-0.012950) | 0.100320 / 0.014526 (0.085794) | 0.105509 / 0.176557 (-0.071048) | 0.168533 / 0.737135 (-0.568602) | 0.110018 / 0.296338 (-0.186321) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.415025 / 0.215209 (0.199816) | 4.144583 / 2.077655 (2.066928) | 1.871627 / 1.504120 (0.367507) | 1.671638 / 1.541195 (0.130443) | 1.734458 / 1.468490 (0.265968) | 0.693435 / 4.584777 (-3.891342) | 3.487999 / 3.745712 (-0.257713) | 3.196553 / 5.269862 (-2.073308) | 1.628499 / 4.565676 (-2.937178) | 0.082999 / 0.424275 (-0.341276) | 0.012822 / 0.007607 (0.005215) | 0.514904 / 0.226044 (0.288860) | 5.157525 / 2.268929 (2.888596) | 2.313093 / 55.444624 (-53.131531) | 1.968335 / 6.876477 (-4.908142) | 2.083462 / 2.142072 (-0.058610) | 0.804485 / 4.805227 (-4.000742) | 0.152290 / 6.500664 
(-6.348374) | 0.066813 / 0.075469 (-0.008656) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.210370 / 1.841788 (-0.631418) | 14.261779 / 8.074308 (6.187471) | 14.268121 / 10.191392 (4.076729) | 0.149216 / 0.680424 (-0.531207) | 0.016529 / 0.534201 (-0.517672) | 0.378814 / 0.579283 (-0.200469) | 0.386304 / 0.434364 (-0.048060) | 0.439653 / 0.540337 (-0.100684) | 0.523658 / 1.386936 (-0.863278) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006979 / 0.011353 (-0.004374) | 0.004718 / 
0.011008 (-0.006290) | 0.077023 / 0.038508 (0.038514) | 0.029080 / 0.023109 (0.005971) | 0.343145 / 0.275898 (0.067247) | 0.380633 / 0.323480 (0.057153) | 0.006057 / 0.007986 (-0.001928) | 0.003541 / 0.004328 (-0.000788) | 0.075773 / 0.004250 (0.071523) | 0.039112 / 0.037052 (0.002060) | 0.342355 / 0.258489 (0.083866) | 0.386002 / 0.293841 (0.092161) | 0.033238 / 0.128546 (-0.095308) | 0.011696 / 0.075646 (-0.063950) | 0.086178 / 0.419271 (-0.333093) | 0.045219 / 0.043533 (0.001686) | 0.360710 / 0.255139 (0.105571) | 0.367490 / 0.283200 (0.084290) | 0.093041 / 0.141683 (-0.048642) | 1.523670 / 1.452155 (0.071516) | 1.595280 / 1.492716 (0.102564) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.235888 / 0.018006 (0.217882) | 0.410205 / 0.000490 (0.409715) | 0.000405 / 0.000200 (0.000205) | 0.000059 / 0.000054 (0.000005) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.025752 / 0.037411 (-0.011659) | 0.103343 / 0.014526 (0.088818) | 0.108722 / 0.176557 (-0.067834) | 0.159241 / 0.737135 (-0.577894) | 0.113684 / 0.296338 (-0.182654) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.441809 / 0.215209 (0.226600) | 4.410893 / 2.077655 (2.333238) | 2.104061 / 1.504120 (0.599941) | 1.854016 / 1.541195 (0.312821) | 1.947100 / 1.468490 (0.478610) | 0.697682 / 4.584777 (-3.887095) | 3.467513 / 3.745712 (-0.278199) | 1.911603 / 5.269862 (-3.358258) | 1.187479 / 4.565676 (-3.378197) | 0.083153 / 0.424275 (-0.341122) | 0.012651 / 0.007607 (0.005044) | 0.542081 / 0.226044 (0.316036) | 5.444622 / 2.268929 (3.175693) | 2.524236 / 55.444624 (-52.920388) | 2.190463 / 6.876477 (-4.686014) | 2.265764 / 2.142072 (0.123691) | 0.810778 / 4.805227 (-3.994450) | 0.152459 / 6.500664 (-6.348205) | 0.067815 / 0.075469 (-0.007654) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.334388 / 1.841788 (-0.507400) | 14.640459 / 8.074308 (6.566151) | 14.714874 / 10.191392 (4.523482) | 0.153479 / 0.680424 (-0.526945) | 0.016709 / 0.534201 (-0.517492) | 0.379427 / 0.579283 (-0.199856) | 0.391602 / 0.434364 (-0.042762) | 0.438297 / 0.540337 (-0.102041) | 0.524170 / 1.386936 (-0.862766) |\n\n</details>\n</details>\n\n\n"

] | "2023-03-21T14:18:09" | "2023-03-23T13:19:27" | "2023-03-23T13:12:25" | MEMBER | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/5655.diff",

"html_url": "https://github.com/huggingface/datasets/pull/5655",

"merged_at": "2023-03-23T13:12:25Z",

"patch_url": "https://github.com/huggingface/datasets/pull/5655.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/5655"

} | Following discussion at https://github.com/huggingface/datasets/pull/5589

Right now `to_iterable_dataset` on images/audio hurts iterable dataset performance a lot (e.g. x4 slower because it encodes+decodes images/audios unnecessarily).

I fixed it by providing a generator that yields undecoded examples | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5655/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5655/timeline | null | null | true |
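
The PR description above (#5655) says `to_iterable_dataset` was slow on images/audio because examples were encoded and decoded unnecessarily, and that the fix was a generator that yields undecoded examples. The following is a minimal sketch of that idea only, not the code from the PR: `decode`, `encode`, `iter_with_round_trip`, and `iter_undecoded` are hypothetical stand-ins, and the byte strings are placeholder data.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical stand-in for a stored (still encoded) example.
Example = Dict[str, bytes]

# Counts how often the (assumed expensive) codec functions run.
call_counts = {"decode": 0, "encode": 0}


def decode(example: Example) -> Dict[str, str]:
    """Stand-in for decoding an image/audio payload."""
    call_counts["decode"] += 1
    return {"image": example["image"].decode("utf-8")}


def encode(example: Dict[str, str]) -> Example:
    """Stand-in for re-encoding a decoded payload."""
    call_counts["encode"] += 1
    return {"image": example["image"].encode("utf-8")}


def iter_with_round_trip(stored: Iterable[Example]) -> Iterator[Example]:
    """Slow path: every example is decoded and then encoded again."""
    for example in stored:
        yield encode(decode(example))


def iter_undecoded(stored: Iterable[Example]) -> Iterator[Example]:
    """Fast path: yield the stored examples as they are, without a round trip."""
    for example in stored:
        yield example


stored = [{"image": b"pixels-0"}, {"image": b"pixels-1"}]

slow = list(iter_with_round_trip(stored))
round_trip_counts = dict(call_counts)

call_counts.update(decode=0, encode=0)
fast = list(iter_undecoded(stored))
undecoded_counts = dict(call_counts)
```

Both iterators produce the same examples, but only the first one pays for a decode and an encode per example, which is the kind of overhead the PR description refers to.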

https://api.github.com/repos/huggingface/datasets/issues/5276 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5276/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5276/comments | https://api.github.com/repos/huggingface/datasets/issues/5276/events | https://github.com/huggingface/datasets/issues/5276 | 1,459,363,442 | I_kwDODunzps5W_B5y | 5,276 | Bug in downloading common_voice data and snall chunk of it to one's own hub | {

"avatar_url": "https://avatars.githubusercontent.com/u/48530104?v=4",

"events_url": "https://api.github.com/users/capsabogdan/events{/privacy}",

"followers_url": "https://api.github.com/users/capsabogdan/followers",

"following_url": "https://api.github.com/users/capsabogdan/following{/other_user}",

"gists_url": "https://api.github.com/users/capsabogdan/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/capsabogdan",

"id": 48530104,

"login": "capsabogdan",

"node_id": "MDQ6VXNlcjQ4NTMwMTA0",

"organizations_url": "https://api.github.com/users/capsabogdan/orgs",

"received_events_url": "https://api.github.com/users/capsabogdan/received_events",

"repos_url": "https://api.github.com/users/capsabogdan/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/capsabogdan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/capsabogdan/subscriptions",

"type": "User",

"url": "https://api.github.com/users/capsabogdan"

} | [] | closed | false | null | [] | null | [

"Sounds like one of the file is not a valid one, can you make sure you uploaded valid mp3 files ?",

"Well I just sharded the original commonVoice dataset and pushed a small chunk of it in a private rep\n\nWhat did go wrong?\n\nHolen Sie sich Outlook für iOS<https://aka.ms/o0ukef>\n________________________________\nVon: Quentin Lhoest ***@***.***>\nGesendet: Tuesday, November 22, 2022 3:03:40 PM\nAn: huggingface/datasets ***@***.***>\nCc: capsabogdan ***@***.***>; Author ***@***.***>\nBetreff: Re: [huggingface/datasets] Bug in downloading common_voice data and snall chunk of it to one's own hub (Issue #5276)\n\n\nSounds like one of the file is not a valid one, can you make sure you uploaded valid mp3 files ?\n\n—\nReply to this email directly, view it on GitHub<https://github.com/huggingface/datasets/issues/5276#issuecomment-1323727434>, or unsubscribe<https://github.com/notifications/unsubscribe-auth/ALSIFOAPAL2V4TBJTSPMAULWJTHDZANCNFSM6AAAAAASHQJ63U>.\nYou are receiving this because you authored the thread.Message ID: ***@***.***>\n",

"It should be all good then !\r\nCould you share a link to your repository for me to investigate what went wrong ?",

"https://huggingface.co/datasets/DTU54DL/common-voice-test16k\n\nAm Di., 22. Nov. 2022 um 16:43 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> It should be all good then !\n> Could you share a link to your repository for me to investigate what went\n> wrong ?\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1323876682>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOEUJRZWXAM7DYA5VJDWJTS3NANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you authored the thread.Message ID:\n> ***@***.***>\n>\n",

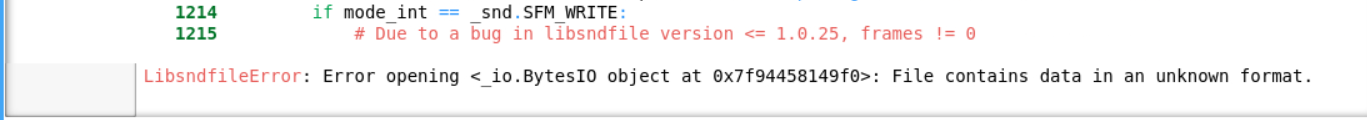

"I see ! This is a bug with MP3 files.\r\n\r\nWhen we store audio data in parquet, we store the bytes and the file name. From the file name extension we know if it's a WAV, an MP3 or else. But here it looks like the paths are all None.\r\n\r\nIt looks like it comes from here:\r\n\r\nhttps://github.com/huggingface/datasets/blob/7feeb5648a63b6135a8259dedc3b1e19185ee4c7/src/datasets/features/audio.py#L212\r\n\r\nCc @polinaeterna maybe we should simply put the file name instead of None values ?",

"@lhoestq I remember we wanted to avoid storing redundant data but maybe it's not that crucial indeed to store one more string value. \r\nOr we can store paths only for mp3s, considering that for other formats we don't have such a problem with reading from bytes without format specified. ",

"It doesn't cost much to always store the file name IMO",

"thanks for the help!\n\ncan I do anything on my side? we are doing a DL project and we need the\ndata really quick.\n\nthanks\nbogdan\n\n> Message ID: ***@***.***>\n>\n",

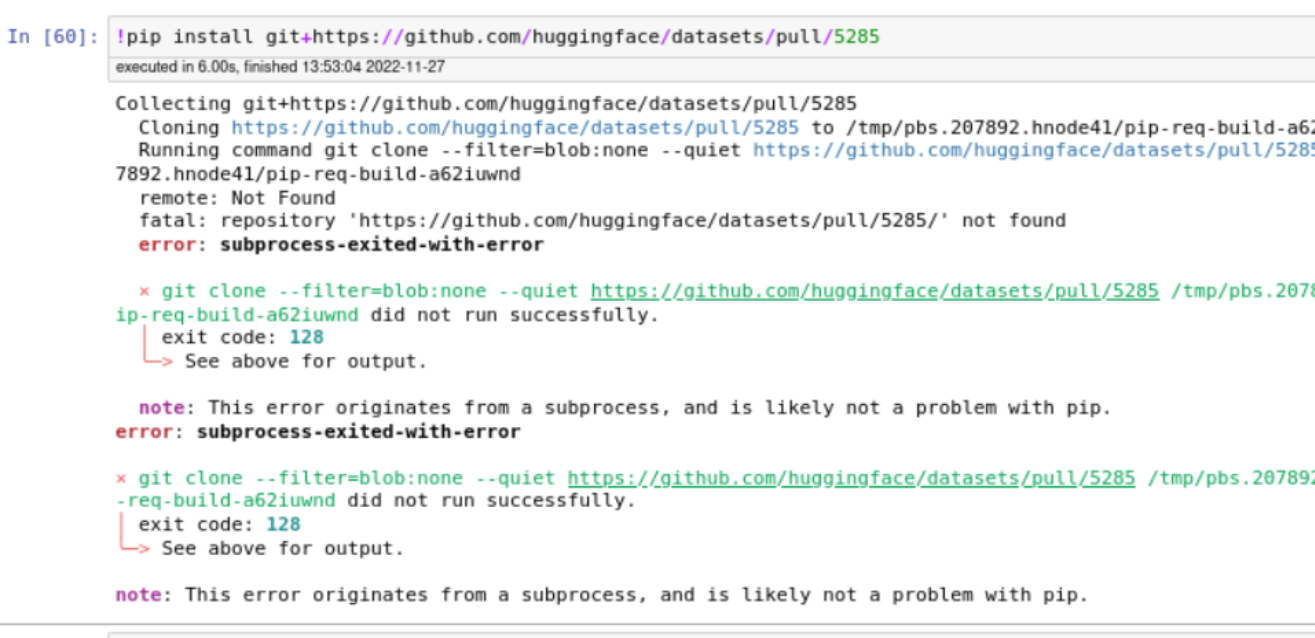

"I opened a pull requests here: https://github.com/huggingface/datasets/pull/5285, we'll do a new release soon with this fix.\r\n\r\nOtherwise if you're really in a hurry you can install `datasets` from this PR",

"[image: image.png]\n\n> Message ID: ***@***.***>\n>\n",

"any idea on what's going wrong here?\n\nthanks\n\nAm So., 27. Nov. 2022 um 13:53 Uhr schrieb Bogdan Capsa <\n***@***.***>:\n\n> [image: image.png]\n>\n>> Message ID: ***@***.***>\n>>\n>\n",

"hi @capsabogdan! \r\ncould you please share more specifically what problem do you have now?",

"I have attached this screenshot above . can u pls help? So can not pip from pull request\r\n\r\n\r\n",

"The pull request has been merged on `main`.\r\nYou can install `datasets` from `main` using\r\n```\r\npip install git+https://github.com/huggingface/datasets.git\r\n```",

"I've tried to load this dataset DTU54DL/common-voice-test16k, but am\ngetting the same error.\n\nSo the bug fix will fix only if I upload a new dataset, or also loading\npreviously uploaded datasets?\n\nthanks\n\nAm Mo., 28. Nov. 2022 um 19:51 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> The pull request has been merged on main.\n> You can install datasets from main using\n>\n> pip install git+https://github.com/huggingface/datasets.git\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1329587334>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOCNYYIGHM2EX3ZIO6DWKT5MXANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you were mentioned.Message ID:\n> ***@***.***>\n>\n",

"> So the bug fix will fix only if I upload a new dataset, or also loading\r\npreviously uploaded datasets?\r\n\r\nYou have to reupload the dataset, sorry for the inconvenience",

"thank you so much for the help! works like a charm!\n\nAm Di., 29. Nov. 2022 um 12:15 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> So the bug fix will fix only if I upload a new dataset, or also loading\n> previously uploaded datasets?\n>\n> You have to reupload the dataset, sorry for the inconvenience\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1330468393>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOBKEFZO57BAKY4IGW3WKXQUZANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you were mentioned.Message ID:\n> ***@***.***>\n>\n"

] | "2022-11-22T08:17:53" | "2023-07-21T14:33:10" | "2023-07-21T14:33:10" | NONE | null | null | null | ### Describe the bug

I'm trying to load the common voice dataset. Currently there is no implementation to download just part of the data, and I need just one part of it, without downloading the entire dataset.

Help please?

### Steps to reproduce the bug

So here is what I have done:

1. Download common_voice data

2. Trim part of it and publish it to my own repo.

3. Download data from my own repo, but am getting this error.

### Expected behavior

There shouldn't be an error in downloading part of the data and publishing it to one's own repo

### Environment info

common_voice 11 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5276/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5276/timeline | null | completed | false |
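
The thread above (#5276) explains that audio stored in parquet keeps the bytes and the file name, that the file name extension tells the reader whether the data is WAV, MP3 or something else, and that the bug came from the stored paths all being None. A minimal sketch of that failure mode, using only the standard library: `infer_audio_format` and `make_record` are hypothetical helpers written for illustration and are not functions from `datasets`, and the payload bytes and path are made up.

```python
import os
from typing import Optional


def infer_audio_format(path: Optional[str]) -> Optional[str]:
    """Return the lowercase extension without the dot, or None if it cannot be inferred."""
    if not path:
        return None
    extension = os.path.splitext(path)[1]
    return extension[1:].lower() or None


def make_record(audio_bytes: bytes, path: Optional[str], keep_file_name: bool) -> dict:
    """Build a {bytes, path} record (hypothetical helper).

    With keep_file_name=False the path is dropped, which mirrors the
    "paths are all None" situation described in the thread.
    """
    stored_path = os.path.basename(path) if (path and keep_file_name) else None
    return {"bytes": audio_bytes, "path": stored_path}


# Placeholder payload and path, for illustration only.
payload = b"\xff\xfb fake mp3 payload"
record_without_name = make_record(payload, "clips/sample_0001.mp3", keep_file_name=False)
record_with_name = make_record(payload, "clips/sample_0001.mp3", keep_file_name=True)

format_without_name = infer_audio_format(record_without_name["path"])
format_with_name = infer_audio_format(record_with_name["path"])
```

With the file name kept, the format can be recovered from the extension; with a None path, the reader has only raw bytes and no format hint, which matches the maintainers' suggestion to always store the file name.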

https://api.github.com/repos/huggingface/datasets/issues/6023 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6023/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6023/comments | https://api.github.com/repos/huggingface/datasets/issues/6023/events | https://github.com/huggingface/datasets/pull/6023 | 1,801,272,420 | PR_kwDODunzps5VU7EG | 6,023 | Fix `ClassLabel` min max check for `None` values | {

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

} | [] | closed | false | null | [] | null | [

"_The documentation is not available anymore as the PR was closed or merged._",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.007108 / 0.011353 (-0.004245) | 0.004446 / 0.011008 (-0.006562) | 0.084013 / 0.038508 (0.045505) | 0.084271 / 0.023109 (0.061162) | 0.324496 / 0.275898 (0.048598) | 0.347783 / 0.323480 (0.024303) | 0.004382 / 0.007986 (-0.003604) | 0.005200 / 0.004328 (0.000872) | 0.065117 / 0.004250 (0.060866) | 0.063368 / 0.037052 (0.026316) | 0.328731 / 0.258489 (0.070242) | 0.356676 / 0.293841 (0.062835) | 0.031155 / 0.128546 (-0.097392) | 0.008672 / 0.075646 (-0.066975) | 0.287573 / 0.419271 (-0.131698) | 0.053692 / 0.043533 (0.010160) | 0.308796 / 0.255139 (0.053657) | 0.330521 / 0.283200 (0.047321) | 0.025010 / 0.141683 (-0.116672) | 1.498968 / 1.452155 (0.046813) | 1.552096 / 1.492716 (0.059380) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.263580 / 0.018006 (0.245574) | 0.559765 / 0.000490 (0.559275) | 0.003450 / 0.000200 (0.003250) | 0.000079 / 0.000054 (0.000024) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.029403 / 0.037411 (-0.008008) | 0.088154 / 0.014526 (0.073628) | 0.100372 / 0.176557 (-0.076185) | 0.157777 / 0.737135 (-0.579359) | 0.102273 / 0.296338 (-0.194066) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.387027 / 0.215209 (0.171818) | 3.854260 / 2.077655 (1.776605) | 1.875159 / 1.504120 (0.371039) | 1.703734 / 1.541195 (0.162539) | 1.814305 / 1.468490 (0.345815) | 0.482524 / 4.584777 (-4.102253) | 3.463602 / 3.745712 (-0.282110) | 4.004766 / 5.269862 (-1.265095) | 2.406751 / 4.565676 (-2.158925) | 0.057069 / 0.424275 (-0.367206) | 0.007448 / 0.007607 (-0.000159) | 0.465801 / 0.226044 (0.239757) | 4.636700 / 2.268929 (2.367771) | 2.329475 / 55.444624 (-53.115150) | 1.998330 / 6.876477 (-4.878146) | 2.264617 / 2.142072 (0.122544) | 0.577998 / 4.805227 (-4.227230) | 0.130846 / 6.500664 
(-6.369818) | 0.059713 / 0.075469 (-0.015756) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.275931 / 1.841788 (-0.565857) | 20.396288 / 8.074308 (12.321980) | 13.875242 / 10.191392 (3.683850) | 0.164367 / 0.680424 (-0.516057) | 0.018573 / 0.534201 (-0.515628) | 0.397516 / 0.579283 (-0.181767) | 0.398977 / 0.434364 (-0.035387) | 0.462386 / 0.540337 (-0.077951) | 0.610129 / 1.386936 (-0.776807) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006912 / 0.011353 (-0.004441) | 0.004212 / 
0.011008 (-0.006797) | 0.065707 / 0.038508 (0.027199) | 0.090435 / 0.023109 (0.067325) | 0.380539 / 0.275898 (0.104641) | 0.412692 / 0.323480 (0.089212) | 0.005545 / 0.007986 (-0.002441) | 0.003657 / 0.004328 (-0.000672) | 0.065380 / 0.004250 (0.061130) | 0.062901 / 0.037052 (0.025848) | 0.385931 / 0.258489 (0.127442) | 0.416272 / 0.293841 (0.122431) | 0.031974 / 0.128546 (-0.096572) | 0.008783 / 0.075646 (-0.066863) | 0.071424 / 0.419271 (-0.347847) | 0.049454 / 0.043533 (0.005921) | 0.374231 / 0.255139 (0.119092) | 0.386530 / 0.283200 (0.103331) | 0.025404 / 0.141683 (-0.116279) | 1.469869 / 1.452155 (0.017715) | 1.548629 / 1.492716 (0.055913) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.218413 / 0.018006 (0.200406) | 0.573863 / 0.000490 (0.573373) | 0.004156 / 0.000200 (0.003956) | 0.000097 / 0.000054 (0.000043) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.032610 / 0.037411 (-0.004801) | 0.088270 / 0.014526 (0.073744) | 0.106821 / 0.176557 (-0.069735) | 0.164498 / 0.737135 (-0.572638) | 0.106881 / 0.296338 (-0.189457) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.433730 / 0.215209 (0.218520) | 4.323902 / 2.077655 (2.246247) | 2.308607 / 1.504120 (0.804487) | 2.138888 / 1.541195 (0.597693) | 2.246760 / 1.468490 (0.778269) | 0.486863 / 4.584777 (-4.097914) | 3.561826 / 3.745712 (-0.183886) | 5.592685 / 5.269862 (0.322824) | 3.318560 / 4.565676 (-1.247116) | 0.057348 / 0.424275 (-0.366927) | 0.007434 / 0.007607 (-0.000174) | 0.506767 / 0.226044 (0.280723) | 5.083097 / 2.268929 (2.814168) | 2.780618 / 55.444624 (-52.664006) | 2.456924 / 6.876477 (-4.419553) | 2.564184 / 2.142072 (0.422112) | 0.580693 / 4.805227 (-4.224534) | 0.134471 / 6.500664 (-6.366194) | 0.062883 / 0.075469 (-0.012586) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.346618 / 1.841788 (-0.495169) | 20.547998 / 8.074308 (12.473690) | 14.404159 / 10.191392 (4.212767) | 0.176612 / 0.680424 (-0.503812) | 0.018372 / 0.534201 (-0.515829) | 0.395636 / 0.579283 (-0.183647) | 0.410661 / 0.434364 (-0.023703) | 0.468782 / 0.540337 (-0.071555) | 0.637476 / 1.386936 (-0.749460) |\n\n</details>\n</details>\n\n\n",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.009896 / 0.011353 (-0.001457) | 0.004658 / 0.011008 (-0.006351) | 0.101185 / 0.038508 (0.062677) | 0.075480 / 0.023109 (0.052371) | 0.410620 / 0.275898 (0.134722) | 0.470639 / 0.323480 (0.147159) | 0.007042 / 0.007986 (-0.000943) | 0.003909 / 0.004328 (-0.000419) | 0.079676 / 0.004250 (0.075425) | 0.066921 / 0.037052 (0.029869) | 0.423624 / 0.258489 (0.165135) | 0.473008 / 0.293841 (0.179167) | 0.048492 / 0.128546 (-0.080054) | 0.012833 / 0.075646 (-0.062813) | 0.335286 / 0.419271 (-0.083985) | 0.083506 / 0.043533 (0.039973) | 0.401918 / 0.255139 (0.146779) | 0.467975 / 0.283200 (0.184775) | 0.050025 / 0.141683 (-0.091658) | 1.679392 / 1.452155 (0.227237) | 1.852812 / 1.492716 (0.360095) 
|\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.248067 / 0.018006 (0.230061) | 0.584818 / 0.000490 (0.584328) | 0.021558 / 0.000200 (0.021358) | 0.000104 / 0.000054 (0.000050) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.028572 / 0.037411 (-0.008839) | 0.097212 / 0.014526 (0.082686) | 0.121675 / 0.176557 (-0.054881) | 0.186597 / 0.737135 (-0.550538) | 0.122285 / 0.296338 (-0.174053) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.586279 / 0.215209 (0.371070) | 5.634402 / 2.077655 (3.556747) | 2.560648 / 1.504120 (1.056528) | 2.288796 / 1.541195 (0.747601) | 2.402580 / 1.468490 (0.934090) | 0.801453 / 4.584777 (-3.783324) | 5.036654 / 3.745712 (1.290942) | 8.319972 / 5.269862 (3.050110) | 4.665620 / 4.565676 (0.099944) | 0.107292 / 0.424275 (-0.316983) | 0.009206 / 0.007607 (0.001599) | 0.766505 / 0.226044 (0.540461) | 7.333784 / 2.268929 (5.064856) | 3.601875 / 55.444624 (-51.842749) | 2.886388 / 6.876477 (-3.990089) | 3.231797 / 2.142072 (1.089725) | 1.179509 / 4.805227 (-3.625718) | 0.224656 / 6.500664 
(-6.276008) | 0.084749 / 0.075469 (0.009280) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.772345 / 1.841788 (-0.069443) | 24.138788 / 8.074308 (16.064480) | 20.712416 / 10.191392 (10.521024) | 0.254655 / 0.680424 (-0.425769) | 0.028858 / 0.534201 (-0.505343) | 0.499314 / 0.579283 (-0.079969) | 0.605797 / 0.434364 (0.171433) | 0.567628 / 0.540337 (0.027290) | 0.752288 / 1.386936 (-0.634648) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.010134 / 0.011353 (-0.001219) | 0.004630 / 
0.011008 (-0.006378) | 0.082282 / 0.038508 (0.043774) | 0.081722 / 0.023109 (0.058613) | 0.465018 / 0.275898 (0.189120) | 0.516392 / 0.323480 (0.192912) | 0.006618 / 0.007986 (-0.001368) | 0.004310 / 0.004328 (-0.000018) | 0.078990 / 0.004250 (0.074739) | 0.077729 / 0.037052 (0.040677) | 0.464892 / 0.258489 (0.206403) | 0.510551 / 0.293841 (0.216710) | 0.050750 / 0.128546 (-0.077796) | 0.014402 / 0.075646 (-0.061244) | 0.092587 / 0.419271 (-0.326685) | 0.074769 / 0.043533 (0.031237) | 0.468591 / 0.255139 (0.213452) | 0.508138 / 0.283200 (0.224938) | 0.047774 / 0.141683 (-0.093909) | 1.798354 / 1.452155 (0.346199) | 1.851431 / 1.492716 (0.358714) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.282528 / 0.018006 (0.264522) | 0.588286 / 0.000490 (0.587797) | 0.004892 / 0.000200 (0.004692) | 0.000136 / 0.000054 (0.000082) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.037048 / 0.037411 (-0.000364) | 0.101513 / 0.014526 (0.086987) | 0.133238 / 0.176557 (-0.043319) | 0.234799 / 0.737135 (-0.502336) | 0.120636 / 0.296338 (-0.175703) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.615377 / 0.215209 (0.400168) | 6.225717 / 2.077655 (4.148062) | 2.974137 / 1.504120 (1.470018) | 2.642168 / 1.541195 (1.100973) | 2.706051 / 1.468490 (1.237561) | 0.837171 / 4.584777 (-3.747606) | 5.143368 / 3.745712 (1.397656) | 4.560241 / 5.269862 (-0.709621) | 2.838375 / 4.565676 (-1.727301) | 0.092505 / 0.424275 (-0.331770) | 0.008962 / 0.007607 (0.001355) | 0.726361 / 0.226044 (0.500317) | 7.323998 / 2.268929 (5.055070) | 3.650531 / 55.444624 (-51.794094) | 2.960886 / 6.876477 (-3.915591) | 3.003889 / 2.142072 (0.861816) | 0.979264 / 4.805227 (-3.825963) | 0.204531 / 6.500664 (-6.296133) | 0.078285 / 0.075469 (0.002816) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.774225 / 1.841788 (-0.067563) | 26.399536 / 8.074308 (18.325228) | 22.312890 / 10.191392 (12.121498) | 0.244651 / 0.680424 (-0.435773) | 0.026950 / 0.534201 (-0.507251) | 0.493037 / 0.579283 (-0.086246) | 0.620399 / 0.434364 (0.186036) | 0.748985 / 0.540337 (0.208648) | 0.799766 / 1.386936 (-0.587170) |\n\n</details>\n</details>\n\n\n"

] | "2023-07-12T15:46:12" | "2023-07-12T16:29:26" | "2023-07-12T16:18:04" | CONTRIBUTOR | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/6023.diff",

"html_url": "https://github.com/huggingface/datasets/pull/6023",

"merged_at": "2023-07-12T16:18:04Z",

"patch_url": "https://github.com/huggingface/datasets/pull/6023.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/6023"

} | Fix #6022 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6023/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6023/timeline | null | null | true |