license: mit

📃 Paper • 🌐 Demo • 🤗 LongLLaVA

🌈 Update

- [2024.09.05] The LongLLaVA repo is published! 🎉 The code will

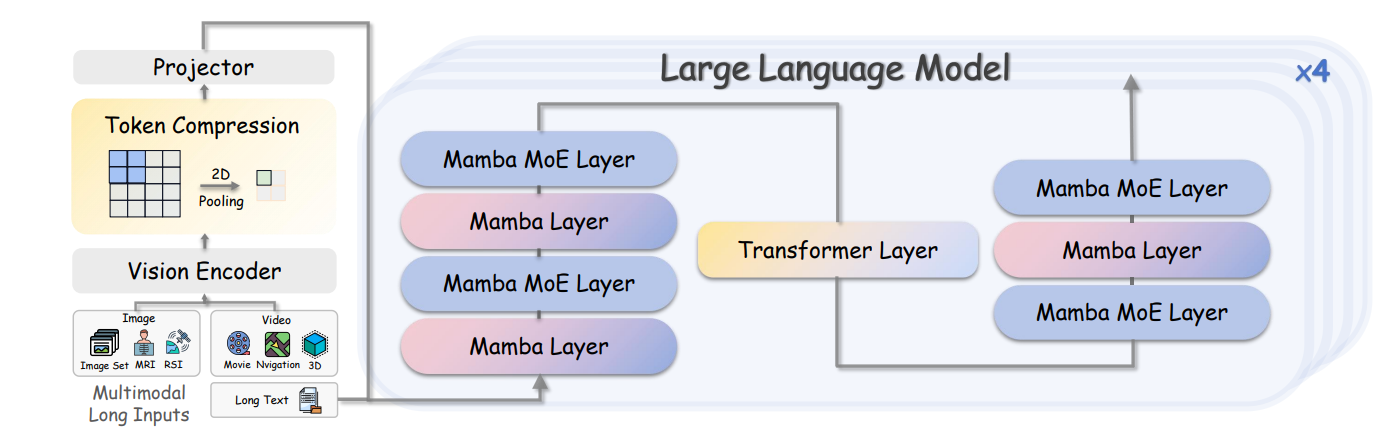

Architecture

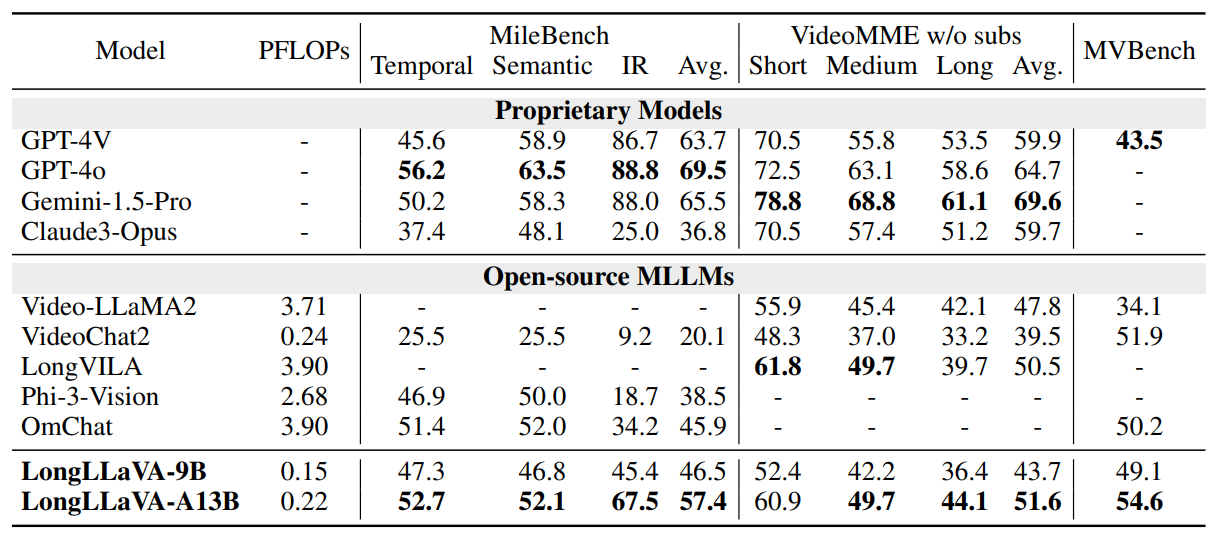

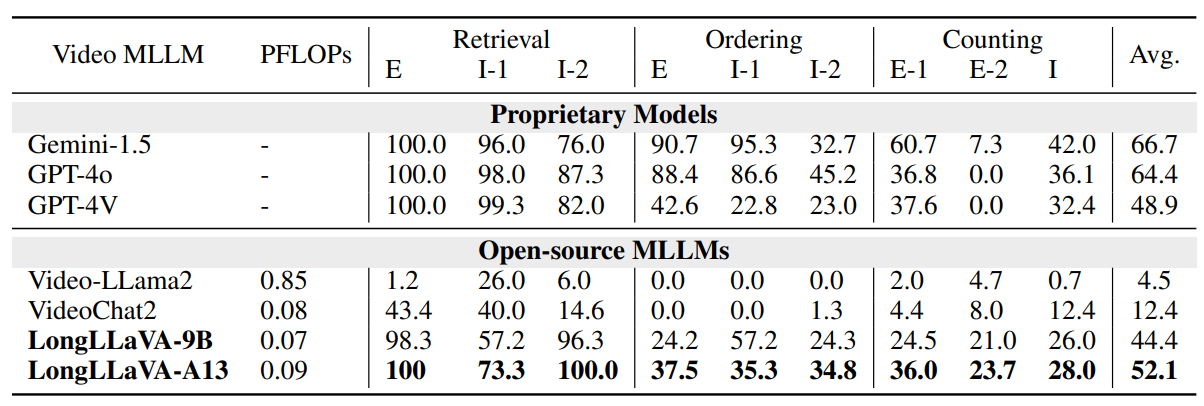

Results

Results reproduction

Data Download and Construction

Coming Soon~

Training

Coming Soon~

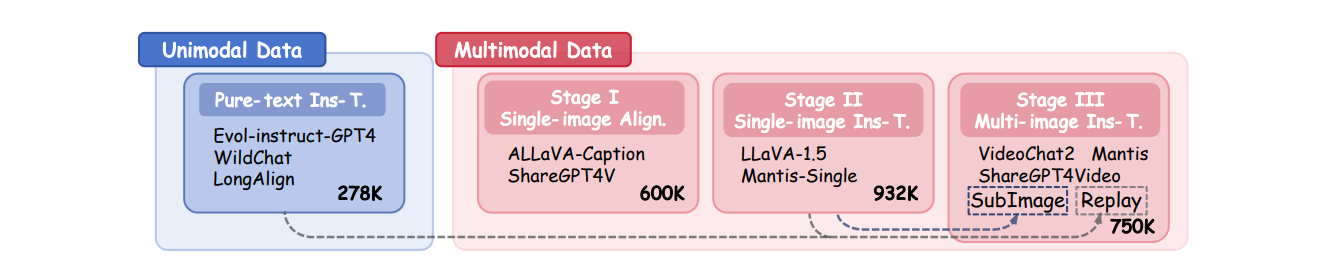

- Stage I: Single-image Alignment: `bash Pretrain.sh`

- Stage II: Single-image Instruction-tuning: `bash SingleImageSFT.sh`

- Stage III: Multi-image Instruction-tuning: `bash MultiImageSFT.sh`
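The three stages are meant to run in order, each building on the previous one. A minimal sketch of a driver that chains them is shown below, assuming the stage scripts sit in the current directory and signal failure through a non-zero exit status. The `run_stages` helper is hypothetical and is not part of the LongLLaVA repo; only the script names come from this README.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not shipped with LongLLaVA): run stage scripts in the
# given order and stop at the first missing or failing one, so a later stage
# never starts on top of an incomplete earlier stage.
run_stages() {
  local stage
  for stage in "$@"; do
    # Refuse to continue if a stage script is missing.
    if [ ! -f "$stage" ]; then
      echo "missing stage script: $stage" >&2
      return 1
    fi
    echo "==> running $stage"
    # Each stage script is run with bash, as in the commands above.
    if ! bash "$stage"; then
      echo "stage failed: $stage" >&2
      return 1
    fi
  done
  echo "all stages finished"
}

# Example, from the directory that holds the stage scripts:
# run_stages Pretrain.sh SingleImageSFT.sh MultiImageSFT.sh
```

Returning early, instead of enabling `set -e` globally, keeps the helper safe to source into an interactive shell. `Eval.sh` could be appended to the same call once the evaluation code is released.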

Evaluation

Coming Soon~

bash Eval.sh

TO DO

- Release Model Evaluation Code

- Release Data Construction Code

- Release Model Training Code

Acknowledgement

- LLaVA: Visual Instruction Tuning (LLaVA) built towards GPT-4V level capabilities and beyond.

Citation

@misc{wang2024longllavascalingmultimodalllms,

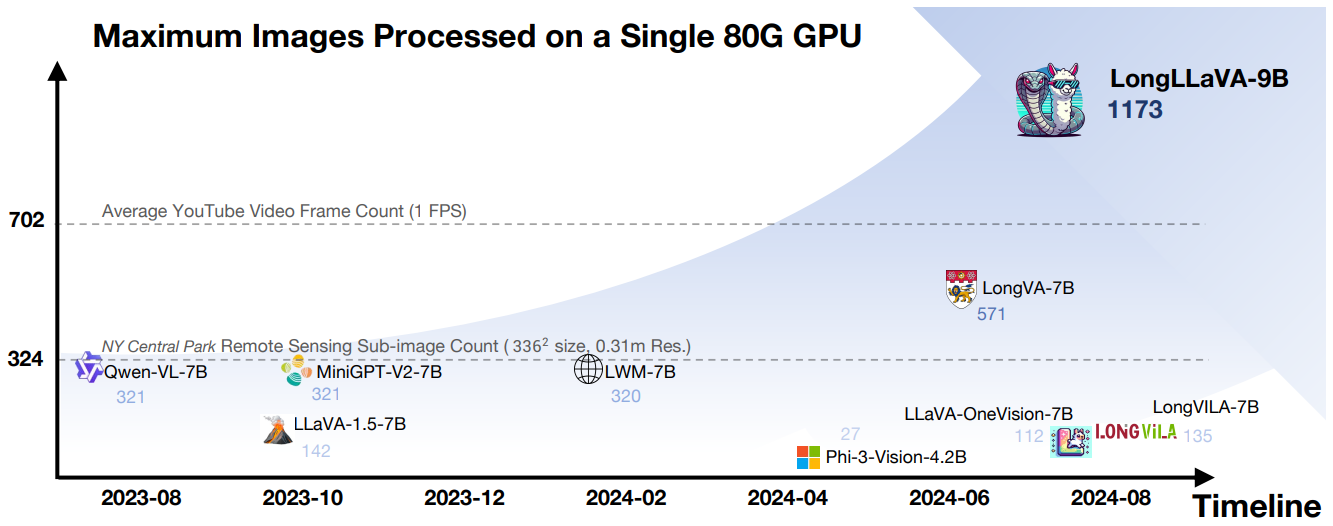

title={LongLLaVA: Scaling Multi-modal LLMs to 1000 Images Efficiently via Hybrid Architecture},

author={Xidong Wang and Dingjie Song and Shunian Chen and Chen Zhang and Benyou Wang},

year={2024},

eprint={2409.02889},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2409.02889},

}