SAMv2 is just mind-blowingly good 😍 Keep reading to learn what makes this model so good at video segmentation 🦆⇓

Check out the demo by @skalskip92 to see how to use the model locally.

Check out Meta's demo where you can edit segmented instances too!

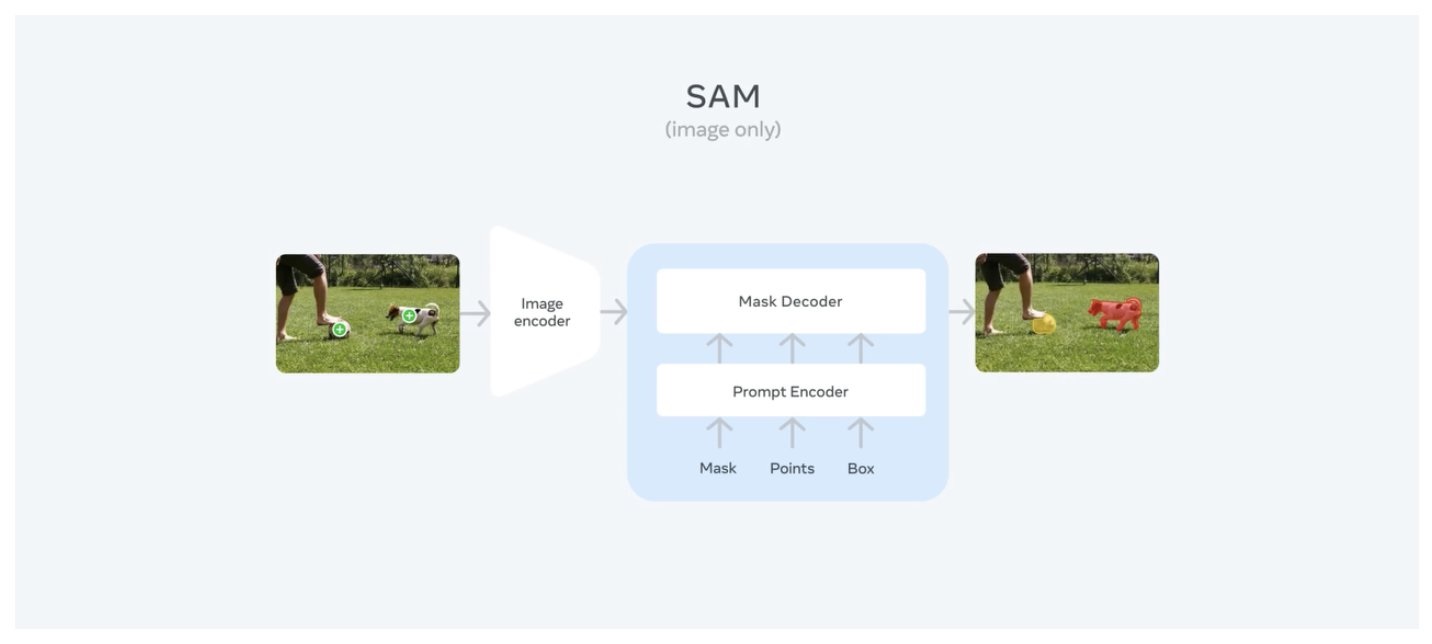

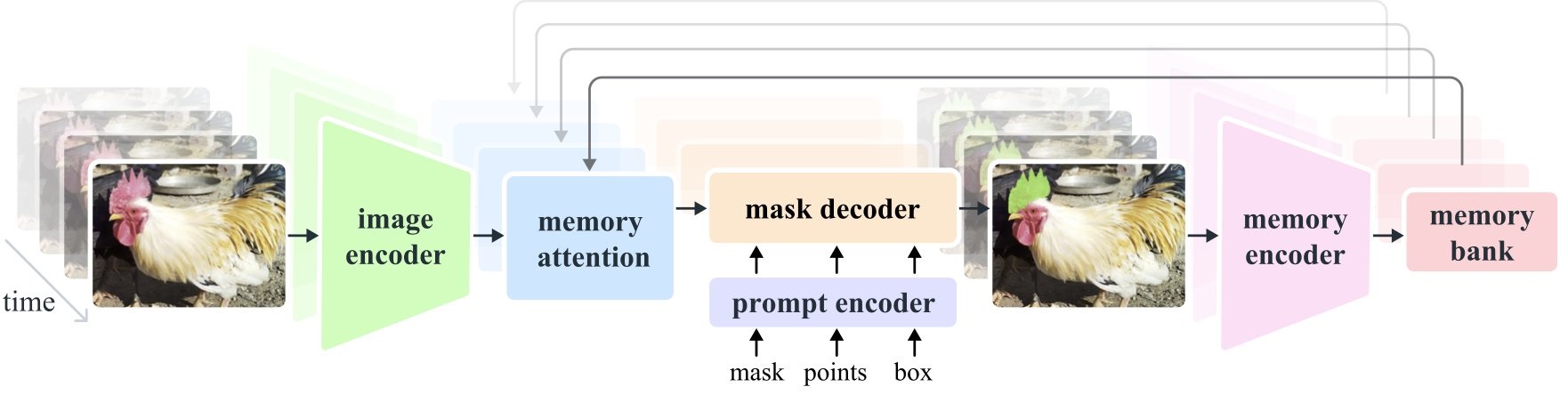

However, SAM doesn't natively track object instances across video frames: you would have to feed it every frame and re-enter the same mask or point prompt for that instance each time, which is infeasible 😔 But don't fret, that's where SAMv2 comes in with a memory module!

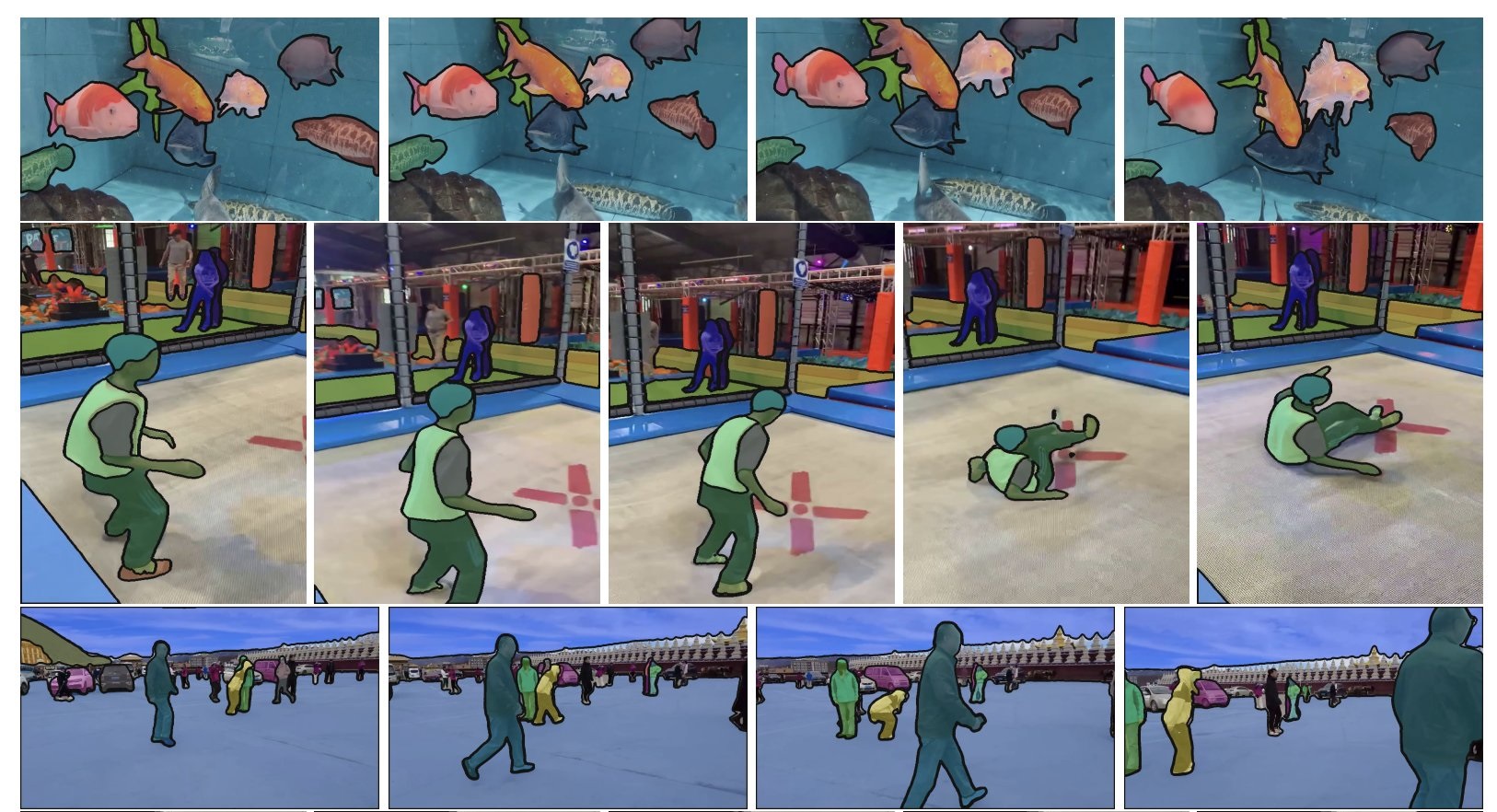

SAMv2 defines a new task called "masklet prediction", where a masklet is the same mask instance followed throughout the frames 🎞️ Unlike in SAM, the SAM 2 decoder is not fed the image embedding directly from the image encoder; instead, the frame embedding is first conditioned, through attention, on memories of prompted frames and on object pointers.
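To make "attention over memories" concrete, here is a toy, single-head cross-attention step in NumPy in which current-frame tokens attend to memory tokens. It is a minimal sketch under assumptions, not SAM 2's actual module: the function names, the random weights, and the token counts are illustrative, and the real memory attention is a stack of learned transformer blocks.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def memory_attention(frame_tokens, memory_tokens, w_q, w_k, w_v):
    """Toy cross-attention: condition current-frame tokens on memory tokens.

    frame_tokens:  (num_frame_tokens, d) features of the current frame
    memory_tokens: (num_memory_tokens, d) spatial memories + object pointers
    w_q, w_k, w_v: (d, d) projections (random here, learned in a real model)
    """
    q = frame_tokens @ w_q
    k = memory_tokens @ w_k
    v = memory_tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (num_frame_tokens, num_memory_tokens)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return frame_tokens + weights @ v        # residual: frame features + memory readout


rng = np.random.default_rng(0)
d = 8
frame = rng.standard_normal((16, d))    # e.g. a 4x4 feature map, flattened
memories = rng.standard_normal((5, d))  # e.g. a few memory and pointer tokens
w_q, w_k, w_v = (rng.standard_normal((d, d)) for _ in range(3))
conditioned = memory_attention(frame, memories, w_q, w_k, w_v)
print(conditioned.shape)  # (16, 8)
```

In this sketch, a decoder would consume `conditioned` instead of the raw frame embedding, which is how information from earlier (prompted) frames can reach the prediction for the current one.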

🖼️ These "memories" are essentially past predictions for the object of interest over a number of recent frames, stored as feature maps that carry location information (spatial feature maps) 👉🏻 The object pointers are lightweight vectors carrying high-level semantic information about the object of interest.
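The memory bank described above can be sketched as two bounded FIFO queues, one for recent frames and one for prompted frames, each entry holding a frame's spatial memory and object pointer. The `MemoryBank` class is hypothetical and for illustration only; the queue sizes are arbitrary, and storing each object pointer alongside its frame's spatial memory (so both are evicted together) is a simplifying assumption on our part.

```python
from collections import deque
from typing import Any, List, Tuple

# (frame index, spatial memory feature map, object pointer vector)
Memory = Tuple[int, Any, Any]


class MemoryBank:
    """Hypothetical memory bank: bounded FIFO queues of past-frame memories."""

    def __init__(self, max_recent: int = 6, max_prompted: int = 2):
        # deque(maxlen=...) silently drops the oldest entry once full (FIFO).
        self.recent: deque = deque(maxlen=max_recent)
        self.prompted: deque = deque(maxlen=max_prompted)

    def add(self, frame_idx: int, spatial_memory: Any, object_pointer: Any,
            prompted: bool = False) -> None:
        """Store the memory produced for a frame after its mask is predicted."""
        queue = self.prompted if prompted else self.recent
        queue.append((frame_idx, spatial_memory, object_pointer))

    def memories(self) -> List[Memory]:
        """Everything the next frame attends to: prompted frames, then recent ones."""
        return list(self.prompted) + list(self.recent)


bank = MemoryBank(max_recent=3)
bank.add(0, "mem0", "ptr0", prompted=True)  # the user clicked on frame 0
for i in range(1, 10):                       # frames 1..9 are propagated without prompts
    bank.add(i, f"mem{i}", f"ptr{i}")
print([idx for idx, _, _ in bank.memories()])  # [0, 7, 8, 9]
```

Because the prompted frame sits in its own queue, the user's original click keeps guiding predictions even after many unprompted frames, while the bounded recent-frame queue keeps the cost per frame fixed.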

Just like SAM, SAMv2 relies on a data engine, and the dataset it generated comes with the release: SA-V 🤯 This dataset is gigantic: it has 190.9K manual masklet annotations and 451.7K automatic masklets!
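Adding up the two figures above gives the overall size of SA-V in masklets, and shows that roughly 30% of them are manual annotations:

$$
\begin{aligned}
N_{\text{total}} &= N_{\text{manual}} + N_{\text{auto}} = 190.9\text{K} + 451.7\text{K} = 642.6\text{K masklets},\\
\frac{N_{\text{manual}}}{N_{\text{total}}} &= \frac{190.9\text{K}}{642.6\text{K}} \approx 0.297 \approx 29.7\%.
\end{aligned}
$$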

In the first phase, they apply SAM to each frame to help human annotators label a video at six FPS, yielding high-quality data. In the second phase, they combine SAM and SAM2 to generate masklets that are consistent across time. Finally, they use SAM2 to refine the masklets.
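Annotating "at six FPS" means annotators only label a subset of a video's frames. Below is a minimal sketch of picking those frames, assuming annotation timestamps are evenly spaced and snapped to the nearest source frame. The helper name `frames_at_target_fps` and the example source frame rates are hypothetical, not taken from the SA-V tooling.

```python
def frames_at_target_fps(num_frames: int, source_fps: float,
                         target_fps: float = 6.0) -> list[int]:
    """Indices of source frames to annotate when sampling at target_fps.

    Samples timestamps k / target_fps seconds and snaps each one to the
    nearest source frame, skipping duplicates and anything past the end.
    """
    if target_fps <= 0 or source_fps <= 0:
        raise ValueError("frame rates must be positive")
    step = source_fps / target_fps  # source frames between two annotated frames
    indices: list[int] = []
    k = 0
    while True:
        idx = round(k * step)
        if idx >= num_frames:
            break
        if not indices or idx != indices[-1]:
            indices.append(idx)
        k += 1
    return indices


# A 2-second clip at 30 FPS has 60 frames; sampling at 6 FPS keeps every 5th one.
print(frames_at_target_fps(60, source_fps=30.0))
# [0, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55]
```

Snapping to the nearest frame keeps the samples close to evenly spaced even when the source frame rate is not a multiple of 6.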

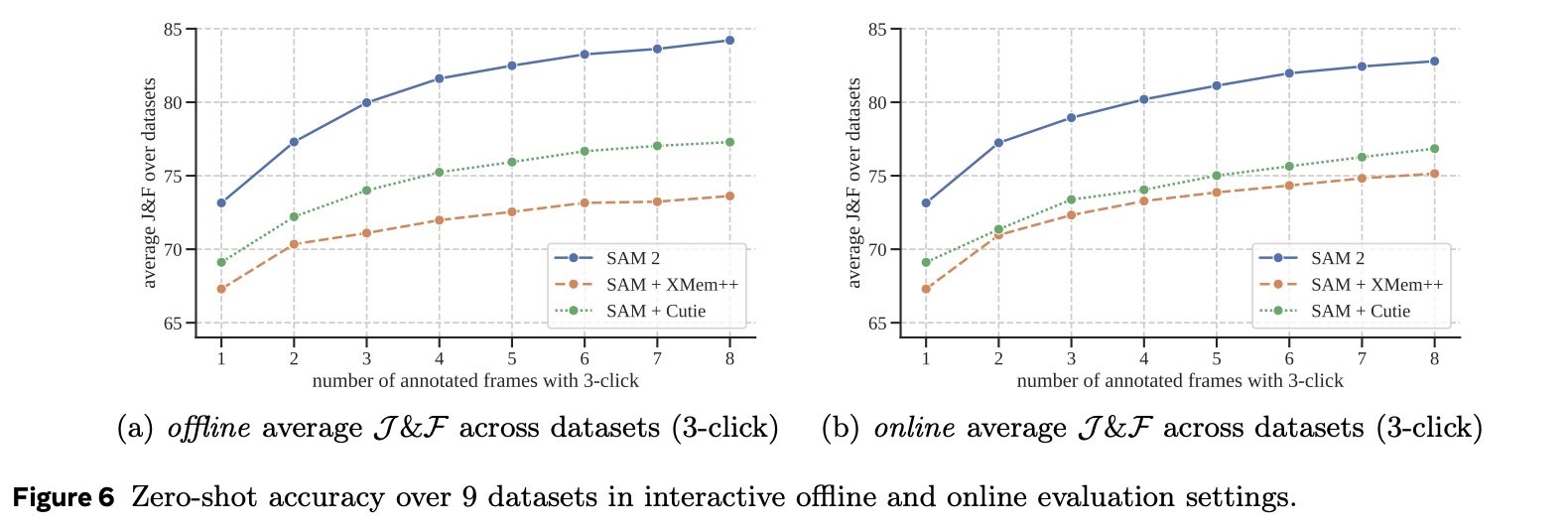

They evaluated the model with the J&F score (the mean of the Jaccard index, which measures region overlap, and the F-measure, which measures contour accuracy), a standard metric for zero-shot video segmentation benchmarks. SAMv2 seems to outperform two previous state-of-the-art models that are built on top of SAM! 🥹
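To make the metric concrete, here is a simplified per-frame J&F for binary masks in NumPy: J is the intersection-over-union of the predicted and ground-truth masks, F is the harmonic mean of boundary precision and recall, and J&F is their average. This is a sketch under assumptions, not the official DAVIS evaluation code: the 4-neighbour boundary extraction, the square dilation used as the matching tolerance, and the default `tol=1` pixel are illustrative choices (the official toolkit extracts boundaries with its own routine and ties the tolerance to the image size).

```python
import numpy as np


def jaccard(pred: np.ndarray, gt: np.ndarray) -> float:
    """Region similarity J: intersection-over-union of two binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: count it as a perfect match
    return float(np.logical_and(pred, gt).sum() / union)


def boundary(mask: np.ndarray) -> np.ndarray:
    """Mask pixels that have at least one 4-neighbour outside the mask."""
    m = mask.astype(bool)
    p = np.pad(m, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return m & ~interior


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2r+1)x(2r+1) square, built from shifted copies."""
    h, w = mask.shape
    p = np.pad(mask.astype(bool), radius, constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= p[dy:dy + h, dx:dx + w]
    return out


def boundary_f(pred: np.ndarray, gt: np.ndarray, tol: int = 1) -> float:
    """Contour accuracy F: harmonic mean of boundary precision and recall."""
    pb, gb = boundary(pred), boundary(gt)
    if not pb.any() and not gb.any():
        return 1.0
    if not pb.any() or not gb.any():
        return 0.0
    # A boundary pixel "matches" if the other boundary lies within tol pixels.
    precision = (pb & dilate(gb, tol)).sum() / pb.sum()
    recall = (gb & dilate(pb, tol)).sum() / gb.sum()
    if precision + recall == 0:
        return 0.0
    return float(2 * precision * recall / (precision + recall))


def j_and_f(pred: np.ndarray, gt: np.ndarray, tol: int = 1) -> float:
    """Per-frame J&F: the average of region similarity and contour accuracy."""
    return (jaccard(pred, gt) + boundary_f(pred, gt, tol)) / 2


gt = np.zeros((10, 10), dtype=bool)
gt[2:6, 2:6] = True               # 4x4 ground-truth square
pred = np.zeros_like(gt)
pred[2:6, 2:4] = True             # prediction covers only the left half
print(jaccard(pred, gt))          # 0.5
print(round(j_and_f(gt, gt), 3))  # 1.0
```

Over a whole video, benchmarks typically average such per-frame values across frames and objects to report a single J&F number.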

Resources:

SAM 2: Segment Anything in Images and Videos

by Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, Eric Mintun, Junting Pan, Kalyan Vasudev Alwala, Nicolas Carion, Chao-Yuan Wu, Ross Girshick, Piotr Dollár, Christoph Feichtenhofer (2024)

GitHub

Hugging Face documentation

Original tweet (July 31, 2024)