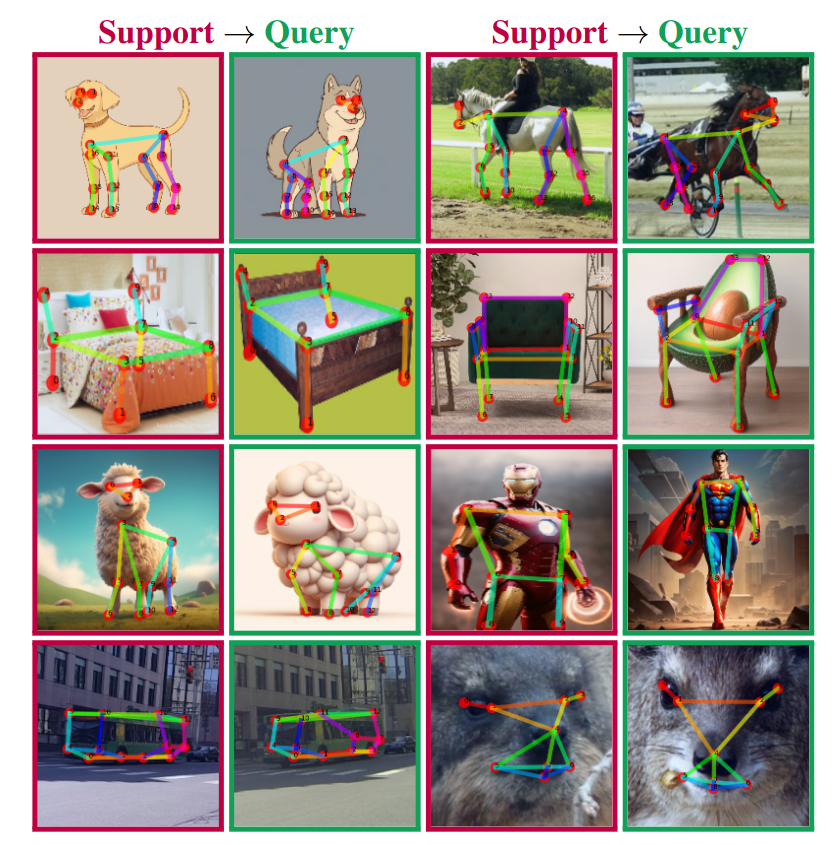

Pose Anything: A Graph-Based Approach for Category-Agnostic Pose Estimation

By Or Hirschorn and Shai Avidan

This repo is the official implementation of "Pose Anything: A Graph-Based Approach for Category-Agnostic Pose Estimation".

Introduction

We present a novel approach to category-agnostic pose estimation (CAPE) that leverages the inherent geometrical relations between keypoints through a newly designed Graph Transformer Decoder. By capturing and incorporating this structural information, our method improves the accuracy of keypoint localization, marking a significant departure from conventional CAPE techniques that treat keypoints as isolated entities.

Citation

If you find this useful, please cite this work as follows:

@misc{hirschorn2023pose,
      title={Pose Anything: A Graph-Based Approach for Category-Agnostic Pose Estimation},
      author={Or Hirschorn and Shai Avidan},
      year={2023},
      eprint={2311.17891},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}

Getting Started

Docker [Recommended]

We provide a Docker image for easy use. Pull the image, which contains all the required libraries and packages, from Docker Hub and start a container. In the run command, replace {DATA_DIR} with the local directory that holds the MP-100 data:

docker pull orhir/pose_anything

docker run --name pose_anything -v {DATA_DIR}:/workspace/PoseAnything/PoseAnything/data/mp100 -it orhir/pose_anything /bin/bash

Conda Environment

We train and evaluate our model on Python 3.8 and PyTorch 2.0.1 with CUDA 12.1.

First install PyTorch and torchvision following the official PyTorch documentation. Then follow the MMPose installation guide to install the following packages:

mmcv-full=1.6.2

mmpose=0.29.0

Having installed these packages, run:

python setup.py develop
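Before training or running the demo, it can help to confirm that the installed packages match the versions listed above. The following is a minimal sketch and not part of the repository: the helper name `check_versions` is hypothetical, and the distribution names (`torch`, `mmcv-full`, `mmpose`) are assumed to be the names the packages were installed under.

```python
from importlib import metadata

# Versions stated in this README; the distribution names are assumptions.
EXPECTED = {"torch": "2.0.1", "mmcv-full": "1.6.2", "mmpose": "0.29.0"}


def check_versions(expected=EXPECTED, lookup=metadata.version):
    """Return {package: (expected, found)} for packages that are missing or differ."""
    problems = {}
    for name, want in expected.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            found = None
        # Local build tags such as "2.0.1+cu121" are treated as a match.
        if found is None or found.split("+")[0] != want:
            problems[name] = (want, found)
    return problems


if __name__ == "__main__":
    for name, (want, found) in check_versions().items():
        print(f"{name}: expected {want}, found {found or 'not installed'}")
```

An empty result means all three packages were found at the expected versions.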

Demo on Custom Images

We provide demo code for testing the model on custom images.

A bigger and more accurate version of the model - COMING SOON!

Gradio Demo

First, install Gradio:

pip install gradio==3.44.0

Then download the pretrained model and run:

python app.py --checkpoint [path_to_pretrained_ckpt]

Terminal Demo

Download the pretrained model and run:

python demo.py --support [path_to_support_image] --query [path_to_query_image] --config configs/demo_b.py --checkpoint [path_to_pretrained_ckpt]

Note: The demo code supports any config with a suitable checkpoint file. More pretrained models can be found in the evaluation section.
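To run the terminal demo over several query images with one support image, the command above can be assembled programmatically. This is a sketch under assumptions: the helper names `build_demo_command` and `run_demo_on_folder` are hypothetical, the script is assumed to be launched from the repository root, and only the flags shown in the command above are used.

```python
import subprocess
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_demo_command(support, query, checkpoint, config="configs/demo_b.py"):
    """Assemble the demo.py invocation from this README as an argument list."""
    return [
        sys.executable, "demo.py",
        "--support", str(support),
        "--query", str(query),
        "--config", str(config),
        "--checkpoint", str(checkpoint),
    ]


def run_demo_on_folder(support, query_dir, checkpoint, dry_run=True):
    """Build one demo command per image in query_dir; execute them unless dry_run."""
    commands = []
    for query in sorted(Path(query_dir).iterdir()):
        if query.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files
        cmd = build_demo_command(support, query, checkpoint)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises if demo.py exits with an error
    return commands
```

With `dry_run=True` (the default) the function only returns the commands, which makes it easy to inspect them before launching anything.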

MP-100 Dataset

Please follow the official guide to prepare the MP-100 dataset for training and evaluation, and organize the data structure properly.

We provide an updated annotation file, which includes skeleton definitions, in the following link.

Please note:

The current version of the MP-100 dataset contains some discrepancies and filename errors:

- The dataset referred to as DeepFashion is actually the DeepFashion2 dataset. The link in the official repo is wrong. Use this repo instead.

- We provide a script to fix CarFusion filename errors, which can be run by:

python tools/fix_carfusion.py [path_to_CarFusion_dataset] [path_to_mp100_annotation]

Training

Backbone Options

To use the pretrained Swin Transformer backbone from our paper, download the weights (taken from this repo) from the following link.

These should be placed in the ./pretrained folder.

We also support DINO and ResNet backbones, which can be selected through the config file.

To switch backbones, set the pretrained field in the config file to dinov2, dino, or resnet. The corresponding pretrained weights are then loaded automatically from the official repo.
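As an illustration of the backbone switch, the sketch below treats the config as a plain Python dict. Only the `pretrained` key and the three keywords come from the text above; the surrounding `model` dict, the placeholder checkpoint path, and the helper `with_backbone` are assumptions made for the example and may not match the actual config layout.

```python
# Hypothetical config fragment: only the `pretrained` key is taken from the README.
model = dict(
    pretrained="pretrained/<swin_checkpoint>.pth",  # placeholder, not a real filename
)

# Backbone keywords named in the README.
SUPPORTED_KEYWORDS = ("dinov2", "dino", "resnet")


def with_backbone(model_cfg, backbone):
    """Return a copy of model_cfg whose `pretrained` field selects another backbone."""
    if backbone not in SUPPORTED_KEYWORDS:
        raise ValueError(f"unsupported backbone keyword: {backbone!r}")
    updated = dict(model_cfg)  # shallow copy, so the original config is left untouched
    updated["pretrained"] = backbone
    return updated


dino_model = with_backbone(model, "dinov2")
```

In practice the same change is a one-line edit of the `pretrained` value in the chosen config file.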

Training

To train the model, run:

python train.py --config [path_to_config_file] --work-dir [path_to_work_dir]

Evaluation and Pretrained Models

You can download the pretrained checkpoints from the following link.

Below are the evaluation results of our pretrained models on the MP-100 dataset, along with the config files and checkpoints:

1-Shot Models

| Setting | split 1 | split 2 | split 3 | split 4 | split 5 |
|---|---|---|---|---|---|
| Tiny | 91.06 | 88.24 | 86.09 | 86.17 | 85.78 |
| | link / config | link / config | link / config | link / config | link / config |
| Small | 93.66 | 90.42 | 89.79 | 88.68 | 89.61 |
| | link / config | link / config | link / config | link / config | link / config |

5-Shot Models

| Setting | split 1 | split 2 | split 3 | split 4 | split 5 |
|---|---|---|---|---|---|
| Tiny | 94.18 | 91.46 | 90.50 | 90.18 | 89.47 |
| | link / config | link / config | link / config | link / config | link / config |
| Small | 96.51 | 92.15 | 91.99 | 92.01 | 92.36 |
| | link / config | link / config | link / config | link / config | link / config |
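For a single summary number per model, the per-split scores in the tables above can be averaged. The snippet below is a small sketch of that arithmetic, not an official result: the name `split_averages` is hypothetical, and the Tiny 1-shot split 2 entry is taken as 88.24, which is an assumption about the intended value.

```python
from statistics import mean

# Scores copied from the tables above, keyed by (setting, number of shots).
# Assumption: the Tiny 1-shot split 2 entry is taken as 88.24.
RESULTS = {
    ("Tiny", 1): [91.06, 88.24, 86.09, 86.17, 85.78],
    ("Small", 1): [93.66, 90.42, 89.79, 88.68, 89.61],
    ("Tiny", 5): [94.18, 91.46, 90.50, 90.18, 89.47],
    ("Small", 5): [96.51, 92.15, 91.99, 92.01, 92.36],
}


def split_averages(results=RESULTS):
    """Return the mean score over the five splits, rounded to two decimals."""
    return {key: round(mean(scores), 2) for key, scores in results.items()}


if __name__ == "__main__":
    for (setting, shots), avg in split_averages().items():
        print(f"{setting} {shots}-shot: {avg:.2f}")
```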

Evaluation

Evaluation on a single GPU takes approximately 30 minutes.

To evaluate the pretrained model, run:

python test.py [path_to_config_file] [path_to_pretrained_ckpt]

Acknowledgement

Our code is based on code from:

License

This project is released under the Apache 2.0 license.