
Ayush Shrivastava committed

Commit 23f826d · 1 parent: 4bfb461

final changes

Files changed:

- app.py +32 -11
- plot_1.jpg +0 -0
- plot_2.jpg +0 -0

app.py (changed)

Before this commit:

```diff
@@ -40,7 +40,7 @@ def model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, it
     model.compile(optimizer=opt,loss = 'mean_squared_error',metrics=['mean_squared_error'])
 
     # Fit model.
-    model.fit(X_train, y_train, epochs=iter
 
     # Evaluate model.
     y_hat = model.predict(X_test)
```

```diff
@@ -51,18 +51,38 @@ def model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, it
 
 
 def get_model_summary(model):
     stream = io.StringIO()
     model.summary(print_fn=lambda x: stream.write(x + '\n'))
     summary_string = stream.getvalue()
     stream.close()
     return summary_string
 
 
 
 if __name__ == '__main__':
 
-
     st.title("Visualize MLPs")
 
     # Adding a subtitle to the app.
     st.subheader('MLP Parameters')
```

```diff
@@ -73,22 +93,22 @@ if __name__ == '__main__':
     with left_column:
 
         # slider for max iterations.
-        iter = st.slider('
         # slider for nodes per layer.
-        nodes = st.slider('Nodes', min_value=1,max_value=
         # slider for number of hidden layers.
-        layers = st.slider('Hidden Layers', min_value=1,max_value= 10,value=3,step=1)
         # selectbox for activation function.
         activation = st.selectbox('Activation (Output layer will always be linear)',('linear','relu','sigmoid','tanh'),index=2)
 
     with right_column:
 
         # slider for adding noise.
-        noise = st.slider('Noise', min_value=0,max_value= 100,value=20,step=10)
         # slider for test-train split.
         split = st.slider('Test-Train Split', min_value=0.1,max_value= 0.9,value=0.3,step=0.1)
         # selectbox for solver/optimizer.
-        solver = st.selectbox('Solver',('adam','sgd'),index=0)
         # selectbox for learning rate.
         rate = float(st.selectbox('Learning Rate',('0.001','0.003','0.01','0.03','0.1','0.3','1.0'),index=3))
 
```

```diff
@@ -97,7 +117,7 @@ if __name__ == '__main__':
     y = X + np.sin(X)*X/5*noise/50*np.random.choice([0,0.5,1,1.5]) + np.random.normal(0,2,250)*noise/100
 
     # Split data into training and test sets.
-    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=split
 
     # Predicting the test data.
     y_hat,model = model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, iter)
```

```diff
@@ -112,7 +132,8 @@ if __name__ == '__main__':
 
     # Adding a subheader to the container.
     st.subheader('Predictions')
-
     # Adding two columns to display the graphs.
     left_graph, right_graph = st.columns(2)
 
```

```diff
@@ -122,7 +143,7 @@ if __name__ == '__main__':
     st.write('Training Data set')
 
     fig1, ax1 = plt.subplots(1)
-    ax1.scatter(X_train, y_train, label='train',color='blue',alpha=0.
 
     # setting the labels and title of the graph.
     ax1.set_xlabel('X')
```

```diff
@@ -140,7 +161,7 @@ if __name__ == '__main__':
     st.write('Test Data set')
 
     fig2, ax2 = plt.subplots(1)
-    ax2.scatter(X_test, y_test, label='test',color='blue',alpha=0.
 
     test = np.c_[(X_test,y_hat)]
     test = test[test[:,0].argsort()]
```

After this commit:

```diff
     model.compile(optimizer=opt,loss = 'mean_squared_error',metrics=['mean_squared_error'])
 
     # Fit model.
+    model.fit(X_train, y_train, epochs=iter)
 
     # Evaluate model.
     y_hat = model.predict(X_test)
```
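This hunk only touches the `model.fit` call, which hands training over to Keras. The following is a rough NumPy illustration of what fitting an MLP by backpropagation involves. It is a sketch under stated assumptions: one hidden `tanh` layer, a linear output, a mean-squared-error loss, and plain full-batch gradient descent. That optimizer is swapped in for the app's Keras Adam/SGD. `train_mlp` and its parameters are hypothetical names, not part of app.py.

```python
import numpy as np


def train_mlp(X, y, nodes=5, epochs=500, rate=0.01, seed=0):
    """Fit a 1-hidden-layer MLP (tanh hidden units, linear output) by
    full-batch gradient descent on mean squared error. Hypothetical helper."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float).reshape(-1, 1)
    y = np.asarray(y, dtype=float).reshape(-1, 1)
    n = X.shape[0]

    # Small random weights, zero biases.
    W1 = rng.normal(0.0, 0.5, size=(1, nodes))
    b1 = np.zeros(nodes)
    W2 = rng.normal(0.0, 0.5, size=(nodes, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = np.tanh(X @ W1 + b1)
        y_hat = h @ W2 + b2                     # linear output layer
        err = y_hat - y
        losses.append(float(np.mean(err ** 2)))

        # Backward pass: chain rule through the MSE loss and both layers.
        d_out = 2.0 * err / n                   # dLoss/dy_hat
        dW2, db2 = h.T @ d_out, d_out.sum(axis=0)
        d_h = (d_out @ W2.T) * (1.0 - h ** 2)   # tanh'(z) = 1 - tanh(z)**2
        dW1, db1 = X.T @ d_h, d_h.sum(axis=0)

        # Gradient-descent update (one "epoch" = one full-batch step here).
        W1 -= rate * dW1
        b1 -= rate * db1
        W2 -= rate * dW2
        b2 -= rate * db2

    def predict(X_new):
        X_new = np.asarray(X_new, dtype=float).reshape(-1, 1)
        return np.tanh(X_new @ W1 + b1) @ W2 + b2

    return predict, losses


# Usage: learn the noise-free target y = x on a hypothetical grid.
X_demo = np.linspace(-2, 2, 100)
predict, losses = train_mlp(X_demo, X_demo)
print(f"MSE after first epoch: {losses[0]:.4f}, after last: {losses[-1]:.4f}")
```

In Keras, `epochs` counts passes over the data, and each pass can contain several mini-batch updates. Here each epoch is one full-batch step, which keeps the gradient code short.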

```diff
 
 
 def get_model_summary(model):
+    """Gets the summary of the model"""
+
+    # Creating a stream to store the summary.
     stream = io.StringIO()
+
+    # Printing the summary to the stream.
     model.summary(print_fn=lambda x: stream.write(x + '\n'))
+
+    # Getting the summary from the stream.
     summary_string = stream.getvalue()
+
+    # Closing the stream.
     stream.close()
+
+    # Returning the summary.
     return summary_string
 
 
 
 if __name__ == '__main__':
 
+    with st.sidebar:
+        # Adding a title to the app.
+        st.header('Multi Layer Perceptron Neural Network')
+
+        st.write('A multilayer perceptron(MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean any feedforward ANN, sometimes strictly to refer to networks composed of multiple layers of perceptrons (with threshold activation)')
+
+        st.write('An MLP consists of at least three layers of nodes: an input layer, a hidden layer and an output layer. Except for the input nodes, each node is a neuron that uses a nonlinear activation function. MLP utilizes a chain rule based supervised learning technique called backpropagation or reverse mode of automatic differentiation for training.Its multiple layers and non-linear activation distinguish MLP from a linear perceptron. It can distinguish data that is not linearly separable.')
+
+
     st.title("Visualize MLPs")
+    st.write('This app is created to visualize the Multi layer perceptron Neural Network model. You can change the parameters and see the changes in the model architecture and the predictions.')
 
     # Adding a subtitle to the app.
     st.subheader('MLP Parameters')
```
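`get_model_summary` captures the model summary as a string. It passes a `print_fn` callback that writes each emitted line, plus a newline, into an `io.StringIO` buffer. The sketch below shows the same pattern without TensorFlow. `fake_summary` is a hypothetical stand-in for `model.summary`, and its table text is made up for illustration. Using the buffer as a context manager closes it automatically, replacing the explicit `stream.close()`. The callback also accepts and ignores extra keyword arguments, as a precaution in case a library passes any.

```python
import io


def fake_summary(print_fn=print):
    """Hypothetical stand-in for a Keras-style summary(): emits one line at a time."""
    for line in ("Layer (type)    Output Shape    Param #",
                 "dense (Dense)   (None, 5)       10",
                 "Total params: 10"):
        print_fn(line)


def capture_summary(summary_fn):
    """Collect every line that summary_fn prints into a single string."""
    with io.StringIO() as stream:
        # Same idea as get_model_summary: write each line plus a newline.
        summary_fn(print_fn=lambda line, **_: stream.write(line + "\n"))
        # getvalue() runs before the with-block closes the buffer.
        return stream.getvalue()


print(capture_summary(fake_summary))
```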

```diff
     with left_column:
 
         # slider for max iterations.
+        iter = st.slider('No. of Iterations to run', min_value=10,max_value= 1000,value=50,step=10)
         # slider for nodes per layer.
+        nodes = st.slider('No. of Nodes in each layer', min_value=1,max_value= 15,value=5,step=1)
         # slider for number of hidden layers.
+        layers = st.slider('No. of Hidden Layers in the Network', min_value=1,max_value= 10,value=3,step=1)
         # selectbox for activation function.
         activation = st.selectbox('Activation (Output layer will always be linear)',('linear','relu','sigmoid','tanh'),index=2)
 
     with right_column:
 
         # slider for adding noise.
+        noise = st.slider('Noise (Sinusoidal Noise)', min_value=0,max_value= 100,value=20,step=10)
         # slider for test-train split.
         split = st.slider('Test-Train Split', min_value=0.1,max_value= 0.9,value=0.3,step=0.1)
         # selectbox for solver/optimizer.
+        solver = st.selectbox('Solver/Optimizer',('adam','sgd'),index=0)
         # selectbox for learning rate.
         rate = float(st.selectbox('Learning Rate',('0.001','0.003','0.01','0.03','0.1','0.3','1.0'),index=3))
 
```
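The `layers` and `nodes` sliders set the size of the network that the model summary reports. The construction code in `model_MLP` is not in this diff. Assuming it stacks `layers` fully connected hidden layers of `nodes` units on a single input feature and ends in one linear output unit, the trainable-parameter count can be sketched as follows. The helper names are hypothetical. Each dense layer contributes `n_in * n_out` weights plus `n_out` biases.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


def mlp_param_count(layers: int, nodes: int, n_inputs: int = 1, n_outputs: int = 1) -> int:
    """Trainable parameters of `layers` hidden layers with `nodes` units each,
    followed by a linear output layer (assumed architecture)."""
    widths = [n_inputs] + [nodes] * layers + [n_outputs]
    return sum(dense_params(a, b) for a, b in zip(widths, widths[1:]))


print(mlp_param_count(3, 5))    # slider defaults: 76
print(mlp_param_count(10, 15))  # slider maxima: 2206
```

At the defaults the count is 10 + 30 + 30 + 6 = 76. This shows how quickly the hidden-to-hidden layers come to dominate as `nodes` grows.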

```diff
     y = X + np.sin(X)*X/5*noise/50*np.random.choice([0,0.5,1,1.5]) + np.random.normal(0,2,250)*noise/100
 
     # Split data into training and test sets.
+    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=split)
 
     # Predicting the test data.
     y_hat,model = model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, iter)
```
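The data set is the true function y = x plus a noise term scaled by the `noise` slider. Because `np.random.choice([0,0.5,1,1.5])` is called without a `size`, it draws a single amplitude per run, so the sinusoidal component has the same scale for every point. The NumPy sketch below reproduces that formula and then splits the data. It makes two assumptions. First, the app's `X` is not shown in the diff, so the evenly spaced grid is hypothetical. Second, `split_data` is a simple shuffle-and-slice stand-in for scikit-learn's `train_test_split`, not the library itself.

```python
import numpy as np


def make_data(noise: int, n: int = 250, seed: int = 0):
    """Noisy y = x data using the app's formula; X is a hypothetical grid."""
    rng = np.random.default_rng(seed)
    X = np.linspace(-10, 10, n)
    # One amplitude for the whole data set (choice without size -> one value).
    amp = rng.choice([0, 0.5, 1, 1.5])
    y = X + np.sin(X) * X / 5 * noise / 50 * amp + rng.normal(0, 2, n) * noise / 100
    return X, y


def split_data(X, y, test_size: float, seed: int = 0):
    """Shuffle, then slice off ceil(test_size * n) rows as the test set."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(np.ceil(test_size * len(X)))
    test, train = idx[:n_test], idx[n_test:]
    # Same return order as train_test_split: X_train, X_test, y_train, y_test.
    return X[train], X[test], y[train], y[test]


X, y = make_data(noise=20)
X_train, X_test, y_train, y_test = split_data(X, y, test_size=0.3)
print(len(X_train), len(X_test))
```

With `noise=0`, both noise terms vanish and `y` equals `X` exactly, which matches the "True function : y = x" caption the commit adds.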

```diff
 
     # Adding a subheader to the container.
     st.subheader('Predictions')
+    st.write('True function : y = x ')
+    st.write('Noise : some sinusoial function with random noise')
     # Adding two columns to display the graphs.
     left_graph, right_graph = st.columns(2)
 
```

|

|

|

| 143 |

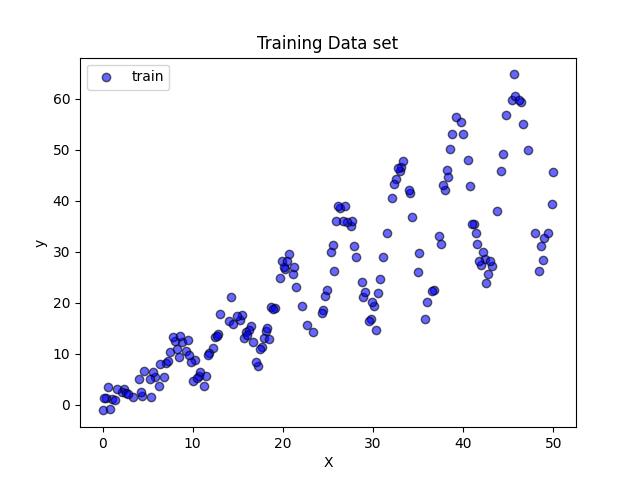

st.write('Training Data set')

|

| 144 |

|

| 145 |

fig1, ax1 = plt.subplots(1)

|

| 146 |

+

ax1.scatter(X_train, y_train, label='train',color='blue',alpha=0.4,edgecolors='black')

|

| 147 |

|

| 148 |

# setting the labels and title of the graph.

|

| 149 |

ax1.set_xlabel('X')

|

|

|

|

| 161 |

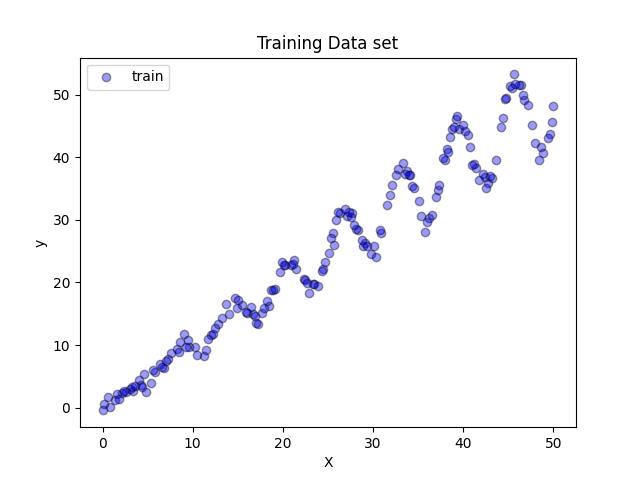

st.write('Test Data set')

|

| 162 |

|

| 163 |

fig2, ax2 = plt.subplots(1)

|

| 164 |

+

ax2.scatter(X_test, y_test, label='test',color='blue',alpha=0.4,edgecolors='black')

|

| 165 |

|

| 166 |

test = np.c_[(X_test,y_hat)]

|

| 167 |

test = test[test[:,0].argsort()]
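The last two context lines pair each test input with its prediction and reorder the rows by x. This is presumably so that the predictions can be drawn as a continuous line rather than a zig-zag through shuffled points (the plotting call itself is outside this hunk). `np.c_[(X_test, y_hat)]` is equivalent to `np.c_[X_test, y_hat]`, and `np.c_` turns a 1-D array into a column. The minimal sketch below wraps the two lines in a hypothetical `sort_by_x` helper.

```python
import numpy as np


def sort_by_x(X_test, y_hat):
    """Stack x and predictions as columns, then order rows by x."""
    test = np.c_[X_test, y_hat]         # shape (n, 2): column 0 = x, column 1 = y_hat
    return test[test[:, 0].argsort()]   # argsort of column 0 gives the row order


X_test = np.array([3.0, 1.0, 2.0])
y_hat = np.array([[30.0], [10.0], [20.0]])  # a one-unit output layer predicts shape (n, 1)
print(sort_by_x(X_test, y_hat))
```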

plot_1.jpg (changed)

plot_2.jpg (changed)