
Ayush Shrivastava committed 4bfb461 (parent: 60bdc2a)

Final changes

- app.py +12 -11
- plot_1.jpg +0 -0
- plot_2.jpg +0 -0

app.py CHANGED

```diff
@@ -11,7 +11,8 @@ import streamlit as st
 import numpy as np
 import io
 
-
+# set random seed
+np.random.seed(42)
 
 def model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, iter):
 """Creates a MLP model and return the predictions"""
@@ -78,7 +79,7 @@ if __name__ == '__main__':
 # slider for number of hidden layers.
 layers = st.slider('Hidden Layers', min_value=1,max_value= 10,value=3,step=1)
 # selectbox for activation function.
-activation = st.selectbox('Activation',('linear','relu','sigmoid','tanh'),index=
+activation = st.selectbox('Activation (Output layer will always be linear)',('linear','relu','sigmoid','tanh'),index=2)
 
 with right_column:
 
@@ -92,20 +93,17 @@ if __name__ == '__main__':
 rate = float(st.selectbox('Learning Rate',('0.001','0.003','0.01','0.03','0.1','0.3','1.0'),index=3))
 
 # Generating regression data.
-
-X
-y = np.sin(X) + X + X*np.random.normal(0,1,100)/5
+X=np.linspace(0,50,250)
+y = X + np.sin(X)*X/5*noise/50*np.random.choice([0,0.5,1,1.5]) + np.random.normal(0,2,250)*noise/100
 
 # Split data into training and test sets.
 X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=split,random_state=42)
 
 # Predicting the test data.
-X_test.sort(axis=0)
 y_hat,model = model_MLP(X_train,y_train,X_test,layers, nodes, activation, solver, rate, iter)
 
 # Printing Model Architecture.
 st.subheader('Model Architecture')
-# summary = get_model_summary(model)
 st.write(model.summary(print_fn=lambda x: st.text(x)))
 
 # Plotting the Prediction data.
@@ -142,9 +140,12 @@ if __name__ == '__main__':
 st.write('Test Data set')
 
 fig2, ax2 = plt.subplots(1)
-ax2.scatter(X_test, y_test, label='test',color='blue',alpha=0.6)
-
-
+ax2.scatter(X_test, y_test, label='test',color='blue',alpha=0.6,edgecolors='black')
+
+test = np.c_[(X_test,y_hat)]
+test = test[test[:,0].argsort()]
+ax2.plot(test[:,0],test[:,1], label='prediction',c='red',alpha=0.6,linewidth=2,marker='x')
+
 
 # setting the labels and title of the graph.
 ax2.set_xlabel('X')
@@ -157,7 +158,7 @@ if __name__ == '__main__':
 plt.savefig('plot_2.jpg')
 
 # Printing the Errors.
-st.subheader('Errors')
+st.subheader('Errors')
 
 # Calculating the MSE.
 mse = mean_squared_error(y_test, y_hat, squared=False)
```
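The new activation label says the output layer is always linear. The body of `model_MLP` is not part of this diff, so the following is only a NumPy sketch of that design, not the app's actual model: hidden layers apply the selected activation, while the output layer applies none. `ACTIVATIONS`, `init_weights` and `mlp_forward` are hypothetical names, and the weights are random rather than trained.

```python
import numpy as np

# Hypothetical lookup mirroring the selectbox options ('linear','relu','sigmoid','tanh').
ACTIVATIONS = {
    "linear": lambda z: z,
    "relu": lambda z: np.maximum(z, 0.0),
    "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
    "tanh": np.tanh,
}

def init_weights(layers, nodes, seed=42):
    """Random (untrained) weights: 1 input, `layers` hidden layers of `nodes` units, 1 output."""
    rng = np.random.default_rng(seed)
    sizes = [1] + [nodes] * layers + [1]
    return [(rng.normal(0, 0.5, (a, b)), np.zeros(b)) for a, b in zip(sizes[:-1], sizes[1:])]

def mlp_forward(X, weights, activation):
    """Apply `activation` on every hidden layer and keep the output layer linear."""
    act = ACTIVATIONS[activation]
    h = np.asarray(X, dtype=float).reshape(-1, 1)  # 1-D feature -> column vector
    for W, b in weights[:-1]:
        h = act(h @ W + b)
    W_out, b_out = weights[-1]
    return h @ W_out + b_out  # no activation here: regression targets are unbounded

X = np.linspace(0, 50, 5)
w = init_weights(layers=3, nodes=8)
print(mlp_forward(X, w, "sigmoid").shape)  # (5, 1)
```

A linear output matters for this data because the targets grow roughly with `X` over 0 to 50, while a sigmoid output unit could only produce values in (0, 1).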
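The commit swaps the old 100-point dataset for 250 evenly spaced points on [0, 50] and seeds NumPy's global generator so that reruns produce the same data. The sketch below wraps the new expression in a hypothetical `make_data` helper; `noise` is a value defined elsewhere in app.py (it is not shown in this diff), so here it is just a number. One detail worth seeing: `np.random.choice([0,0.5,1,1.5])` returns a single scalar, so the whole sine term gets one shared amplitude, which may be 0.

```python
import numpy as np

def make_data(noise, seed=42):
    """Rebuild the commit's regression data; `noise` stands in for the app's UI value."""
    np.random.seed(seed)  # same global seed value the commit sets
    X = np.linspace(0, 50, 250)
    # Drawn before np.random.normal, matching the left-to-right evaluation in the commit.
    # np.random.choice(...) returns one scalar, so the whole sine term shares this amplitude.
    amp = np.random.choice([0, 0.5, 1, 1.5])
    y = X + np.sin(X) * X / 5 * noise / 50 * amp + np.random.normal(0, 2, 250) * noise / 100
    return X, y, amp

X1, y1, a1 = make_data(noise=50)
X2, y2, a2 = make_data(noise=50)
print(np.array_equal(y1, y2))  # True: same seed, same data
```

With `noise=0` both random terms vanish and `y` equals `X`.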
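The commit also removes `X_test.sort(axis=0)`. Sorting `X_test` in place reorders the inputs but not `y_test`, so the test scatter and the error metric pair targets with the wrong inputs. The new lines leave `X_test` untouched and sort (input, prediction) pairs together, only for drawing the prediction line. The toy arrays below are hypothetical, and `y_hat` is assumed to be an (n, 1) column, as a single-unit Keras `Dense` output would be.

```python
import numpy as np

# Toy split output: train_test_split shuffles, so X_test is unordered (values hypothetical).
X_test = np.array([3.0, 1.0, 2.0])
y_test = 2 * X_test                    # true relation y = 2x, pairs still aligned
y_hat = (2 * X_test).reshape(-1, 1)    # pretend predictions as an (n, 1) column

# Old approach: sorting X_test in place silently breaks the (x, y) pairing.
X_old = X_test.copy()
X_old.sort(axis=0)
print(np.allclose(2 * X_old, y_test))  # False: y_test was not reordered

# New approach: stack (x, y_hat) as columns, then reorder whole rows by x.
test = np.c_[(X_test, y_hat)]          # 1-D X_test becomes a column -> shape (3, 2)
test = test[test[:, 0].argsort()]      # rows sorted by x, pairs kept intact
print(test)
```

Because whole rows move together, each prediction stays attached to its input, and the line drawn by `ax2.plot` runs left to right instead of zig-zagging.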
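The comment above the last line says MSE, but `mean_squared_error(..., squared=False)` returns the root mean squared error. Newer scikit-learn releases deprecate the `squared` argument in favor of `sklearn.metrics.root_mean_squared_error`, so it is worth checking the installed version. The NumPy sketch below (hypothetical `mse` and `rmse` helpers) shows the relationship; flattening both inputs avoids an accidental (n, n) broadcast between (n,) targets and (n, 1) predictions.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error; ravel() lines up (n,) targets with (n, 1) predictions."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    return np.mean((y_true - y_pred) ** 2)

def rmse(y_true, y_pred):
    """Root mean squared error: the quantity mean_squared_error(..., squared=False) reports."""
    return np.sqrt(mse(y_true, y_pred))

y_test = np.array([1.0, 2.0, 3.0])
y_hat = np.array([[1.0], [2.0], [5.0]])  # (n, 1) column of predictions
print(mse(y_test, y_hat))   # 1.3333333333333333
print(rmse(y_test, y_hat))
```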

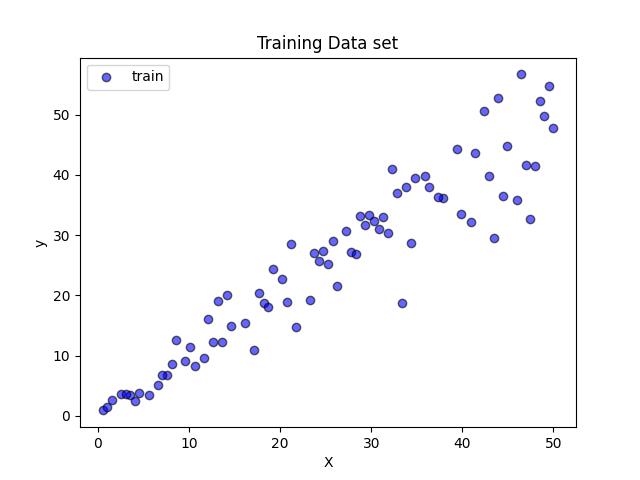

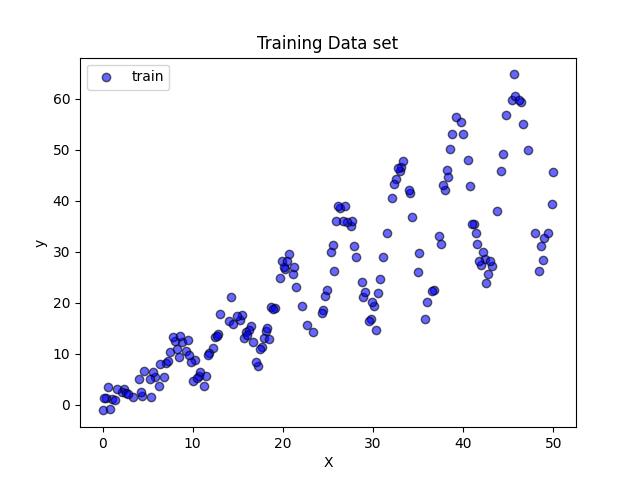

plot_1.jpg

CHANGED

|

|

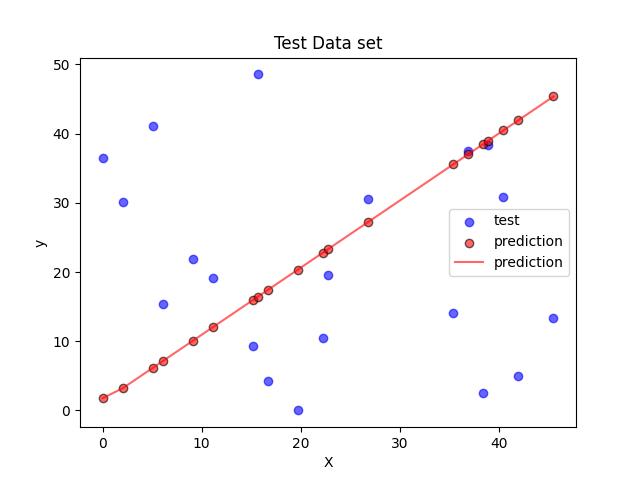

plot_2.jpg

CHANGED

|

|