MM-IGLU: Multi-Modal Interactive Grounded Language Understanding

This repository contains the code for the paper "MM-IGLU: Multi-Modal Interactive Grounded Language Understanding", accepted at the LREC-COLING 2024 conference and written by Claudiu Daniel Hromei (Tor Vergata, University of Rome), Daniele Margiotta (Tor Vergata, University of Rome), Danilo Croce (Tor Vergata, University of Rome) and Roberto Basili (Tor Vergata, University of Rome). The paper will be available here.

Usage

This model consists only of the LoRA adapters, so you need to load it on top of the LLaMA-2-chat 13B language model:

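    import torch
    from peft import PeftModel
    from llava.model import LlavaLlamaForCausalLM
    from transformers import AutoTokenizer

    # Set to True to load the base model in 8-bit (requires bitsandbytes).
    # The original snippet read this flag from command-line arguments.
    load_in_8bit = False

    tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-13b-chat-hf")

    # Load the base LLaMA-2-chat 13B weights inside the LLaVA wrapper.
    model = LlavaLlamaForCausalLM.from_pretrained(
        "meta-llama/Llama-2-13b-chat-hf",
        load_in_8bit=load_in_8bit,
        torch_dtype=torch.float16,
        device_map="auto",
    )

    # Attach the MM-IGLU LoRA adapters to the base model.
    model = PeftModel.from_pretrained(
        model,
        "sag-uniroma2/llava-Llama-2-chat-13b-hf-iglu-adapters",
        torch_dtype=torch.float16,
        device_map="auto",
    )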

Description

MM-IGLU is a multi-modal dataset for Interactive Grounded Language Understanding that expands the resource released during the IGLU competition. While the competition was text-only, we extended this resource by generating a 3D image for each representation of the world.

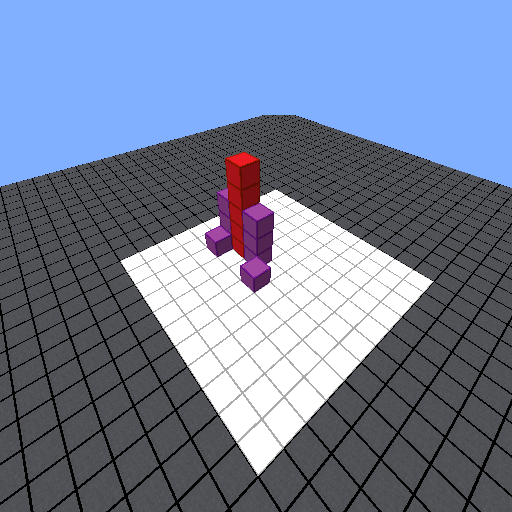

Given a 3d world and a command in natural language from a Human Architect, the task of a Robotic Builder is to assess if the command is executable based on the world and then execute it or, if more information is needed, to ask more questions. We report here an example of the image:

and a command like "Break the green blocks". If, like in this case, there are no green blocks, the Robotic Builder should answer back "There are no break blocks, which block should I break?". For the same image, if the command is "Break the red blocks", in this case, the Builder should understand that there are red blocks in the environment and should answer "I can execute it", confirming the feasibility of the command.
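
The expected behavior in the example above can be sketched as a toy decision rule. This is only an illustration of the input/output contract, not the model itself: the actual Builder reads a rendered 3D image and generates its answer with a language model. The function `builder_reply` and the symbolic list of block colors are hypothetical names introduced here for illustration.

```python
from typing import Iterable


def builder_reply(action: str, color: str, world_colors: Iterable[str]) -> str:
    """Toy stand-in for the Builder: decide whether a command is executable.

    `world_colors` is a hypothetical symbolic description of the world
    (one entry per block); the real model works from an image instead.
    """
    present = {c.lower() for c in world_colors}
    if color.lower() in present:
        # The referenced blocks exist, so the command is feasible.
        return "I can execute it"
    # The referenced blocks are missing, so ask a clarifying question.
    return f"There are no {color.lower()} blocks, which block should I {action.lower()}?"


# Assumed content of the example world: it contains red blocks and no green ones.
world = ["red", "red"]
print(builder_reply("break", "green", world))
print(builder_reply("break", "red", world))
```

The first call asks for clarification because no green block is present, while the second confirms that the command is feasible.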

We developed a multi-modal model based on LLaVA to solve the task, exploiting both the command and the 3D image. It couples a CLIP model, which handles the images, with a Language Model, which generates the answers. This model achieved the best performance when coupled with the LLaMA-2-chat-13b model.

GitHub

If you want more details, please consult the GitHub page, where you can find out how to use the model.
