Project Hinglish - A Hinglish to English Language Translator.

Project Hinglish aims to develop a high-performance language translation model capable of translating Hinglish (a blend of Hindi and English commonly used in informal communication in India) to standard English. The model is fine-tuned from gemma-2b using the PEFT (LoRA) method with rank 128. It is designed to handle the unique syntactic and lexical characteristics of Hinglish.

Fine-Tuning Method:

- Fine-Tuning Approach Using PEFT (LoRA): The fine-tuning employs Parameter-Efficient Fine-Tuning (PEFT) techniques, specifically LoRA (Low-Rank Adaptation). LoRA adapts a pre-trained model efficiently by introducing low-rank matrices alongside the model’s attention and feed-forward layers. Only these small matrices are trained, so the model can be adapted significantly while the original weights stay unchanged, preserving the original model's strengths while adapting it to the nuances of Hinglish.

- Dataset: cmu_hinglish_dog, combined with sentences taken from my own daily chats with friends and Uber messages.
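To make the low-rank idea concrete, here is a minimal sketch in plain Python. It is not the training code used for this model. The helper names, the tiny 2x2 matrices, the `alpha` scaling value, and the 2048 x 2048 layer size are all assumptions chosen for illustration; only the rank of 128 comes from the description above.

```python
# Illustrative sketch of the LoRA idea. All sizes and values here are
# assumptions for demonstration, not this model's real configuration.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * (B @ A): frozen weight plus low-rank update."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

# Tiny example: a frozen 2x2 weight with a rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]   # frozen pre-trained weight
B = [[1.0], [2.0]]             # d_out x rank, trainable
A = [[0.5, 0.5]]               # rank x d_in, trainable
W_eff = lora_effective_weight(W, B, A, alpha=2, rank=1)

# Hypothetical 2048 x 2048 projection adapted at rank 128.
full = 2048 * 2048
lora = lora_param_count(2048, 2048, 128)
print(W_eff, full, lora, lora / full)
```

Under these assumed sizes, the low-rank pair holds one eighth as many parameters as the full matrix it adapts, which is why only a small fraction of the model needs to be trained.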

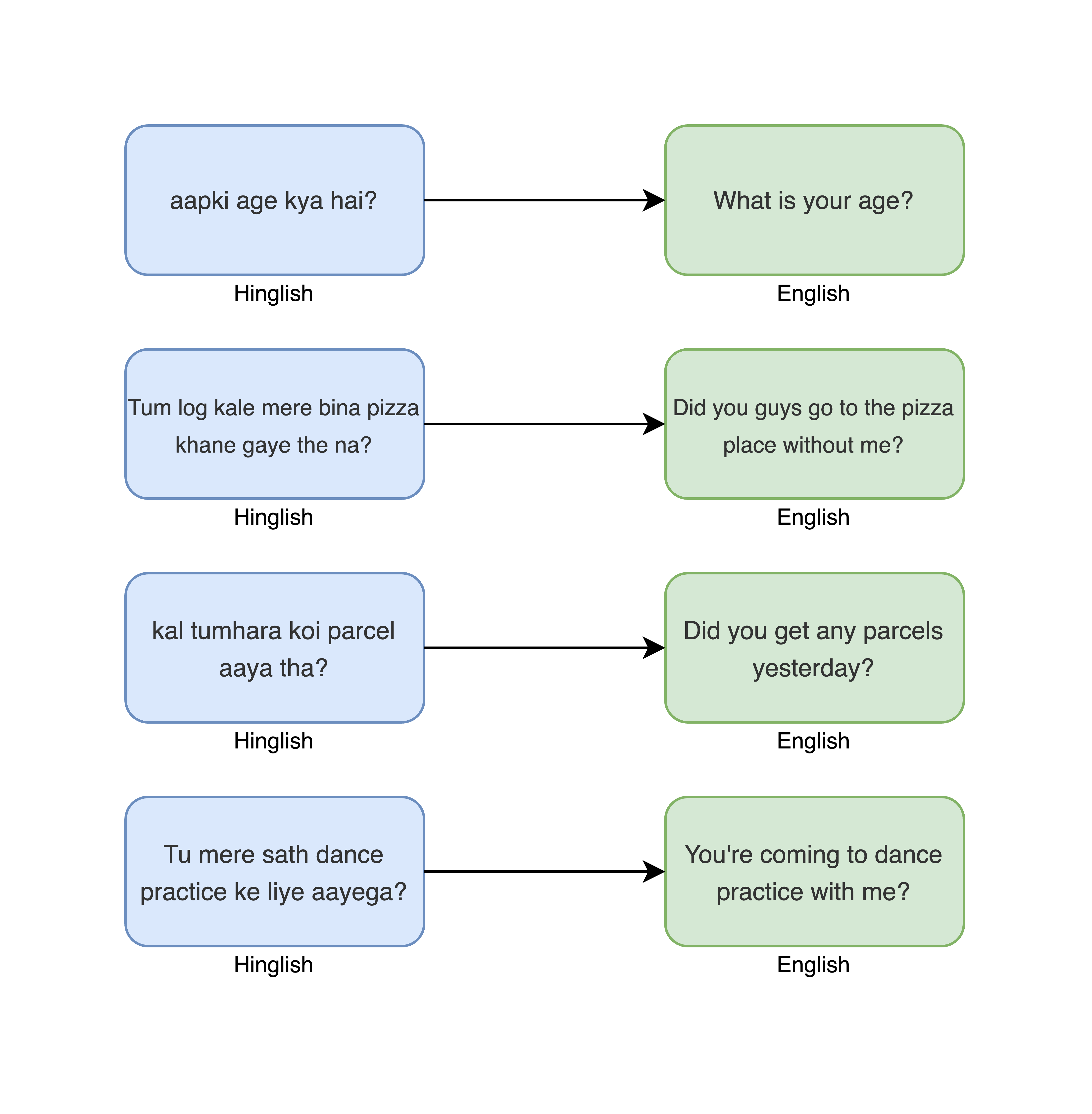

Example Output

Usage

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("rudrashah/RLM-hinglish-translator")

model = AutoModelForCausalLM.from_pretrained("rudrashah/RLM-hinglish-translator")

template = "Hinglish:\n{hi_en}\n\nEnglish:\n{en}"  # This prompt template is essential; without it the model produces random output

input_text = tokenizer(template.format(hi_en="aapka name kya hai?",en=""),return_tensors="pt")

output = model.generate(**input_text)

print(tokenizer.decode(output[0]))
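Since the decoded output of a causal model normally repeats the prompt, it can help to separate the translation from the rest. The sketch below is one possible way to do that with plain string handling. `build_prompt` and `extract_translation` are hypothetical helper names, the `<bos>` and `<eos>` markers are an assumption about which special tokens appear in the decoded text, and `sample` is a hand-written string standing in for real model output.

```python
# Hypothetical helpers around the prompt template; not part of the model's API.
TEMPLATE = "Hinglish:\n{hi_en}\n\nEnglish:\n{en}"

def build_prompt(hi_en):
    """Fill the template with the Hinglish input and an empty English slot."""
    return TEMPLATE.format(hi_en=hi_en, en="")

def extract_translation(decoded, special_tokens=("<bos>", "<eos>")):
    """Strip assumed special tokens and return the text after 'English:'."""
    for tok in special_tokens:
        decoded = decoded.replace(tok, "")
    _, _, tail = decoded.partition("English:\n")
    return tail.strip()

prompt = build_prompt("aapka name kya hai?")

# Hand-written stand-in for tokenizer.decode(output[0]); not real model output.
sample = "<bos>" + prompt + "What is your name?<eos>"
translation = extract_translation(sample)
print(translation)
```

Passing `skip_special_tokens=True` to `tokenizer.decode` is another way to drop the special tokens before extracting the text.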
