Whisper Large V3 Turbo (Swiss German Fine-Tuned with QLoRA)

This repository contains a fine-tuned version of OpenAI's Whisper Large V3 Turbo model, adapted to Swiss German dialects with QLoRA. In the evaluation reported below, it outperforms the base Whisper Large V3 and Large V3 Turbo models on Swiss German automatic speech recognition (ASR), on both WER and BLEU.

Model Summary

- Base Model: Whisper Large V3 Turbo

- Fine-Tuning Method: QLoRA (8-bit precision)

- Rank: 200

- Alpha: 16

- Hardware: 2x NVIDIA A100 80GB GPUs

- Training Time: 140 hours
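To give a feel for what the rank and alpha values above imply, the following sketch computes the LoRA scaling factor and the number of trainable parameters an adapter adds to a single square projection matrix. It assumes the standard LoRA scaling of alpha / rank (not a rank-stabilized variant) and a hidden size of 1280 for the Whisper Large family; which layers were actually adapted is not stated in this card, so the single-matrix figure is illustrative only.

```python
# Values taken from the Model Summary above.
RANK = 200
ALPHA = 16

# Assumption: hidden size of the Whisper Large family of models.
D_MODEL = 1280


def lora_scaling(alpha: float, rank: int) -> float:
    """Standard LoRA scaling applied to the low-rank update (alpha / rank)."""
    return alpha / rank


def lora_added_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in the two low-rank factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


scaling = lora_scaling(ALPHA, RANK)
added = lora_added_params(D_MODEL, D_MODEL, RANK)
full = D_MODEL * D_MODEL

print(f"scaling factor: {scaling:.2f}")
print(f"adapter params per {D_MODEL}x{D_MODEL} matrix: {added:,}")
print(f"fraction of the full matrix: {added / full:.4f}")
```

With an alpha much smaller than the rank, the low-rank update is scaled down considerably, which is one way to keep a high-rank adapter from dominating the frozen weights.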

Performance Metrics

- Word Error Rate (WER): 17.5%

- BLEU Score: 65.0

The model's performance has been evaluated across multiple datasets representing diverse dialectal and demographic distributions in Swiss German.
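For readers unfamiliar with the metric, WER is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words. The sketch below is a minimal pure-Python version for illustration; the evaluation script, text normalization, and aggregation used for the numbers in this card are not specified here, so this is not necessarily how they were computed.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One word missing out of four reference words.
print(word_error_rate("das ist ein test", "das ist test"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.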

Dataset Summary

The model has been trained and evaluated on a comprehensive suite of Swiss German datasets:

SDS-200 Corpus

- Size: 200 hours

- Description: A corpus covering all Swiss German dialects.

STT4SG-350

- Size: 343 hours

- Description: Balanced distribution across Swiss German dialects and demographics, including gender representation.

SwissDial-Zh v1.1

- Size: 24 hours

- Description: A dataset with balanced representation of Swiss German dialects.

Swiss Parliament Corpus V2 (SPC)

- Size: 293 hours

- Description: Parliament recordings across Swiss German dialects.

ASGDTS (All Swiss German Dialects Test Set)

- Size: 13 hours

- Description: A stratified dataset closely resembling real-world Swiss German dialect distribution.

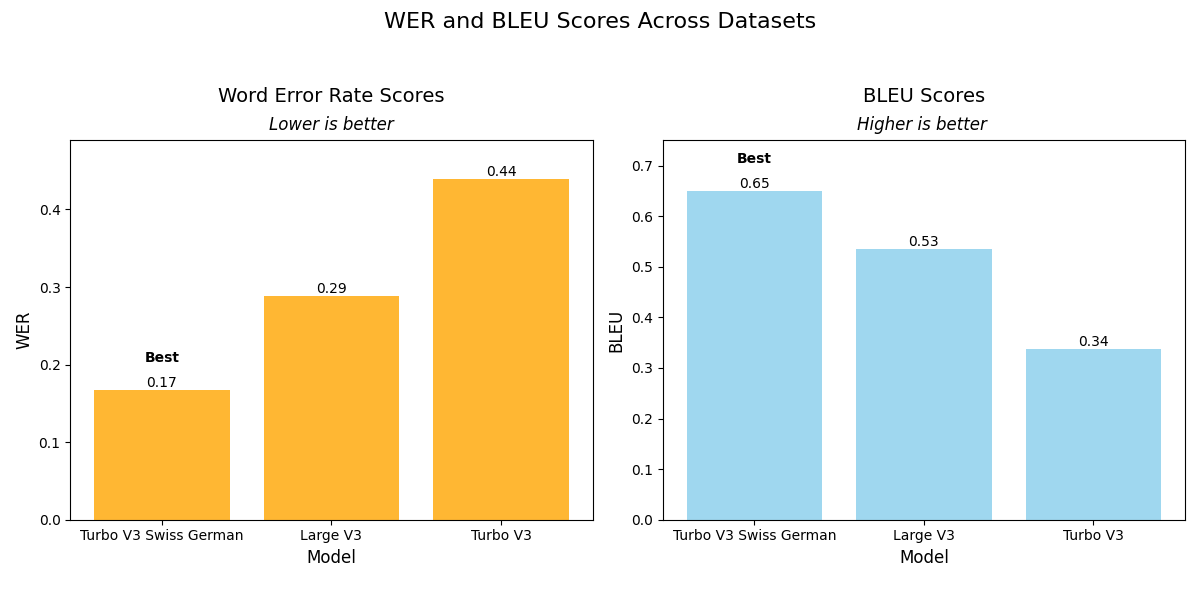

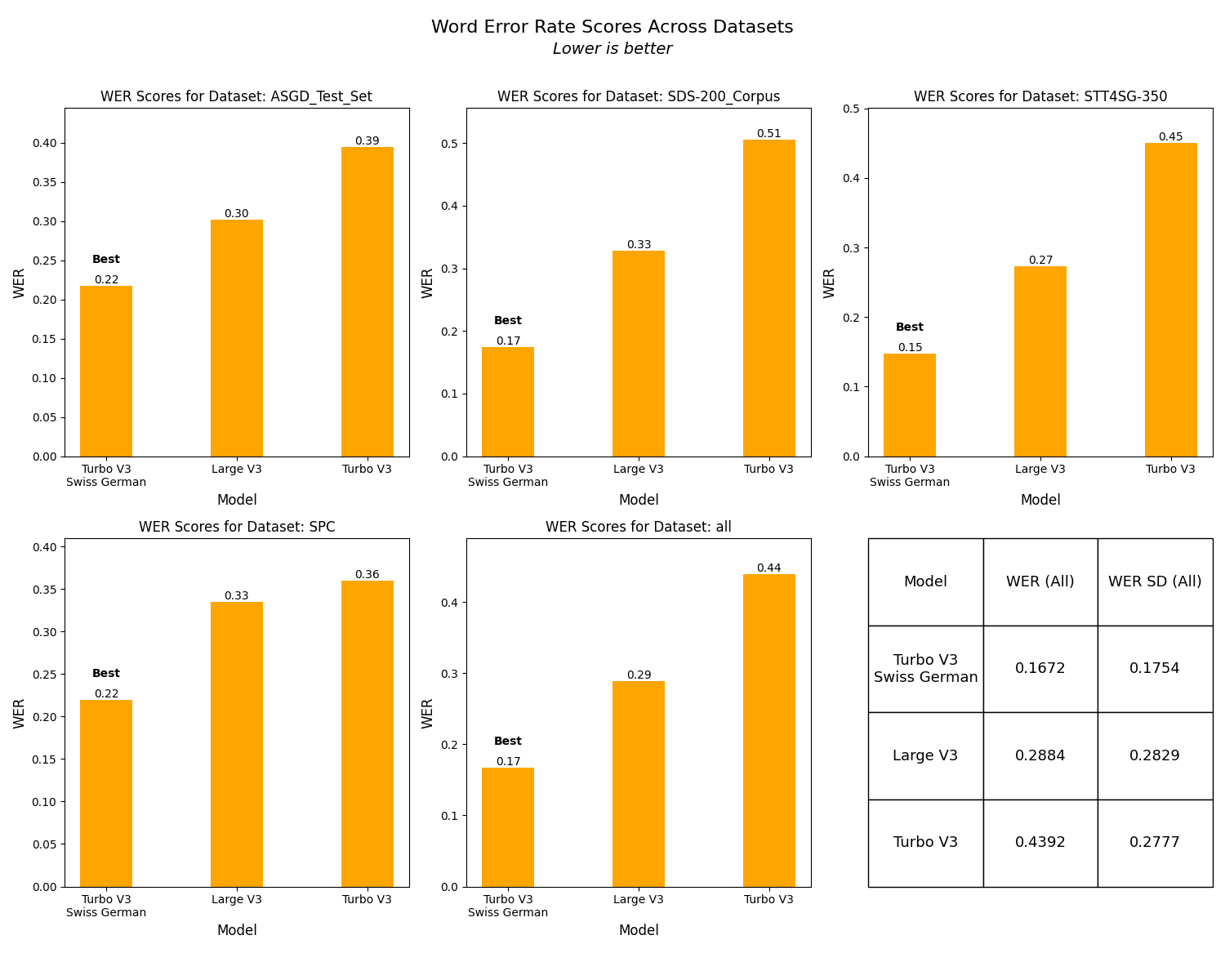

Results Across Datasets

WER Scores

| Model | WER (All) | WER SD (All) |
|---|---|---|
| Turbo V3 Swiss German | 0.1672 | 0.1754 |
| Large V3 | 0.2884 | 0.2829 |
| Turbo V3 | 0.4392 | 0.2777 |

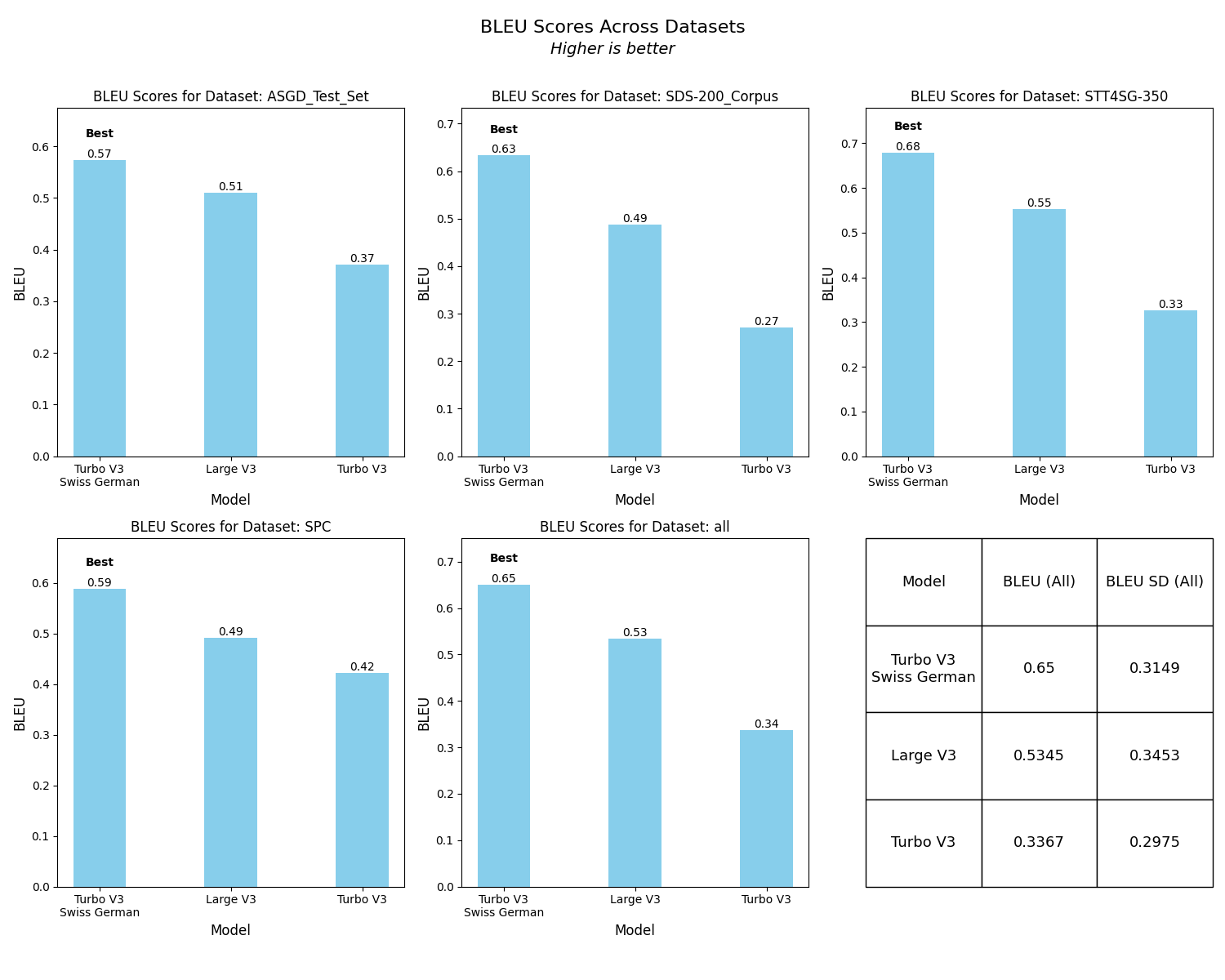

BLEU Scores

| Model | BLEU (All) | BLEU SD (All) |
|---|---|---|
| Turbo V3 Swiss German | 0.65 | 0.3149 |
| Large V3 | 0.5345 | 0.3453 |
| Turbo V3 | 0.3367 | 0.2975 |
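The two tables can be summarized as improvements over each baseline. The sketch below uses only the "All" figures from the tables and computes the relative WER reduction and the absolute BLEU gain of the fine-tuned model; the helper names are illustrative, and the large standard deviations mean these aggregate differences say little about any single utterance.

```python
# "All" scores copied from the WER and BLEU tables.
WER = {"Turbo V3 Swiss German": 0.1672, "Large V3": 0.2884, "Turbo V3": 0.4392}
BLEU = {"Turbo V3 Swiss German": 0.65, "Large V3": 0.5345, "Turbo V3": 0.3367}

FINE_TUNED = "Turbo V3 Swiss German"


def relative_wer_reduction(baseline: float, improved: float) -> float:
    """Fraction of the baseline's errors removed (lower WER is better)."""
    return (baseline - improved) / baseline


def absolute_bleu_gain(baseline: float, improved: float) -> float:
    """Difference in BLEU (higher BLEU is better)."""
    return improved - baseline


for name in ("Large V3", "Turbo V3"):
    wer_drop = relative_wer_reduction(WER[name], WER[FINE_TUNED])
    bleu_gain = absolute_bleu_gain(BLEU[name], BLEU[FINE_TUNED])
    print(f"vs {name}: WER reduced by {wer_drop:.1%}, BLEU up by {bleu_gain:.4f}")
```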

Visual Results

WER and BLEU Scores Across Datasets

WER Scores Across Datasets

BLEU Scores Across Datasets

Usage

This model can be used directly with the Hugging Face Transformers library for tasks requiring Swiss German ASR.
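A minimal sketch of such usage with the Transformers `pipeline` API might look like the following. It assumes the repository hosts weights that `pipeline` can load directly (rather than adapter-only weights), that `torch` and `transformers` are installed, and that the input is an audio file readable by the pipeline; the helper names and the file name `sample.wav` are hypothetical.

```python
MODEL_ID = "nizarmichaud/whisper-large-v3-turbo-swissgerman"


def build_transcriber(model_id: str = MODEL_ID):
    """Create an ASR pipeline; the weights are downloaded on first use."""
    # Imported lazily so the heavy dependencies load only when needed.
    import torch
    from transformers import pipeline

    use_gpu = torch.cuda.is_available()
    return pipeline(
        "automatic-speech-recognition",
        model=model_id,
        torch_dtype=torch.float16 if use_gpu else torch.float32,
        device=0 if use_gpu else -1,  # -1 selects the CPU
    )


def transcribe(audio_path: str, transcriber=None) -> str:
    """Transcribe one audio file and return the recognized text."""
    if transcriber is None:
        transcriber = build_transcriber()
    result = transcriber(audio_path)
    return result["text"]


# Example call (hypothetical file name):
# print(transcribe("sample.wav"))
```

When transcribing many files, building the pipeline once with `build_transcriber()` and passing it to `transcribe` avoids reloading the model on every call.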

Acknowledgments

Special thanks to the creators and maintainers of the datasets used in this work, and to the University of Geneva for providing access to its High Performance Computing cluster, on which the model was trained.

Citation

If you use this model in your work, please cite this repository as follows:

@misc{whisper-large-v3-turbo-swissgerman,
  author = {Nizar Michaud},
  title = {Whisper Large V3 Turbo Fine-Tuned for Swiss German},
  year = {2024},
  publisher = {Hugging Face},
  url = {https://huggingface.co/nizarmichaud/whisper-large-v3-turbo-swissgerman},
  doi = {10.57967/hf/3858},
}
