NAVI verifiers

🌐 NAVI's Ecosystem 🌐

- 🌍 NAVI Platform – Dive into NAVI's full capabilities and explore how it ensures policy alignment and compliance.

- 📜 API Docs – Your starting point for integrating NAVI into your applications.

- 📝 Blogpost: Policy-Driven Safeguards Comparison – A deep dive into the challenges and solutions NAVI addresses.

- 📊 Public Dataset: Policy Alignment Verification Dataset – Test and benchmark your models with NAVI's open-source dataset.

✨ Exciting News! ✨ We are temporarily offering free API and platform access to empower developers and researchers to explore NAVI's capabilities! 🚀

NAVI is a hallucination detection safety model designed primarily for policy alignment verification. It reviews various types of text against documents and policies to identify non-compliant or violating content. Optimized for enterprise applications that require compliance verification of automatically generated text, NAVI supports lengthy and complex documents. To advance policy verification in the open-source community, we release NAVI-small-preview, an open-weights version of the model we have deployed on the platform. NAVI-small-preview focuses specifically on verifying assistant outputs against policy documents. The full solution is accessible via the NAVI Platform.

Performance Overview

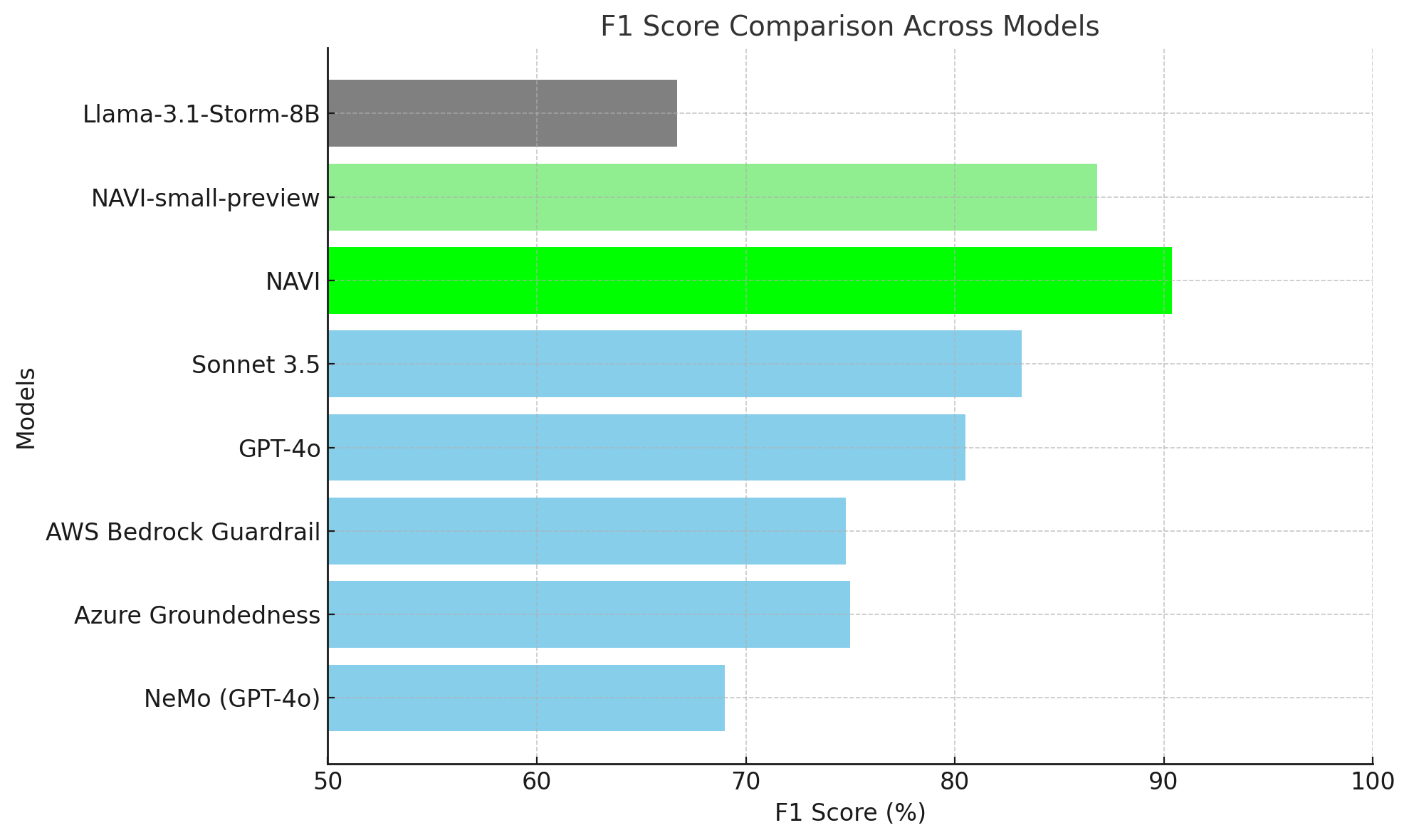

The chart above illustrates NAVI's performance on the Policy Alignment Verification test set, with the full model achieving an F1 score of 90.4% and outperforming all evaluated alternatives. NAVI-small-preview reaches an F1 score of 86.8%, providing an open-source option with significant improvements over baseline models while maintaining reliable policy alignment verification.

- Developed by: Nace.AI

- Model type: Safety model, Policy Alignment Verifier

- Language(s) (NLP): English

- License: MIT

- Finetuned from model: akjindal53244/Llama-3.1-Storm-8B

Uses

Direct Use

NAVI-small-preview is used to verify chatbot, assistant, and agent outputs against company policy documents. It processes policies and identifies any contradictions, inconsistencies, and violations.

Downstream Use

Policy checks for accuracy-critical LLM applications. Well suited to enterprise environments.

Out-of-Scope Use

NAVI is not designed for general-purpose factuality verification or tasks unrelated to policy compliance.

Bias, Risks, and Limitations

While NAVI excels in policy compliance verification, it may face challenges with unrealistic policy scenarios or contexts outside its training data scope.

Recommendations

We recommend using the model only as a generative classifier that outputs the class label; any other outputs were not accounted for during training.

How to Get Started with the Model

The verifier takes the assistant response and document context as inputs and generates a classification label. There are three possible classes: Compliant, Noncompliant, and Irrelevant. For long enterprise documents, we recommend setting up chunk-based vector search to select the most relevant chunks from the document.

For inference, we recommend vLLM==0.6.3.post1; inference with Transformers gives suboptimal performance. The prompt and sample code are below:

```python
from transformers import AutoTokenizer
from vllm import LLM, SamplingParams
from vllm.lora.request import LoRARequest

# Tokenizer of the base model, used here only to apply the chat template.
target_tokenizer = AutoTokenizer.from_pretrained(
    'akjindal53244/Llama-3.1-Storm-8B', padding_side="left")

# Load the base model with LoRA enabled; NAVI-small-preview is a rank-16 adapter.
llm = LLM(model='akjindal53244/Llama-3.1-Storm-8B',
          enable_lora=True, max_model_len=4096, max_lora_rank=16, seed=42)
lora_request = LoRARequest(
    'navi-small-preview', 1,
    lora_path='nace-ai/navi-small-preview')

# Greedy decoding; the class label needs only a few tokens.
sampling_params = SamplingParams(
    temperature=0.0,
    max_tokens=3,
    stop=["<|eot_id|>"]
)

template = """Determine if the given passage adheres strictly to the provided policy. Respond with one word: Compliant or Noncompliant.
Don't output anything else or use any other words. Deduct where the passage violates the policy with one word: Noncompliant or Compliant.
{context}
- Passage:
{response}
- Label:"""

context = "The return policy is 90 days, except for electronics, which is 30 days."
response = "The return policy is 90 days for all items."

messages = [{'role': 'user', 'content': template.format(context=context, response=response)}]
formatted_input = target_tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

# Run the verifier with the NAVI-small-preview adapter applied.
output = llm.generate([formatted_input], sampling_params, lora_request=lora_request)[0]
print(output.outputs[0].text)
# Noncompliant
```
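Since the model is meant to be used only as a classifier, it can help to map the raw generated text onto one of the three classes named above and to treat anything else as unparsed. The following is a minimal sketch; the `Label` enum and the `parse_label` helper are hypothetical names introduced for illustration and are not part of the NAVI release.

```python
from enum import Enum
from typing import Optional


class Label(Enum):
    # The three classes listed in this model card.
    COMPLIANT = "Compliant"
    NONCOMPLIANT = "Noncompliant"
    IRRELEVANT = "Irrelevant"


def parse_label(raw: str) -> Optional[Label]:
    """Return the matching Label, or None if the text is not a known class.

    Hypothetical helper: matching is case-insensitive and ignores
    surrounding whitespace; any other output is rejected.
    """
    text = raw.strip().lower()
    for label in Label:
        if text == label.value.lower():
            return label
    return None
```

For example, `parse_label(output.outputs[0].text)` would return `Label.NONCOMPLIANT` for the sample above, and `None` for any text outside the three classes, which the caller can log or retry.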

To simplify handling of long documents, we suggest running vector search over the document chunks and including the top 5 chunks, enumerated, as the policy context.
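The chunk selection step could be sketched as follows. This is an illustration under stated assumptions, not the retrieval setup used by NAVI: a bag-of-words cosine similarity stands in for a real embedding model, and the chunk size, the `1. ...` enumeration format, and the function names `chunk_text`, `bow`, `cosine`, and `build_context` are all hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=50):
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def bow(text):
    """Bag-of-words vector; a stand-in (assumption) for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_context(chunks, response, top_k=5):
    """Rank chunks by similarity to the response and enumerate the top_k."""
    query = bow(response)
    ranked = sorted(chunks, key=lambda c: cosine(bow(c), query), reverse=True)
    return "\n".join(f"{i}. {c}" for i, c in enumerate(ranked[:top_k], start=1))
```

The string returned by `build_context(chunk_text(policy_document), response)` would then be passed as `context` to the prompt template. In practice, a dense embedding model and a vector index would replace `bow` and the linear scan.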

Training Details

Training Data

NAVI was trained on a mix of real-world policy documents and synthetic interactions between a user and an assistant. The data includes diverse, realistic, and complex policy examples across multiple industries.

Training Procedure

Preprocessing

NAVI utilizes the latest advances in Knowledge Augmentation and Memory to internalize document knowledge. NAVI-small-preview, however, was trained to work with simple vector retrieval.

Training Hyperparameters

- Training regime: We performed a thorough hyperparameter search during finetuning. The resulting model is a LoRA adapter applied to all linear modules in all Transformer layers, with rank 16, alpha 32, learning rate 5e-5, and effective batch size 32. It was trained on 8 A100s for 6 epochs using PyTorch Distributed Data Parallel.
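For reference, the reported hyperparameters can be collected in a small dataclass along with two quantities that follow from them. This is only a sketch: the class and property names are hypothetical, the `alpha / rank` factor is the standard LoRA scaling (the card does not state that a variant was used), and the per-GPU figure assumes the effective batch is split evenly across the 8 GPUs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraTrainingConfig:
    # Values as reported in this model card.
    rank: int = 16
    alpha: int = 32
    learning_rate: float = 5e-5
    effective_batch_size: int = 32
    num_gpus: int = 8
    epochs: int = 6

    @property
    def scaling(self) -> float:
        # Standard LoRA multiplies the low-rank update by alpha / rank.
        return self.alpha / self.rank

    @property
    def samples_per_gpu_per_step(self) -> int:
        # Assumes an even split across GPUs; how this divides between
        # micro-batch size and gradient accumulation is not stated.
        return self.effective_batch_size // self.num_gpus


config = LoraTrainingConfig()
```

With these values, `config.scaling` is 2.0 and `config.samples_per_gpu_per_step` is 4.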

Evaluation

Testing Data, Factors & Metrics

Testing Data

We curated the Policy Alignment Verification (PAV) dataset to evaluate diverse policy verification use cases, releasing a public subset of 125 examples spanning six industry-specific scenarios: AT&T, Airbnb, Cadence Bank, Delta Airlines, Verisk, and Walgreens. This open-sourced subset ensures transparency and facilitates benchmarking of model performance. We evaluate our models and alternative solutions on this test set.

Factors

Evaluation focuses on policy compliance within multi-policy, multi-document contexts.

Metrics

The F1 score for the "Noncompliant" class was used to measure performance, prioritizing the detection of noncompliance cases.
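As an illustration of the metric, precision, recall, and F1 with "Noncompliant" as the positive class can be computed from label lists as sketched below. The function name `noncompliant_prf` and the toy labels are made up for this example and are not drawn from the PAV dataset.

```python
def noncompliant_prf(y_true, y_pred, positive="Noncompliant"):
    """Precision, recall, and F1 treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels (illustrative only): 3 true positives, 1 false positive, 1 false negative.
y_true = ["Noncompliant", "Noncompliant", "Noncompliant", "Compliant", "Compliant", "Noncompliant"]
y_pred = ["Noncompliant", "Noncompliant", "Compliant", "Noncompliant", "Compliant", "Noncompliant"]
precision, recall, f1 = noncompliant_prf(y_true, y_pred)
```

Because the positive class is "Noncompliant", a missed violation lowers recall and a false alarm lowers precision; F1 balances the two.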

Results

The table below shows the performance of models evaluated on the public subset of the PAV dataset. NAVI-small-preview achieved an F1 score of 86.8%, outperforming all tested alternatives except full-scale NAVI. We evaluate against general-purpose solutions such as Claude and OpenAI models, as well as guardrails that focus on groundedness, to demonstrate a clear distinction between policy verification and the more common groundedness verification.

| Model | F1 Score (%) | Precision (%) | Recall (%) | Accuracy (%) |
|---|---|---|---|---|
| Llama-3.1-Storm-8B | 66.7 | 86.4 | 54.3 | 69.6 |
| NAVI-small-preview | 86.8 | 80.5 | 94.3 | 84.0 |
| NAVI | 90.4 | 93.8 | 87.1 | 89.6 |
| Sonnet 3.5 | 83.2 | 85.1 | 81.4 | 81.6 |
| GPT-4o | 80.5 | 73.8 | 88.6 | 76.0 |
| AWS Bedrock Guardrail | 74.8 | 87.1 | 65.6 | 67.2 |
| Azure Groundedness | 75.0 | 62.3 | 94.3 | 64.8 |
| NeMo (GPT-4o) | 69.0 | 67.2 | 70.9 | 72.0 |

Model Card Contact
