MedCLIP

Description

This project finetunes a CLIP model on the ROCO (Radiology Objects in COntext) dataset, which pairs radiology images with text captions.

Dataset

Each image is paired with a text caption. Caption length ranges from a few characters (a single word) to 2,000 characters. During preprocessing we remove all images whose caption is shorter than 10 characters. Training set: 57,780 images with their captions. Validation set: 7,200 images. Test set: 7,650 images.
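The caption-length filter described above can be sketched as follows. This is a minimal illustration, not the project's actual preprocessing code: the `(image_path, caption)` record layout, the function name `filter_short_captions`, and the choice to strip surrounding whitespace before counting characters are all assumptions.

```python
# Hypothetical sketch of the preprocessing filter; record layout is assumed
# to be a list of (image_path, caption) pairs.
MIN_CAPTION_CHARS = 10  # captions shorter than this are dropped


def filter_short_captions(pairs, min_chars=MIN_CAPTION_CHARS):
    """Keep only pairs whose caption has at least `min_chars` characters.

    Assumption: leading/trailing whitespace is ignored when measuring length.
    """
    return [
        (image, caption)
        for image, caption in pairs
        if len(caption.strip()) >= min_chars
    ]


# Hypothetical example records.
pairs = [
    ("img_001.jpg", "Chest X-ray showing a left lower lobe opacity."),
    ("img_002.jpg", "CT"),
]
kept = filter_short_captions(pairs)
print(len(kept))  # 1
```

A caption of exactly 10 characters is kept, since only captions *shorter* than 10 characters are removed.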

Training

Finetune a CLIP model by running sh run_medclip.sh.
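The contents of run_medclip.sh are not shown here. As a sketch, assuming it optimizes the standard CLIP objective, training minimizes a symmetric cross-entropy over the image-text similarity matrix of a batch: image i should match caption i, and vice versa. The function names and the fixed `logit_scale` value below are illustrative assumptions (in CLIP the scale is a learned temperature), and this pure-Python version is meant for readability, not speed.

```python
import math


def normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def similarity_logits(image_embeds, text_embeds, logit_scale=1.0):
    """N x N matrix of scaled cosine similarities (row = image, column = caption)."""
    imgs = [normalize(v) for v in image_embeds]
    txts = [normalize(v) for v in text_embeds]
    return [
        [logit_scale * sum(a * b for a, b in zip(img, txt)) for txt in txts]
        for img in imgs
    ]


def log_softmax(row):
    """Numerically stable log-softmax of a list of floats."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]


def clip_contrastive_loss(logits):
    """Symmetric cross-entropy with the matching pairs on the diagonal."""
    n = len(logits)
    # Image-to-text: each row is a distribution over captions.
    image_loss = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image: each column is a distribution over images.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_loss = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (image_loss + text_loss) / 2


# Toy batch of two image/caption embedding pairs (hypothetical values).
images = [[1.0, 0.0], [0.0, 1.0]]
captions = [[1.0, 0.0], [0.0, 1.0]]
aligned = clip_contrastive_loss(similarity_logits(images, captions, logit_scale=10.0))
swapped = clip_contrastive_loss(similarity_logits(images, captions[::-1], logit_scale=10.0))
print(aligned < swapped)  # True
```

When the embeddings of matching pairs are aligned, the loss approaches zero; when captions are assigned to the wrong images, it grows large. With uninformative (all-equal) logits the loss equals log N for a batch of N pairs.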

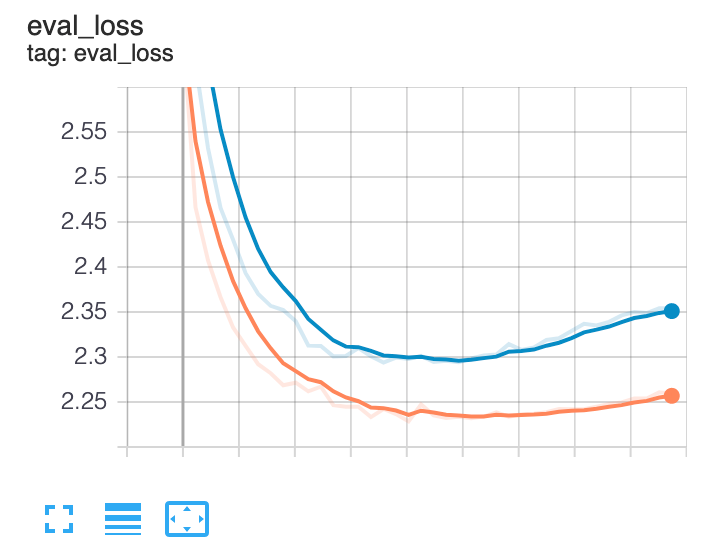

This is the validation loss curve we observed when we trained the model using the run_medclip.sh script.