Model Card for llm.c GPT2_350M

Instruction Pretraining: Fineweb-edu 10B interleaved with OpenHermes 2.5

Model Details

How to Get Started with the Model

Use the code below to get started with the model.

from transformers import pipeline

p = pipeline("text-generation", "jrahn/gpt2_350M_edu_hermes")

# instruction following

p("<|im_start|>user\nTeach me to fish.<|im_end|>\n<|im_start|>assistant\n", max_length=128)

#[{'generated_text': '<|im_start|>user\nTeach me to fish.<|im_end|>\n<|im_start|>assistant\nTo fish, you can start by learning the basics of fishing. First, you need to learn how to catch fish. Fish are a type of fish that are found in the ocean. They are also known as sea fish. They are a type of fish that are found in the ocean. They are a type of fish that are found in the ocean. They are a type of fish that are found in the ocean. They are a type of fish that are found in the ocean'}]

# text completion

p("In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English. ", max_length=128)

# [{'generated_text': 'In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English. \nThe researchers believe that the animals were able to communicate with each other by using a unique vocalization system. The researchers believe that the animals were able to communicate with each other by using a unique vocalization system.\nThe researchers believe that the animals were able to communicate with each other by using a unique vocalization system. The researchers believe that the animals were able to communicate with each other by using a unique'}]
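As the outputs above show, `generated_text` contains the prompt followed by the continuation. The sketch below isolates the assistant reply from a result of that shape. `extract_reply` is a hypothetical helper, not part of `transformers`, and the sample input is hand-written for illustration, not actual model output. It assumes the reply ends at the first `<|im_end|>` if the model emits one.

```python
IM_END = "<|im_end|>"

def extract_reply(prompt: str, outputs: list[dict]) -> str:
    """Return the assistant text from a text-generation pipeline result."""
    text = outputs[0]["generated_text"]
    # The result echoes the prompt; drop it if present.
    if text.startswith(prompt):
        text = text[len(prompt):]
    # Keep only the first assistant turn. If no end marker was generated
    # (for example because max_length was reached first), keep everything.
    return text.split(IM_END, 1)[0].strip()

prompt = "<|im_start|>user\nTeach me to fish.<|im_end|>\n<|im_start|>assistant\n"
# Hand-written sample in the same shape as the pipeline output.
sample = [{"generated_text": prompt + "Start with a rod, a line and a hook.<|im_end|>\n"}]
reply = extract_reply(prompt, sample)
print(reply)
```

With a real pipeline call, `outputs` would be the return value of `p(prompt, max_length=128)`.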

Training Details

Training Data

Datasets used: Fineweb-Edu 10B + OpenHermes 2.5

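Dataset proportions (document counts; FWE = Fineweb-Edu, OH = OpenHermes 2.5; the percentage is the OH share of each part):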

- Part 1: FWE 4,836,050 + OH 100,000 (2.03%) = 4,936,050

- Part 2: FWE 4,336,051 + OH 400,000 (8.45%) = 4,736,051

- Part 3: FWE 500,000 + OH 501,551 (50.08%) = 1,001,551

Total documents: 10,669,024
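The per-part sums and OpenHermes shares can be recomputed from the counts listed above. This is a plain arithmetic check, with the counts copied from the list; the names `parts` and `oh_share` are illustrative only.

```python
# (Fineweb-Edu documents, OpenHermes documents) per part, copied from the list above.
parts = {
    "Part 1": (4_836_050, 100_000),
    "Part 2": (4_336_051, 400_000),
    "Part 3": (500_000, 501_551),
}

def oh_share(fwe: int, oh: int) -> float:
    """Percentage of OpenHermes documents within one part."""
    return 100 * oh / (fwe + oh)

for name, (fwe, oh) in parts.items():
    print(f"{name}: {fwe + oh:,} documents, OH share {oh_share(fwe, oh):.2f}%")

part_totals = [fwe + oh for fwe, oh in parts.values()]
print(f"Sum of parts: {sum(part_totals):,}")
```

The three part totals and percentages match the list. Their sum is 10,673,652, which is 4,628 more than the stated total of 10,669,024; the card does not say where that difference comes from.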

Training Procedure

Preprocessing

- Fineweb-Edu: none, just the "text" feature

- OpenHermes 2.5: applied ChatML prompt template to "conversations" to create the "text" feature
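A minimal sketch of the OpenHermes 2.5 step, assuming each record stores its turns as a list of `{"from": ..., "value": ...}` dicts with speaker tags `system`, `human` and `gpt`. The role mapping, the exact whitespace of the template and the helper name `to_chatml` are assumptions for illustration; the actual preprocessing script is not shown in this card.

```python
# Assumed mapping from OpenHermes 2.5 speaker tags to ChatML roles.
ROLE_MAP = {"system": "system", "human": "user", "gpt": "assistant"}

def to_chatml(conversations: list[dict]) -> str:
    """Render a list of {"from", "value"} turns as a single ChatML string."""
    turns = []
    for turn in conversations:
        role = ROLE_MAP[turn["from"]]
        turns.append(f"<|im_start|>{role}\n{turn['value']}<|im_end|>\n")
    return "".join(turns)

# Hand-written record in the assumed format.
example = {"conversations": [
    {"from": "human", "value": "Teach me to fish."},
    {"from": "gpt", "value": "Start with a rod, a line and a hook."},
]}
example["text"] = to_chatml(example["conversations"])
print(example["text"])
```

The resulting "text" feature uses the same `<|im_start|>` / `<|im_end|>` markers as the instruction-following prompt in the getting-started example.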

Training Hyperparameters

- Training regime:

- bf16

- context length 1024

- per-device batch size 16 sequences, global batch size 524,288 tokens -> gradient accumulation 16

- ZeRO stage 1

- lr 3e-4, cosine schedule, 700 warmup steps

- for more details, see the run script
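The gradient accumulation value follows from the batch settings above if the global batch size is counted in tokens and both GPUs listed under Hardware take part in every step. A short sketch of that arithmetic (the function name is illustrative, not taken from the run script):

```python
def grad_accum_steps(global_batch_tokens: int, per_device_batch: int,
                     context_length: int, num_devices: int) -> int:
    """Micro-steps needed per optimizer step to reach the global batch size."""
    tokens_per_micro_step = per_device_batch * context_length * num_devices
    if global_batch_tokens % tokens_per_micro_step:
        raise ValueError("global batch size must be a multiple of the micro-batch token count")
    return global_batch_tokens // tokens_per_micro_step

# 16 sequences x 1024 tokens x 2 GPUs = 32,768 tokens per micro-step.
steps = grad_accum_steps(524_288, per_device_batch=16, context_length=1024, num_devices=2)
print(steps)
```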

Speeds, Sizes, Times

Params: 355M -> 710MB / checkpoint

Tokens: ~10B (10,287,579,136)

Total training time: ~30hrs

Hardware: 2x RTX4090

MFU: 71% (110,000 tok/s)
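Some of these figures can be cross-checked against each other. The sketch below assumes the checkpoint stores only the weights at 2 bytes per parameter (bf16) and reuses the global batch size of 524,288 tokens from the hyperparameters; both are readings of the card, not confirmed details of the run.

```python
params = 355_000_000
bytes_per_param = 2  # assumption: bf16 weights only, no optimizer state
checkpoint_mb = params * bytes_per_param / 1e6

total_tokens = 10_287_579_136
global_batch_tokens = 524_288
optimizer_steps, leftover_tokens = divmod(total_tokens, global_batch_tokens)

throughput_tok_per_s = 110_000
hours_at_full_throughput = total_tokens / throughput_tok_per_s / 3600

print(f"checkpoint: {checkpoint_mb:.0f} MB")
print(f"optimizer steps: {optimizer_steps:,} (leftover tokens: {leftover_tokens})")
print(f"time at 110,000 tok/s: {hours_at_full_throughput:.1f} h")
```

The token count is an exact multiple of the global batch size (19,622 steps). At a constant 110,000 tok/s the tokens alone would take about 26 hours, so the stated ~30 hours presumably includes time not spent at that throughput; the card does not break this down.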

Evaluation

Results

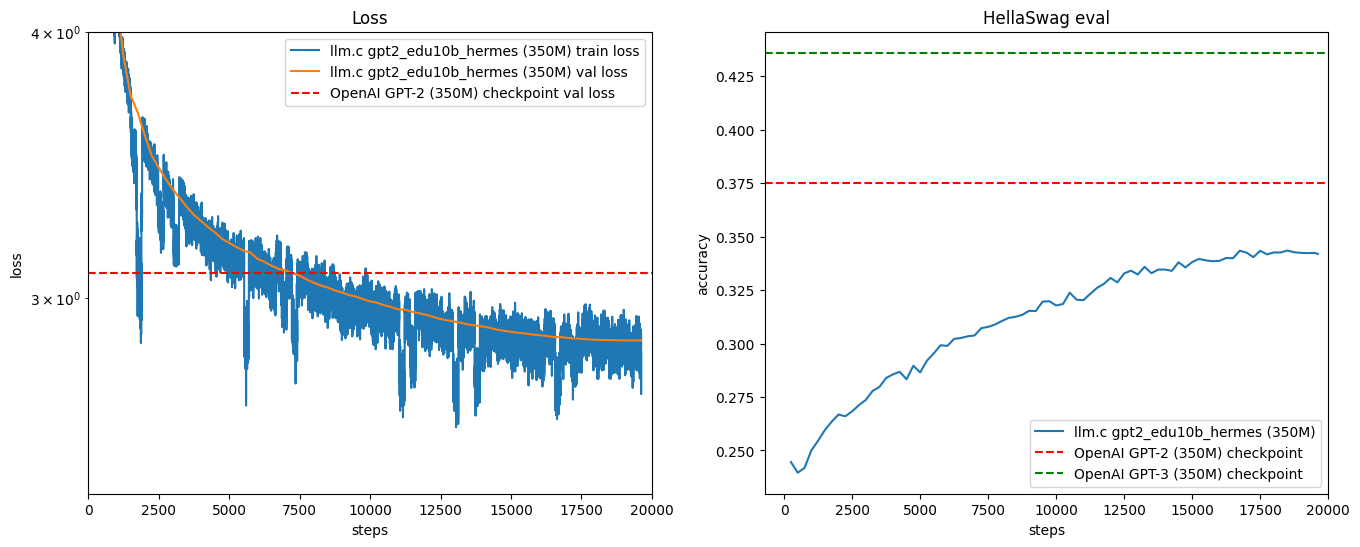

HellaSwag: 34.4

- for more details, see main.log

Technical Specifications

Model Architecture and Objective

GPT2 350M, Causal Language Modeling

Compute Infrastructure

Hardware

2x RTX4090

Software
