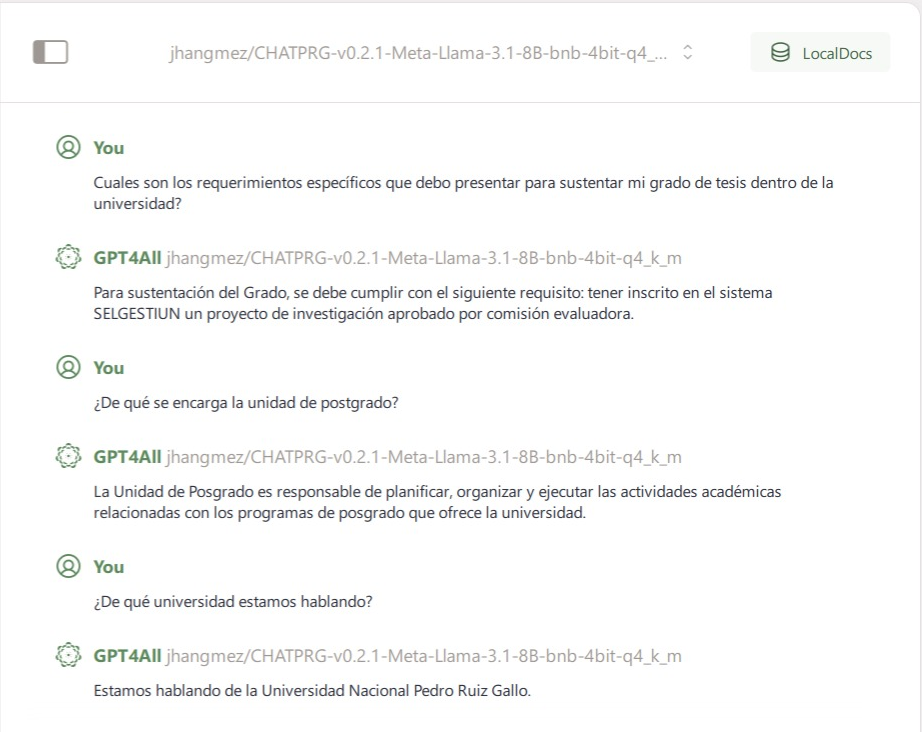

ChatPRG v0.2.1 Llama 3.1 8B 4bit q4_k_m (stable version)

- Fine-tuned model that helps students and members of the public learn about the regulations of the Pedro Ruiz Gallo National University of Lambayeque, Peru.

Testing the model

Observations

- The model can answer in both English and Spanish, even though it was trained only on an English dataset. This is not yet confirmed and will stay unverified until the model goes through more testing.
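One way to check the bilingual observation is a small harness that sends prompts in each language and guesses the language of each reply. The sketch below is illustrative only: `generate` is a hypothetical callable that wraps whatever runtime loads the model, and the stopword lists are a crude heuristic assumed here for brevity, not a real language detector.

```python
import re
from typing import Callable, Dict, List

# Tiny stopword sets used as a crude language heuristic (an assumption, not a real detector).
SPANISH_HINTS = {"el", "la", "los", "las", "de", "que", "para", "es", "un", "una", "del", "por"}
ENGLISH_HINTS = {"the", "of", "and", "to", "is", "for", "in", "that", "a", "by", "are", "with"}


def guess_language(text: str) -> str:
    """Return 'es', 'en', or 'unknown' based on stopword counts."""
    words = re.findall(r"[a-záéíóúñü]+", text.lower())
    es = sum(w in SPANISH_HINTS for w in words)
    en = sum(w in ENGLISH_HINTS for w in words)
    if es == en:
        return "unknown"
    return "es" if es > en else "en"


def check_bilingual(
    generate: Callable[[str], str],  # hypothetical wrapper around the model
    prompts: Dict[str, List[str]],   # language code -> prompts in that language
) -> Dict[str, float]:
    """For each language, return the fraction of replies guessed to be in that language."""
    results = {}
    for lang, items in prompts.items():
        hits = sum(guess_language(generate(p)) == lang for p in items)
        results[lang] = hits / len(items) if items else 0.0
    return results
```

Calling `check_bilingual(generate, {"es": [...], "en": [...]})` with a real set of questions about the regulations would give a rough per-language match rate. A low rate for `"es"` would suggest the model falls back to English when asked in Spanish.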

Uploaded model

- Developed by: jhangmez

- License: apache-2.0

- Finetuned from model: unsloth/Meta-Llama-3.1-8B-bnb-4bit

This Llama model was trained 2x faster with Unsloth and Hugging Face's TRL library.

Made with ❤️ by Jhan Gómez P.
