Update README.md

README.md

CHANGED

|

@@ -49,12 +49,28 @@ language:

|

|

| 49 |

metrics:

|

| 50 |

- accuracy

|

| 51 |

base_model:

|

| 52 |

-

-

|

| 53 |

pipeline_tag: question-answering

|

| 54 |

tags:

|

| 55 |

- biology

|

| 56 |

- medical

|

| 57 |

---

|


| 58 |

# Democratizing Medical LLMs For Much More Languages

|

| 59 |

|

| 60 |

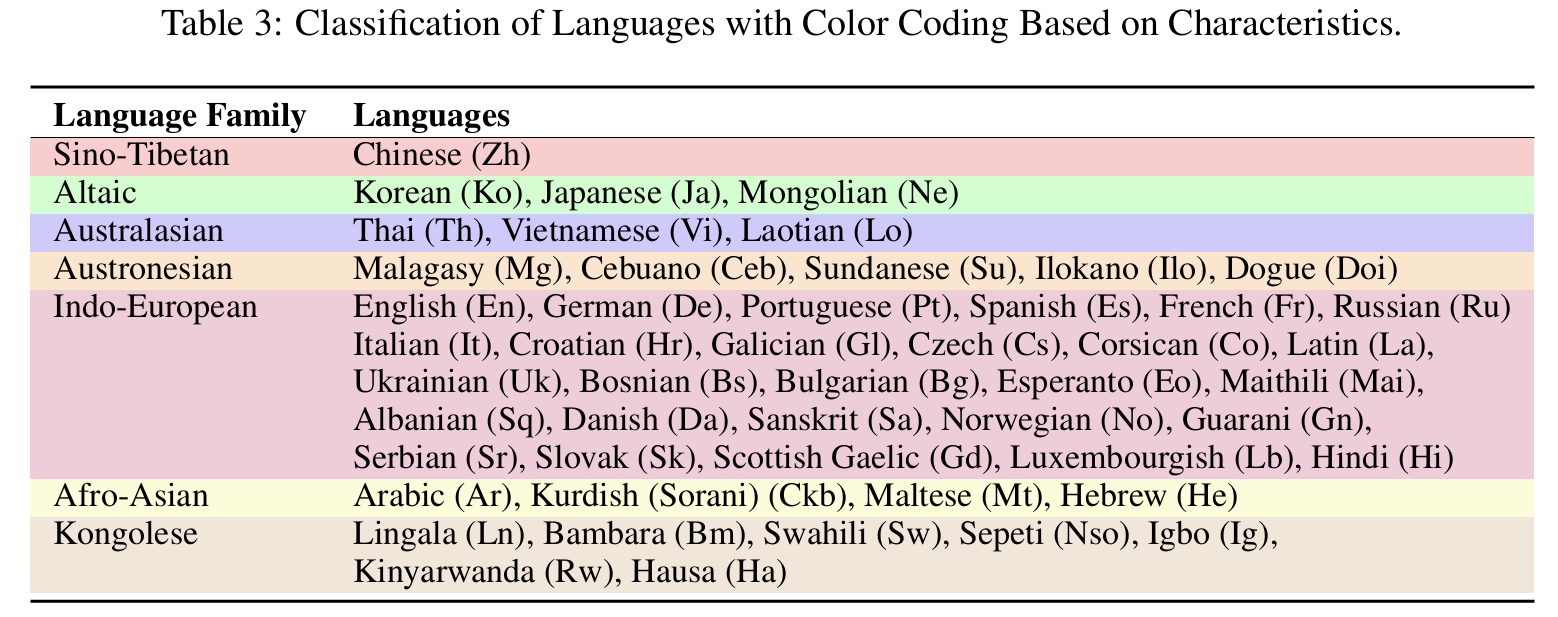

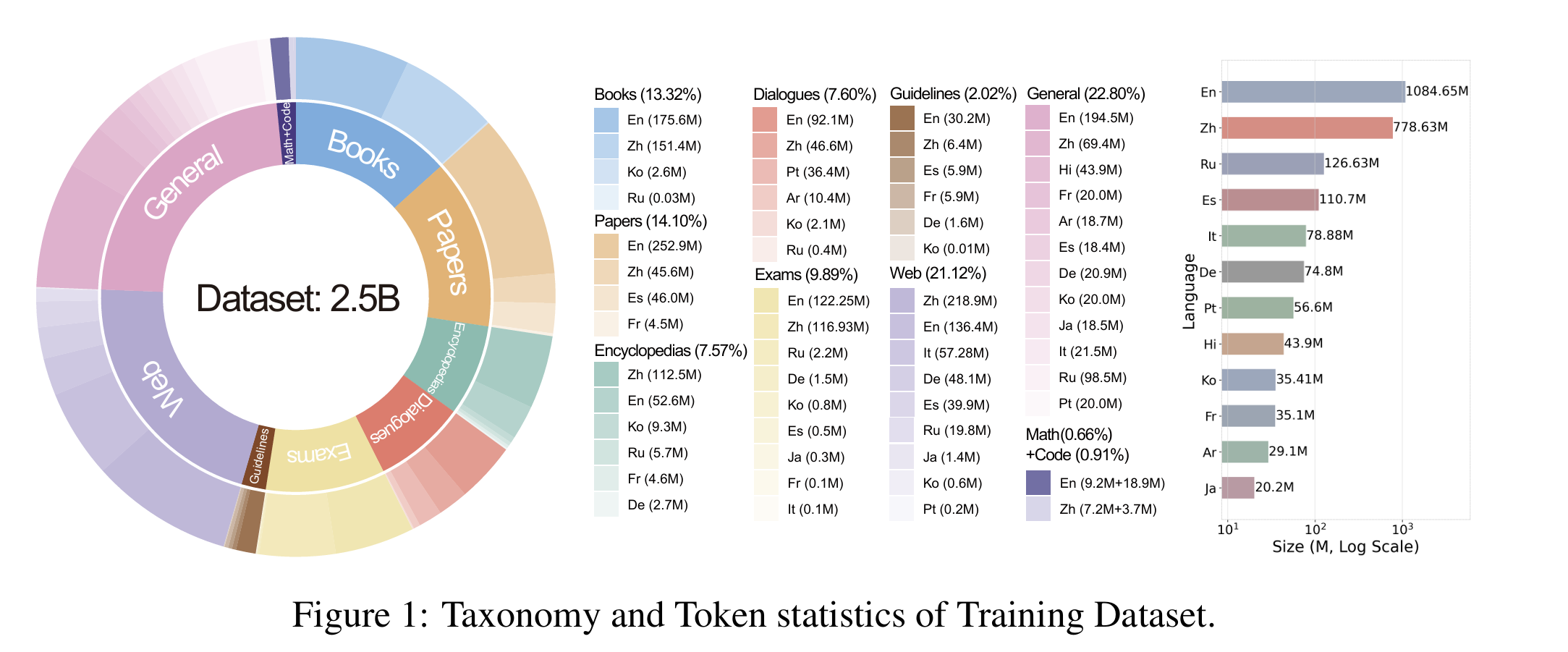

Covering 12 major languages (English, Chinese, French, Hindi, Spanish, Arabic, Russian, Japanese, Korean, German, Italian, Portuguese) and 38 minor languages so far.

|

|

@@ -67,7 +83,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 67 |

|

| 68 |

|

| 69 |

|

| 70 |

-

|

| 71 |

|

| 72 |

|

| 73 |

## 🌈 Update

|

|

@@ -81,7 +97,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 81 |

<details>

|

| 82 |

<summary>Click to view the Languages Coverage</summary>

|

| 83 |

|

| 84 |

-

|

| 85 |

|

| 86 |

</details>

|

| 87 |

|

|

@@ -91,7 +107,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 91 |

<details>

|

| 92 |

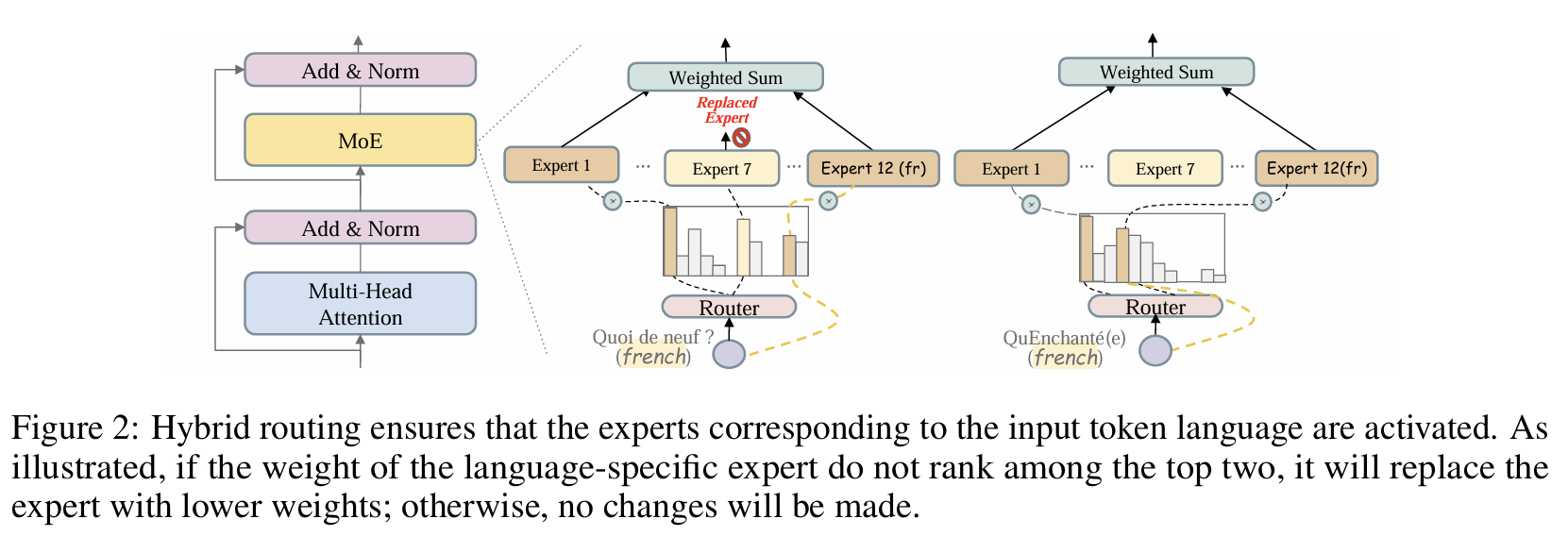

<summary>Click to view the MoE routing image</summary>

|

| 93 |

|

| 94 |

-

|

| 95 |

|

| 96 |

</details>

|

| 97 |

|

|

@@ -105,7 +121,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 105 |

<details>

|

| 106 |

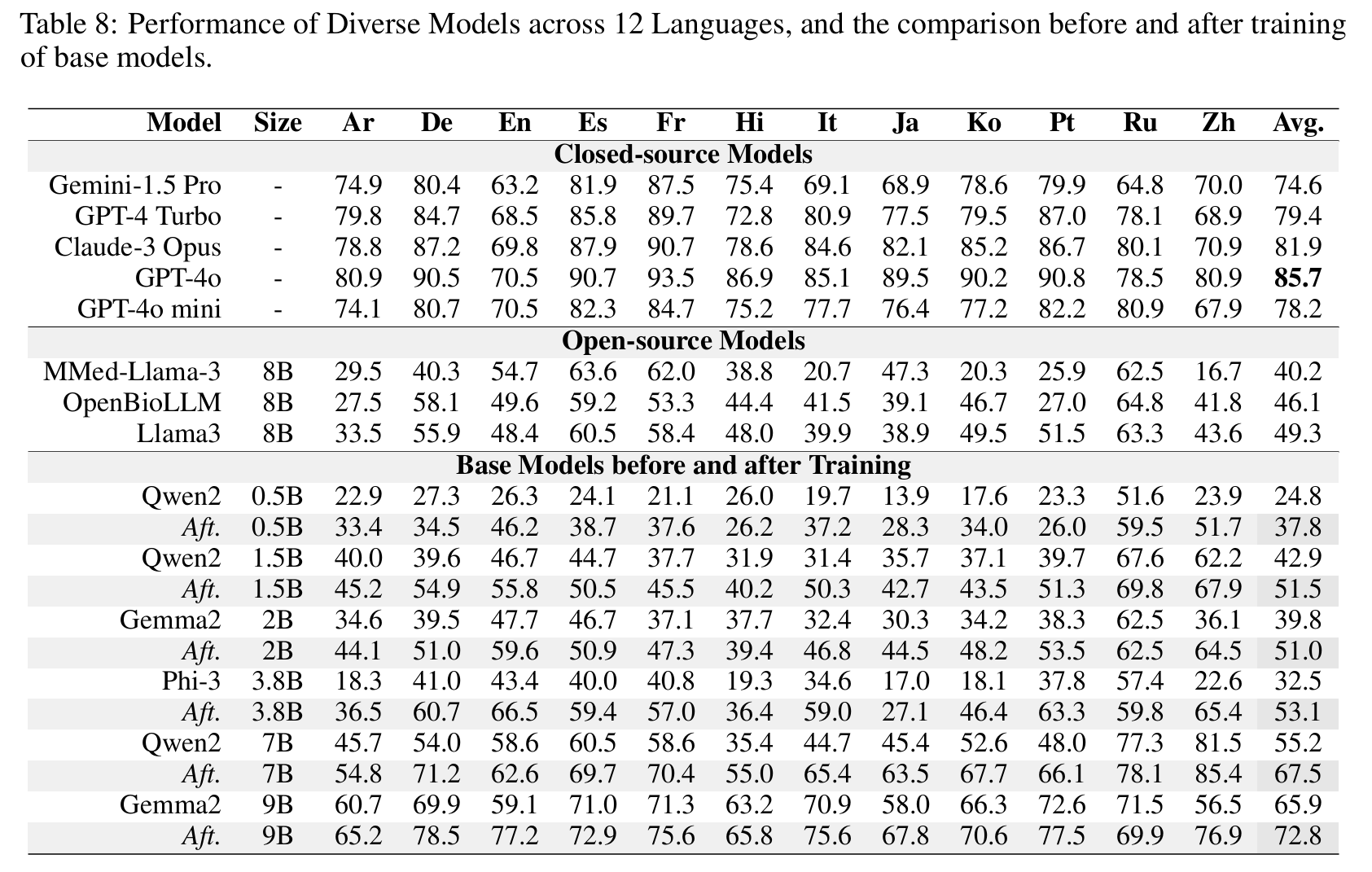

<summary>Click to view the Dense Models Results</summary>

|

| 107 |

|

| 108 |

-

|

| 109 |

|

| 110 |

</details>

|

| 111 |

|

|

@@ -116,7 +132,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 116 |

<details>

|

| 117 |

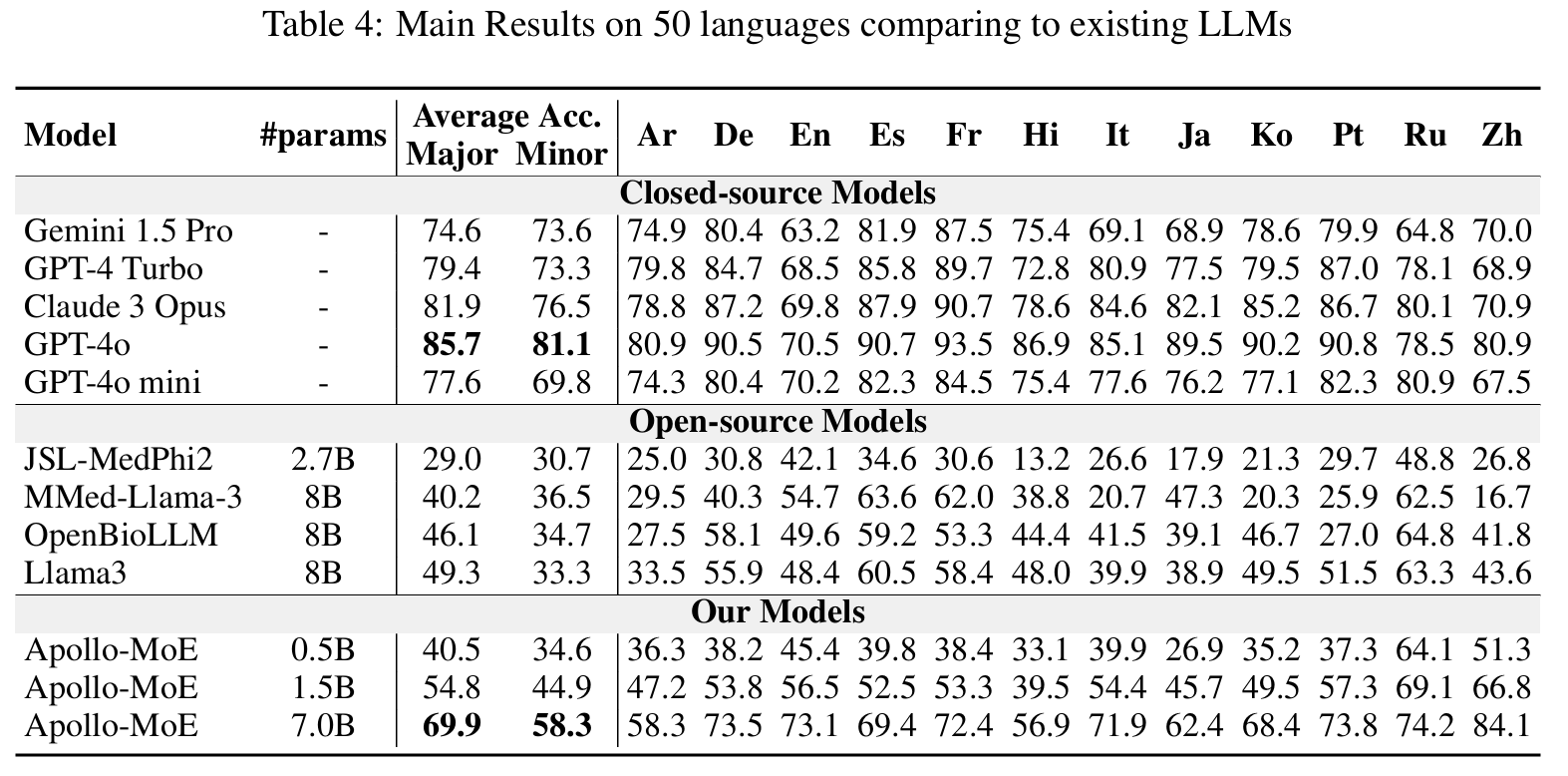

<summary>Click to view the Post-MoE Models Results</summary>

|

| 118 |

|

| 119 |

-

|

| 120 |

|

| 121 |

</details>

|

| 122 |

|

|

@@ -139,7 +155,7 @@ Covering 12 Major Languages including English, Chinese, French, Hindi, Spanish,

|

|

| 139 |

|

| 140 |

<details><summary>Click to expand</summary>

|

| 141 |

|

| 142 |

-

|

| 143 |

|

| 144 |

- [Data category](https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus/tree/main/train)

|

| 145 |

|

|

|

|

| 49 |

metrics:

|

| 50 |

- accuracy

|

| 51 |

base_model:

|

| 52 |

+

- FreedomIntelligence/Apollo2-7B

|

| 53 |

pipeline_tag: question-answering

|

| 54 |

tags:

|

| 55 |

- biology

|

| 56 |

- medical

|

| 57 |

---

|

| 58 |

+

# Apollo2-7B-exl2

|

| 59 |

+

Original model: [Apollo2-7B](https://huggingface.co/FreedomIntelligence/Apollo2-7B)

|

| 60 |

+

Made by: [FreedomIntelligence](https://huggingface.co/FreedomIntelligence)

|

| 61 |

+

|

| 62 |

+

## Quants

|

| 63 |

+

[4bpw h6 (main)](https://huggingface.co/cgus/Apollo2-7B-exl2/tree/main)

|

| 64 |

+

[4.5bpw h6](https://huggingface.co/cgus/Apollo2-7B-exl2/tree/4.5bpw-h6)

|

| 65 |

+

[5bpw h6](https://huggingface.co/cgus/Apollo2-7B-exl2/tree/5bpw-h6)

|

| 66 |

+

[6bpw h6](https://huggingface.co/cgus/Apollo2-7B-exl2/tree/6bpw-h6)

|

| 67 |

+

[8bpw h8](https://huggingface.co/cgus/Apollo2-7B-exl2/tree/8bpw-h8)
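Each quant above lives on its own branch of the repository, with the 4bpw h6 quant on `main`. A minimal sketch of fetching one of them, assuming the `huggingface_hub` package is installed; the helper names `download_kwargs` and `download_quant` and the `QUANT_BRANCHES` table are illustrative and not part of the original card:

```python
from typing import Optional

REPO_ID = "cgus/Apollo2-7B-exl2"

# Branch names copied from the quant links above; "main" holds the 4bpw h6 quant.
QUANT_BRANCHES = {
    "4bpw-h6": "main",
    "4.5bpw-h6": "4.5bpw-h6",
    "5bpw-h6": "5bpw-h6",
    "6bpw-h6": "6bpw-h6",
    "8bpw-h8": "8bpw-h8",
}


def download_kwargs(quant: str, local_dir: Optional[str] = None) -> dict:
    """Build the keyword arguments for snapshot_download (hypothetical helper)."""
    if quant not in QUANT_BRANCHES:
        raise ValueError(f"unknown quant {quant!r}; choose from {sorted(QUANT_BRANCHES)}")
    kwargs = {"repo_id": REPO_ID, "revision": QUANT_BRANCHES[quant]}
    if local_dir is not None:
        kwargs["local_dir"] = local_dir
    return kwargs


def download_quant(quant: str, local_dir: Optional[str] = None) -> str:
    """Download one quant branch and return the local folder path."""
    # Imported lazily so download_kwargs works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(**download_kwargs(quant, local_dir))
```

Calling `download_quant("5bpw-h6", local_dir="models/Apollo2-7B-exl2-5bpw")` would place that branch in the given folder, which can then be pointed at from an ExLlamaV2-based frontend.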

|

| 68 |

+

|

| 69 |

+

## Quantization notes

|

| 70 |

+

Quantized with ExLlamaV2 0.2.3 using the default calibration dataset. Running this model requires software built on the ExLlamaV2 library, such as Text-Generation-WebUI or TabbyAPI.

|

| 71 |

+

The model must fit entirely in GPU memory to be usable. It is mainly intended for NVIDIA RTX cards on Windows/Linux or AMD cards on Linux.
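To judge whether a given quant fits a card, a rough lower bound on the weight footprint is parameters × bits per weight ÷ 8 bytes. The sketch below is an estimate under stated assumptions, not a measurement: the parameter count is passed in by the caller, the `headroom_gib` default for context cache and runtime overhead is a guess, and the function names are hypothetical.

```python
def weight_size_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GiB (weights only)."""
    total_bits = num_params * bits_per_weight
    return total_bits / 8 / 2**30


def fits_in_vram(
    num_params: float,
    bits_per_weight: float,
    vram_gib: float,
    headroom_gib: float = 2.0,  # assumed allowance for cache and overhead
) -> bool:
    """Return True if weights plus the assumed headroom fit in vram_gib."""
    return weight_size_gib(num_params, bits_per_weight) + headroom_gib <= vram_gib


if __name__ == "__main__":
    # Assumption: a nominal 7e9 parameters taken from the "7B" in the model name;
    # the exact count for this model is not stated in the card.
    NOMINAL_PARAMS = 7e9
    for bpw in (4.0, 4.5, 5.0, 6.0, 8.0):
        size = weight_size_gib(NOMINAL_PARAMS, bpw)
        print(f"{bpw} bpw: about {size:.1f} GiB of weights")
```

Actual usage grows with context length and differs between frontends, so treat the result as a floor when choosing between the quant branches.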

|

| 72 |

+

|

| 73 |

+

# Original model card

|

| 74 |

# Democratizing Medical LLMs For Much More Languages

|

| 75 |

|

| 76 |

Covering 12 major languages (English, Chinese, French, Hindi, Spanish, Arabic, Russian, Japanese, Korean, German, Italian, Portuguese) and 38 minor languages so far.

|

|

|

|

| 83 |

|

| 84 |

|

| 85 |

|

| 86 |

+

|

| 87 |

|

| 88 |

|

| 89 |

## 🌈 Update

|

|

|

|

| 97 |

<details>

|

| 98 |

<summary>Click to view the Languages Coverage</summary>

|

| 99 |

|

| 100 |

+

|

| 101 |

|

| 102 |

</details>

|

| 103 |

|

|

|

|

| 107 |

<details>

|

| 108 |

<summary>Click to view the MoE routing image</summary>

|

| 109 |

|

| 110 |

+

|

| 111 |

|

| 112 |

</details>

|

| 113 |

|

|

|

|

| 121 |

<details>

|

| 122 |

<summary>Click to view the Dense Models Results</summary>

|

| 123 |

|

| 124 |

+

|

| 125 |

|

| 126 |

</details>

|

| 127 |

|

|

|

|

| 132 |

<details>

|

| 133 |

<summary>Click to view the Post-MoE Models Results</summary>

|

| 134 |

|

| 135 |

+

|

| 136 |

|

| 137 |

</details>

|

| 138 |

|

|

|

|

| 155 |

|

| 156 |

<details><summary>Click to expand</summary>

|

| 157 |

|

| 158 |

+

|

| 159 |

|

| 160 |

- [Data category](https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus/tree/main/train)

|

| 161 |

|