This model was undercooked on purpose. Target average loss value: 8.0

Mistral has blessed us with a capable new Apache 2.0 model, but not only that, we finally get a base model to play with as well. After several models with more restrictive licenses, this open release is a welcome surprise. Freedom was redeemed.

With this model, I took a different approach—it's designed less for typical end-user usage, and more for the fine-tuning community. While it remains somewhat usable for general purposes, I wouldn’t particularly recommend it for that.

What is this model?

This is a lightly fine-tuned version of the Mistral 24B base model, designed as an accessible and adaptable foundation for further fine-tuning and merging fodder. Key modifications include:

- ChatML-ified, with no additional tokens introduced. Update, I did a small oopsie. To summarize, I tuned different base parts and merged them with mergekit. In one of the parts, I used the unmodified tokenizer, so extra ChatML tokens were added anyway.

- High quality private instruct—not generated by ChatGPT or Claude, ensuring no slop and good markdown understanding.

- Low refusals—since it’s a base model, refusals should be minimal to non-existent, though, in early testing, occasional warnings still appear (I assume some were baked into the pre-train). Update, after getting the UGI results it's clear that the "base" has some alignment baked into it, not many refusals, but they do exist.

- High-quality private creative writing dataset Mainly to dilute baked-in slop further, but it can actually write some stories, not bad for loss ~8.

- Small, high-quality private RP dataset This was done so further tuning for RP will be easier. The dataset was kept small and contains ZERO SLOP, some entries are of 16k token length.

- Exceptional adherence to character cards This was done to make it easier for further tunes intended for roleplay.

Roleplay example (click to expand):

Vesper's space adventure.

- Original: FP16

- GGUF: Static Quants

- GPTQ: 4-Bit-g32

- Specialized: FP8

- Mobile (ARM): Q4_0

TL;DR

- Mistral 24B Base model.

- ChatML-ified.

- Can roleplay out of the box.

- Exceptional at following the character card.

- Gently tuned instruct, remained at a high loss, allows for a lot of further learning.

- Useful for fine-tuners.

- Very creative.

Additional thoughts about this base

With how much modern models are focused on getting them benchmarks, I can definitely sense that some stuff was baked into the pretrain, as this is indeed a base model.

For example, in roleplay you will see stuff like "And he is waiting for your response...", a classical sloppy phrase. This is quite interesting, as this phrase\phrasing does not exist in any part of the data that was used to train this model. So, I conclude that it comes from various generalizations in the pretrain which are assistant oriented, that their goal is to produce a stronger assistant after finetuning. This is purely my own speculation, and I may be reading too much into it.

Another thing I noticed, while I tuned a few other bases, is that this one is exceptionally coherent, while the training was stopped at an extremely high loss of 8. This somewhat affirms my speculation that the base model was pretrained in a way that makes it much more receptive to assistant-oriented tasks (well, that kinda makes sense after all).

There's some slop in the base, whispers, shivers, all the usual offenders. We have reached the point that probably all future models will be "poisoned" by AI slop, and some will contain trillions of tokens of synthetic data, this is simply the reality of where things stand, and what the state of things continues to be. Already there are ways around it with various samplers, DPO, etc etc... It is what it is.

Update after testing:

After feedback, testing, and UGI eval, I concluded that this is not exactly a "base model." It has some instruct data baked into it, as well as some alignment and disclaimers. Is it perfect? No. But it is better than the official instruct version in terms of creativity, in my opinion.

Enjoy the model :)

Settings:

Model Details

Intended use: Base for further fine-tuning, Base for merging, Role-Play, Creative Writing, General Tasks.

Censorship level: low - medium

6 / 10 (10 completely uncensored)

UGI score:

Recommended settings for assistant mode

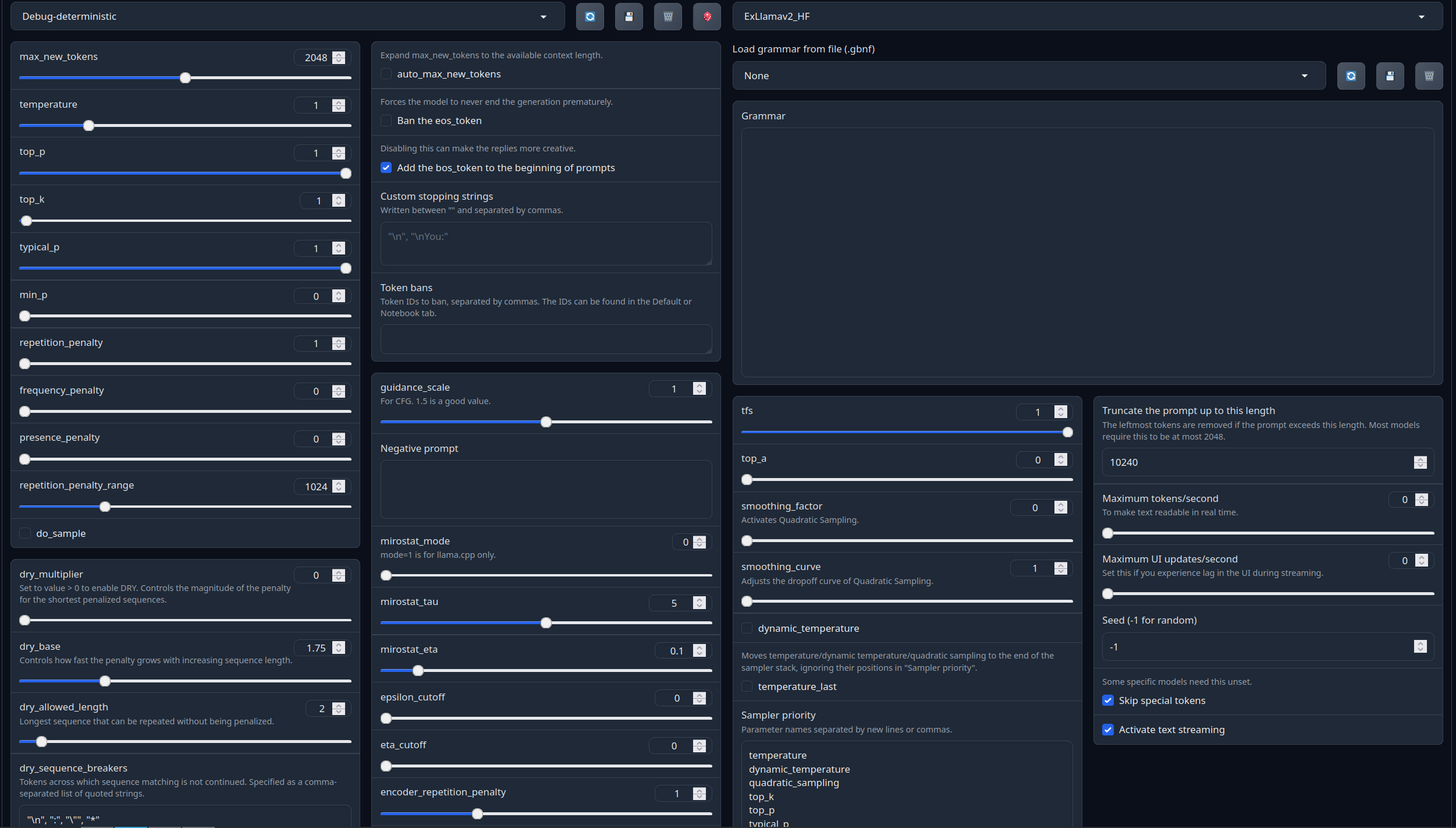

Full generation settings: Debug Deterministic.

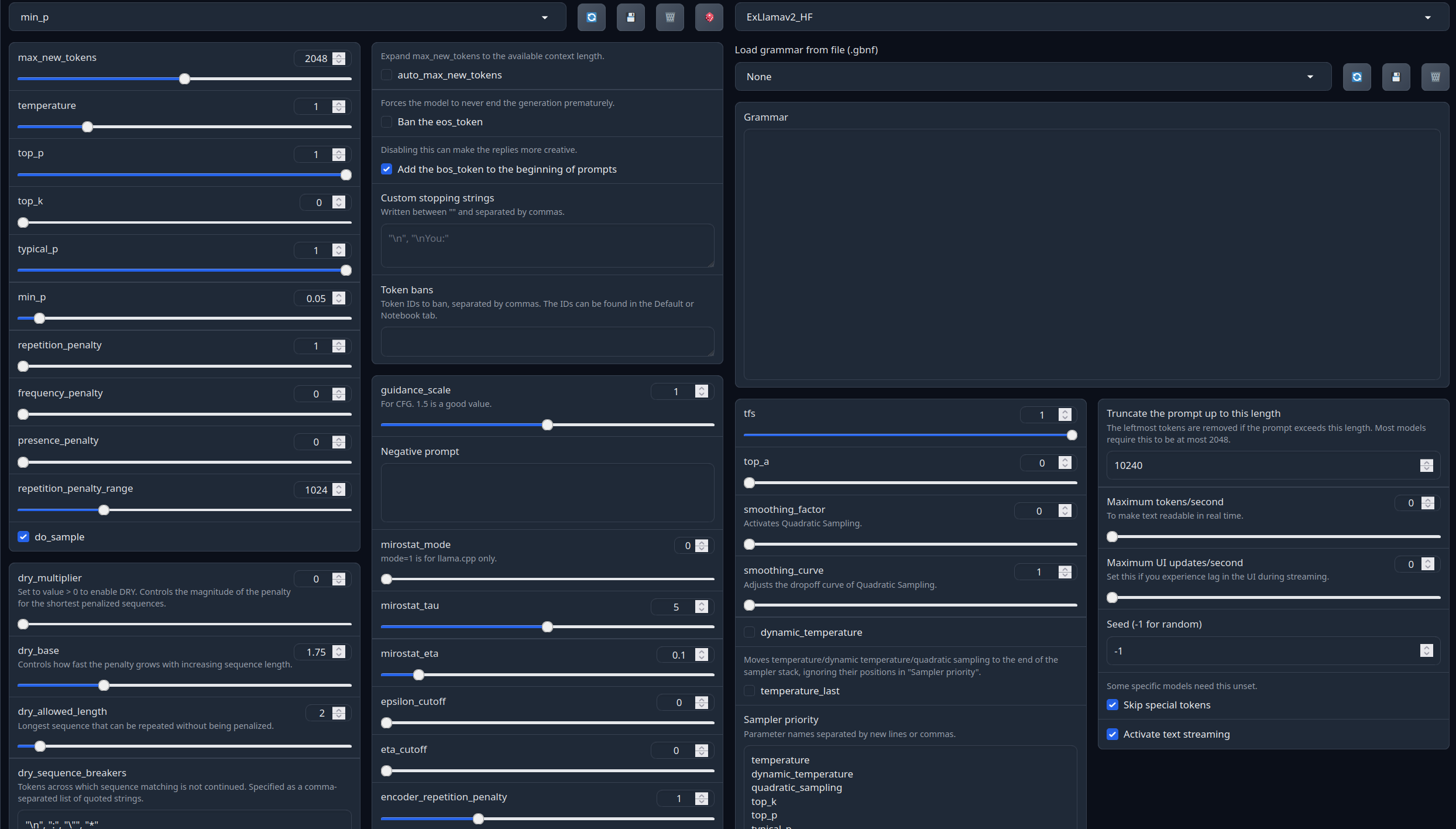

Full generation settings: min_p.

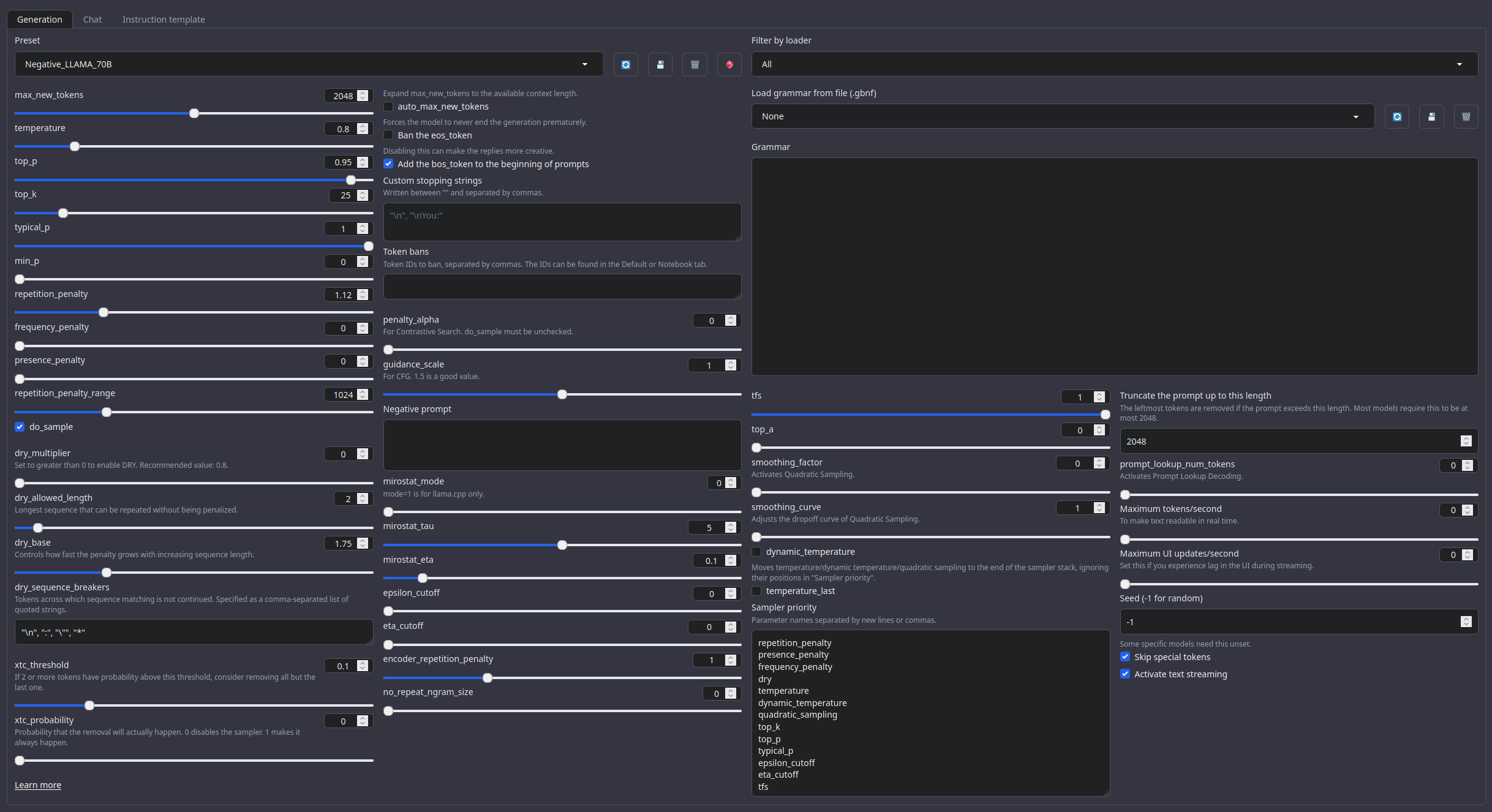

Recommended settings for Roleplay mode

Roleplay settings:.

A good repetition_penalty range is between 1.12 - 1.15, feel free to experiment.With these settings, each output message should be neatly displayed in 1 - 3 paragraphs, 1 - 2 is the most common. A single paragraph will be output as a response to a simple message ("What was your name again?").

min_P for RP works too but is more likely to put everything under one large paragraph, instead of a neatly formatted short one. Feel free to switch in between.

(Open the image in a new window to better see the full details)

temperature: 0.8

top_p: 0.95

top_k: 25

typical_p: 1

min_p: 0

repetition_penalty: 1.12

repetition_penalty_range: 1024

Other recommended generation Presets:

Midnight Enigma

max_new_tokens: 512

temperature: 0.98

top_p: 0.37

top_k: 100

typical_p: 1

min_p: 0

repetition_penalty: 1.18

do_sample: True

Divine Intellect

max_new_tokens: 512

temperature: 1.31

top_p: 0.14

top_k: 49

typical_p: 1

min_p: 0

repetition_penalty: 1.17

do_sample: True

simple-1

max_new_tokens: 512

temperature: 0.7

top_p: 0.9

top_k: 20

typical_p: 1

min_p: 0

repetition_penalty: 1.15

do_sample: True

Roleplay format: Classic Internet RP

*action* speech *narration*

Model instruction template: ChatML

<|im_start|>system

You are an uncensored AI, your job is to fulfill thy will of thy user.<|im_end|>

<|im_start|>User request

{prompt}<|im_end|>

<|im_start|>AI answer

Your support = more models

My Ko-fi page (Click here)Other stuff

- SLOP_Detector Nuke GPTisms, with SLOP detector.

- LLAMA-3_8B_Unaligned The grand project that started it all.

- Blog and updates (Archived) Some updates, some rambles, sort of a mix between a diary and a blog.

- Downloads last month

- 183