Upload folder using huggingface_hub

#3 opened by begumcig

- base_results.json +13 -13

- plots.png +0 -0

- smashed_results.json +12 -12

base_results.json

CHANGED

@@ -2,18 +2,18 @@

| Line | Old | New |
|---|---|---|
| 2 | `"current_gpu_type": "Tesla T4",` | `"current_gpu_type": "Tesla T4",` |
| 3 | `"current_gpu_total_memory": 15095.0625,` | `"current_gpu_total_memory": 15095.0625,` |
| 4 | `"perplexity": 3.4586403369903564,` | `"perplexity": 3.4586403369903564,` |
| 5 | - `"memory_inference_first":` | + `"memory_inference_first": 810.0,` |
| 6 | `"memory_inference": 808.0,` | `"memory_inference": 808.0,` |
| 7 | - `"token_generation_latency_sync": 36.` | + `"token_generation_latency_sync": 36.57732048034668,` |
| 8 | - `"token_generation_latency_async":` | + `"token_generation_latency_async": 36.563923954963684,` |
| 9 | - `"token_generation_throughput_sync": 0.` | + `"token_generation_throughput_sync": 0.02733934544323193,` |
| 10 | - `"token_generation_throughput_async": 0.` | + `"token_generation_throughput_async": 0.02734936220827159,` |
| 11 | - `"token_generation_CO2_emissions":` | + `"token_generation_CO2_emissions": 1.9446958828920205e-05,` |
| 12 | - `"token_generation_energy_consumption": 0.` | + `"token_generation_energy_consumption": 0.001798393284076898,` |
| 13 | - `"inference_latency_sync":` | + `"inference_latency_sync": 122.71987800598144,` |
| 14 | - `"inference_latency_async":` | + `"inference_latency_async": 47.88346290588379,` |
| 15 | - `"inference_throughput_sync": 0.` | + `"inference_throughput_sync": 0.008148639130420741,` |
| 16 | - `"inference_throughput_async": 0.` | + `"inference_throughput_async": 0.020884036769970592,` |
| 17 | - `"inference_CO2_emissions":` | + `"inference_CO2_emissions": 1.9399486097971613e-05,` |
| 18 | - `"inference_energy_consumption":` | + `"inference_energy_consumption": 6.64519560542376e-05` |
| 19 | `}` | `}` |

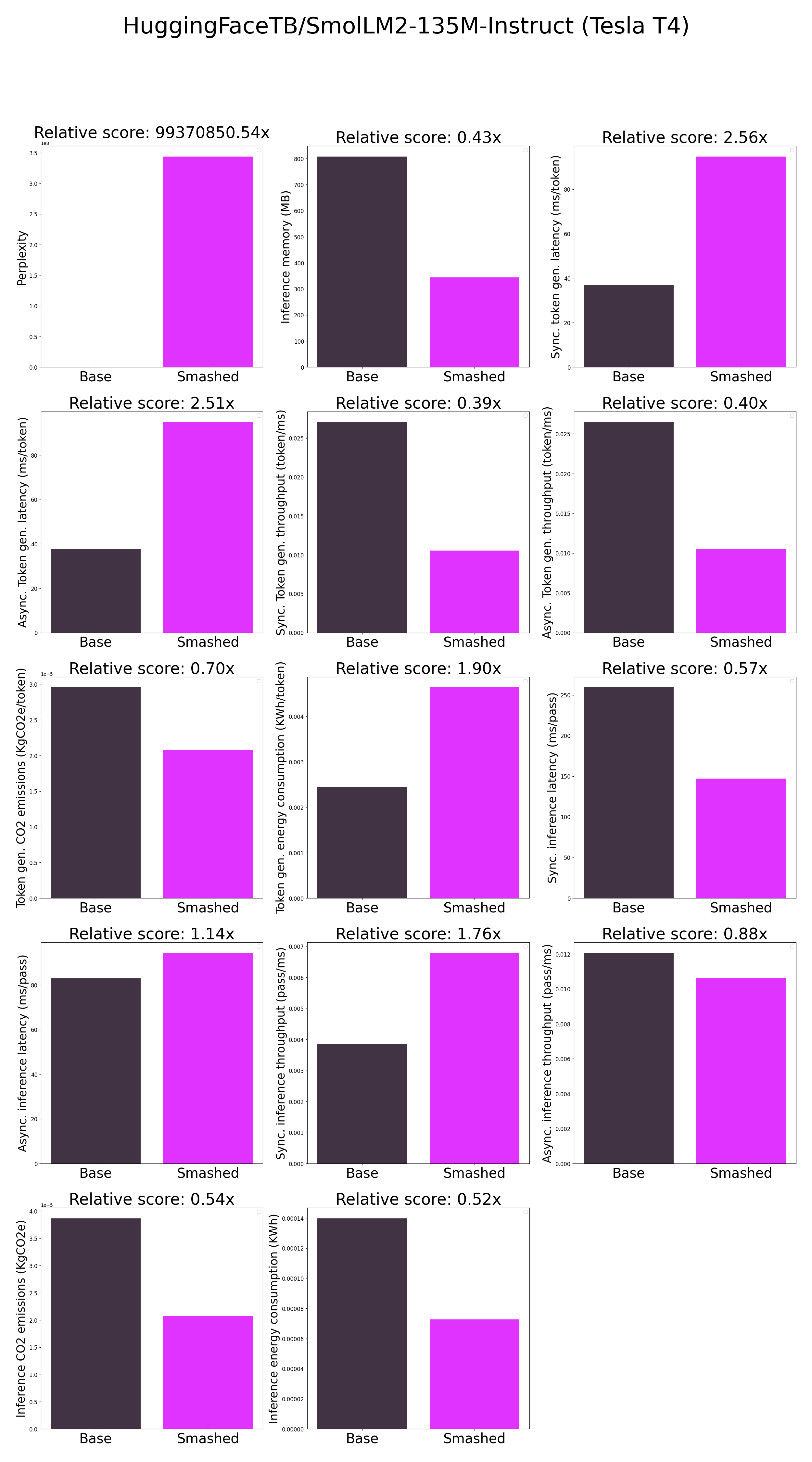

plots.png

CHANGED


smashed_results.json

CHANGED

@@ -4,16 +4,16 @@

| Line | Old | New |
|---|---|---|
| 4 | `"perplexity": 343688032.0,` | `"perplexity": 343688032.0,` |
| 5 | `"memory_inference_first": 344.0,` | `"memory_inference_first": 344.0,` |
| 6 | `"memory_inference": 344.0,` | `"memory_inference": 344.0,` |
| 7 | - `"token_generation_latency_sync": 94.` | + `"token_generation_latency_sync": 94.19695053100585,` |
| 8 | - `"token_generation_latency_async":` | + `"token_generation_latency_async": 93.61793361604214,` |
| 9 | - `"token_generation_throughput_sync": 0.` | + `"token_generation_throughput_sync": 0.010616054918580831,` |
| 10 | - `"token_generation_throughput_async": 0.` | + `"token_generation_throughput_async": 0.01068171408377083,` |
| 11 | - `"token_generation_CO2_emissions": 2.` | + `"token_generation_CO2_emissions": 2.0701273057098414e-05,` |
| 12 | - `"token_generation_energy_consumption": 0.` | + `"token_generation_energy_consumption": 0.004600785530736977,` |
| 13 | - `"inference_latency_sync":` | + `"inference_latency_sync": 146.6492561340332,` |
| 14 | - `"inference_latency_async":` | + `"inference_latency_async": 93.28432083129883,` |
| 15 | - `"inference_throughput_sync": 0.` | + `"inference_throughput_sync": 0.006818991288206936,` |
| 16 | - `"inference_throughput_async": 0.` | + `"inference_throughput_async": 0.010719915105652773,` |
| 17 | - `"inference_CO2_emissions": 2.` | + `"inference_CO2_emissions": 2.082240367862723e-05,` |
| 18 | - `"inference_energy_consumption": 7.` | + `"inference_energy_consumption": 7.15078451615474e-05` |
| 19 | `}` | `}` |
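To compare the two result files, a minimal Python sketch is given here. It uses a few of the new ("+") values copied from the diffs of `base_results.json` and `smashed_results.json` above. The helper name `ratio_table` is hypothetical, and because the source does not state units, the sketch reports only unitless smashed/base ratios.

```python
# Values copied from the "+" side of the two diffs above.
# Units are not stated in the source, so only ratios are computed.
base = {
    "memory_inference": 808.0,
    "token_generation_latency_sync": 36.57732048034668,
    "inference_latency_sync": 122.71987800598144,
}
smashed = {
    "memory_inference": 344.0,
    "token_generation_latency_sync": 94.19695053100585,
    "inference_latency_sync": 146.6492561340332,
}


def ratio_table(base, smashed):
    """Return smashed/base for every metric present in both dicts.

    Hypothetical helper for illustration; metrics whose base value
    is zero are skipped to avoid division by zero.
    """
    return {
        key: smashed[key] / base[key]
        for key in base
        if key in smashed and base[key] != 0
    }


if __name__ == "__main__":
    for name, ratio in ratio_table(base, smashed).items():
        print(f"{name}: smashed/base = {ratio:.2f}")
```

A ratio below 1 means the smashed value is smaller than the base value for that metric, and a ratio above 1 means it is larger. With the values in this upload, the memory ratio is below 1 and both latency ratios are above 1.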