---
license: apache-2.0
language:
- en
- zh
base_model:
- Qwen/Qwen2-7B-Instruct
pipeline_tag: text-generation
library_name: transformers
tags:
- finance
- text-generation-inference
datasets:
- IDEA-FinAI/Golden-Touchstone
---

<!-- markdownlint-disable first-line-h1 -->

<!-- markdownlint-disable html -->

<!-- markdownlint-disable no-duplicate-header -->

<div align="center">

<img src="https://github.com/IDEA-FinAI/Golden-Touchstone/blob/main/assets/Touchstone-GPT-logo.png?raw=true" width="15%" alt="Golden-Touchstone" />

<h1 style="display: inline-block; vertical-align: middle; margin-left: 10px; font-size: 2em; font-weight: bold;">Golden-Touchstone Benchmark</h1>

</div>

<div align="center" style="line-height: 1;">

<a href="https://arxiv.org/abs/2411.06272" target="_blank" style="margin: 2px;">

<img alt="arXiv" src="https://img.shields.io/badge/Arxiv-2411.06272-b31b1b.svg?logo=arXiv" style="display: inline-block; vertical-align: middle;"/>

</a>

<a href="https://github.com/IDEA-FinAI/Golden-Touchstone" target="_blank" style="margin: 2px;">

<img alt="github" src="https://img.shields.io/github/stars/IDEA-FinAI/Golden-Touchstone.svg?style=social" style="display: inline-block; vertical-align: middle;"/>

</a>

<a href="https://huggingface.co/datasets/IDEA-FinAI/Golden-Touchstone" target="_blank" style="margin: 2px;">

<img alt="datasets" src="https://img.shields.io/badge/🤗-Datasets-yellow.svg" style="display: inline-block; vertical-align: middle;"/>

</a>

<a href="https://huggingface.co/IDEA-FinAI/TouchstoneGPT-7B-Instruct" target="_blank" style="margin: 2px;">

<img alt="huggingface" src="https://img.shields.io/badge/🤗-Model-yellow.svg" style="display: inline-block; vertical-align: middle;"/>

</a>

</div>

# Golden-Touchstone

Golden Touchstone is a simple, effective, and systematic benchmark for bilingual (Chinese-English) financial large language models. Like a touchstone, it is intended to drive the research and deployment of such models. We have also trained and open-sourced Touchstone-GPT as a baseline for subsequent community research.

## Introduction

The paper evaluates the diversity, systematicness, and LLM adaptability of each existing open-source benchmark.

By collecting and selecting representative task datasets, we built our own Chinese-English bilingual Touchstone Benchmark, which includes 22 datasets.

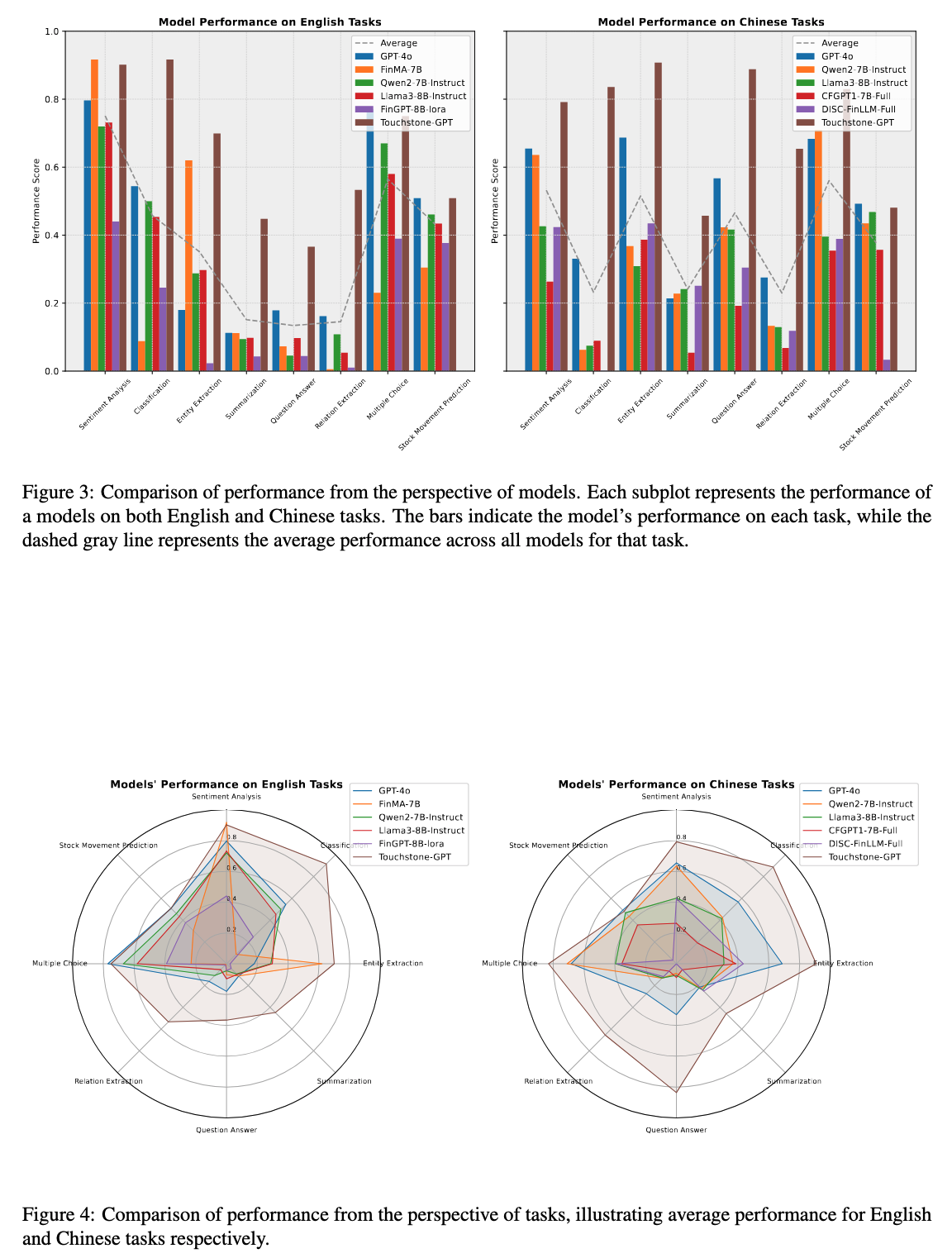

We extensively evaluated GPT-4o, Llama 3, Qwen2, FinGPT, and our own Touchstone-GPT, analyzed the strengths and weaknesses of these models, and suggested directions for subsequent research on financial large language models.

## Evaluation of Touchstone Benchmark

Please see our GitHub repository, [Golden-Touchstone](https://github.com/IDEA-FinAI/Golden-Touchstone).

## Usage of Touchstone-GPT

The following code snippet uses `apply_chat_template` to show how to load the tokenizer and model and how to generate a response.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# device_map="auto" places the model on the available device(s) automatically.
model = AutoModelForCausalLM.from_pretrained(
    "IDEA-FinAI/TouchstoneGPT-7B-Instruct",
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained("IDEA-FinAI/TouchstoneGPT-7B-Instruct")

prompt = "What is the sentiment of the following financial post: Positive, Negative, or Neutral?\nsees #Apple at $150/share in a year (+36% from today) on growing services business."
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": prompt}
]

# Render the conversation with the model's chat template and append the assistant prompt.
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
# Move the inputs to the same device as the model.
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# Pass the full encoding so the attention mask is used along with the input IDs.
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=512
)
# Drop the prompt tokens so that only the newly generated tokens are decoded.
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
```
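When running the snippet above over many posts, it can help to build the chat messages in one place and to map the free-form response back to one of the three labels named in the prompt. The sketch below is only an illustration: `build_messages` and `parse_sentiment` are hypothetical helper names, not part of this repository or of `transformers`, and the parsing rule (take the first label word that appears) is an assumption rather than the evaluation protocol used in the paper.

```python
import re
from typing import Dict, List, Optional


def build_messages(post: str) -> List[Dict[str, str]]:
    """Wrap a financial post in the same prompt and chat format as the snippet above.

    Hypothetical helper for illustration only.
    """
    prompt = (
        "What is the sentiment of the following financial post: "
        "Positive, Negative, or Neutral?\n" + post
    )
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ]


def parse_sentiment(response: str) -> Optional[str]:
    """Return the first label word found in the response (lowercased), or None.

    Assumption: the model answers with one of the three label words.
    A response such as "not positive" would still be parsed as "positive".
    """
    match = re.search(r"\b(positive|negative|neutral)\b", response.lower())
    return match.group(1) if match else None
```

The list returned by `build_messages` can be passed directly to `tokenizer.apply_chat_template`, and `parse_sentiment(response)` can be applied to the decoded output. Responses that contain none of the three label words return `None`, so they can be counted separately instead of being silently assigned a label.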

## Citation

```
@misc{wu2024goldentouchstonecomprehensivebilingual,
      title={Golden Touchstone: A Comprehensive Bilingual Benchmark for Evaluating Financial Large Language Models},
      author={Xiaojun Wu and Junxi Liu and Huanyi Su and Zhouchi Lin and Yiyan Qi and Chengjin Xu and Jiajun Su and Jiajie Zhong and Fuwei Wang and Saizhuo Wang and Fengrui Hua and Jia Li and Jian Guo},
      year={2024},
      eprint={2411.06272},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2411.06272},
}
```