IP-Adapter Model Card

Introduction

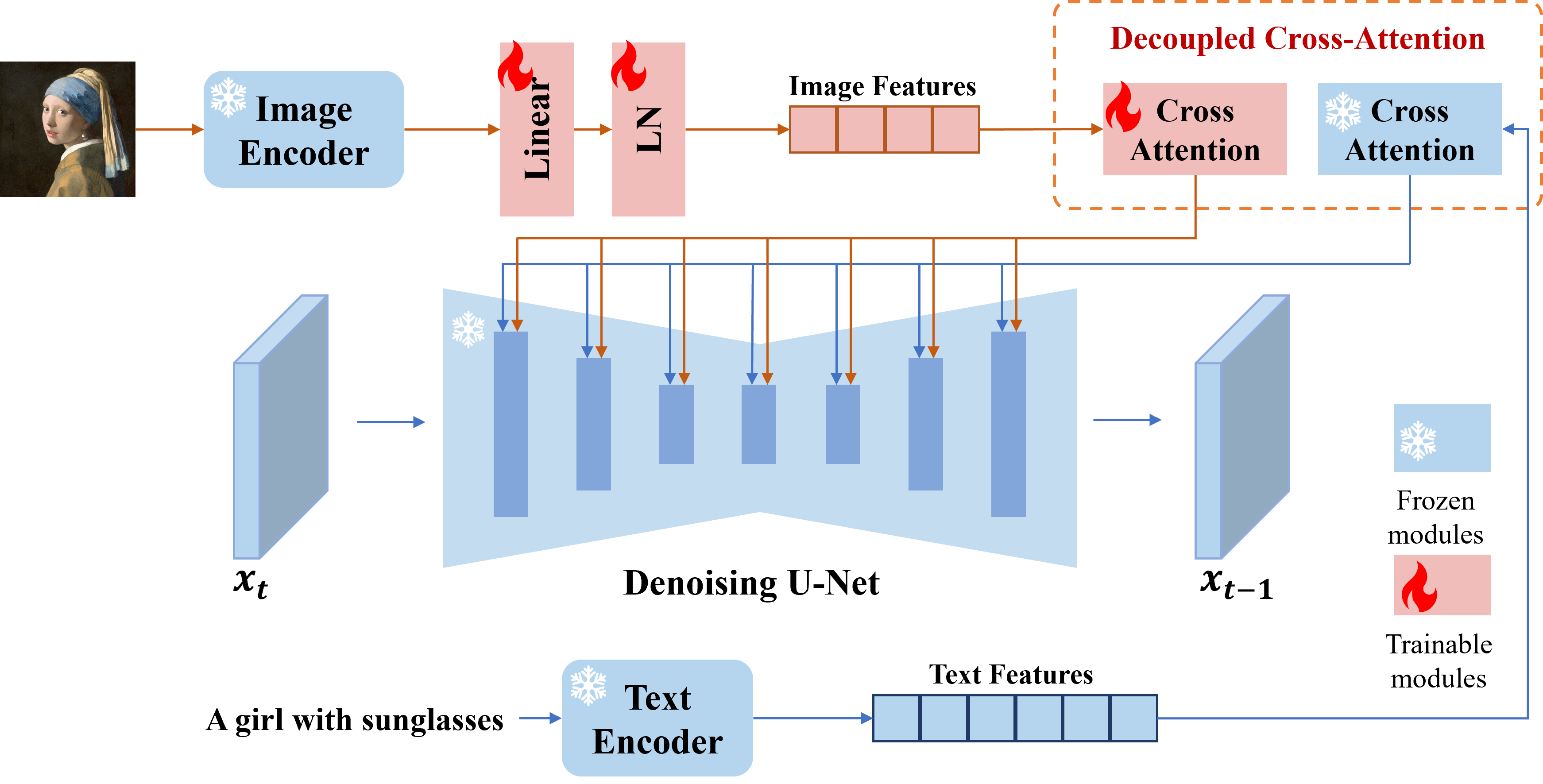

We present IP-Adapter, an effective and lightweight adapter that adds image prompt capability to pre-trained text-to-image diffusion models. An IP-Adapter with only 22M parameters can achieve performance comparable to, or even better than, a fine-tuned image prompt model. IP-Adapter generalizes not only to other custom models fine-tuned from the same base model, but also to controllable generation using existing controllable tools. Moreover, the image prompt also works well with the text prompt to accomplish multimodal image generation.

Models

Image Encoder

- models/image_encoder: OpenCLIP-ViT-H-14 with 632.08M parameters

- sdxl_models/image_encoder: OpenCLIP-ViT-bigG-14 with 1844.9M parameters

More information can be found here

IP-Adapter for SD 1.5

- ip-adapter_sd15.bin: use global image embedding from OpenCLIP-ViT-H-14 as condition

- ip-adapter_sd15_light.bin: same as ip-adapter_sd15, but more compatible with text prompt

- ip-adapter-plus_sd15.bin: use patch image embeddings from OpenCLIP-ViT-H-14 as condition, closer to the reference image than ip-adapter_sd15

- ip-adapter-plus-face_sd15.bin: same as ip-adapter-plus_sd15, but use cropped face image as condition

IP-Adapter for SDXL 1.0

- ip-adapter_sdxl.bin: use global image embedding from OpenCLIP-ViT-bigG-14 as condition

- ip-adapter_sdxl_vit-h.bin: same as ip-adapter_sdxl, but use OpenCLIP-ViT-H-14

- ip-adapter-plus_sdxl_vit-h.bin: use patch image embeddings from OpenCLIP-ViT-H-14 as condition, closer to the reference image than ip-adapter_sdxl and ip-adapter_sdxl_vit-h

- ip-adapter-plus-face_sdxl_vit-h.bin: same as ip-adapter-plus_sdxl_vit-h, but use cropped face image as condition
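Because the SDXL checkpoints do not all share one image encoder, it is easy to pair a weight file with the wrong encoder directory. The lists above can be summarized as a small lookup table. This is only a sketch: `AdapterInfo`, `ADAPTERS`, and `encoder_dir_for` are hypothetical names that are not part of any IP-Adapter API, and pairing the `vit-h` SDXL checkpoints with `models/image_encoder` is an inference from the encoder named in each description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterInfo:
    """Summary of one checkpoint, transcribed from the lists above."""
    base_model: str
    image_encoder: str
    encoder_dir: str        # directory assumed to hold the matching encoder
    embedding: str          # "global" or "patch"
    face_crop: bool = False


# (encoder name, encoder directory) pairs from the "Image Encoder" section.
VIT_H = ("OpenCLIP-ViT-H-14", "models/image_encoder")
VIT_BIGG = ("OpenCLIP-ViT-bigG-14", "sdxl_models/image_encoder")

# Hypothetical lookup table; keys are the checkpoint file names listed above.
ADAPTERS = {
    "ip-adapter_sd15.bin": AdapterInfo("SD 1.5", *VIT_H, "global"),
    "ip-adapter_sd15_light.bin": AdapterInfo("SD 1.5", *VIT_H, "global"),
    "ip-adapter-plus_sd15.bin": AdapterInfo("SD 1.5", *VIT_H, "patch"),
    "ip-adapter-plus-face_sd15.bin": AdapterInfo("SD 1.5", *VIT_H, "patch", True),
    "ip-adapter_sdxl.bin": AdapterInfo("SDXL 1.0", *VIT_BIGG, "global"),
    "ip-adapter_sdxl_vit-h.bin": AdapterInfo("SDXL 1.0", *VIT_H, "global"),
    "ip-adapter-plus_sdxl_vit-h.bin": AdapterInfo("SDXL 1.0", *VIT_H, "patch"),
    "ip-adapter-plus-face_sdxl_vit-h.bin": AdapterInfo("SDXL 1.0", *VIT_H, "patch", True),
}


def encoder_dir_for(weight_name: str) -> str:
    """Return the image encoder directory assumed to match a checkpoint."""
    try:
        return ADAPTERS[weight_name].encoder_dir
    except KeyError:
        raise ValueError(f"unknown IP-Adapter checkpoint: {weight_name}") from None
```

For example, `encoder_dir_for("ip-adapter_sdxl_vit-h.bin")` returns `models/image_encoder`, reflecting that this SDXL checkpoint is conditioned on OpenCLIP-ViT-H-14 rather than OpenCLIP-ViT-bigG-14.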
