---
language: en
license: mit
tags:
- image-classification
- densenet
- ai-generated-content
- human-created-content
- model-card
---

# **Fine-tuned DenseNet for Image Classification**

## **Model Overview**

This fine-tuned **DenseNet121** model is designed to classify images into the following categories:

1. **DALL-E Generated Images**
2. **Human-Created Images**
3. **Other AI-Generated Images**

The model is intended for detecting AI-generated images and is particularly relevant to creative fields such as art and design.

---

## **Use Cases**

- **AI Art Detection**: Identifies whether an image was generated by AI or created by a human.
- **Content Moderation**: Useful in media, art, and design industries where distinguishing AI-generated content is essential.
- **Educational Purposes**: Useful for exploring the differences between AI-generated and human-created content.

---

## **Model Performance**

- **Accuracy**: **95%** on the validation dataset.
- **Loss**: **0.0552** after 15 epochs of training.
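
The two metrics above can be illustrated with a small, dependency-free sketch. Accuracy is the fraction of images whose highest-scoring class matches the label; the loss is assumed here to be the mean cross-entropy of the softmax probabilities (what PyTorch's `nn.CrossEntropyLoss` computes with its default `reduction="mean"`). The card does not say whether the reported 0.0552 is a training or validation loss, and the scores below are made-up toy values, not outputs of this model.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over one row of class scores."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def accuracy_and_loss(batch_logits: list[list[float]], labels: list[int]) -> tuple[float, float]:
    """Returns (accuracy, mean cross-entropy) for integer class labels."""
    correct, total_loss = 0, 0.0
    for logits, label in zip(batch_logits, labels):
        probs = softmax(logits)
        predicted = max(range(len(probs)), key=probs.__getitem__)  # argmax
        correct += int(predicted == label)
        total_loss += -math.log(probs[label])  # cross-entropy for one sample
    n = len(labels)
    return correct / n, total_loss / n


if __name__ == "__main__":
    # Toy scores for 4 images over the 3 classes; not real model outputs.
    logits = [[2.0, 0.5, 0.1], [0.2, 3.0, 0.1], [1.0, 0.9, 0.8], [0.1, 0.2, 2.5]]
    labels = [0, 1, 2, 2]
    acc, loss = accuracy_and_loss(logits, labels)
    print(f"accuracy = {acc:.2f}")  # accuracy = 0.75
    print(f"loss = {loss:.4f}")
```

In the toy batch the third image is misclassified, so accuracy is 3/4. Because the mean cross-entropy equals the negative log of the geometric-mean probability assigned to the correct class, a loss of 0.0552 corresponds to a geometric-mean correct-class probability of about exp(-0.0552) ≈ 0.95.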

---

## **Training Details**

- **Base Model**: DenseNet121, pretrained on ImageNet.
- **Optimizer**: Adam with a learning rate of 0.0001.
- **Loss Function**: Cross-Entropy Loss.
- **Batch Size**: 32
- **Epochs**: 15

The model was fine-tuned with data augmentation (random flips, rotations, and color jittering) to improve robustness.

|

|

|

|

|

--- |

|

|

|

|

|

## **Training Metrics** |

|

|

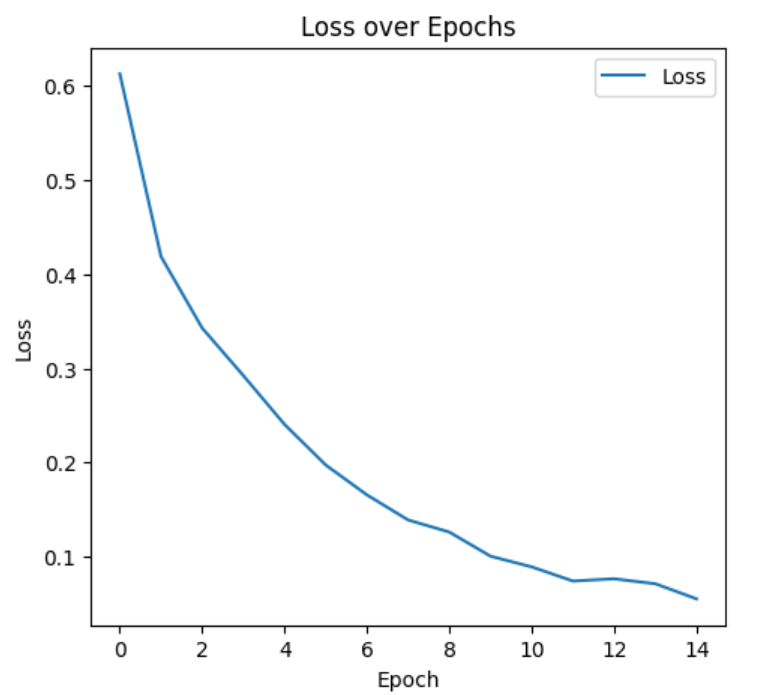

### **1. Loss Over Epochs** |

|

|

|

|

|

This graph shows the decrease in loss over 15 epochs, indicating the model's improved ability to fit the data. |

|

|

|

|

|

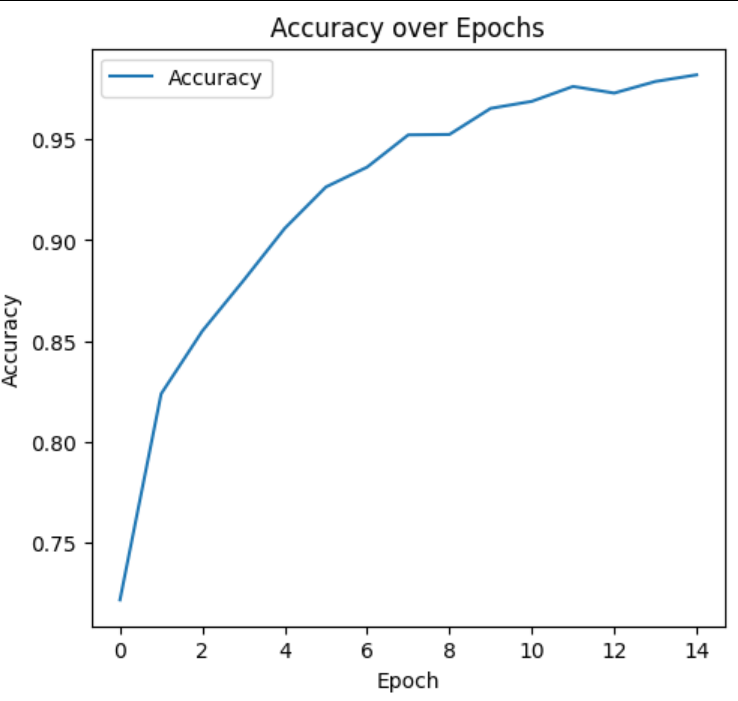

### **2. Accuracy Over Epochs** |

|

|

|

|

|

This graph shows the increase in accuracy, reflecting the model's growing ability to correctly classify images. |

---

## **Sample Dataset**

Here is a visual representation of the dataset used for training and validation:

This image shows a collage of examples from the dataset used to fine-tune the DenseNet model. The dataset includes a diverse mix of images from three distinct categories:

1. **Human-Created Images** – Traditional artwork or photographs made by humans.
2. **DALL-E Generated Images** – Images created with DALL-E, OpenAI's text-to-image model.
3. **Other AI-Generated Images** – Images generated by AI systems other than DALL-E, adding variety to the training data.

This diversity is meant to help the model learn to distinguish between different forms of image creation across a range of AI-generated and human-created content.

---

## **Model Output Samples**

Here are some examples of the model's predictions on various images:

#### Sample 1: Human-Created Image

*Predicted: Human-Created*

#### Sample 2: DALL-E Generated Image

*Predicted: DALL-E Generated*

#### Sample 3: Other AI-Generated Image

*Predicted: Other AI-Generated*

---

## **Model Architecture**

- **Feature Extractor**: DenseNet121 with frozen layers to retain general features from ImageNet.
- **Classifier**: A fully connected layer with 3 output nodes, one for each class (DALL-E, Human-Created, Other AI).

---

## **Limitations**

- **Data Bias**: The model's performance depends on the balance and diversity of the training dataset.
- **Generalization**: Further testing on more diverse datasets is recommended to validate the model’s performance across different domains and image types.

---

## **Model Download**

You can download the fine-tuned DenseNet121 model from the following link:

[**Download the Model**](https://huggingface.co/alokpandey/DenseNet-DH3Classifier/resolve/main/densenet_finetuned_dense.pth)

---

## **References**

For more information on DenseNet, refer to the original research paper:

[**Densely Connected Convolutional Networks (DenseNet)**](https://arxiv.org/abs/1608.06993)