fc7e96b182b21f5be42d5060b4cc04b1c2e517067a0c471c97505b29f93f45e5

- .gitattributes +9 -0

- ebsynth_utility/__pycache__/stage8.cpython-310.pyc +0 -0

- ebsynth_utility/calculator.py +237 -0

- ebsynth_utility/ebsynth_utility.py +185 -0

- ebsynth_utility/imgs/clipseg.png +0 -0

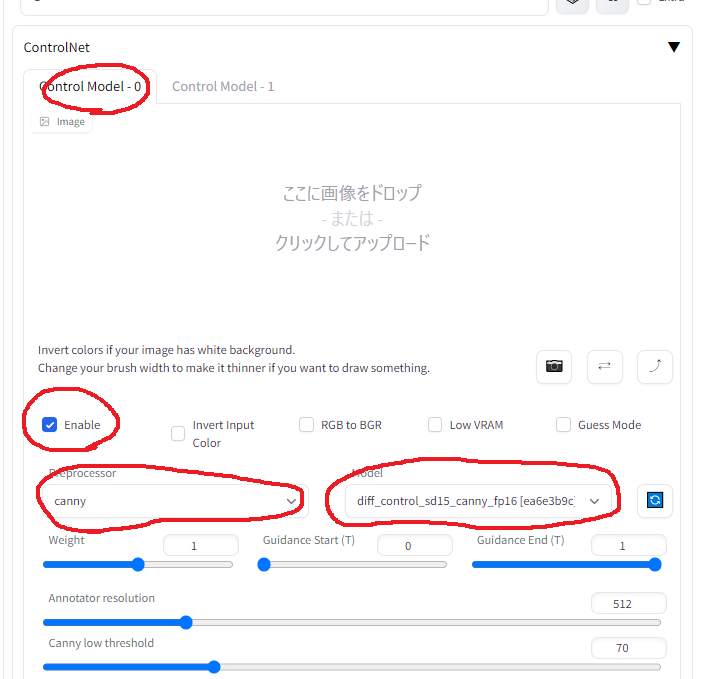

- ebsynth_utility/imgs/controlnet_0.png +0 -0

- ebsynth_utility/imgs/controlnet_1.png +0 -0

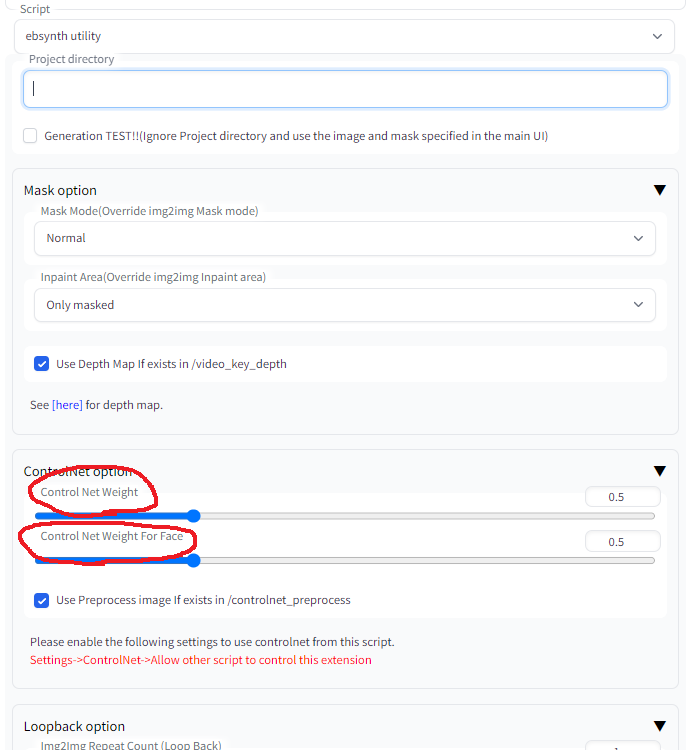

- ebsynth_utility/imgs/controlnet_option_in_ebsynthutil.png +0 -0

- ebsynth_utility/imgs/controlnet_setting.png +0 -0

- ebsynth_utility/imgs/sample1.mp4 +3 -0

- ebsynth_utility/imgs/sample2.mp4 +3 -0

- ebsynth_utility/imgs/sample3.mp4 +3 -0

- ebsynth_utility/imgs/sample4.mp4 +3 -0

- ebsynth_utility/imgs/sample5.mp4 +3 -0

- ebsynth_utility/imgs/sample6.mp4 +3 -0

- ebsynth_utility/imgs/sample_anyaheh.mp4 +3 -0

- ebsynth_utility/imgs/sample_autotag.mp4 +3 -0

- ebsynth_utility/imgs/sample_clipseg.mp4 +3 -0

- ebsynth_utility/install.py +24 -0

- ebsynth_utility/sample/add_token.txt +54 -0

- ebsynth_utility/sample/blacklist.txt +10 -0

- ebsynth_utility/scripts/__pycache__/custom_script.cpython-310.pyc +0 -0

- ebsynth_utility/scripts/__pycache__/ui.cpython-310.pyc +0 -0

- ebsynth_utility/scripts/custom_script.py +1012 -0

- ebsynth_utility/scripts/ui.py +199 -0

- ebsynth_utility/stage1.py +258 -0

- ebsynth_utility/stage2.py +173 -0

- ebsynth_utility/stage3_5.py +178 -0

- ebsynth_utility/stage5.py +279 -0

- ebsynth_utility/stage7.py +234 -0

- ebsynth_utility/stage8.py +146 -0

- ebsynth_utility/style.css +39 -0

- microsoftexcel-controlnet/__pycache__/preload.cpython-310.pyc +0 -0

- microsoftexcel-controlnet/annotator/__pycache__/util.cpython-310.pyc +0 -0

.gitattributes

CHANGED

@@ -55,3 +55,12 @@ SD-CN-Animation/examples/gold_1.gif filter=lfs diff=lfs merge=lfs -text
 SD-CN-Animation/examples/macaroni_1.gif filter=lfs diff=lfs merge=lfs -text
 SD-CN-Animation/examples/tree_2.gif filter=lfs diff=lfs merge=lfs -text
 SD-CN-Animation/examples/tree_2.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample1.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample2.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample3.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample4.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample5.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample6.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample_anyaheh.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample_autotag.mp4 filter=lfs diff=lfs merge=lfs -text
+ebsynth_utility/imgs/sample_clipseg.mp4 filter=lfs diff=lfs merge=lfs -text

ebsynth_utility/__pycache__/stage8.cpython-310.pyc

ADDED

Binary file (3.97 kB).

ebsynth_utility/calculator.py

ADDED

@@ -0,0 +1,237 @@
+# https://www.mycompiler.io/view/3TFZagC
+
+class ParseError(Exception):
+    def __init__(self, pos, msg, *args):
+        self.pos = pos
+        self.msg = msg
+        self.args = args
+
+    def __str__(self):
+        return '%s at position %s' % (self.msg % self.args, self.pos)
+
+class Parser:
+    def __init__(self):
+        self.cache = {}
+
+    def parse(self, text):
+        self.text = text
+        self.pos = -1
+        self.len = len(text) - 1
+        rv = self.start()
+        self.assert_end()
+        return rv
+
+    def assert_end(self):
+        if self.pos < self.len:
+            raise ParseError(
+                self.pos + 1,
+                'Expected end of string but got %s',
+                self.text[self.pos + 1]
+            )
+
+    def eat_whitespace(self):
+        while self.pos < self.len and self.text[self.pos + 1] in " \f\v\r\t\n":
+            self.pos += 1
+
+    def split_char_ranges(self, chars):
+        try:
+            return self.cache[chars]
+        except KeyError:
+            pass
+
+        rv = []
+        index = 0
+        length = len(chars)
+
+        while index < length:
+            if index + 2 < length and chars[index + 1] == '-':
+                if chars[index] >= chars[index + 2]:
+                    raise ValueError('Bad character range')
+
+                rv.append(chars[index:index + 3])
+                index += 3
+            else:
+                rv.append(chars[index])
+                index += 1
+
+        self.cache[chars] = rv
+        return rv
+
+    def char(self, chars=None):
+        if self.pos >= self.len:
+            raise ParseError(
+                self.pos + 1,
+                'Expected %s but got end of string',
+                'character' if chars is None else '[%s]' % chars
+            )
+
+        next_char = self.text[self.pos + 1]
+        if chars == None:
+            self.pos += 1
+            return next_char
+
+        for char_range in self.split_char_ranges(chars):
+            if len(char_range) == 1:
+                if next_char == char_range:
+                    self.pos += 1
+                    return next_char
+            elif char_range[0] <= next_char <= char_range[2]:
+                self.pos += 1
+                return next_char
+
+        raise ParseError(
+            self.pos + 1,
+            'Expected %s but got %s',
+            'character' if chars is None else '[%s]' % chars,
+            next_char
+        )
+
+    def keyword(self, *keywords):
+        self.eat_whitespace()
+        if self.pos >= self.len:
+            raise ParseError(
+                self.pos + 1,
+                'Expected %s but got end of string',
+                ','.join(keywords)
+            )
+
+        for keyword in keywords:
+            low = self.pos + 1
+            high = low + len(keyword)
+
+            if self.text[low:high] == keyword:
+                self.pos += len(keyword)
+                self.eat_whitespace()
+                return keyword
+
+        raise ParseError(
+            self.pos + 1,
+            'Expected %s but got %s',
+            ','.join(keywords),
+            self.text[self.pos + 1],
+        )
+
+    def match(self, *rules):
+        self.eat_whitespace()
+        last_error_pos = -1
+        last_exception = None
+        last_error_rules = []
+
+        for rule in rules:
+            initial_pos = self.pos
+            try:
+                rv = getattr(self, rule)()
+                self.eat_whitespace()
+                return rv
+            except ParseError as e:
+                self.pos = initial_pos
+
+                if e.pos > last_error_pos:
+                    last_exception = e
+                    last_error_pos = e.pos
+                    last_error_rules.clear()
+                    last_error_rules.append(rule)
+                elif e.pos == last_error_pos:
+                    last_error_rules.append(rule)
+
+        if len(last_error_rules) == 1:
+            raise last_exception
+        else:
+            raise ParseError(
+                last_error_pos,
+                'Expected %s but got %s',
+                ','.join(last_error_rules),
+                self.text[last_error_pos]
+            )
+
+    def maybe_char(self, chars=None):
+        try:
+            return self.char(chars)
+        except ParseError:
+            return None
+
+    def maybe_match(self, *rules):
+        try:
+            return self.match(*rules)
+        except ParseError:
+            return None
+
+    def maybe_keyword(self, *keywords):
+        try:
+            return self.keyword(*keywords)
+        except ParseError:
+            return None
+
+class CalcParser(Parser):
+    def start(self):
+        return self.expression()
+
+    def expression(self):
+        rv = self.match('term')
+        while True:
+            op = self.maybe_keyword('+', '-')
+            if op is None:
+                break
+
+            term = self.match('term')
+            if op == '+':
+                rv += term
+            else:
+                rv -= term
+
+        return rv
+
+    def term(self):
+        rv = self.match('factor')
+        while True:
+            op = self.maybe_keyword('*', '/')
+            if op is None:
+                break
+
+            term = self.match('factor')
+            if op == '*':
+                rv *= term
+            else:
+                rv /= term
+
+        return rv
+
+    def factor(self):
+        if self.maybe_keyword('('):
+            rv = self.match('expression')
+            self.keyword(')')
+
+            return rv
+
+        return self.match('number')
+
+    def number(self):
+        chars = []
+
+        sign = self.maybe_keyword('+', '-')
+        if sign is not None:
+            chars.append(sign)
+
+        chars.append(self.char('0-9'))
+
+        while True:
+            char = self.maybe_char('0-9')
+            if char is None:
+                break
+
+            chars.append(char)
+
+        if self.maybe_char('.'):
+            chars.append('.')
+            chars.append(self.char('0-9'))
+
+            while True:
+                char = self.maybe_char('0-9')
+                if char is None:
+                    break
+
+                chars.append(char)
+
+        rv = float(''.join(chars))
+        return rv
+
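The grammar that `CalcParser` in `calculator.py` walks through (`expression` over `term` over `factor` over `number`) can be hard to see in diff form. The following is a minimal standalone sketch of the same precedence scheme, not the author's `Parser` class: the names `tokenize` and `evaluate` are hypothetical, and a regular-expression tokenizer is swapped in for the character-level matching used in the diff.

```python
import re

# Hypothetical compact evaluator for the same grammar as CalcParser:
#   expression := term (('+' | '-') term)*
#   term       := factor (('*' | '/') factor)*
#   factor     := '(' expression ')' | ['+' | '-'] number
TOKEN = re.compile(r"\s*(?:(\d+(?:\.\d+)?)|(.))")


def tokenize(text):
    """Split the input into floats and single-character operator tokens."""
    tokens = []
    for number, op in TOKEN.findall(text):
        if number:
            tokens.append(float(number))
        elif op.strip():  # skip stray whitespace matched by '.'
            tokens.append(op)
    return tokens


def evaluate(text):
    tokens = tokenize(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        tok = peek()
        if tok is None:
            raise ValueError("unexpected end of input")
        pos += 1
        return tok

    def expression():
        value = term()
        while peek() in ("+", "-"):
            if take() == "+":
                value += term()
            else:
                value -= term()
        return value

    def term():
        value = factor()
        while peek() in ("*", "/"):
            if take() == "*":
                value *= factor()
            else:
                value /= factor()
        return value

    def factor():
        tok = take()
        if tok == "(":
            value = expression()
            if take() != ")":
                raise ValueError("expected ')'")
            return value
        if tok in ("+", "-"):  # optional sign directly in front of a number
            number = take()
            if not isinstance(number, float):
                raise ValueError("expected a number after the sign")
            return number if tok == "+" else -number
        if isinstance(tok, float):
            return tok
        raise ValueError(f"unexpected token {tok!r}")

    result = expression()
    if pos != len(tokens):
        raise ValueError(f"unexpected trailing token {tokens[pos]!r}")
    return result
```

Because `term` is called from inside `expression`, multiplication and division bind tighter than addition and subtraction, and the `while` loops make operators of equal precedence left-associative, so `8 / 4 / 2` is evaluated as `(8 / 4) / 2`.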

ebsynth_utility/ebsynth_utility.py

ADDED

@@ -0,0 +1,185 @@
+import os
+
+from modules.ui import plaintext_to_html
+
+import cv2
+import glob
+from PIL import Image
+
+from extensions.ebsynth_utility.stage1 import ebsynth_utility_stage1,ebsynth_utility_stage1_invert
+from extensions.ebsynth_utility.stage2 import ebsynth_utility_stage2
+from extensions.ebsynth_utility.stage5 import ebsynth_utility_stage5
+from extensions.ebsynth_utility.stage7 import ebsynth_utility_stage7
+from extensions.ebsynth_utility.stage8 import ebsynth_utility_stage8
+from extensions.ebsynth_utility.stage3_5 import ebsynth_utility_stage3_5
+
+
+def x_ceiling(value, step):
+    return -(-value // step) * step
+
+def dump_dict(string, d:dict):
+    for key in d.keys():
+        string += ( key + " : " + str(d[key]) + "\n")
+    return string
+
+class debug_string:
+    txt = ""
+    def print(self, comment):
+        print(comment)
+        self.txt += comment + '\n'
+    def to_string(self):
+        return self.txt
+
+def ebsynth_utility_process(stage_index: int, project_dir:str, original_movie_path:str, frame_width:int, frame_height:int, st1_masking_method_index:int, st1_mask_threshold:float, tb_use_fast_mode:bool, tb_use_jit:bool, clipseg_mask_prompt:str, clipseg_exclude_prompt:str, clipseg_mask_threshold:int, clipseg_mask_blur_size:int, clipseg_mask_blur_size2:int, key_min_gap:int, key_max_gap:int, key_th:float, key_add_last_frame:bool, color_matcher_method:str, st3_5_use_mask:bool, st3_5_use_mask_ref:bool, st3_5_use_mask_org:bool, color_matcher_ref_type:int, color_matcher_ref_image:Image, blend_rate:float, export_type:str, bg_src:str, bg_type:str, mask_blur_size:int, mask_threshold:float, fg_transparency:float, mask_mode:str):
+    args = locals()
+    info = ""
+    info = dump_dict(info, args)
+    dbg = debug_string()
+
+
+    def process_end(dbg, info):
+        return plaintext_to_html(dbg.to_string()), plaintext_to_html(info)
+
+
+    if not os.path.isdir(project_dir):
+        dbg.print("{0} project_dir not found".format(project_dir))
+        return process_end( dbg, info )
+
+    if not os.path.isfile(original_movie_path):
+        dbg.print("{0} original_movie_path not found".format(original_movie_path))
+        return process_end( dbg, info )
+
+    is_invert_mask = False
+    if mask_mode == "Invert":
+        is_invert_mask = True
+
+    frame_path = os.path.join(project_dir , "video_frame")
+    frame_mask_path = os.path.join(project_dir, "video_mask")
+
+    if is_invert_mask:
+        inv_path = os.path.join(project_dir, "inv")
+        os.makedirs(inv_path, exist_ok=True)
+
+        org_key_path = os.path.join(inv_path, "video_key")
+        img2img_key_path = os.path.join(inv_path, "img2img_key")
+        img2img_upscale_key_path = os.path.join(inv_path, "img2img_upscale_key")
+    else:
+        org_key_path = os.path.join(project_dir, "video_key")
+        img2img_key_path = os.path.join(project_dir, "img2img_key")
+        img2img_upscale_key_path = os.path.join(project_dir, "img2img_upscale_key")
+
+    if mask_mode == "None":
+        frame_mask_path = ""
+
+
+    project_args = [project_dir, original_movie_path, frame_path, frame_mask_path, org_key_path, img2img_key_path, img2img_upscale_key_path]
+
+
+    if stage_index == 0:
+        ebsynth_utility_stage1(dbg, project_args, frame_width, frame_height, st1_masking_method_index, st1_mask_threshold, tb_use_fast_mode, tb_use_jit, clipseg_mask_prompt, clipseg_exclude_prompt, clipseg_mask_threshold, clipseg_mask_blur_size, clipseg_mask_blur_size2, is_invert_mask)
+        if is_invert_mask:
+            inv_mask_path = os.path.join(inv_path, "inv_video_mask")
+            ebsynth_utility_stage1_invert(dbg, frame_mask_path, inv_mask_path)
+
+    elif stage_index == 1:
+        ebsynth_utility_stage2(dbg, project_args, key_min_gap, key_max_gap, key_th, key_add_last_frame, is_invert_mask)
+    elif stage_index == 2:
+
+        sample_image = glob.glob( os.path.join(frame_path , "*.png" ) )[0]
+        img_height, img_width, _ = cv2.imread(sample_image).shape
+        if img_width < img_height:
+            re_w = 512
+            re_h = int(x_ceiling( (512 / img_width) * img_height , 64))
+        else:
+            re_w = int(x_ceiling( (512 / img_height) * img_width , 64))
+            re_h = 512
+        img_width = re_w
+        img_height = re_h
+
+        dbg.print("stage 3")
+        dbg.print("")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        dbg.print("1. Go to img2img tab")
+        dbg.print("2. Select [ebsynth utility] in the script combo box")
+        dbg.print("3. Fill in the \"Project directory\" field with [" + project_dir + "]" )
+        dbg.print("4. Select in the \"Mask Mode(Override img2img Mask mode)\" field with [" + ("Invert" if is_invert_mask else "Normal") + "]" )
+        dbg.print("5. I recommend to fill in the \"Width\" field with [" + str(img_width) + "]" )
+        dbg.print("6. I recommend to fill in the \"Height\" field with [" + str(img_height) + "]" )
+        dbg.print("7. I recommend to fill in the \"Denoising strength\" field with lower than 0.35" )
+        dbg.print(" (When using controlnet together, you can put in large values (even 1.0 is possible).)")
+        dbg.print("8. Fill in the remaining configuration fields of img2img. No image and mask settings are required.")
+        dbg.print("9. Drop any image onto the img2img main screen. This is necessary to avoid errors, but does not affect the results of img2img.")
+        dbg.print("10. Generate")
+        dbg.print("(Images are output to [" + img2img_key_path + "])")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        return process_end( dbg, "" )
+
+    elif stage_index == 3:
+        ebsynth_utility_stage3_5(dbg, project_args, color_matcher_method, st3_5_use_mask, st3_5_use_mask_ref, st3_5_use_mask_org, color_matcher_ref_type, color_matcher_ref_image)
+
+    elif stage_index == 4:
+        sample_image = glob.glob( os.path.join(frame_path , "*.png" ) )[0]
+        img_height, img_width, _ = cv2.imread(sample_image).shape
+
+        sample_img2img_key = glob.glob( os.path.join(img2img_key_path , "*.png" ) )[0]
+        img_height_key, img_width_key, _ = cv2.imread(sample_img2img_key).shape
+
+        if is_invert_mask:
+            project_dir = inv_path
+
+        dbg.print("stage 4")
+        dbg.print("")
+
+        if img_height == img_height_key and img_width == img_width_key:
+            dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+            dbg.print("!! The size of frame and img2img_key matched.")
+            dbg.print("!! You can skip this stage.")
+
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        dbg.print("0. Enable the following item")
+        dbg.print("Settings ->")
+        dbg.print(" Saving images/grids ->")
+        dbg.print(" Use original name for output filename during batch process in extras tab")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        dbg.print("1. If \"img2img_upscale_key\" directory already exists in the %s, delete it manually before executing."%(project_dir))
+        dbg.print("2. Go to Extras tab")
+        dbg.print("3. Go to Batch from Directory tab")
+        dbg.print("4. Fill in the \"Input directory\" field with [" + img2img_key_path + "]" )
+        dbg.print("5. Fill in the \"Output directory\" field with [" + img2img_upscale_key_path + "]" )
+        dbg.print("6. Go to Scale to tab")
+        dbg.print("7. Fill in the \"Width\" field with [" + str(img_width) + "]" )
+        dbg.print("8. Fill in the \"Height\" field with [" + str(img_height) + "]" )
+        dbg.print("9. Fill in the remaining configuration fields of Upscaler.")
+        dbg.print("10. Generate")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        return process_end( dbg, "" )
+    elif stage_index == 5:
+        ebsynth_utility_stage5(dbg, project_args, is_invert_mask)
+    elif stage_index == 6:
+
+        if is_invert_mask:
+            project_dir = inv_path
+
+        dbg.print("stage 6")
+        dbg.print("")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        dbg.print("Running ebsynth.(on your self)")
+        dbg.print("Open the generated .ebs under %s and press [Run All] button."%(project_dir))
+        dbg.print("If ""out-*"" directory already exists in the %s, delete it manually before executing."%(project_dir))
+        dbg.print("If multiple .ebs files are generated, run them all.")
+        dbg.print("(I recommend associating the .ebs file with EbSynth.exe.)")
+        dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+        return process_end( dbg, "" )
+    elif stage_index == 7:
+        ebsynth_utility_stage7(dbg, project_args, blend_rate, export_type, is_invert_mask)
+    elif stage_index == 8:
+        if mask_mode != "Normal":
+            dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+            dbg.print("Please reset [configuration]->[etc]->[Mask Mode] to Normal.")
+            dbg.print("!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
+            return process_end( dbg, "" )
+        ebsynth_utility_stage8(dbg, project_args, bg_src, bg_type, mask_blur_size, mask_threshold, fg_transparency, export_type)
+    else:
+        pass
+
+    return process_end( dbg, info )
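The size recommendation printed in stage 3 of `ebsynth_utility.py` scales the shorter side to 512 and rounds the longer side up to a multiple of 64 with `x_ceiling`. A small worked sketch of that arithmetic follows; `recommended_size` is a hypothetical helper name introduced here for illustration, while `x_ceiling` and the constants 512 and 64 are taken from the diff.

```python
def x_ceiling(value, step):
    # Same expression as in the diff: floor-dividing the negated value
    # rounds toward minus infinity, so negating again rounds up.
    return -(-value // step) * step


def recommended_size(img_width, img_height, short_side=512, step=64):
    """Hypothetical wrapper around the stage 3 width/height calculation."""
    if img_width < img_height:
        # Portrait: fix the width, round the scaled height up to a multiple of step.
        return short_side, int(x_ceiling((short_side / img_width) * img_height, step))
    # Landscape or square: fix the height, round the scaled width up.
    return int(x_ceiling((short_side / img_height) * img_width, step)), short_side


# A 1920x1080 frame scales to about 910.2 wide, which rounds up to 960.
print(recommended_size(1920, 1080))
```

Rounding up rather than to the nearest multiple means the recommended frame is never smaller than the proportionally scaled one, at the cost of a slightly changed aspect ratio.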

ebsynth_utility/imgs/clipseg.png

ADDED

ebsynth_utility/imgs/controlnet_0.png

ADDED

ebsynth_utility/imgs/controlnet_1.png

ADDED

ebsynth_utility/imgs/controlnet_option_in_ebsynthutil.png

ADDED

ebsynth_utility/imgs/controlnet_setting.png

ADDED

ebsynth_utility/imgs/sample1.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:9c5458eea82a691a584af26f51d1db728092069a3409c6f9eb2dd14fd2b71173
+size 4824162

ebsynth_utility/imgs/sample2.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:537b8331b74d8ea49ee580aed138d460735ef897ab31ca694031d7a56d99ff72
+size 2920523

ebsynth_utility/imgs/sample3.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c49739c2ef1f2ecaf14453f463e46b1a05de1688ce22b50200563a03b1758ddf
+size 5161880

ebsynth_utility/imgs/sample4.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:62ed25263b6e5328a49460714c0b6e6ac46759921c87c91850f749f5bf068cfa
+size 5617838

ebsynth_utility/imgs/sample5.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4e429cfef8b3ed7829ce3219895fcfdbbe94e1494a2e4dcd87988e03509c8d50
+size 4190467

ebsynth_utility/imgs/sample6.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fb9a9ea8662ef1b7fc7151b987006eef8dd3598e320242bd87a2838ac8733df6
+size 6890883

ebsynth_utility/imgs/sample_anyaheh.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4de4e9b9758cefe28c430f909da9dfc086e5b3510e9d0aa7becab7b4be355447
+size 12159686

ebsynth_utility/imgs/sample_autotag.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4e904d68fc0fd9ac09ce153a9d54e9f1ce9f8db7cf5e96109c496f7e64924c92
+size 7058129

ebsynth_utility/imgs/sample_clipseg.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:17701c5c3a376d3c4cf8ce0acfb991033830d56670ca3178eedd6e671e096af3
+size 10249706
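Each `.mp4` entry above is stored as a three-line Git LFS pointer (`version`, `oid`, `size`) rather than the video itself. A minimal sketch of reading such a pointer into a dictionary is shown below; `parse_lfs_pointer` is a hypothetical helper, and it assumes the simple `key value` layout seen in these pointers without validating anything beyond that.

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file (hypothetical helper, minimal validation)."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        # Each pointer line is "<key> <value>", separated by the first space.
        key, _, value = line.partition(" ")
        fields[key] = value

    # The oid value has the form "<hash algorithm>:<hex digest>".
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algorithm": algorithm,
        "oid": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:9c5458eea82a691a584af26f51d1db728092069a3409c6f9eb2dd14fd2b71173
size 4824162
"""
info = parse_lfs_pointer(pointer)
print(info["oid_algorithm"], info["size"])
```

The `size` field makes it possible to check, for example, that `sample1.mp4` is about 4.8 MB without downloading it.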

ebsynth_utility/install.py

ADDED

@@ -0,0 +1,24 @@
+import launch
+
+def update_transparent_background():
+    from importlib.metadata import version as meta_version
+    from packaging import version
+    v = meta_version("transparent-background")
+    print("current transparent-background " + v)
+    if version.parse(v) < version.parse('1.2.3'):
+        launch.run_pip("install -U transparent-background", "update transparent-background version for Ebsynth Utility")
+
+if not launch.is_installed("transparent_background"):
+    launch.run_pip("install transparent-background", "requirements for Ebsynth Utility")
+
+update_transparent_background()
+
+if not launch.is_installed("IPython"):
+    launch.run_pip("install ipython", "requirements for Ebsynth Utility")
+
+if not launch.is_installed("seaborn"):
+    launch.run_pip("install ""seaborn>=0.11.0""", "requirements for Ebsynth Utility")
+
+if not launch.is_installed("color_matcher"):
+    launch.run_pip("install color-matcher", "requirements for Ebsynth Utility")
+
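`install.py` upgrades `transparent-background` when the installed version is older than 1.2.3, comparing parsed versions rather than raw strings. The sketch below illustrates why that matters, using only the standard library. The helpers `as_tuple`, `needs_update`, and `installed_version` are hypothetical, and the tuple comparison is a simplified stand-in for `packaging.version.parse` that only handles plain dotted integers such as `1.2.3`.

```python
from importlib.metadata import PackageNotFoundError, version as meta_version


def as_tuple(version_string):
    # Simplified stand-in for packaging.version.parse:
    # handles only dotted integers like "1.2.3" (no "rc1", "post1", etc.).
    return tuple(int(part) for part in version_string.split("."))


def needs_update(installed, minimum):
    """Return True when the installed version is older than the minimum."""
    return as_tuple(installed) < as_tuple(minimum)


def installed_version(dist_name):
    """Return the installed version string, or None if the package is absent."""
    try:
        return meta_version(dist_name)
    except PackageNotFoundError:
        return None


# Compared as strings, "1.10.0" sorts before "1.2.3"; compared numerically it does not.
print("1.10.0" < "1.2.3", needs_update("1.10.0", "1.2.3"))
```

Returning `None` for a missing distribution is one way to avoid an exception from `importlib.metadata.version`; the script in the diff instead installs the package first and then queries its version.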

ebsynth_utility/sample/add_token.txt

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

[
    {
        "target":"smile",
        "min_score":0.5,
        "token": ["lottalewds_v0", "1.2"],
        "type":"lora"
    },
    {
        "target":"smile",
        "min_score":0.5,
        "token": ["anyahehface", "score*1.2"],
        "type":"normal"
    },
    {
        "target":"smile",
        "min_score":0.5,
        "token": ["wicked smug", "score*1.2"],
        "type":"normal"
    },
    {
        "target":"smile",
        "min_score":0.5,
        "token": ["half closed eyes", "0.2 + score*0.3"],
        "type":"normal"
    },

    {
        "target":"test_token",
        "min_score":0.8,
        "token": ["lora_name_A", "0.5"],
        "type":"lora"
    },
    {
        "target":"test_token",
        "min_score":0.5,
        "token": ["bbbb", "score - 0.1"],
        "type":"normal"
    },
    {
        "target":"test_token2",
        "min_score":0.8,
        "token": ["hypernet_name_A", "score"],
        "type":"hypernet"
    },
    {
        "target":"test_token3",
        "min_score":0.0,
        "token": ["dddd", "score"],
        "type":"normal"
    }
]
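The sample rules in add_token.txt pair a detected tag (`target`) with a threshold (`min_score`) and a token whose weight is an arithmetic expression in `score`. The sketch below shows one way such rules could be turned into prompt fragments. It is not the extension's own code: the function names `eval_weight` and `apply_rules` are hypothetical, the expression evaluator uses Python's `ast` module rather than the repository's calculator.py, and the output forms `<lora:name:weight>`, `<hypernet:name:weight>` and `(token:weight)` are assumed from the Stable Diffusion web UI prompt syntax.

```python
import ast
import json
import operator

# Arithmetic operators allowed in a weight expression such as "0.2 + score*0.3".
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def eval_weight(expr, score):
    """Evaluate a weight expression in which the name `score` is the tag confidence."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return float(node.value)
        if isinstance(node, ast.Name) and node.id == "score":
            return float(score)
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr, mode="eval"))


def apply_rules(rules, tag_scores):
    """Return a prompt fragment for every rule whose target tag meets min_score."""
    fragments = []
    for rule in rules:
        score = tag_scores.get(rule["target"])
        if score is None or score < rule["min_score"]:
            continue  # tag not detected, or detected below the threshold
        name, expr = rule["token"]
        weight = round(eval_weight(expr, score), 2)
        if rule["type"] == "lora":
            fragments.append(f"<lora:{name}:{weight}>")  # assumed output form
        elif rule["type"] == "hypernet":
            fragments.append(f"<hypernet:{name}:{weight}>")  # assumed output form
        else:
            fragments.append(f"({name}:{weight})")  # assumed output form
    return fragments


# Three of the sample rules, with made-up tag scores for illustration.
rules = json.loads("""
[
  {"target": "test_token", "min_score": 0.8, "token": ["lora_name_A", "0.5"], "type": "lora"},
  {"target": "test_token", "min_score": 0.5, "token": ["bbbb", "score - 0.1"], "type": "normal"},
  {"target": "test_token2", "min_score": 0.8, "token": ["hypernet_name_A", "score"], "type": "hypernet"}
]
""")

fragments = apply_rules(rules, {"test_token": 0.9, "test_token2": 0.6})
print(fragments)
```

With these illustrative scores, the `test_token2` rule is skipped because 0.6 is below its `min_score` of 0.8. Restricting the evaluator to a whitelist of AST nodes avoids calling `eval` on text read from a user-editable file.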

ebsynth_utility/sample/blacklist.txt

ADDED

|

@@ -0,0 +1,10 @@


motion_blur
blurry
realistic
depth_of_field
mountain
tree
water
underwater
tongue
tongue_out
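The sample blacklist.txt holds one tag per line. A minimal sketch of how such a list could be applied follows, assuming it is used to drop auto-detected tags by exact name before a prompt is built. That usage is an assumption, and `load_blacklist` and `filter_tags` are hypothetical helper names, not functions from the extension.

```python
def load_blacklist(text):
    """Parse blacklist text: one tag per line, blank lines ignored."""
    return {line.strip() for line in text.splitlines() if line.strip()}


def filter_tags(tag_scores, blacklist):
    """Drop every tag whose name appears in the blacklist (exact match, assumed)."""
    return {tag: score for tag, score in tag_scores.items() if tag not in blacklist}


# First three entries of the sample file, with made-up tag scores.
blacklist = load_blacklist("motion_blur\nblurry\nrealistic\n")
kept = filter_tags({"smile": 0.9, "blurry": 0.7, "tree_branch": 0.4}, blacklist)
print(kept)
```

Because the match is exact in this sketch, a tag such as `tree_branch` would survive even though `tree` is on the sample list; whether the extension matches exactly or by substring is not shown in this diff.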

ebsynth_utility/scripts/__pycache__/custom_script.cpython-310.pyc

ADDED

|

Binary file (25.8 kB).

|

|

|

ebsynth_utility/scripts/__pycache__/ui.cpython-310.pyc

ADDED

|

Binary file (10.2 kB).

|

|

|

ebsynth_utility/scripts/custom_script.py

ADDED

|

@@ -0,0 +1,1012 @@
