pipeline build up

Browse files- Experiments/Baseline-Experimental.ipynb +0 -85

- Experiments/Baseline/GUI.py +24 -0

- Experiments/Baseline/baseline.py +12 -0

- Experiments/Baseline/baseline_utils.py +107 -0

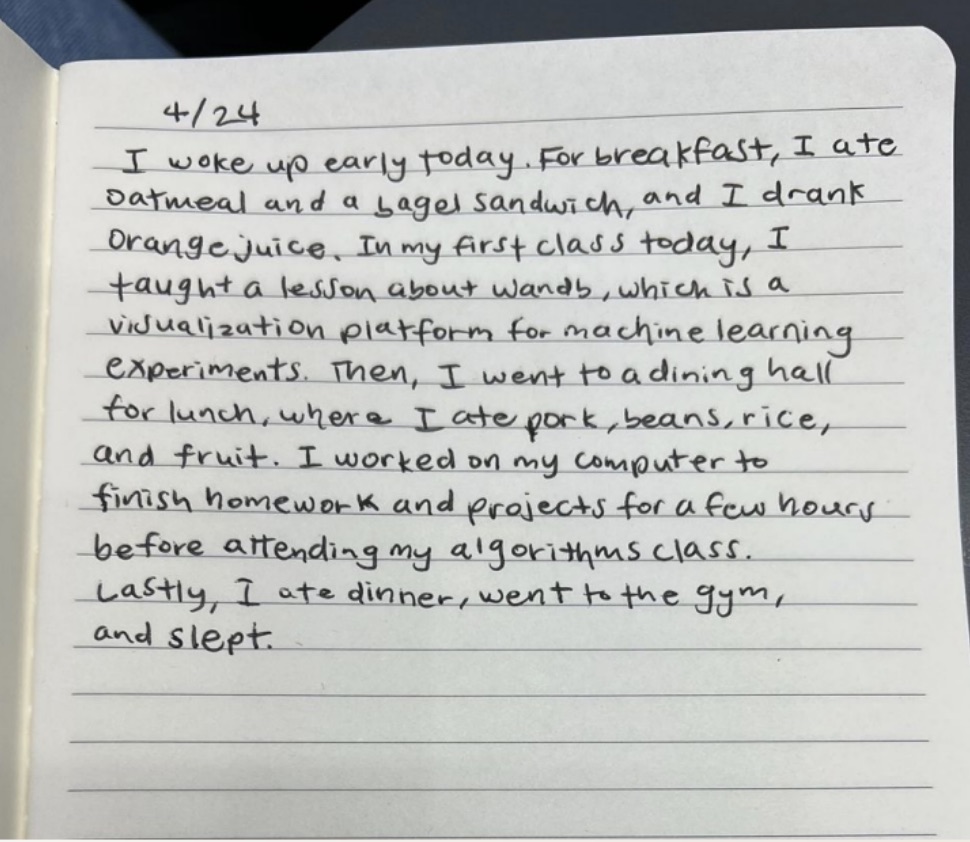

- Experiments/Baseline/images/test_sample.jpeg +0 -0

- Experiments/Baseline/images/writer.jpg +0 -0

- Source/requirements.txt +0 -0

- requirements.txt +6 -0

Experiments/Baseline-Experimental.ipynb

DELETED

|

@@ -1,85 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"cells": [

|

| 3 |

-

{

|

| 4 |

-

"cell_type": "code",

|

| 5 |

-

"execution_count": null,

|

| 6 |

-

"id": "initial_id",

|

| 7 |

-

"metadata": {

|

| 8 |

-

"collapsed": true

|

| 9 |

-

},

|

| 10 |

-

"outputs": [],

|

| 11 |

-

"source": [

|

| 12 |

-

"# Utilize the Google Cloud Vision API to recognize text in the input images (diary images), https://cloud.google.com/vision.\n"

|

| 13 |

-

]

|

| 14 |

-

},

|

| 15 |

-

{

|

| 16 |

-

"cell_type": "code",

|

| 17 |

-

"execution_count": null,

|

| 18 |

-

"outputs": [],

|

| 19 |

-

"source": [

|

| 20 |

-

"# Utilize the PaLM 2 Bison for Text model to conduct NLP tasks such as text summarization and condensing on the diary text, https://ai.google.dev/palm_docs/palm.\n"

|

| 21 |

-

],

|

| 22 |

-

"metadata": {

|

| 23 |

-

"collapsed": false

|

| 24 |

-

},

|

| 25 |

-

"id": "204930c13fd1e579"

|

| 26 |

-

},

|

| 27 |

-

{

|

| 28 |

-

"cell_type": "code",

|

| 29 |

-

"execution_count": null,

|

| 30 |

-

"outputs": [],

|

| 31 |

-

"source": [

|

| 32 |

-

"# Utilize the Gemini 1.0 Pro Vision to input an image of the diary writer, and output a textual description of the image, https://ai.google.dev/gemini-api/docs/models/gemini.\n"

|

| 33 |

-

],

|

| 34 |

-

"metadata": {

|

| 35 |

-

"collapsed": false

|

| 36 |

-

},

|

| 37 |

-

"id": "7f0c7d788b8de177"

|

| 38 |

-

},

|

| 39 |

-

{

|

| 40 |

-

"cell_type": "code",

|

| 41 |

-

"execution_count": null,

|

| 42 |

-

"outputs": [],

|

| 43 |

-

"source": [

|

| 44 |

-

"# Now that you have text from the diary and text describing the diary writer, you can utilize the SDXL-Turbo stable diffusion model to generate images https://huggingface.co/stabilityai/sdxl-turbo. You can try to output several images for a diary entry. Analyze how accurate the results, and think about what could be improved.\n"

|

| 45 |

-

],

|

| 46 |

-

"metadata": {

|

| 47 |

-

"collapsed": false

|

| 48 |

-

},

|

| 49 |

-

"id": "c475ca58dea760da"

|

| 50 |

-

},

|

| 51 |

-

{

|

| 52 |

-

"cell_type": "code",

|

| 53 |

-

"execution_count": null,

|

| 54 |

-

"outputs": [],

|

| 55 |

-

"source": [

|

| 56 |

-

"# You can create a web or mobile-based GUI so that users can experience your solution. Suggested libraries include https://www.gradio.app/ or https://streamlit.io/.\n"

|

| 57 |

-

],

|

| 58 |

-

"metadata": {

|

| 59 |

-

"collapsed": false

|

| 60 |

-

},

|

| 61 |

-

"id": "ee3a3a8d4027bae3"

|

| 62 |

-

}

|

| 63 |

-

],

|

| 64 |

-

"metadata": {

|

| 65 |

-

"kernelspec": {

|

| 66 |

-

"display_name": "Python 3",

|

| 67 |

-

"language": "python",

|

| 68 |

-

"name": "python3"

|

| 69 |

-

},

|

| 70 |

-

"language_info": {

|

| 71 |

-

"codemirror_mode": {

|

| 72 |

-

"name": "ipython",

|

| 73 |

-

"version": 2

|

| 74 |

-

},

|

| 75 |

-

"file_extension": ".py",

|

| 76 |

-

"mimetype": "text/x-python",

|

| 77 |

-

"name": "python",

|

| 78 |

-

"nbconvert_exporter": "python",

|

| 79 |

-

"pygments_lexer": "ipython2",

|

| 80 |

-

"version": "2.7.6"

|

| 81 |

-

}

|

| 82 |

-

},

|

| 83 |

-

"nbformat": 4,

|

| 84 |

-

"nbformat_minor": 5

|

| 85 |

-

}

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Experiments/Baseline/GUI.py

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from PIL import Image

|

| 3 |

+

|

| 4 |

+

# You can create a web or mobile-based GUI so that users can experience your solution. Suggested libraries include https://www.gradio.app/ or https://streamlit.io/.

|

| 5 |

+

st.title('Handwritten Diary to Cartoon Book')

|

| 6 |

+

uploaded_diary = st.file_uploader("Upload your diary image", type=["png", "jpg", "jpeg"])

|

| 7 |

+

uploaded_writer_image = st.file_uploader("Upload your photo", type=["png", "jpg", "jpeg"])

|

| 8 |

+

|

| 9 |

+

if uploaded_diary and uploaded_writer_image:

|

| 10 |

+

st.write("Analyzing your diary...")

|

| 11 |

+

|

| 12 |

+

diary_text = detect_text_in_image(uploaded_diary)

|

| 13 |

+

summarized_text = summarize_diary_text(diary_text)

|

| 14 |

+

|

| 15 |

+

st.write(f"Summarized Diary Text: {summarized_text}")

|

| 16 |

+

|

| 17 |

+

writer_description = analyze_writer_image(uploaded_writer_image)

|

| 18 |

+

st.write(f"Diary Writer Description: {writer_description}")

|

| 19 |

+

|

| 20 |

+

# Generate cartoon image

|

| 21 |

+

prompt = f"{summarized_text}, featuring a person who {writer_description}"

|

| 22 |

+

generated_image = generate_image(prompt)

|

| 23 |

+

|

| 24 |

+

st.image(generated_image, caption="Generated Cartoon Image")

|

Experiments/Baseline/baseline.py

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from baseline_utils import *

|

| 2 |

+

from keys.keys import *

|

| 3 |

+

|

| 4 |

+

diary_image_path = "images/test_sample.jpeg"

|

| 5 |

+

writer_image_path = "images/writer.jpg"

|

| 6 |

+

credentials_path = "keys/service_account_credentials.json"

|

| 7 |

+

|

| 8 |

+

# Detect text from the image using the provided credentials

|

| 9 |

+

detected_text = detect_text_in_image(diary_image_path, credentials_path)

|

| 10 |

+

diary_summary = summarize_diary_text(detected_text, open_ai_keys)

|

| 11 |

+

writer_summary = analyze_writer_image(writer_image_path, gemini_keys)

|

| 12 |

+

generate_image(diary_summary, writer_summary)

|

Experiments/Baseline/baseline_utils.py

ADDED

|

@@ -0,0 +1,107 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import openai

|

| 2 |

+

from google.cloud import vision

|

| 3 |

+

from google.oauth2 import service_account

|

| 4 |

+

import io

|

| 5 |

+

import google.generativeai as genai

|

| 6 |

+

from diffusers import AutoPipelineForText2Image

|

| 7 |

+

import torch

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

# Utilize the Google Cloud Vision API to recognize text in the

|

| 11 |

+

# input images (diary images), https://cloud.google.com/vision.

|

| 12 |

+

def detect_text_in_image(image_path, credentials_path):

|

| 13 |

+

# Load the service account key from the credentials JSON file

|

| 14 |

+

credentials = service_account.Credentials.from_service_account_file(credentials_path)

|

| 15 |

+

|

| 16 |

+

# Create a Vision API client using the credentials

|

| 17 |

+

client = vision.ImageAnnotatorClient(credentials=credentials)

|

| 18 |

+

|

| 19 |

+

# Open the image file

|

| 20 |

+

with io.open(image_path, 'rb') as image_file:

|

| 21 |

+

content = image_file.read()

|

| 22 |

+

|

| 23 |

+

# Create an image object for the Vision API

|

| 24 |

+

image = vision.Image(content=content)

|

| 25 |

+

|

| 26 |

+

# Use the Vision API to detect text

|

| 27 |

+

response = client.text_detection(image=image)

|

| 28 |

+

texts = response.text_annotations

|

| 29 |

+

|

| 30 |

+

# Check for errors in the response

|

| 31 |

+

if response.error.message:

|

| 32 |

+

raise Exception(f'{response.error.message}')

|

| 33 |

+

|

| 34 |

+

# Return the detected text or an empty string

|

| 35 |

+

return texts[0].description if texts else ''

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

# Utilize the PaLM 2 Bison for Text model to conduct NLP tasks such as

|

| 39 |

+

# text summarization and condensing on the diary text, https://ai.google.dev/palm_docs/palm.

|

| 40 |

+

def summarize_diary_text(text, api_key):

|

| 41 |

+

# Initialize the OpenAI client

|

| 42 |

+

client = openai.Client(api_key=api_key)

|

| 43 |

+

|

| 44 |

+

# Use the client to call the chat completion API

|

| 45 |

+

response = client.chat.completions.create(

|

| 46 |

+

model="gpt-4", # Use GPT-4

|

| 47 |

+

messages=[

|

| 48 |

+

{"role": "system", "content": "You are a helpful assistant."},

|

| 49 |

+

{"role": "user", "content": f"Summarize the following diary entry: {text}"}

|

| 50 |

+

],

|

| 51 |

+

max_tokens=150,

|

| 52 |

+

temperature=0.7,

|

| 53 |

+

n=1 # Number of completions to generate

|

| 54 |

+

)

|

| 55 |

+

|

| 56 |

+

# Extract the summary from the response

|

| 57 |

+

return response.choices[0].message.content

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

# Utilize the Gemini 1.0 Pro Vision to input an image of the diary writer,

|

| 61 |

+

# and output a textual description of the image,

|

| 62 |

+

# https://ai.google.dev/gemini-api/docs/models/gemini.

|

| 63 |

+

# Mock example assuming an API request to Gemini

|

| 64 |

+

def analyze_writer_image(image_path, api_key):

|

| 65 |

+

genai.configure(api_key=api_key)

|

| 66 |

+

model = genai.GenerativeModel("gemini-1.5-flash")

|

| 67 |

+

myfile = genai.upload_file(image_path)

|

| 68 |

+

result = model.generate_content(

|

| 69 |

+

[myfile, "\n\n", "Can you give a textual description of the image?"]

|

| 70 |

+

)

|

| 71 |

+

return result.text

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

# Now that you have text from the diary and text describing the diary writer,

|

| 75 |

+

# you can utilize the SDXL-Turbo stable diffusion model to generate

|

| 76 |

+

# images https://huggingface.co/stabilityai/sdxl-turbo.

|

| 77 |

+

# You can try to output several images for a diary entry. Analyze how accurate the results,

|

| 78 |

+

# and think about what could be improved.

|

| 79 |

+

def generate_image(diary_text, writer_description):

|

| 80 |

+

pipe = AutoPipelineForText2Image.from_pretrained(

|

| 81 |

+

"stabilityai/sdxl-turbo",

|

| 82 |

+

torch_dtype=torch.float16,

|

| 83 |

+

variant="fp16",

|

| 84 |

+

cache_dir="./SDXL-Turbo")

|

| 85 |

+

|

| 86 |

+

# Check for available device: CUDA, MPS, or CPU

|

| 87 |

+

if torch.cuda.is_available():

|

| 88 |

+

device = "cuda"

|

| 89 |

+

print("Using CUDA backend.")

|

| 90 |

+

elif torch.backends.mps.is_available():

|

| 91 |

+

device = "mps"

|

| 92 |

+

print("Using MPS backend.")

|

| 93 |

+

else:

|

| 94 |

+

device = "cpu"

|

| 95 |

+

print("CUDA and MPS not available. Falling back to CPU.")

|

| 96 |

+

|

| 97 |

+

# Move the model to the selected device

|

| 98 |

+

pipe = pipe.to(device)

|

| 99 |

+

|

| 100 |

+

# Generate the image with a simple prompt

|

| 101 |

+

prompt = f'Writer Description: {writer_description} \n\n Diary: {diary_text}'

|

| 102 |

+

print(prompt)

|

| 103 |

+

image = pipe(prompt=prompt, num_inference_steps=1, guidance_scale=0.0).images[0]

|

| 104 |

+

|

| 105 |

+

# Save the generated image

|

| 106 |

+

image.save("generated_image.png")

|

| 107 |

+

|

Experiments/Baseline/images/test_sample.jpeg

ADDED

|

Experiments/Baseline/images/writer.jpg

ADDED

|

Source/requirements.txt

DELETED

|

File without changes

|

requirements.txt

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

openai

|

| 2 |

+

google-cloud-vision

|

| 3 |

+

google-auth

|

| 4 |

+

google-generativeai

|

| 5 |

+

diffusers

|

| 6 |

+

torch

|