test langchain-chatglm

#3

by

fb700

- opened

- .gitignore +3 -0

- README.md +110 -7

- app.py +207 -297

- app_modules/__pycache__/presets.cpython-310.pyc +0 -0

- app_modules/__pycache__/presets.cpython-39.pyc +0 -0

- app_modules/overwrites.py +49 -0

- app_modules/presets.py +82 -0

- app_modules/utils.py +227 -0

- assets/Kelpy-Codos.js +76 -0

- assets/custom.css +190 -0

- assets/custom.js +1 -0

- assets/favicon.ico +0 -0

- clc/__init__.py +11 -0

- clc/__pycache__/__init__.cpython-310.pyc +0 -0

- clc/__pycache__/__init__.cpython-39.pyc +0 -0

- clc/__pycache__/gpt_service.cpython-310.pyc +0 -0

- clc/__pycache__/gpt_service.cpython-39.pyc +0 -0

- clc/__pycache__/langchain_application.cpython-310.pyc +0 -0

- clc/__pycache__/langchain_application.cpython-39.pyc +0 -0

- clc/__pycache__/source_service.cpython-310.pyc +0 -0

- clc/__pycache__/source_service.cpython-39.pyc +0 -0

- clc/config.py +18 -0

- clc/gpt_service.py +62 -0

- clc/langchain_application.py +97 -0

- clc/source_service.py +86 -0

- corpus/zh_wikipedia/README.md +114 -0

- corpus/zh_wikipedia/chinese_t2s.py +82 -0

- corpus/zh_wikipedia/clean_corpus.py +88 -0

- corpus/zh_wikipedia/wiki_process.py +46 -0

- create_knowledge.py +79 -0

- docs/added/马保国.txt +2 -0

- docs/姚明.txt +4 -0

- docs/王治郅.txt +4 -0

- docs/科比.txt +5 -0

- images/computing.png +0 -0

- images/web_demos/v1.png +0 -0

- images/web_demos/v2.png +0 -0

- images/web_demos/v3.png +0 -0

- images/wiki_process.png +0 -0

- main.py +227 -0

- requirements.txt +10 -8

- resources/OpenCC-1.1.6-cp310-cp310-manylinux1_x86_64.whl +0 -0

- tests/test_duckduckgo_search.py +16 -0

- tests/test_duckpy.py +15 -0

- tests/test_gradio_slient.py +19 -0

- tests/test_langchain.py +36 -0

- tests/test_vector_store.py +11 -0

.gitignore

CHANGED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

.idea

|

| 2 |

+

cache

|

| 3 |

+

docs/zh_wikipedia

|

README.md

CHANGED

|

@@ -1,13 +1,116 @@

|

|

| 1 |

---

|

| 2 |

-

|

| 3 |

-

|

| 4 |

colorFrom: yellow

|

| 5 |

colorTo: yellow

|

| 6 |

-

|

| 7 |

-

sdk_version: 3.38.0

|

| 8 |

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

license: apache-2.0

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

| 1 |

---

|

| 2 |

+

license: openrail

|

| 3 |

+

title: 'Chinese-LangChain '

|

| 4 |

+

sdk: gradio

|

| 5 |

+

emoji: 🚀

|

| 6 |

colorFrom: yellow

|

| 7 |

colorTo: yellow

|

| 8 |

+

pinned: true

|

|

|

|

| 9 |

app_file: app.py

|

|

|

|

|

|

|

| 10 |

---

|

| 11 |

|

| 12 |

+

# Chinese-LangChain

|

| 13 |

+

|

| 14 |

+

> Chinese-LangChain: a Chinese-language LangChain project that uses ChatGLM-6b and LangChain for local knowledge-base retrieval and answer generation

|

| 15 |

+

|

| 16 |

+

https://github.com/yanqiangmiffy/Chinese-LangChain

|

| 17 |

+

|

| 18 |

+

Also known as: 小必应 (Little Bing), Q.Talk, 强聊, QiangTalk

|

| 19 |

+

|

| 20 |

+

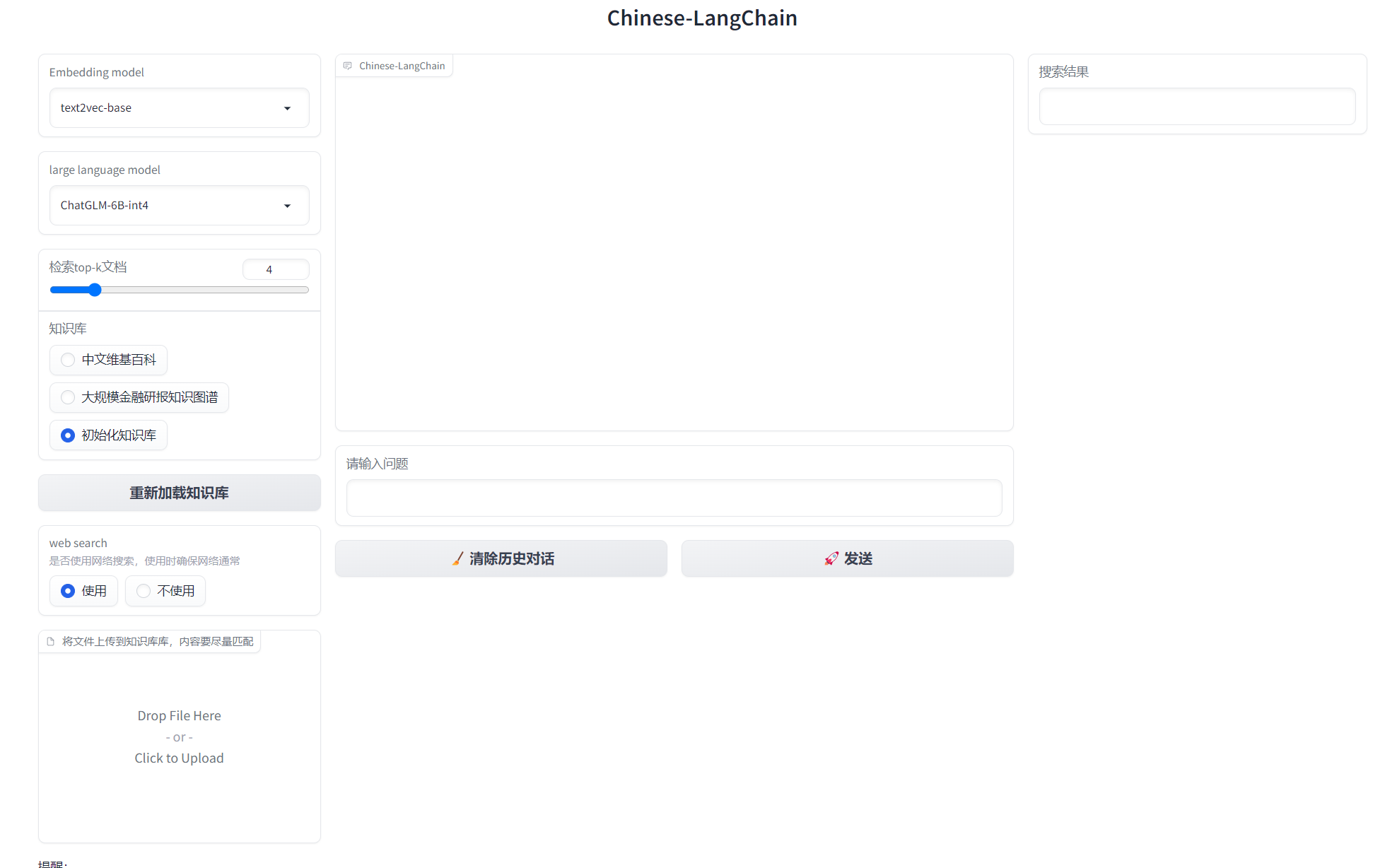

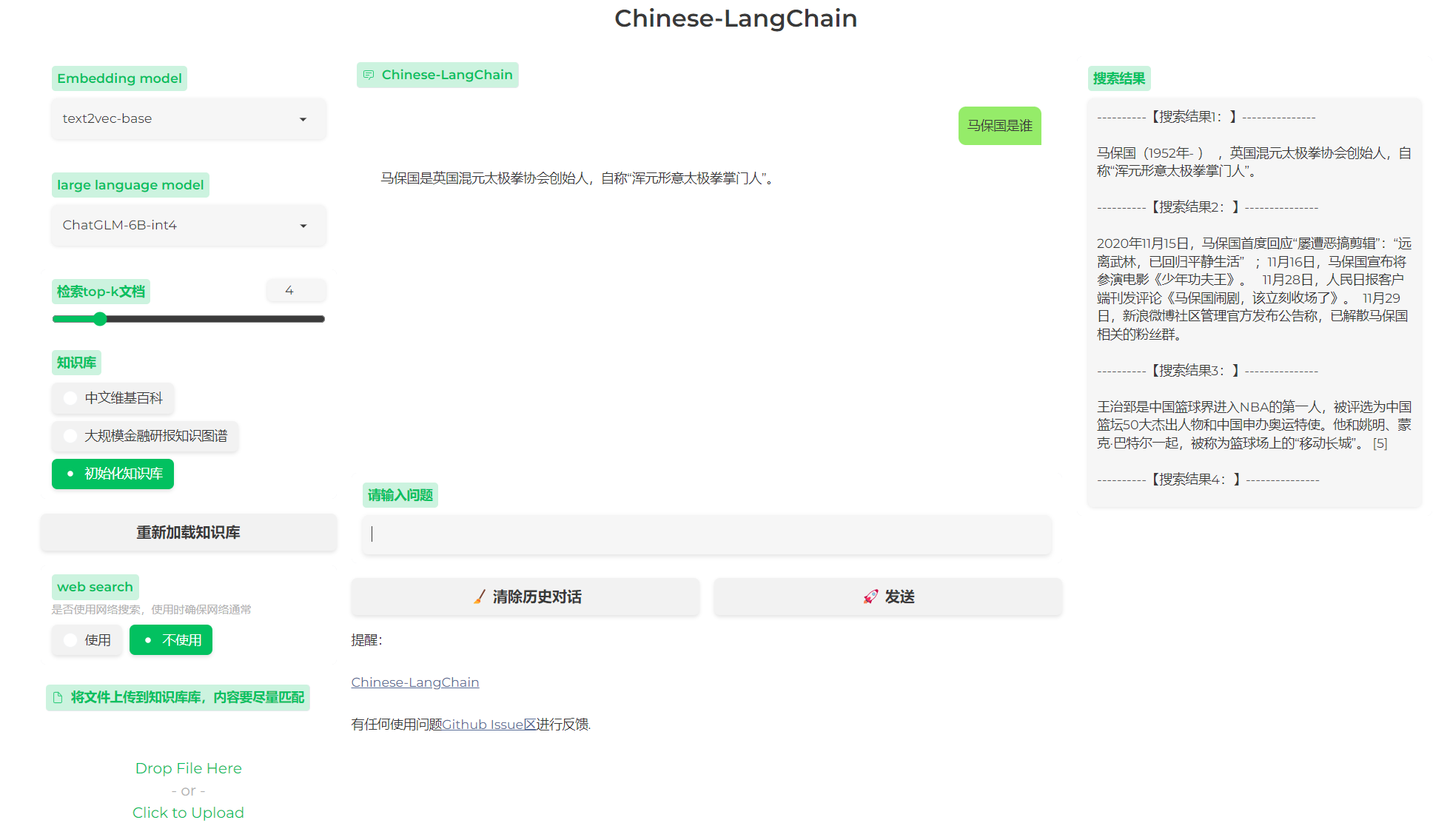

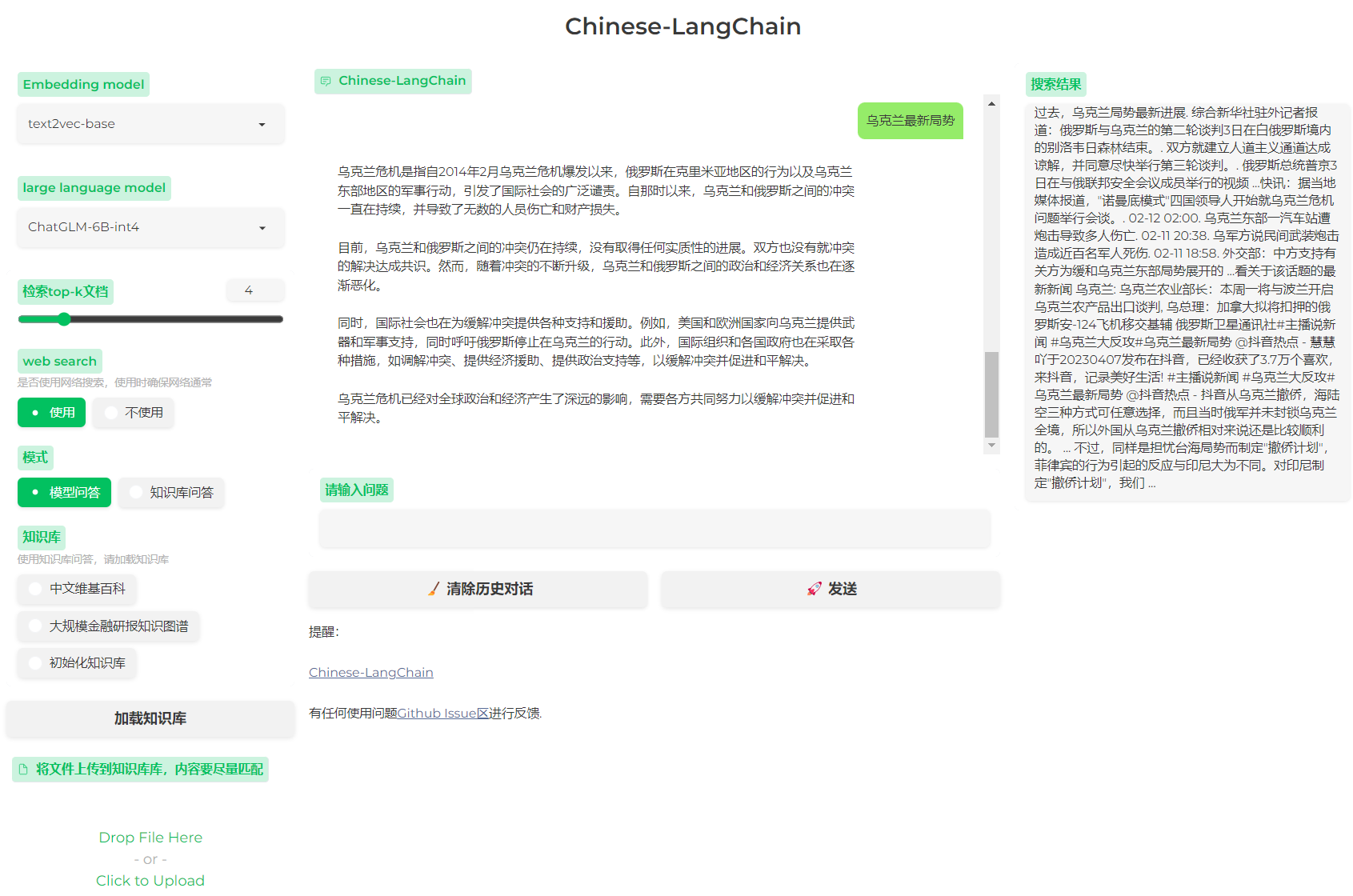

## 🔥 Demo

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

## 🚋 Usage

|

| 26 |

+

|

| 27 |

+

- Select a knowledge base and ask questions in its domain

|

| 28 |

+

|

| 29 |

+

## 🏗️ Deployment

|

| 30 |

+

|

| 31 |

+

### Hardware requirements

|

| 32 |

+

|

| 33 |

+

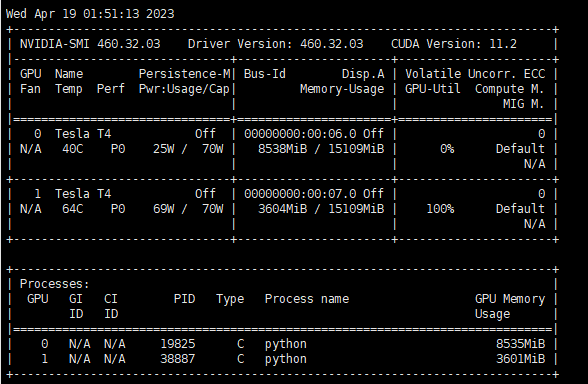

- GPU memory: 12 GB (about 9 GB is enough in practice)

|

| 34 |

+

- RAM: 32 GB

|

| 35 |

+

|

| 36 |

+

### Dependencies

|

| 37 |

+

|

| 38 |

+

```text

|

| 39 |

+

langchain

|

| 40 |

+

gradio

|

| 41 |

+

transformers

|

| 42 |

+

sentence_transformers

|

| 43 |

+

faiss-cpu

|

| 44 |

+

unstructured

|

| 45 |

+

duckduckgo_search

|

| 46 |

+

mdtex2html

|

| 47 |

+

chardet

|

| 48 |

+

cchardet

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

### Launch Gradio

|

| 52 |

+

|

| 53 |

+

```shell

|

| 54 |

+

python main.py

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

## 🚀 Features

|

| 58 |

+

|

| 59 |

+

- 🔭 2023/04/20 Added switching between model Q&A and retrieval Q&A modes

|

| 60 |

+

- 💻 2023/04/20 Thanks to Hugging Face for the free compute; added a HuggingFace

|

| 61 |

+

Spaces online demo: [🤗 DEMO](https://huggingface.co/spaces/ChallengeHub/Chinese-LangChain)

|

| 62 |

+

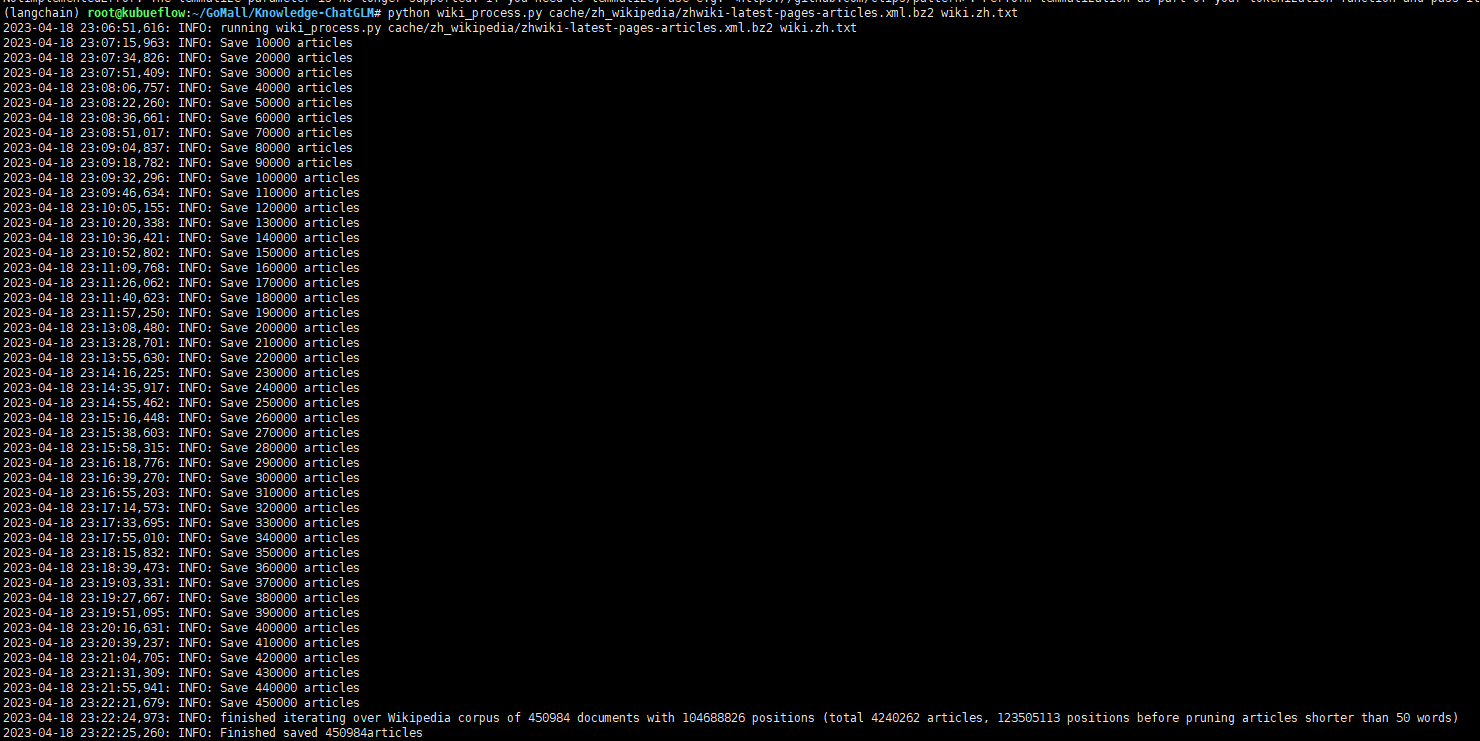

- 🧫 2023/04/19 Released a preprocessed corpus of 450,000 Wikipedia texts and the corresponding FAISS index vectors

|

| 63 |

+

- 🐯 2023/04/19 Adopted the ChuanhuChatGPT skin

|

| 64 |

+

- 📱 2023/04/19 Added web search; make sure the network connection is working! (Thanks to [@wanghao07456](https://github.com/wanghao07456) for the idea)

|

| 65 |

+

- 📚 2023/04/18 Added knowledge-base selection to the web UI

|

| 66 |

+

- 🚀 2023/04/18 Fixed the error raised when inference exceeded the 5 s timeout

|

| 67 |

+

- 🎉 2023/04/17 Added upload and parsing of multiple document types: pdf, docx, ppt, etc.

|

| 68 |

+

- 🎉 2023/04/17 Added incremental knowledge updates

|

| 69 |

+

|

| 70 |

+

[//]: # (- Support comparing retrieval results with LLM-generated results)

|

| 71 |

+

|

| 72 |

+

## 🧰 Knowledge bases

|

| 73 |

+

|

| 74 |

+

### Building a knowledge base

|

| 75 |

+

|

| 76 |

+

- Wikipedia-zh

|

| 77 |

+

|

| 78 |

+

> For details, see corpus/zh_wikipedia/README.md

|

| 79 |

+

|

| 80 |

+

### Knowledge-base vector indexes

|

| 81 |

+

|

| 82 |

+

| Knowledge-base data | FAISS vectors |

|

| 83 |

+

|-------------------------------------------------------------------------------|----------------------------------------------------------------------|

|

| 84 |

+

| Chinese Wikipedia data through April, 450,000 entries | Link: https://pan.baidu.com/s/1VQeA_dq92fxKOtLL3u3Zpg?pwd=l3pn Extraction code: l3pn |

|

| 85 |

+

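| 1.3 million processed Chinese Wikipedia entries through last September, with the corresponding FAISS vector files, from @[yubuyuabc](https://github.com/yubuyuabc) | Link: https://pan.baidu.com/s/1Yls_Qtg15W1gneNuFP9O_w?pwd=exij Extraction code: exij |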

|

| 86 |

+

| 💹 [Large-scale financial research report knowledge graph](http://openkg.cn/dataset/fr2kg) | Link: https://pan.baidu.com/s/1FcIH5Fi3EfpS346DnDu51Q?pwd=ujjv Extraction code: ujjv |

|

| 87 |

+

|

| 88 |

+

## 🔨 TODO

|

| 89 |

+

|

| 90 |

+

* [x] Support conversation context

|

| 91 |

+

* [x] Support incremental knowledge updates

|

| 92 |

+

* [x] Support loading different knowledge bases

|

| 93 |

+

* [x] Support comparing retrieval results with LLM-generated results

|

| 94 |

+

* [ ] Support comparing retrieval-augmented answers with raw LLM answers

|

| 95 |

+

* [ ] Support model Q&A and retrieval Q&A

|

| 96 |

+

* [ ] Filter and rank retrieval results

|

| 97 |

+

* [x] Integrate web search results

|

| 98 |

+

* [ ] Fix model initialization issues

|

| 99 |

+

* [ ] Add a non-LangChain strategy

|

| 100 |

+

* [ ] Display the current conversation strategy

|

| 101 |

+

* [ ] Build a domain-specific (non-general) knowledge base for a vertical business scenario

|

| 102 |

+

|

| 103 |

+

## Community

|

| 104 |

+

|

| 105 |

+

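Suggestions and bad cases are welcome. The project is still incomplete; feel free to join the group chat for discussion, and PRs are welcome too.<br>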

|

| 106 |

+

|

| 107 |

+

<figure class="third">

|

| 108 |

+

<img src="https://raw.githubusercontent.com/yanqiangmiffy/Chinese-LangChain/master/images/ch.jpg" width="180px"><img src="https://raw.githubusercontent.com/yanqiangmiffy/Chinese-LangChain/master/images/chatgroup.jpg" width="180px" height="270px"><img src="https://raw.githubusercontent.com/yanqiangmiffy/Chinese-LangChain/master/images/personal.jpg" width="180px">

|

| 109 |

+

</figure>

|

| 110 |

+

|

| 111 |

+

## ❤️ References

|

| 112 |

+

|

| 113 |

+

- Web UI reference: https://github.com/thomas-yanxin/LangChain-ChatGLM-Webui

|

| 114 |

+

- Knowledge-based Q&A reference: https://github.com/imClumsyPanda/langchain-ChatGLM

|

| 115 |

+

- LLM: https://github.com/THUDM/ChatGLM-6B

|

| 116 |

+

- CSS: https://huggingface.co/spaces/JohnSmith9982/ChuanhuChatGPT

|

app.py

CHANGED

|

@@ -1,311 +1,221 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

# import gradio as gr

|

| 4 |

|

| 5 |

-

|

| 6 |

-

|

| 7 |

|

| 8 |

-

# %%writefile demo-4bit.py

|

| 9 |

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

|

| 14 |

-

import gradio as gr

|

| 15 |

-

import mdtex2html

|

| 16 |

-

import torch

|

| 17 |

-

from loguru import logger

|

| 18 |

-

from transformers import AutoModel, AutoTokenizer

|

| 19 |

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

time.tzset() # type: ignore # pylint: disable=no-member

|

| 24 |

-

except Exception:

|

| 25 |

-

# Windows

|

| 26 |

-

logger.warning("Windows, cant run time.tzset()")

|

| 27 |

|

| 28 |

|

| 29 |

-

|

| 30 |

-

|

|

|

|

|

|

|

| 31 |

|

| 32 |

-

RETRY_FLAG = False

|

| 33 |

|

| 34 |

-

|

| 35 |

-

#model = AutoModel.from_pretrained(model_name, trust_remote_code=True).quantize(4).half().cuda()

|

| 36 |

-

model = AutoModel.from_pretrained(model_name, trust_remote_code=True).half().cuda()

|

| 37 |

-

model = model.eval()

|

| 38 |

|

| 39 |

-

_ = """Override Chatbot.postprocess"""

|

| 40 |

| 41 |

|

| 42 |

-

|

| 43 |

-

|

| 44 |

-

return []

|

| 45 |

-

for i, (message, response) in enumerate(y):

|

| 46 |

-

y[i] = (

|

| 47 |

-

None if message is None else mdtex2html.convert((message)),

|

| 48 |

-

None if response is None else mdtex2html.convert(response),

|

| 49 |

-

)

|

| 50 |

-

return y

|

| 51 |

-

|

| 52 |

-

|

| 53 |

-

gr.Chatbot.postprocess = postprocess

|

| 54 |

-

|

| 55 |

-

|

| 56 |

-

def parse_text(text):

|

| 57 |

-

lines = text.split("\n")

|

| 58 |

-

lines = [line for line in lines if line != ""]

|

| 59 |

-

count = 0

|

| 60 |

-

for i, line in enumerate(lines):

|

| 61 |

-

if "```" in line:

|

| 62 |

-

count += 1

|

| 63 |

-

items = line.split("`")

|

| 64 |

-

if count % 2 == 1:

|

| 65 |

-

lines[i] = f'<pre><code class="language-{items[-1]}">'

|

| 66 |

-

else:

|

| 67 |

-

lines[i] = "<br></code></pre>"

|

| 68 |

-

else:

|

| 69 |

-

if i > 0:

|

| 70 |

-

if count % 2 == 1:

|

| 71 |

-

line = line.replace("`", r"\`")

|

| 72 |

-

line = line.replace("<", "<")

|

| 73 |

-

line = line.replace(">", ">")

|

| 74 |

-

line = line.replace(" ", " ")

|

| 75 |

-

line = line.replace("*", "*")

|

| 76 |

-

line = line.replace("_", "_")

|

| 77 |

-

line = line.replace("-", "-")

|

| 78 |

-

line = line.replace(".", ".")

|

| 79 |

-

line = line.replace("!", "!")

|

| 80 |

-

line = line.replace("(", "(")

|

| 81 |

-

line = line.replace(")", ")")

|

| 82 |

-

line = line.replace("$", "$")

|

| 83 |

-

lines[i] = "<br>" + line

|

| 84 |

-

text = "".join(lines)

|

| 85 |

-

return text

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

def predict(

|

| 89 |

-

RETRY_FLAG, input, chatbot, max_length, top_p, temperature, history, past_key_values

|

| 90 |

-

):

|

| 91 |

-

try:

|

| 92 |

-

chatbot.append((parse_text(input), ""))

|

| 93 |

-

except Exception as exc:

|

| 94 |

-

logger.error(exc)

|

| 95 |

-

logger.debug(f"{chatbot=}")

|

| 96 |

-

_ = """

|

| 97 |

-

if chatbot:

|

| 98 |

-

chatbot[-1] = (parse_text(input), str(exc))

|

| 99 |

-

yield chatbot, history, past_key_values

|

| 100 |

-

# """

|

| 101 |

-

yield chatbot, history, past_key_values

|

| 102 |

-

"""

|

| 103 |

-

for response, history, past_key_values in model.stream_chat(

|

| 104 |

-

tokenizer,

|

| 105 |

-

input,

|

| 106 |

-

history,

|

| 107 |

-

past_key_values=past_key_values,

|

| 108 |

-

return_past_key_values=True,

|

| 109 |

-

max_length=max_length,

|

| 110 |

-

top_p=top_p,

|

| 111 |

-

temperature=temperature,

|

| 112 |

-

):

|

| 113 |

-

"""

|

| 114 |

-

for response, history in model.stream_chat(tokenizer, input, history, max_length=max_length, top_p=top_p,

|

| 115 |

-

temperature=temperature):

|

| 116 |

-

chatbot[-1] = (parse_text(input), parse_text(response))

|

| 117 |

-

|

| 118 |

-

yield chatbot, history, past_key_values

|

| 119 |

-

|

| 120 |

-

|

| 121 |

-

def trans_api(input, max_length=40960, top_p=0.7, temperature=0.95):

|

| 122 |

-

if max_length < 10:

|

| 123 |

-

max_length = 40960

|

| 124 |

-

if top_p < 0.1 or top_p > 1:

|

| 125 |

-

top_p = 0.7

|

| 126 |

-

if temperature <= 0 or temperature > 1:

|

| 127 |

-

temperature = 0.01

|

| 128 |

try:

|

| 129 |

-

|

| 130 |

-

|

| 131 |

-

|

| 132 |

-

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

|

| 136 |

-

|

| 137 |

)

|

| 138 |

-

|

| 139 |

-

|

| 140 |

-

|

| 141 |

-

|

| 142 |

-

|

| 143 |

-

|

| 144 |

-

|

| 145 |

-

|

| 146 |

-

|

| 147 |

-

|

| 148 |

-

|

| 149 |

-

|

| 150 |

-

|

| 151 |

-

|

| 152 |

-

|

| 153 |

-

|

| 154 |

-

|

| 155 |

-

|

| 156 |

-

if chat and history:

|

| 157 |

-

chat.pop(-1)

|

| 158 |

-

history.pop(-1)

|

| 159 |

-

return chat, history

|

| 160 |

-

|

| 161 |

-

|

| 162 |

-

# Regenerate response

|

| 163 |

-

def retry_last_answer(

|

| 164 |

-

user_input, chatbot, max_length, top_p, temperature, history, past_key_values

|

| 165 |

-

):

|

| 166 |

-

if chatbot and history:

|

| 167 |

-

# Removing the previous conversation from chat

|

| 168 |

-

chatbot.pop(-1)

|

| 169 |

-

# Setting up a flag to capture a retry

|

| 170 |

-

RETRY_FLAG = True

|

| 171 |

-

# Getting last message from user

|

| 172 |

-

user_input = history[-1][0]

|

| 173 |

-

# Removing bot response from the history

|

| 174 |

-

history.pop(-1)

|

| 175 |

-

|

| 176 |

-

yield from predict(

|

| 177 |

-

RETRY_FLAG, # type: ignore

|

| 178 |

-

user_input,

|

| 179 |

-

chatbot,

|

| 180 |

-

max_length,

|

| 181 |

-

top_p,

|

| 182 |

-

temperature,

|

| 183 |

-

history,

|

| 184 |

-

past_key_values,

|

| 185 |

-

)

|

| 186 |

|

| 187 |

-

|

| 188 |

-

with gr.Blocks(title="ChatGLM2-6B-int4", theme=gr.themes.Soft(text_size="sm")) as demo:

|

| 189 |

-

# gr.HTML("""<h1 align="center">ChatGLM2-6B-int4</h1>""")

|

| 190 |

-

gr.HTML(

|

| 191 |

-

"""<center><a href="https://huggingface.co/spaces/mikeee/chatglm2-6b-4bit?duplicate=true"><img src="https://bit.ly/3gLdBN6" alt="Duplicate Space"></a>It's beyond Fitness,模型由[帛凡]基于ChatGLM-6b进行微调后,在健康(全科)、心理等领域达至少60分的专业水准,而且中文总结能力超越了GPT3.5各版本。</center>"""

|

| 192 |

-

"""<center>特别声明:本应用仅为模型能力演示,无任何商业行为,部署资源为Huggingface官方免费提供,任何通过此项目产生的知识仅用于学术参考,作者和网站均不承担任何责任。</center>"""

|

| 193 |

-

"""<h1 align="center">帛凡 Fitness AI 演示</h1>"""

|

| 194 |

-

"""<center>T4 is just a machine wiht 16G VRAM ,so OOM is easy to occur ,If you meet any error,Please email me 。 👉 [email protected]</center>"""

|

| 195 |

-

)

|

| 196 |

-

|

| 197 |

-

with gr.Accordion("🎈 Info", open=False):

|

| 198 |

-

_ = f"""

|

| 199 |

-

## {model_name}

|

| 200 |

-

|

| 201 |

-

ChatGLM-6B 是开源中英双语对话模型,本次训练基于ChatGLM-6B 的第一代版本,在保留了初代模型对话流畅、部署门槛较低等众多优秀特性的基础之上开展训练。

|

| 202 |

-

|

| 203 |

-

本项目经过多位网友实测,中文总结能力超越了GPT3.5各版本,健康咨询水平优于其它同量级模型,且经优化目前可以支持无限context,远大于4k、8K、16K......,可能是任何个人和中小企业首选模型。

|

| 204 |

-

|

| 205 |

-

*首先,用40万条高质量数据进行强化训练,以提高模型的基础能力;

|

| 206 |

-

|

| 207 |

-

*第二,使用30万条人类反馈数据,构建一个表达方式规范优雅的语言模式(RM模型);

|

| 208 |

-

|

| 209 |

-

*第三,在保留SFT阶段三分之一训练数据的同时,增加了30万条fitness数据,叠加RM模型,对ChatGLM-6B进行强化训练。

|

| 210 |

-

|

| 211 |

-

通过训练我们对模型有了更深刻的认知,LLM在一直在进化,好的方法和数据可以挖掘出模型的更大潜能。

|

| 212 |

-

训练中特别强化了中英文学术论文的翻译和总结,可以成为普通用户和科研人员的得力助手。

|

| 213 |

-

|

| 214 |

-

免责声明:本应用仅为模型能力演示,无任何商业行为,部署资源为huggingface官方免费提供,任何通过此项目产生的知识仅用于学术参考,作者和网站均不承担任何责任 。

|

| 215 |

-

|

| 216 |

-

The T4 GPU is sponsored by a community GPU grant from Huggingface. Thanks a lot!

|

| 217 |

-

|

| 218 |

-

[模型下载地址](https://huggingface.co/fb700/chatglm-fitness-RLHF)

|

| 219 |

-

|

| 220 |

-

|

| 221 |

-

"""

|

| 222 |

-

gr.Markdown(dedent(_))

|

| 223 |

-

chatbot = gr.Chatbot()

|

| 224 |

with gr.Row():

|

| 225 |

-

with gr.Column(scale=4):

|

| 226 |

-

with gr.Column(scale=12):

|

| 227 |

-

user_input = gr.Textbox(

|

| 228 |

-

show_label=False,

|

| 229 |

-

placeholder="Input...",

|

| 230 |

-

).style(container=False)

|

| 231 |

-

RETRY_FLAG = gr.Checkbox(value=False, visible=False)

|

| 232 |

-

with gr.Column(min_width=32, scale=1):

|

| 233 |

-

with gr.Row():

|

| 234 |

-

submitBtn = gr.Button("Submit", variant="primary")

|

| 235 |

-

deleteBtn = gr.Button("删除最后一条对话", variant="secondary")

|

| 236 |

-

retryBtn = gr.Button("重新生成Regenerate", variant="secondary")

|

| 237 |

with gr.Column(scale=1):

|

| 238 |

-

|

| 239 |

-

|

| 240 |

-

|

| 241 |

-

|

| 242 |

-

value=

|

| 243 |

-

step=1.0,

|

| 244 |

-

label="Maximum length",

|

| 245 |

-

interactive=True,

|

| 246 |

-

)

|

| 247 |

-

top_p = gr.Slider(

|

| 248 |

-

0, 1, value=0.7, step=0.01, label="Top P", interactive=True

|

| 249 |

-

)

|

| 250 |

-

temperature = gr.Slider(

|

| 251 |

-

0.01, 1, value=0.95, step=0.01, label="Temperature", interactive=True

|

| 252 |

-

)

|

| 253 |

-

|

| 254 |

-

history = gr.State([])

|

| 255 |

-

past_key_values = gr.State(None)

|

| 256 |

-

|

| 257 |

-

user_input.submit(

|

| 258 |

-

predict,

|

| 259 |

-

[

|

| 260 |

-

RETRY_FLAG,

|

| 261 |

-

user_input,

|

| 262 |

-

chatbot,

|

| 263 |

-

max_length,

|

| 264 |

-

top_p,

|

| 265 |

-

temperature,

|

| 266 |

-

history,

|

| 267 |

-

past_key_values,

|

| 268 |

-

],

|

| 269 |

-

[chatbot, history, past_key_values],

|

| 270 |

-

show_progress="full",

|

| 271 |

-

)

|

| 272 |

-

submitBtn.click(

|

| 273 |

-

predict,

|

| 274 |

-

[

|

| 275 |

-

RETRY_FLAG,

|

| 276 |

-

user_input,

|

| 277 |

-

chatbot,

|

| 278 |

-

max_length,

|

| 279 |

-

top_p,

|

| 280 |

-

temperature,

|

| 281 |

-

history,

|

| 282 |

-

past_key_values,

|

| 283 |

-

],

|

| 284 |

-

[chatbot, history, past_key_values],

|

| 285 |

-

show_progress="full",

|

| 286 |

-

api_name="predict",

|

| 287 |

-

)

|

| 288 |

-

submitBtn.click(reset_user_input, [], [user_input])

|

| 289 |

|

| 290 |

-

|

| 291 |

-

|

| 292 |

-

|

| 293 |

|

| 294 |

-

|

| 295 |

-

|

| 296 |

-

|

| 297 |

-

|

| 298 |

-

|

| 299 |

-

|

| 300 |

-

|

| 301 |

-

|

| 302 |

-

|

| 303 |

-

|

| 304 |

-

|

| 305 |

-

|

| 306 |

-

|

| 307 |

-

|

| 308 |

-

|

| 309 |

|

| 310 |

with gr.Accordion("Example inputs", open=True):

|

| 311 |

etext0 = """ "act": "作为基于文本的冒险游戏",\n "prompt": "我想让你扮演一个基于文本的冒险游戏。我在这个基于文本的冒险游戏中扮演一个角色。请尽可能具体地描述角色所看到的内容和环境,并在游戏输出1、2、3让用户选择进行回复,而不是其它方式。我将输入命令来告诉角色该做什么,而你需要回复角色的行动结果以推动游戏的进行。我的第一个命令是'醒来',请从这里开始故事 “ """

|

|

@@ -384,12 +294,12 @@ with gr.Blocks(title="ChatGLM2-6B-int4", theme=gr.themes.Soft(text_size="sm")) a

|

|

| 384 |

)

|

| 385 |

# """

|

| 386 |

|

| 387 |

-

# demo.queue().launch(share=False, inbrowser=True)

|

| 388 |

-

# demo.queue().launch(share=True, inbrowser=True, debug=True)

|

| 389 |

-

|

| 390 |

-

# concurrency_count > 1 requires more memory, max_size: queue size

|

| 391 |

-

# T4 medium: 30GB, model size: ~4G concurrency_count = 6

|

| 392 |

-

# leave one for api access

|

| 393 |

-

# reduce to 5 if OOM occurs to often

|

| 394 |

|

| 395 |

-

demo.queue(concurrency_count=

|

| 1 |

+

import os

|

| 2 |

+

import shutil

|

|

|

|

| 3 |

|

| 4 |

+

from app_modules.presets import *

|

| 5 |

+

from clc.langchain_application import LangChainApplication

|

| 6 |

|

|

|

|

| 7 |

|

| 8 |

+

# Change these to your own configuration!

|

| 9 |

+

class LangChainCFG:

|

| 10 |

+

llm_model_name = 'fb700/chatglm-fitness-RLHF' # local model files or a Hugging Face remote repo

|

| 11 |

+

embedding_model_name = 'moka-ai/m3e-large' # retrieval (embedding) model: local files or a Hugging Face remote repo

|

| 12 |

+

vector_store_path = './cache'

|

| 13 |

+

docs_path = './docs'

|

| 14 |

+

kg_vector_stores = {

|

| 15 |

+

'中文维基百科': './cache/zh_wikipedia',

|

| 16 |

+

'大规模金融研报': './cache/financial_research_reports',

|

| 17 |

+

'初始化': './cache',

|

| 18 |

+

} # replace with your own knowledge bases; set to None if you have none

|

| 19 |

+

# kg_vector_stores=None

|

| 20 |

+

patterns = ['模型问答', '知识库问答'] #
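The comment in `LangChainCFG` allows `kg_vector_stores` to be `None`, while the UI code later in this file calls `config.kg_vector_stores.keys()`, which would raise on `None`. A minimal sketch of a guard, assuming an empty choice list is acceptable when no knowledge bases are configured (the helper name `knowledge_base_choices` is hypothetical and not part of this PR):

```python
from typing import Dict, List, Optional


def knowledge_base_choices(stores: Optional[Dict[str, str]]) -> List[str]:
    """Return the knowledge-base names to offer in the UI, or [] when none are configured."""
    # Iterating a dict yields its keys in insertion order.
    return list(stores) if stores else []
```

With this helper, the radio button could be built from `knowledge_base_choices(config.kg_vector_stores)` regardless of whether the setting is a dict or `None`.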

|

| 21 |

|

| 22 |

|

| 23 |

+

config = LangChainCFG()

|

| 24 |

+

application = LangChainApplication(config)

|

| 25 |

+

application.source_service.init_source_vector()

|

| 26 |

|

| 27 |

|

| 28 |

+

def get_file_list():

|

| 29 |

+

if not os.path.exists("docs"):

|

| 30 |

+

return []

|

| 31 |

+

return [f for f in os.listdir("docs")]
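`get_file_list` returns every entry in `docs`, which in this PR also contains the sub-directory `docs/added`. A minimal sketch, assuming only regular files should be listed as documents (the name `list_doc_files` and the sorted order are illustrative assumptions, not the author's code):

```python
import os
from typing import List


def list_doc_files(docs_dir: str = "docs") -> List[str]:
    """Return the names of regular files in docs_dir, sorted; [] if the folder is missing."""
    if not os.path.isdir(docs_dir):
        return []
    return sorted(
        name
        for name in os.listdir(docs_dir)
        if os.path.isfile(os.path.join(docs_dir, name))  # skip sub-directories
    )
```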

|

| 32 |

|

|

|

|

| 33 |

|

| 34 |

+

file_list = get_file_list()

|

| 35 |

|

|

|

|

| 36 |

|

| 37 |

+

def upload_file(file):

|

| 38 |

+

if not os.path.exists("docs"):

|

| 39 |

+

os.mkdir("docs")

|

| 40 |

+

filename = os.path.basename(file.name)

|

| 41 |

+

shutil.move(file.name, "docs/" + filename)

|

| 42 |

+

# insert the newly uploaded file at the front of file_list

|

| 43 |

+

file_list.insert(0, filename)

|

| 44 |

+

application.source_service.add_document("docs/" + filename)

|

| 45 |

+

return gr.Dropdown.update(choices=file_list, value=filename)
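The file-handling part of `upload_file` can be isolated from the Gradio and vector-store calls. A minimal sketch under that assumption, using `os.makedirs(..., exist_ok=True)` and `os.path.join` in place of the existence check and string concatenation (the function name `store_upload` is hypothetical):

```python
import os
import shutil


def store_upload(src_path: str, docs_dir: str = "docs") -> str:
    """Move an uploaded file into docs_dir and return its new path."""
    os.makedirs(docs_dir, exist_ok=True)  # no error if the folder already exists
    filename = os.path.basename(src_path)
    dest_path = os.path.join(docs_dir, filename)
    shutil.move(src_path, dest_path)
    return dest_path
```

The returned path is what would then be handed to the indexing step, for example `application.source_service.add_document(...)` as in the diff above.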

|

| 46 |

|

| 47 |

+

|

| 48 |

+

def set_knowledge(kg_name, history):

|

| 49 |

try:

|

| 50 |

+

application.source_service.load_vector_store(config.kg_vector_stores[kg_name])

|

| 51 |

+

msg_status = f'{kg_name}知识库已成功加载'

|

| 52 |

+

except Exception as e:

|

| 53 |

+

print(e)

|

| 54 |

+

msg_status = f'{kg_name}知识库未成功加载'

|

| 55 |

+

return history + [[None, msg_status]]
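In `set_knowledge`, the radio button starts with `value=None`, so clicking the load button before choosing a knowledge base reaches the `except` branch through a `KeyError`. A minimal sketch that handles this case explicitly, assuming the loader is passed in as a callable so the logic can run without the real vector store (`load_knowledge`, its parameters, and the English status strings are all illustrative assumptions):

```python
from typing import Callable, Dict, List, Optional


def load_knowledge(
    kg_name: Optional[str],
    history: Optional[List[list]],
    stores: Dict[str, str],
    loader: Callable[[str], None],
) -> List[list]:
    """Try to load the store named kg_name and append a status row to the chat history."""
    history = list(history or [])
    if kg_name not in stores:
        return history + [[None, "No knowledge base selected"]]
    try:
        loader(stores[kg_name])
        status = f"{kg_name}: knowledge base loaded"
    except Exception as exc:  # the loader's failure modes are not known here
        status = f"{kg_name}: knowledge base failed to load ({exc})"
    return history + [[None, status]]
```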

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def clear_session():

|

| 59 |

+

return '', None

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def predict(input,

|

| 63 |

+

large_language_model,

|

| 64 |

+

embedding_model,

|

| 65 |

+

top_k,

|

| 66 |

+

use_web,

|

| 67 |

+

use_pattern,

|

| 68 |

+

history=None):

|

| 69 |

+

# print(large_language_model, embedding_model)

|

| 70 |

+

print(input)

|

| 71 |

+

if history is None:

|

| 72 |

+

history = []

|

| 73 |

+

|

| 74 |

+

if use_web == '使用':

|

| 75 |

+

web_content = application.source_service.search_web(query=input)

|

| 76 |

+

else:

|

| 77 |

+

web_content = ''

|

| 78 |

+

search_text = ''

|

| 79 |

+

if use_pattern == '模型问答':

|

| 80 |

+

result = application.get_llm_answer(query=input, web_content=web_content)

|

| 81 |

+

history.append((input, result))

|

| 82 |

+

search_text += web_content

|

| 83 |

+

return '', history, history, search_text

|

| 84 |

+

|

| 85 |

+

else:

|

| 86 |

+

resp = application.get_knowledge_based_answer(

|

| 87 |

+

query=input,

|

| 88 |

+

history_len=1,

|

| 89 |

+

temperature=0.1,

|

| 90 |

+

top_p=0.9,

|

| 91 |

+

top_k=top_k,

|

| 92 |

+

web_content=web_content,

|

| 93 |

+

chat_history=history

|

| 94 |

)

|

| 95 |

+

history.append((input, resp['result']))

|

| 96 |

+

for idx, source in enumerate(resp['source_documents'][:4]):

|

| 97 |

+

sep = f'----------【搜索结果{idx + 1}:】---------------\n'

|

| 98 |

+

search_text += f'{sep}\n{source.page_content}\n\n'

|

| 99 |

+

print(search_text)

|

| 100 |

+

search_text += "----------【网络检索内容】-----------\n"

|

| 101 |

+

search_text += web_content

|

| 102 |

+

return '', history, history, search_text
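The knowledge-base branch of `predict` builds the text for the search-results box by concatenating up to four retrieved passages and then the web content. A minimal sketch of that formatting step on its own, assuming retrieved documents expose a `page_content` attribute as in the diff (`Doc` is a hypothetical stand-in class, and unlike the original this version omits the web section when `web_content` is empty):

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Doc:
    """Hypothetical stand-in for a retrieved document exposing page_content."""
    page_content: str


def format_search_text(docs: Iterable[Doc], web_content: str = "", limit: int = 4) -> str:
    """Join up to `limit` retrieved passages and the web results into one display string."""
    parts = []
    for idx, doc in enumerate(list(docs)[:limit], start=1):
        parts.append(f"---------- [Search result {idx}] ----------\n{doc.page_content}\n")
    if web_content:
        parts.append(f"---------- [Web search content] ----------\n{web_content}")
    return "\n".join(parts)
```

Keeping the formatting in a pure function makes it easy to check without loading the model or the vector store.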

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

with open("assets/custom.css", "r", encoding="utf-8") as f:

|

| 106 |

+

customCSS = f.read()

|

| 107 |

+

with gr.Blocks(css=customCSS, theme=small_and_beautiful_theme) as demo:

|

| 108 |

+

gr.Markdown("""<h1><center>Chinese-LangChain by 帛凡 Fitness AI</center></h1>

|

| 109 |

+

<center><font size=3>

|

| 110 |

+

</center></font>

|

| 111 |

+

""")

|

| 112 |

+

state = gr.State()

|

| 113 |

|

| 114 |

with gr.Row():

|

| 115 |

with gr.Column(scale=1):

|

| 116 |

+

embedding_model = gr.Dropdown([

|

| 117 |

+

"moka-ai/m3e-large"

|

| 118 |

+

],

|

| 119 |

+

label="Embedding model",

|

| 120 |

+

value="moka-ai/m3e-large")

|

| 121 |

|

| 122 |

+

large_language_model = gr.Dropdown(

|

| 123 |

+

[

|

| 124 |

+

"帛凡 Fitness AI",

|

| 125 |

+

],

|

| 126 |

+

label="large language model",

|

| 127 |

+

value="帛凡 Fitness AI")

|

| 128 |

+

|

| 129 |

+

top_k = gr.Slider(1,

|

| 130 |

+

20,

|

| 131 |

+

value=4,

|

| 132 |

+

step=1,

|

| 133 |

+

label="检索top-k文档",

|

| 134 |

+

interactive=True)

|

| 135 |

+

|

| 136 |

+

use_web = gr.Radio(["使用", "不使用"], label="web search",

|

| 137 |

+

info="是否使用网络搜索,使用时确保网络通常",

|

| 138 |

+

value="不使用"

|

| 139 |

+

)

|

| 140 |

+

use_pattern = gr.Radio(

|

| 141 |

+

[

|

| 142 |

+

'模型问答',

|

| 143 |

+

'知识库问答',

|

| 144 |

+

],

|

| 145 |

+

label="模式",

|

| 146 |

+

value='模型问答',

|

| 147 |

+

interactive=True)

|

| 148 |

|

| 149 |

+

kg_name = gr.Radio(list(config.kg_vector_stores.keys()),

|

| 150 |

+

label="知识库",

|

| 151 |

+

value=None,

|

| 152 |

+

info="使用知识库问答,请加载知识库",

|

| 153 |

+

interactive=True)

|

| 154 |

+

set_kg_btn = gr.Button("加载知识库")

|

| 155 |

+

|

| 156 |

+

file = gr.File(label="将文件上传到知识库库,内容要尽量匹配",

|

| 157 |

+

visible=True,

|

| 158 |

+

file_types=['.txt', '.md', '.docx', '.pdf']

|

| 159 |

+

)

|

| 160 |

+

|

| 161 |

+

|

| 162 |

+

with gr.Column(scale=4):

|

| 163 |

+

with gr.Row():

|

| 164 |

+

chatbot = gr.Chatbot(label='Chinese-LangChain').style(height=400)

|

| 165 |

+

with gr.Row():

|

| 166 |

+

message = gr.Textbox(label='请输入问题')

|

| 167 |

+

with gr.Row():

|

| 168 |

+

clear_history = gr.Button("🧹 清除历史对话")

|

| 169 |

+

send = gr.Button("🚀 发送")

|

| 170 |

+

with gr.Row():

|

| 171 |

+

gr.Markdown("""Note:<br>

|

| 172 |

+

[帛凡 Fitness AI model download](https://huggingface.co/fb700/chatglm-fitness-RLHF) <br>

|

| 173 |

+

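It's beyond Fitness. The model was fine-tuned by [帛凡] from ChatGLM-6b and reaches a professional level of at least 60 points in health (general practice), psychology, and related fields; its Chinese summarization ability surpasses all versions of GPT3.5. Disclaimer: this application only demonstrates the model's capabilities and involves no commercial activity. The deployment resources are provided free of charge by Hugging Face. Any knowledge produced through this project is for academic reference only, and neither the author nor the website accepts any liability. 帛凡 Fitness AI demo: the T4 is a machine with only 16 GB of VRAM, so OOM errors occur easily. If you encounter any error, please email me. 👉 [email protected]<br>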

|

| 174 |

+

""")

|

| 175 |

+

with gr.Column(scale=2):

|

| 176 |

+

search = gr.Textbox(label='搜索结果')

|

| 177 |

+

|

| 178 |

+

# ============= Event handlers =============

|

| 179 |

+

file.upload(upload_file,

|

| 180 |

+

inputs=file,

|

| 181 |

+

outputs=None)

|

| 182 |

+

set_kg_btn.click(

|

| 183 |

+

set_knowledge,

|

| 184 |

+

show_progress=True,

|

| 185 |

+

inputs=[kg_name, chatbot],

|

| 186 |

+

outputs=chatbot

|

| 187 |

+

)

|

| 188 |

+

# Send button: submit

|

| 189 |

+

send.click(predict,

|

| 190 |

+

inputs=[

|

| 191 |

+

message,

|

| 192 |

+

large_language_model,

|

| 193 |

+

embedding_model,

|

| 194 |

+

top_k,

|

| 195 |

+

use_web,

|

| 196 |

+

use_pattern,

|

| 197 |

+

state

|

| 198 |

+

],

|

| 199 |

+

outputs=[message, chatbot, state, search])

|

| 200 |

+

|

| 201 |

+

# Clear-history button: submit

|

| 202 |

+

clear_history.click(fn=clear_session,

|

| 203 |

+

inputs=[],

|

| 204 |

+

outputs=[chatbot, state],

|

| 205 |

+

queue=False)

|

| 206 |

+

|

| 207 |

+

# Input box: Enter key

|

| 208 |

+

message.submit(predict,

|

| 209 |

+

inputs=[

|

| 210 |

+

message,

|

| 211 |

+

large_language_model,

|

| 212 |

+

embedding_model,

|

| 213 |

+

top_k,

|

| 214 |

+

use_web,

|

| 215 |

+

use_pattern,

|

| 216 |

+

state

|

| 217 |

+

],

|

| 218 |

+

outputs=[message, chatbot, state, search])

|

| 219 |

|

| 220 |

with gr.Accordion("Example inputs", open=True):

|

| 221 |

etext0 = """ "act": "作为基于文本的冒险游戏",\n "prompt": "我想让你扮演一个基于文本的冒险游戏。我在这个基于文本的冒险游戏中扮演一个角色。请尽可能具体地描述角色所看到的内容和环境,并在游戏输出1、2、3让用户选择进行回复,而不是其它方式。我将输入命令来告诉角色该做什么,而你需要回复角色的行动结果以推动游戏的进行。我的第一个命令是'醒来',请从这里开始故事 “ """

|

|

|

|

| 294 |

)

|

| 295 |

# """

|

| 296 |

|

| 297 |

|

| 298 |

+

demo.queue(concurrency_count=2).launch(

|

| 299 |

+

server_name='0.0.0.0',

|

| 300 |

+

share=False,

|

| 301 |

+

show_error=True,

|

| 302 |

+

debug=True,

|

| 303 |

+

enable_queue=True,

|

| 304 |

+

inbrowser=True,

|

| 305 |

+

)

|

app_modules/__pycache__/presets.cpython-310.pyc

ADDED

|

Binary file (2.26 kB). View file

|

|

|

app_modules/__pycache__/presets.cpython-39.pyc

ADDED

|

Binary file (2.26 kB). View file

|

|

|

app_modules/overwrites.py

ADDED

|

@@ -0,0 +1,49 @@

|

+from __future__ import annotations
+
+from typing import List, Tuple
+
+from app_modules.utils import *
+
+
+def postprocess(
+    self, y: List[Tuple[str | None, str | None]]
+) -> List[Tuple[str | None, str | None]]:
+    """
+    Parameters:
+        y: List of tuples representing the message and response pairs. Each message and response should be a string, which may be in Markdown format.
+    Returns:
+        List of tuples representing the message and response. Each message and response will be a string of HTML.
+    """
+    if y is None or y == []:
+        return []
+    temp = []
+    for x in y:
+        user, bot = x
+        if not detect_converted_mark(user):
+            user = convert_asis(user)
+        if not detect_converted_mark(bot):
+            bot = convert_mdtext(bot)
+        temp.append((user, bot))
+    return temp
+
+
+with open("./assets/custom.js", "r", encoding="utf-8") as f, open("./assets/Kelpy-Codos.js", "r",
+                                                                  encoding="utf-8") as f2:
+    customJS = f.read()
+    kelpyCodos = f2.read()
+
+
+def reload_javascript():
+    print("Reloading javascript...")
+    js = f'<script>{customJS}</script><script>{kelpyCodos}</script>'
+
+    def template_response(*args, **kwargs):
+        res = GradioTemplateResponseOriginal(*args, **kwargs)
+        res.body = res.body.replace(b'</html>', f'{js}</html>'.encode("utf8"))
+        res.init_headers()
+        return res
+
+    gr.routes.templates.TemplateResponse = template_response
+
+
+GradioTemplateResponseOriginal = gr.routes.templates.TemplateResponse
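Reviewer note: `reload_javascript` in `app_modules/overwrites.py` works by wrapping the original template-response factory and splicing `<script>` tags in front of the closing `</html>` tag. The sketch below illustrates only that wrapping pattern using the standard library. `FakeResponse` and `make_injecting_factory` are hypothetical names made up for this illustration, not part of Gradio or of this PR. The real code additionally calls `res.init_headers()` after changing the body, which this stand-in has no equivalent for.

```python
from dataclasses import dataclass


@dataclass
class FakeResponse:
    """Hypothetical stand-in for a template response that carries a bytes body."""
    body: bytes


def make_injecting_factory(original_factory, js):
    """Wrap `original_factory` so every response gets `js` inserted before </html>."""
    def wrapped(*args, **kwargs):
        # Build the response with the untouched original factory first.
        res = original_factory(*args, **kwargs)
        # Splice the script markup in front of the closing tag, as the diff does.
        res.body = res.body.replace(b"</html>", f"{js}</html>".encode("utf8"))
        return res
    return wrapped


# Keep a reference to the original before patching, so the wrapper never calls itself.
original_factory = FakeResponse
patched_factory = make_injecting_factory(original_factory, "<script>console.log('hi')</script>")

page = patched_factory(body=b"<html><body>demo</body></html>")
print(page.body.decode("utf8"))
```

Holding on to the original factory under a separate name is what keeps the patch from recursing. The diff does the same thing with `GradioTemplateResponseOriginal`, which is bound at import time before `reload_javascript` is ever called.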

app_modules/presets.py

ADDED

|

@@ -0,0 +1,82 @@

+# -*- coding:utf-8 -*-
+import gradio as gr
+
+
+title = """<h1 align="left" style="min-width:200px; margin-top:0;"> <img src="https://raw.githubusercontent.com/twitter/twemoji/master/assets/svg/1f432.svg" width="32px" style="display: inline"> Baize-7B </h1>"""
+description_top = """\
+<div align="left">
+<p>
+Disclaimer: The LLaMA model is a third-party version available on Hugging Face model hub. This demo should be used for research purposes only. Commercial use is strictly prohibited. The model output is not censored and the authors do not endorse the opinions in the generated content. Use at your own risk.
+</p >
+</div>
+"""
+description = """\
+<div align="center" style="margin:16px 0">
+The demo is built on <a href="https://github.com/GaiZhenbiao/ChuanhuChatGPT">ChuanhuChatGPT</a>.
+</div>
+"""
+CONCURRENT_COUNT = 100
+
+
+ALREADY_CONVERTED_MARK = "<!-- ALREADY CONVERTED BY PARSER. -->"
+
+small_and_beautiful_theme = gr.themes.Soft(
+    primary_hue=gr.themes.Color(
+        c50="#02C160",
+        c100="rgba(2, 193, 96, 0.2)",
+        c200="#02C160",
+        c300="rgba(2, 193, 96, 0.32)",
+        c400="rgba(2, 193, 96, 0.32)",
+        c500="rgba(2, 193, 96, 1.0)",
+        c600="rgba(2, 193, 96, 1.0)",
+        c700="rgba(2, 193, 96, 0.32)",
+        c800="rgba(2, 193, 96, 0.32)",
+        c900="#02C160",
+        c950="#02C160",
+    ),
+    secondary_hue=gr.themes.Color(
+        c50="#576b95",
+        c100="#576b95",
+        c200="#576b95",
+        c300="#576b95",
+        c400="#576b95",
+        c500="#576b95",
+        c600="#576b95",
+        c700="#576b95",
+        c800="#576b95",
+        c900="#576b95",
+        c950="#576b95",
+    ),
+    neutral_hue=gr.themes.Color(
+        name="gray",
+        c50="#f9fafb",
+        c100="#f3f4f6",
+        c200="#e5e7eb",
+        c300="#d1d5db",
+        c400="#B2B2B2",
+        c500="#808080",
+        c600="#636363",
+        c700="#515151",
+        c800="#393939",
+        c900="#272727",
+        c950="#171717",
+    ),
+    radius_size=gr.themes.sizes.radius_sm,
+).set(
+    button_primary_background_fill="#06AE56",
+    button_primary_background_fill_dark="#06AE56",
+    button_primary_background_fill_hover="#07C863",
+    button_primary_border_color="#06AE56",
+    button_primary_border_color_dark="#06AE56",
+    button_primary_text_color="#FFFFFF",
+    button_primary_text_color_dark="#FFFFFF",
+    button_secondary_background_fill="#F2F2F2",
+    button_secondary_background_fill_dark="#2B2B2B",
+    button_secondary_text_color="#393939",
+    button_secondary_text_color_dark="#FFFFFF",
+    # background_fill_primary="#F7F7F7",
+    # background_fill_primary_dark="#1F1F1F",
+    block_title_text_color="*primary_500",
+    block_title_background_fill="*primary_100",
+    input_background_fill="#F6F6F6",
+)
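Reviewer note: `ALREADY_CONVERTED_MARK` from `app_modules/presets.py` is what lets `postprocess` skip messages that were already turned into HTML. The following is a minimal sketch of that idea, assuming the marker is simply appended to converted text. `is_converted` and `to_html_once` are hypothetical helpers written for this note; the PR's own `detect_converted_mark` is not shown in this part of the diff and may differ.

```python
import html

ALREADY_CONVERTED_MARK = "<!-- ALREADY CONVERTED BY PARSER. -->"


def is_converted(text):
    """Hypothetical check: treat text ending with the marker as already converted."""
    return text.endswith(ALREADY_CONVERTED_MARK)


def to_html_once(text):
    """Escape plain text into a <p> block once; marked text is returned unchanged."""
    if is_converted(text):
        return text
    escaped = html.escape(text)
    return f'<p style="white-space:pre-wrap;">{escaped}</p>' + ALREADY_CONVERTED_MARK


once = to_html_once("a < b")
twice = to_html_once(once)
print(once == twice)
```

Without the marker, a second pass would escape the `<p>` wrapper itself, so the chat history would degrade a little more on every re-render.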

app_modules/utils.py

ADDED

|

@@ -0,0 +1,227 @@

+# -*- coding:utf-8 -*-
+from __future__ import annotations
+
+import html
+import logging
+import re
+
+import mdtex2html
+from markdown import markdown
+from pygments import highlight
+from pygments.formatters import HtmlFormatter
+from pygments.lexers import ClassNotFound
+from pygments.lexers import guess_lexer, get_lexer_by_name
+
+from app_modules.presets import *
+
+logging.basicConfig(
+    level=logging.INFO,
+    format="%(asctime)s [%(levelname)s] [%(filename)s:%(lineno)d] %(message)s",
+)
+
+
+def markdown_to_html_with_syntax_highlight(md_str):
+    def replacer(match):
+        lang = match.group(1) or "text"
+        code = match.group(2)
+        lang = lang.strip()
+        # print(1,lang)
+        if lang == "text":
+            lexer = guess_lexer(code)
+            lang = lexer.name
+            # print(2,lang)
+        try:
+            lexer = get_lexer_by_name(lang, stripall=True)
+        except ValueError:
+            lexer = get_lexer_by_name("python", stripall=True)
+        formatter = HtmlFormatter()
+        # print(3,lexer.name)
+        highlighted_code = highlight(code, lexer, formatter)
+
+        return f'<pre><code class="{lang}">{highlighted_code}</code></pre>'
+
+    code_block_pattern = r"```(\w+)?\n([\s\S]+?)\n```"
+    md_str = re.sub(code_block_pattern, replacer, md_str, flags=re.MULTILINE)
+
+    html_str = markdown(md_str)
+    return html_str
+
+
+def normalize_markdown(md_text: str) -> str:
+    lines = md_text.split("\n")
+    normalized_lines = []
+    inside_list = False
+
+    for i, line in enumerate(lines):
+        if re.match(r"^(\d+\.|-|\*|\+)\s", line.strip()):
+            if not inside_list and i > 0 and lines[i - 1].strip() != "":
+                normalized_lines.append("")
+            inside_list = True
+            normalized_lines.append(line)
+        elif inside_list and line.strip() == "":
+            if i < len(lines) - 1 and not re.match(
+                r"^(\d+\.|-|\*|\+)\s", lines[i + 1].strip()
+            ):
+                normalized_lines.append(line)
+            continue
+        else:
+            inside_list = False
+            normalized_lines.append(line)
+
+    return "\n".join(normalized_lines)
+
+
+def convert_mdtext(md_text):
+    code_block_pattern = re.compile(r"```(.*?)(?:```|$)", re.DOTALL)
+    inline_code_pattern = re.compile(r"`(.*?)`", re.DOTALL)
+    code_blocks = code_block_pattern.findall(md_text)
+    non_code_parts = code_block_pattern.split(md_text)[::2]
+
+    result = []
+    for non_code, code in zip(non_code_parts, code_blocks + [""]):
+        if non_code.strip():
+            non_code = normalize_markdown(non_code)
+            if inline_code_pattern.search(non_code):
+                result.append(markdown(non_code, extensions=["tables"]))
+            else:
+                result.append(mdtex2html.convert(non_code, extensions=["tables"]))
+        if code.strip():
+            # _, code = detect_language(code)  # Code highlighting is temporarily disabled because it causes problems with large blocks of code
+            # code = code.replace("\n\n", "\n")  # Temporarily remove blank lines in the code, because problems occur with large blocks of code
+            code = f"\n```{code}\n\n```"
+            code = markdown_to_html_with_syntax_highlight(code)
+            result.append(code)
+    result = "".join(result)
+    result += ALREADY_CONVERTED_MARK
+    return result
+
+
+def convert_asis(userinput):
+    return f"<p style=\"white-space:pre-wrap;\">{html.escape(userinput)}</p>" + ALREADY_CONVERTED_MARK

| 101 |

+

|

| 102 |