
changed the examples

- app.py +4 -8
- example_images/.DS_Store +0 -0
- example_images/aaron.jpeg +0 -0
- example_images/demo-1.jpg +0 -0
- example_images/demo-2.jpg +0 -0
- example_images/docvqa_example.png +0 -3
- example_images/examples_invoice.png +0 -0
- example_images/examples_weather_events.png +0 -0
- example_images/rococo_1.jpg +0 -0
- example_images/s2w_example.png +0 -0

app.py

CHANGED

```diff
@@ -36,16 +36,13 @@ def model_inference(input_dict, history):
 
 
 examples=[
+    [{"text": "Caption", "files": ["example_images/demo-1.jpg"]}, []],
+    [{"text": "Caption", "files": ["example_images/demo-2.jpg"]}, []],
     [{"text": "What art era do this artpiece belong to?", "files": ["example_images/rococo.jpg"]}, []],
     [{"text": "Caption", "files": ["example_images/rococo.jpg"]}, []],
     [{"text": "I'm planning a visit to this temple, give me travel tips.", "files": ["example_images/examples_wat_arun.jpg"]}, []],
     [{"text": "Caption", "files": ["example_images/examples_wat_arun.jpg"]}, []],
-    [{"text": "
-    [{"text": "Caption", "files": ["example_images/examples_invoice.png"]}, []],
-    [{"text": "What is this UI about?", "files": ["example_images/s2w_example.png"]}, []],
-    [{"text": "Caption", "files": ["example_images/s2w_example.png"]}, []],
-    [{"text": "Where do the severe droughts happen according to this diagram?", "files": ["example_images/examples_weather_events.png"]}, []],
-    [{"text": "Caption", "files": ["example_images/examples_weather_events.png"]}, []],
+    [{"text": "Caption", "files": ["example_images/aaron.jpeg"]}, []],
 ]
 
 demo = gr.ChatInterface(fn=model_inference, title="Moondream 0.5B: The World's Smallest Vision-Language Model",
@@ -54,5 +51,4 @@ demo = gr.ChatInterface(fn=model_inference, title="Moondream 0.5B: The World's S
     textbox=gr.MultimodalTextbox(label="Query Input", file_types=["image"], file_count="single"), stop_btn="Stop Generation", multimodal=True,
     additional_inputs=[], cache_examples=False)
 
-demo.launch(debug=True)
-
+demo.launch(debug=True)
```
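Because this commit deletes several images and adds new ones, it can be worth confirming that every entry in the new `examples` list in app.py still points at a file that exists. The sketch below is not part of the commit: `missing_example_files` is a hypothetical helper, and it assumes the script is run from the Space's root directory, where `example_images/` lives. Note that `rococo.jpg` and `examples_wat_arun.jpg` do not appear in this commit's file list (the deleted file is `rococo_1.jpg`), so the check assumes they already exist in the repository. The second element of each example (the empty list) is simply ignored here.

```python
from pathlib import Path

# The examples list as it reads in app.py after this commit.
examples = [
    [{"text": "Caption", "files": ["example_images/demo-1.jpg"]}, []],
    [{"text": "Caption", "files": ["example_images/demo-2.jpg"]}, []],
    [{"text": "What art era do this artpiece belong to?", "files": ["example_images/rococo.jpg"]}, []],
    [{"text": "Caption", "files": ["example_images/rococo.jpg"]}, []],
    [{"text": "I'm planning a visit to this temple, give me travel tips.", "files": ["example_images/examples_wat_arun.jpg"]}, []],
    [{"text": "Caption", "files": ["example_images/examples_wat_arun.jpg"]}, []],
    [{"text": "Caption", "files": ["example_images/aaron.jpeg"]}, []],
]


def missing_example_files(examples, root="."):
    """Hypothetical helper: return each referenced path (once) that is not a file under root."""
    root_path = Path(root)
    missing = []
    for message, _extra in examples:  # _extra is the empty list; not inspected here
        for rel_path in message.get("files", []):
            if not (root_path / rel_path).is_file() and rel_path not in missing:
                missing.append(rel_path)
    return missing


if __name__ == "__main__":
    missing = missing_example_files(examples)
    if missing:
        print("Missing example files:", ", ".join(missing))
    else:
        print("All example files found.")
```

Running it from the repository root prints either the missing paths or a confirmation line, which catches a stale example path before the Space fails to load it.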

example_images/.DS_Store

ADDED

Binary file (6.15 kB)

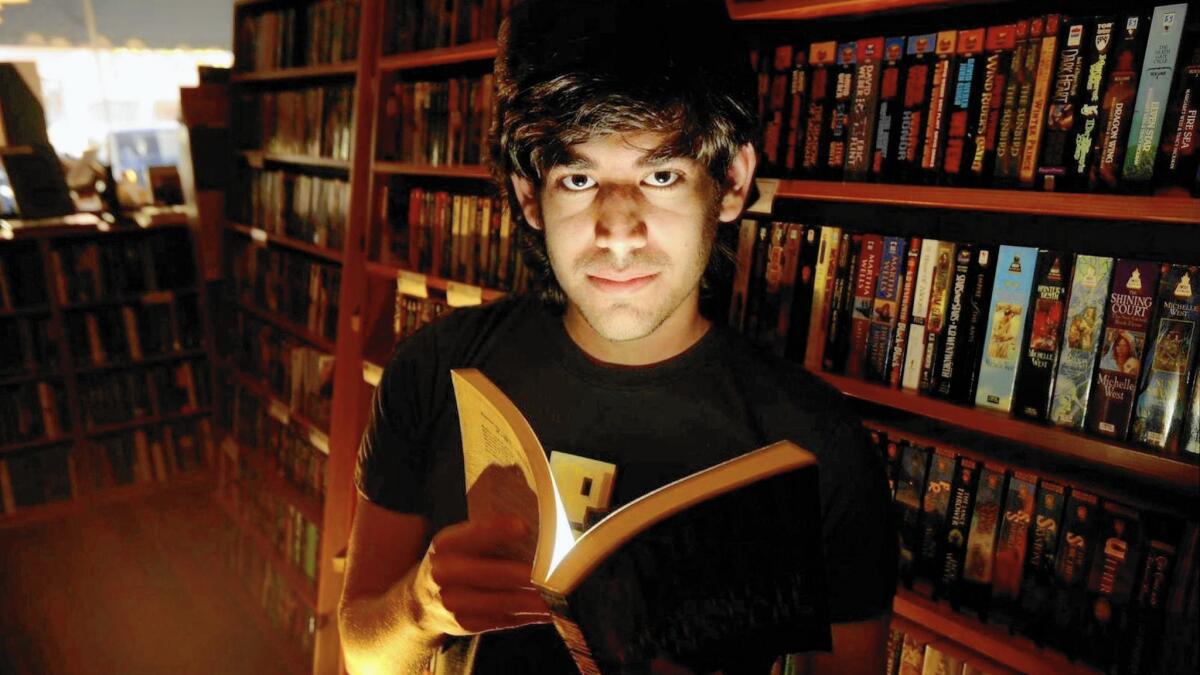

example_images/aaron.jpeg

ADDED

example_images/demo-1.jpg

ADDED

example_images/demo-2.jpg

ADDED

example_images/docvqa_example.png

DELETED

Stored with Git LFS

example_images/examples_invoice.png

DELETED

Binary file (50 kB)

example_images/examples_weather_events.png

DELETED

Binary file (310 kB)

example_images/rococo_1.jpg

DELETED

Binary file (849 kB)

example_images/s2w_example.png

DELETED

Binary file (82.8 kB)