paul hilders committed

Commit 0241217 · 1 parent: 8d581a7

Add new version of demo for IEAI course

- .gitmodules +3 -0

- CLIP_explainability/Transformer-MM-Explainability +1 -0

- CLIP_explainability/utils.py +152 -0

- app.py +67 -0

- clip_grounding/datasets/png.py +231 -0

- clip_grounding/datasets/png_utils.py +135 -0

- clip_grounding/evaluation/clip_on_png.py +362 -0

- clip_grounding/evaluation/qualitative_results.py +93 -0

- clip_grounding/utils/image.py +46 -0

- clip_grounding/utils/io.py +116 -0

- clip_grounding/utils/log.py +57 -0

- clip_grounding/utils/paths.py +10 -0

- clip_grounding/utils/visualize.py +183 -0

- example_images/Amsterdam.png +0 -0

- example_images/London.png +0 -0

- example_images/dogs_on_bed.png +0 -0

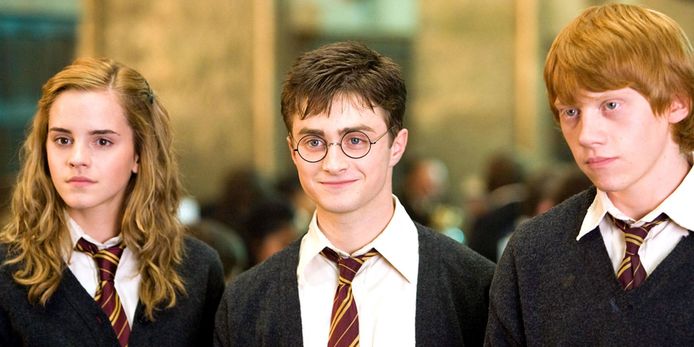

- example_images/harrypotter.png +0 -0

- requirements.txt +121 -0

.gitmodules

ADDED

@@ -0,0 +1,3 @@
+[submodule "CLIP_explainability/Transformer-MM-Explainability"]
+	path = CLIP_explainability/Transformer-MM-Explainability
+	url = https://github.com/hila-chefer/Transformer-MM-Explainability.git

CLIP_explainability/Transformer-MM-Explainability

ADDED

@@ -0,0 +1 @@
+Subproject commit 6a2c3c9da3fc186878e0c2bcf238c3a4c76d8af8

CLIP_explainability/utils.py

ADDED

@@ -0,0 +1,152 @@

+import torch
+import CLIP.clip as clip
+from PIL import Image
+import numpy as np
+import cv2
+import matplotlib.pyplot as plt
+from captum.attr import visualization
+import os
+
+
+from CLIP.clip.simple_tokenizer import SimpleTokenizer as _Tokenizer
+_tokenizer = _Tokenizer()
+
+#@title Control context expansion (number of attention layers to consider)
+#@title Number of layers for image Transformer
+start_layer = 11#@param {type:"number"}
+
+#@title Number of layers for text Transformer
+start_layer_text = 11#@param {type:"number"}
+
+

+def interpret(image, texts, model, device):
+    batch_size = texts.shape[0]
+    images = image.repeat(batch_size, 1, 1, 1)
+    logits_per_image, logits_per_text = model(images, texts)
+    probs = logits_per_image.softmax(dim=-1).detach().cpu().numpy()
+    index = [i for i in range(batch_size)]
+    one_hot = np.zeros((logits_per_image.shape[0], logits_per_image.shape[1]), dtype=np.float32)
+    one_hot[torch.arange(logits_per_image.shape[0]), index] = 1
+    one_hot = torch.from_numpy(one_hot).requires_grad_(True)
+    one_hot = torch.sum(one_hot.to(device) * logits_per_image)
+    model.zero_grad()
+
+    image_attn_blocks = list(dict(model.visual.transformer.resblocks.named_children()).values())
+    num_tokens = image_attn_blocks[0].attn_probs.shape[-1]
+    R = torch.eye(num_tokens, num_tokens, dtype=image_attn_blocks[0].attn_probs.dtype).to(device)
+    R = R.unsqueeze(0).expand(batch_size, num_tokens, num_tokens)
+    for i, blk in enumerate(image_attn_blocks):
+        if i < start_layer:
+            continue
+        grad = torch.autograd.grad(one_hot, [blk.attn_probs], retain_graph=True)[0].detach()
+        cam = blk.attn_probs.detach()
+        cam = cam.reshape(-1, cam.shape[-1], cam.shape[-1])
+        grad = grad.reshape(-1, grad.shape[-1], grad.shape[-1])
+        cam = grad * cam
+        cam = cam.reshape(batch_size, -1, cam.shape[-1], cam.shape[-1])
+        cam = cam.clamp(min=0).mean(dim=1)
+        R = R + torch.bmm(cam, R)
+    image_relevance = R[:, 0, 1:]
+
+
+    text_attn_blocks = list(dict(model.transformer.resblocks.named_children()).values())
+    num_tokens = text_attn_blocks[0].attn_probs.shape[-1]
+    R_text = torch.eye(num_tokens, num_tokens, dtype=text_attn_blocks[0].attn_probs.dtype).to(device)
+    R_text = R_text.unsqueeze(0).expand(batch_size, num_tokens, num_tokens)
+    for i, blk in enumerate(text_attn_blocks):
+        if i < start_layer_text:
+            continue
+        grad = torch.autograd.grad(one_hot, [blk.attn_probs], retain_graph=True)[0].detach()
+        cam = blk.attn_probs.detach()
+        cam = cam.reshape(-1, cam.shape[-1], cam.shape[-1])
+        grad = grad.reshape(-1, grad.shape[-1], grad.shape[-1])
+        cam = grad * cam
+        cam = cam.reshape(batch_size, -1, cam.shape[-1], cam.shape[-1])
+        cam = cam.clamp(min=0).mean(dim=1)
+        R_text = R_text + torch.bmm(cam, R_text)
+    text_relevance = R_text
+
+    return text_relevance, image_relevance
+
+
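Both loops in `interpret` apply the same update: the relevance matrix starts as the identity and, for each attention block from `start_layer` onward, becomes R + Ā·R, where Ā is the product of the attention gradients and attention probabilities, clamped at zero and averaged over heads. A minimal sketch of that rule on toy data follows. It uses plain nested lists instead of tensors, and the names `matmul`, `propagate_relevance`, `attn`, and `grad` are illustrative assumptions, not part of this commit.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def propagate_relevance(attn_per_layer, grad_per_layer):
    """Apply R <- R + mean_heads(max(grad * attn, 0)) @ R, layer by layer.

    Each layer is a list of heads; each head is an n x n nested list.
    """
    n = len(attn_per_layer[0][0])
    # Start from the identity: every token is relevant only to itself.
    R = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for heads_attn, heads_grad in zip(attn_per_layer, grad_per_layer):
        num_heads = len(heads_attn)
        # Gradient-weighted attention, negatives clamped to zero,
        # then averaged over heads.
        cam = [[sum(max(g[i][j] * a[i][j], 0.0)
                    for a, g in zip(heads_attn, heads_grad)) / num_heads
                for j in range(n)] for i in range(n)]
        update = matmul(cam, R)
        R = [[R[i][j] + update[i][j] for j in range(n)] for i in range(n)]
    return R


# One layer, two heads, two tokens (toy numbers, not real model output).
attn = [[[[0.5, 0.5], [0.2, 0.8]],
         [[0.9, 0.1], [0.4, 0.6]]]]
grad = [[[[1.0, -1.0], [0.0, 2.0]],
         [[1.0, 1.0], [-1.0, 0.0]]]]
R = propagate_relevance(attn, grad)
```

In the commit, row 0 of the image-side matrix (the class token) with the first column dropped gives the per-patch relevance, which is what `R[:, 0, 1:]` selects.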

+def show_image_relevance(image_relevance, image, orig_image, device, show=True):
+    # create heatmap from mask on image
+    def show_cam_on_image(img, mask):
+        heatmap = cv2.applyColorMap(np.uint8(255 * mask), cv2.COLORMAP_JET)
+        heatmap = np.float32(heatmap) / 255
+        cam = heatmap + np.float32(img)
+        cam = cam / np.max(cam)
+        return cam
+
+    # plt.axis('off')
+    # f, axarr = plt.subplots(1,2)
+    # axarr[0].imshow(orig_image)
+
+    if show:
+        fig, axs = plt.subplots(1, 2)
+        axs[0].imshow(orig_image);
+        axs[0].axis('off');
+
+    image_relevance = image_relevance.reshape(1, 1, 7, 7)
+    image_relevance = torch.nn.functional.interpolate(image_relevance, size=224, mode='bilinear')
+    image_relevance = image_relevance.reshape(224, 224).to(device).data.cpu().numpy()
+    image_relevance = (image_relevance - image_relevance.min()) / (image_relevance.max() - image_relevance.min())
+    image = image[0].permute(1, 2, 0).data.cpu().numpy()
+    image = (image - image.min()) / (image.max() - image.min())
+    vis = show_cam_on_image(image, image_relevance)
+    vis = np.uint8(255 * vis)
+    vis = cv2.cvtColor(np.array(vis), cv2.COLOR_RGB2BGR)
+
+    if show:
+        # axar[1].imshow(vis)
+        axs[1].imshow(vis);
+        axs[1].axis('off');
+        # plt.imshow(vis)
+
+    return image_relevance
+
+
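`show_image_relevance` reshapes the relevance vector to a fixed 7 × 7 grid, which matches ViT-B/32 at a 224 × 224 input (49 patches). The ViT-B/16 checkpoint that `app.py` also registers would produce 14 × 14 = 196 patches. It also divides by `max - min`, which is zero for a constant map. The sketch below shows one way to derive the grid side from the patch count and to guard the normalization. `patch_grid_side` and `min_max_normalize` are hypothetical helpers, not functions from this commit.

```python
import math


def patch_grid_side(num_patches):
    """Return the side length of a square patch grid, e.g. 49 -> 7."""
    side = math.isqrt(num_patches)
    if side * side != num_patches:
        raise ValueError(f"{num_patches} patches do not form a square grid")
    return side


def min_max_normalize(values):
    """Scale values linearly to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Avoid dividing by zero when every value is identical.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

With such a helper, the reshape could take its grid size from `image_relevance.numel()` rather than the literal `7`; whether that is wanted depends on which checkpoints the demo is meant to support.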

+def show_heatmap_on_text(text, text_encoding, R_text, show=True):
+    CLS_idx = text_encoding.argmax(dim=-1)
+    R_text = R_text[CLS_idx, 1:CLS_idx]
+    text_scores = R_text / R_text.sum()
+    text_scores = text_scores.flatten()
+    # print(text_scores)
+    text_tokens=_tokenizer.encode(text)
+    text_tokens_decoded=[_tokenizer.decode([a]) for a in text_tokens]
+    vis_data_records = [visualization.VisualizationDataRecord(text_scores,0,0,0,0,0,text_tokens_decoded,1)]
+
+    if show:
+        visualization.visualize_text(vis_data_records)
+
+    return text_scores, text_tokens_decoded
+
+
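`show_heatmap_on_text` relies on a property of CLIP's tokenizer: the end-of-text token has the largest id in the vocabulary, so `argmax` over the token ids returns its position. The function then reads that token's row of the relevance matrix, skips the start-of-text column, and normalizes the remaining scores to sum to one. A plain-Python sketch of the same indexing follows. `text_token_scores` is an illustrative name, and the two word ids in the example are arbitrary placeholders, not real vocabulary entries.

```python
SOT, EOT = 49406, 49407  # CLIP's start-of-text and end-of-text token ids


def text_token_scores(token_ids, relevance_row):
    """Normalize the relevance of the tokens between SOT and EOT.

    token_ids:     padded id sequence, e.g. [SOT, w1, w2, EOT, 0, 0, ...]
    relevance_row: relevance of every position as seen from the EOT position
    """
    # EOT has the highest id, so its position is the argmax of the ids.
    eot_idx = max(range(len(token_ids)), key=token_ids.__getitem__)
    # Skip position 0 (SOT) and stop before EOT.
    scores = relevance_row[1:eot_idx]
    total = sum(scores)
    return [s / total for s in scores]


ids = [SOT, 320, 1929, EOT, 0, 0]        # placeholder word ids
row = [5.0, 1.0, 3.0, 9.0, 0.0, 0.0]     # toy relevance values
scores = text_token_scores(ids, row)
```

The number of scores equals the number of tokens between the two special tokens, which is why the demo can pair them one-to-one with the decoded tokens.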

+def show_img_heatmap(image_relevance, image, orig_image, device, show=True):
+    return show_image_relevance(image_relevance, image, orig_image, device, show=show)
+
+
+def show_txt_heatmap(text, text_encoding, R_text, show=True):
+    return show_heatmap_on_text(text, text_encoding, R_text, show=show)
+
+
+def load_dataset():
+    dataset_path = os.path.join('..', '..', 'dummy-data', '71226_segments' + '.pt')
+    device = "cuda" if torch.cuda.is_available() else "cpu"
+
+    data = torch.load(dataset_path, map_location=device)
+
+    return data
+
+
+class color:
+    PURPLE = '\033[95m'
+    CYAN = '\033[96m'
+    DARKCYAN = '\033[36m'
+    BLUE = '\033[94m'
+    GREEN = '\033[92m'
+    YELLOW = '\033[93m'
+    RED = '\033[91m'
+    BOLD = '\033[1m'
+    UNDERLINE = '\033[4m'
+    END = '\033[0m'

app.py

ADDED

@@ -0,0 +1,67 @@

+import sys
+import gradio as gr
+
+# sys.path.append("../")
+sys.path.append("CLIP_explainability/Transformer-MM-Explainability/")
+
+import torch
+import CLIP.clip as clip
+
+
+from clip_grounding.utils.image import pad_to_square
+from clip_grounding.datasets.png import (
+    overlay_relevance_map_on_image,
+)
+from CLIP_explainability.utils import interpret, show_img_heatmap, show_heatmap_on_text
+
+clip.clip._MODELS = {
+    "ViT-B/32": "https://openaipublic.azureedge.net/clip/models/40d365715913c9da98579312b702a82c18be219cc2a73407c4526f58eba950af/ViT-B-32.pt",
+    "ViT-B/16": "https://openaipublic.azureedge.net/clip/models/5806e77cd80f8b59890b7e101eabd078d9fb84e6937f9e85e4ecb61988df416f/ViT-B-16.pt",
+}
+
+device = "cuda" if torch.cuda.is_available() else "cpu"
+model, preprocess = clip.load("ViT-B/32", device=device, jit=False)
+

+# Gradio Section:
+def run_demo(image, text):
+    orig_image = pad_to_square(image)
+    img = preprocess(orig_image).unsqueeze(0).to(device)
+    text_input = clip.tokenize([text]).to(device)
+
+    R_text, R_image = interpret(model=model, image=img, texts=text_input, device=device)
+
+    image_relevance = show_img_heatmap(R_image[0], img, orig_image=orig_image, device=device, show=False)
+    overlapped = overlay_relevance_map_on_image(image, image_relevance)
+
+    text_scores, text_tokens_decoded = show_heatmap_on_text(text, text_input, R_text[0], show=False)
+
+    highlighted_text = []
+    for i, token in enumerate(text_tokens_decoded):
+        highlighted_text.append((str(token), float(text_scores[i])))
+
+    return overlapped, highlighted_text
+
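The last step of `run_demo` turns the decoded tokens and their scores into `(token, score)` pairs for the `"highlight"` output. The loop indexes `text_scores` by position, so it assumes both sequences have the same length. A small sketch of the same pairing with an explicit length check is shown below. `pair_tokens_with_scores` is a hypothetical helper, not part of this commit, and the check is a defensive assumption rather than a fix for a known failure.

```python
def pair_tokens_with_scores(tokens, scores):
    """Build the (token, score) pairs consumed by a highlighted-text output."""
    if len(tokens) != len(scores):
        raise ValueError(
            f"got {len(tokens)} tokens but {len(scores)} scores"
        )
    # Cast explicitly so tensor or NumPy scalars become plain Python floats.
    return [(str(token), float(score)) for token, score in zip(tokens, scores)]


pairs = pair_tokens_with_scores(["two ", "dogs "], [0.25, 0.75])
```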

+input_img = gr.inputs.Image(type='pil', label="Original Image")
+input_txt = "text"
+inputs = [input_img, input_txt]
+
+outputs = [gr.inputs.Image(type='pil', label="Output Image"), "highlight"]
+
+
+iface = gr.Interface(fn=run_demo,
+                     inputs=inputs,
+                     outputs=outputs,
+                     title="CLIP Grounding Explainability",
+                     description="A demonstration based on the Generic Attention-model Explainability method for Interpreting Bi-Modal Transformers by Chefer et al. (2021): https://github.com/hila-chefer/Transformer-MM-Explainability.",
+                     examples=[["example_images/London.png", "London Eye"],
+                               ["example_images/London.png", "Big Ben"],
+                               ["example_images/harrypotter.png", "Harry"],
+                               ["example_images/harrypotter.png", "Hermione"],
+                               ["example_images/harrypotter.png", "Ron"],
+                               ["example_images/Amsterdam.png", "Amsterdam canal"],
+                               ["example_images/Amsterdam.png", "Old buildings"],
+                               ["example_images/Amsterdam.png", "Pink flowers"],
+                               ["example_images/dogs_on_bed.png", "Two dogs"],
+                               ["example_images/dogs_on_bed.png", "Book"],
+                               ["example_images/dogs_on_bed.png", "Cat"]])
+iface.launch(debug=True)

clip_grounding/datasets/png.py

ADDED

@@ -0,0 +1,231 @@

+"""
+Dataset object for Panoptic Narrative Grounding.
+
+Paper: https://openaccess.thecvf.com/content/ICCV2021/papers/Gonzalez_Panoptic_Narrative_Grounding_ICCV_2021_paper.pdf
+"""
+
+import os
+from os.path import join, isdir, exists
+
+import torch
+from torch.utils.data import Dataset
+import cv2
+from PIL import Image
+from skimage import io
+import numpy as np
+import textwrap
+import matplotlib.pyplot as plt
+from matplotlib import transforms
+from imgaug.augmentables.segmaps import SegmentationMapsOnImage
+import matplotlib.colors as mc
+
+from clip_grounding.utils.io import load_json
+from clip_grounding.datasets.png_utils import show_image_and_caption
+
+

+class PNG(Dataset):
+    """Panoptic Narrative Grounding."""
+
+    def __init__(self, dataset_root, split) -> None:
+        """
+        Initializer.
+
+        Args:
+            dataset_root (str): path to the folder containing PNG dataset
+            split (str): MS-COCO split such as train2017/val2017
+        """
+        super().__init__()
+
+        assert isdir(dataset_root)
+        self.dataset_root = dataset_root
+
+        assert split in ["val2017"], f"Split {split} not supported. "\
+            "Currently, only supports split `val2017`."
+        self.split = split
+
+        self.ann_dir = join(self.dataset_root, "annotations")
+        # feat_dir = join(self.dataset_root, "features")
+
+        panoptic = load_json(join(self.ann_dir, "panoptic_{:s}.json".format(split)))
+        images = panoptic["images"]
+        self.images_info = {i["id"]: i for i in images}
+        panoptic_anns = panoptic["annotations"]
+        self.panoptic_anns = {int(a["image_id"]): a for a in panoptic_anns}
+
+        # self.panoptic_pred_path = join(
+        #     feat_dir, split, "panoptic_seg_predictions"
+        # )
+        # assert isdir(self.panoptic_pred_path)
+
+        panoptic_narratives_path = join(self.dataset_root, "annotations", f"png_coco_{split}.json")
+        self.panoptic_narratives = load_json(panoptic_narratives_path)
+
+    def __len__(self):
+        return len(self.panoptic_narratives)
+
+    def get_image_path(self, image_id: str):
+        image_path = join(self.dataset_root, "images", self.split, f"{image_id.zfill(12)}.jpg")
+        return image_path
+

+    def __getitem__(self, idx: int):
+        narr = self.panoptic_narratives[idx]
+
+        image_id = narr["image_id"]
+        image_path = self.get_image_path(image_id)
+        assert exists(image_path)
+
+        image = Image.open(image_path)
+        caption = narr["caption"]
+
+        # show_single_image(image, title=caption, titlesize=12)
+
+        segments = narr["segments"]
+
+        image_id = int(narr["image_id"])
+        panoptic_ann = self.panoptic_anns[image_id]
+        panoptic_ann = self.panoptic_anns[image_id]
+        segment_infos = {}
+        for s in panoptic_ann["segments_info"]:
+            idi = s["id"]
+            segment_infos[idi] = s
+
+        image_info = self.images_info[image_id]
+        panoptic_segm = io.imread(
+            join(
+                self.ann_dir,
+                "panoptic_segmentation",
+                self.split,
+                "{:012d}.png".format(image_id),
+            )
+        )
+        panoptic_segm = (
+            panoptic_segm[:, :, 0]
+            + panoptic_segm[:, :, 1] * 256
+            + panoptic_segm[:, :, 2] * 256 ** 2
+        )
+
+        panoptic_ann = self.panoptic_anns[image_id]
+        # panoptic_pred = io.imread(
+        #     join(self.panoptic_pred_path, "{:012d}.png".format(image_id))
+        # )[:, :, 0]
+
+
+        # # select a single utterance to visualize
+        # segment = segments[7]
+        # segment_ids = segment["segment_ids"]
+        # segment_mask = np.zeros((image_info["height"], image_info["width"]))
+        # for segment_id in segment_ids:
+        #     segment_id = int(segment_id)
+        #     segment_mask[panoptic_segm == segment_id] = 1.
+
+        utterances = [s["utterance"] for s in segments]
+        outputs = []
+        for i, segment in enumerate(segments):
+
+            # create segmentation mask on image
+            segment_ids = segment["segment_ids"]
+
+            # if no annotation for this word, skip
+            if not len(segment_ids):
+                continue
+
+            segment_mask = np.zeros((image_info["height"], image_info["width"]))
+            for segment_id in segment_ids:
+                segment_id = int(segment_id)
+                segment_mask[panoptic_segm == segment_id] = 1.
+
+            # store the outputs
+            text_mask = np.zeros(len(utterances))
+            text_mask[i] = 1.
+            segment_data = dict(
+                image=image,
+                text=utterances,
+                image_mask=segment_mask,
+                text_mask=text_mask,
+                full_caption=caption,
+            )
+            outputs.append(segment_data)
+
+            # # visualize segmentation mask with associated text
+            # segment_color = "red"
+            # segmap = SegmentationMapsOnImage(
+            #     segment_mask.astype(np.uint8), shape=segment_mask.shape,
+            # )
+            # image_with_segmap = segmap.draw_on_image(np.asarray(image), colors=[0, COLORS[segment_color]])[0]
+            # image_with_segmap = Image.fromarray(image_with_segmap)
+
+            # colors = ["black" for _ in range(len(utterances))]
+            # colors[i] = segment_color
+            # show_image_and_caption(image_with_segmap, utterances, colors)
+
+        return outputs
+
+
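`__getitem__` decodes the panoptic annotation PNG with the COCO panoptic convention: each pixel's segment id is `R + 256 * G + 256**2 * B`. It then builds a binary mask from the ids listed for an utterance. The sketch below reproduces both steps on plain Python integers and nested lists. `rgb_to_segment_id`, `segment_id_to_rgb`, and `segment_mask` are illustrative names, not functions from this commit.

```python
def rgb_to_segment_id(r, g, b):
    """Combine three 8-bit channels into one COCO panoptic segment id."""
    return r + 256 * g + 256 ** 2 * b


def segment_id_to_rgb(segment_id):
    """Inverse of rgb_to_segment_id."""
    return (segment_id % 256, (segment_id // 256) % 256, segment_id // 256 ** 2)


def segment_mask(id_map, segment_ids):
    """Return a 0/1 mask marking pixels whose id is in segment_ids.

    segment_ids may hold strings, as in the narrative annotations,
    so each one is converted with int() first.
    """
    wanted = {int(s) for s in segment_ids}
    return [[1.0 if value in wanted else 0.0 for value in row] for row in id_map]


id_map = [[rgb_to_segment_id(10, 2, 1), 0],
          [7, rgb_to_segment_id(10, 2, 1)]]
mask = segment_mask(id_map, ["66058"])
```

One caveat, stated as an assumption about the runtime: the channels read from a PNG are typically 8-bit, so whether `* 256` widens the result or overflows depends on the casting rules of the installed NumPy version. Converting the array to a wider integer type first, for example with `astype(np.int64)`, sidesteps the question.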

+def overlay_segmask_on_image(image, image_mask, segment_color="red"):
+    segmap = SegmentationMapsOnImage(
+        image_mask.astype(np.uint8), shape=image_mask.shape,
+    )
+    rgb_color = mc.to_rgb(segment_color)
+    rgb_color = 255 * np.array(rgb_color)
+    image_with_segmap = segmap.draw_on_image(np.asarray(image), colors=[0, rgb_color])[0]
+    image_with_segmap = Image.fromarray(image_with_segmap)
+    return image_with_segmap
+
+
+def get_text_colors(text, text_mask, segment_color="red"):
+    colors = ["black" for _ in range(len(text))]
+    colors[text_mask.nonzero()[0][0]] = segment_color
+    return colors
+
+

+def overlay_relevance_map_on_image(image, heatmap):
+    width, height = image.size
+
+    # resize the heatmap to image size
+    heatmap = cv2.resize(heatmap, (width, height))
+    heatmap = np.uint8(255 * heatmap)
+    heatmap = cv2.applyColorMap(heatmap, cv2.COLORMAP_JET)
+    heatmap = cv2.cvtColor(heatmap, cv2.COLOR_BGR2RGB)
+
+    # create overlapped super image
+    img = np.asarray(image)
+    super_img = heatmap * 0.4 + img * 0.6
+    super_img = np.uint8(super_img)
+    super_img = Image.fromarray(super_img)
+
+    return super_img
+
+
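The overlay in `overlay_relevance_map_on_image` is a fixed-weight blend: every output channel is 0.4 times the colored heatmap plus 0.6 times the original image, truncated to an 8-bit integer. A per-pixel sketch of that arithmetic in plain Python follows. `blend_pixel` and its `heat_weight` parameter are illustrative assumptions, since the commit hardcodes the two weights.

```python
def blend_pixel(heat_rgb, img_rgb, heat_weight=0.4):
    """Blend one heatmap pixel with one image pixel, channel by channel.

    The weights sum to one, so the result stays within 0..255 whenever
    both inputs do; int() truncates the fractional part.
    """
    img_weight = 1.0 - heat_weight
    return tuple(
        int(h * heat_weight + p * img_weight)
        for h, p in zip(heat_rgb, img_rgb)
    )


half = blend_pixel((255, 0, 0), (1, 0, 255), heat_weight=0.5)
only_heat = blend_pixel((12, 34, 56), (200, 200, 200), heat_weight=1.0)
only_image = blend_pixel((12, 34, 56), (200, 200, 200), heat_weight=0.0)
```

The blend assumes the image has three channels. A grayscale or RGBA input would not line up with the three-channel heatmap, and the commit does not appear to convert for that case.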

+def visualize_item(image, text, image_mask, text_mask, segment_color="red"):
+
+    segmap = SegmentationMapsOnImage(
+        image_mask.astype(np.uint8), shape=image_mask.shape,
+    )
+    rgb_color = mc.to_rgb(segment_color)
+    rgb_color = 255 * np.array(rgb_color)
+    image_with_segmap = segmap.draw_on_image(np.asarray(image), colors=[0, rgb_color])[0]
+    image_with_segmap = Image.fromarray(image_with_segmap)
+
+    colors = ["black" for _ in range(len(text))]
+
+    text_idx = text_mask.argmax()
+    colors[text_idx] = segment_color
+    show_image_and_caption(image_with_segmap, text, colors)
+
+
+
+if __name__ == "__main__":
+    from clip_grounding.utils.paths import REPO_PATH, DATASET_ROOTS
+
+    PNG_ROOT = DATASET_ROOTS["PNG"]
+    dataset = PNG(dataset_root=PNG_ROOT, split="val2017")
+
+    item = dataset[0]
+    sub_item = item[1]
+    visualize_item(
+        image=sub_item["image"],
+        text=sub_item["text"],
+        image_mask=sub_item["image_mask"],
+        text_mask=sub_item["text_mask"],
+        segment_color="red",
+    )

clip_grounding/datasets/png_utils.py

ADDED

@@ -0,0 +1,135 @@

+"""Helper functions for Panoptic Narrative Grounding."""
+
+import os
+from os.path import join, isdir, exists
+from typing import List
+
+import torch
+from PIL import Image
+from skimage import io
+import numpy as np
+import textwrap
+import matplotlib.pyplot as plt
+from matplotlib import transforms
+from imgaug.augmentables.segmaps import SegmentationMapsOnImage
+
+

+def rainbow_text(x,y,ls,lc,fig, ax,**kw):
+    """
+    Take a list of strings ``ls`` and colors ``lc`` and place them next to each
+    other, with text ls[i] being shown in color lc[i].
+
+    Ref: https://stackoverflow.com/questions/9169052/partial-coloring-of-text-in-matplotlib
+    """
+    t = ax.transAxes
+
+    for s,c in zip(ls,lc):
+
+        text = ax.text(x,y,s+" ",color=c, transform=t, **kw)
+        text.draw(fig.canvas.get_renderer())
+        ex = text.get_window_extent()
+        t = transforms.offset_copy(text._transform, x=ex.width, units='dots')
+
+
+def find_first_index_greater_than(elements, key):
+    return next(x[0] for x in enumerate(elements) if x[1] > key)
+
+
+def split_caption_phrases(caption_phrases, colors, max_char_in_a_line=50):
+    char_lengths = np.cumsum([len(x) for x in caption_phrases])
+    thresholds = [max_char_in_a_line * i for i in range(1, 1 + char_lengths[-1] // max_char_in_a_line)]
+
+    utt_per_line = []
+    col_per_line = []
+    start_index = 0
+    for t in thresholds:
+        index = find_first_index_greater_than(char_lengths, t)
+        utt_per_line.append(caption_phrases[start_index:index])
+        col_per_line.append(colors[start_index:index])
+        start_index = index
+
+    return utt_per_line, col_per_line
+
+
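`split_caption_phrases` breaks a caption into display lines by comparing the cumulative phrase length against multiples of `max_char_in_a_line`. As written, a line is appended only when a threshold is crossed, so the phrases after the last threshold, and the whole caption when it is shorter than one line, do not appear to be returned. The sketch below is a different technique, a greedy wrap that flushes the remainder at the end, and is not the commit's method. `wrap_phrases` and `max_chars` are hypothetical names.

```python
def wrap_phrases(phrases, colors, max_chars=50):
    """Greedily group phrases into lines of at most max_chars characters.

    Colors are grouped in parallel so phrase i keeps color i.
    A single phrase longer than max_chars gets a line of its own.
    """
    lines, line_colors = [], []
    current, current_colors, used = [], [], 0
    for phrase, color in zip(phrases, colors):
        # Start a new line when adding this phrase would exceed the limit.
        if current and used + len(phrase) > max_chars:
            lines.append(current)
            line_colors.append(current_colors)
            current, current_colors, used = [], [], 0
        current.append(phrase)
        current_colors.append(color)
        used += len(phrase)
    # Flush whatever is left so the tail of the caption is never dropped.
    if current:
        lines.append(current)
        line_colors.append(current_colors)
    return lines, line_colors


lines, line_colors = wrap_phrases(["aaaa", "bbbb", "cc"], ["r", "g", "b"], max_chars=6)
```

The return shape matches what `rainbow_text` is fed in the plotting helpers: one list of phrases and one list of colors per line.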

+def show_image_and_caption(image: Image, caption_phrases: list, colors: list = None):
+
+    if colors is None:
+        colors = ["black" for _ in range(len(caption_phrases))]
+
+    fig, axes = plt.subplots(1, 2, figsize=(15, 4))
+
+    ax = axes[0]
+    ax.imshow(image)
+    ax.set_xticks([])
+    ax.set_yticks([])
+
+    ax = axes[1]
+    utt_per_line, col_per_line = split_caption_phrases(caption_phrases, colors, max_char_in_a_line=50)
+    y = 0.7
+    for U, C in zip(utt_per_line, col_per_line):
+        rainbow_text(
+            0., y,
+            U,
+            C,
+            size=15, ax=ax, fig=fig,
+            horizontalalignment='left',
+            verticalalignment='center',
+        )
+        y -= 0.11
+
+    ax.axis("off")
+
+    fig.tight_layout()
+    plt.show()
+
+

| 86 |

+

def show_images_and_caption(

|

| 87 |

+

images: List,

|

| 88 |

+

caption_phrases: list,

|

| 89 |

+

colors: list = None,

|

| 90 |

+

image_xlabels: List=[],

|

| 91 |

+

figsize=None,

|

| 92 |

+

show=False,

|

| 93 |

+

xlabelsize=14,

|

| 94 |

+

):

|

| 95 |

+

|

| 96 |

+

if colors is None:

|

| 97 |

+

colors = ["black" for _ in range(len(caption_phrases))]

|

| 98 |

+

caption_phrases[0] = caption_phrases[0].capitalize()

|

| 99 |

+

|

| 100 |

+

if figsize is None:

|

| 101 |

+

figsize = (5 * len(images) + 8, 4)

|

| 102 |

+

|

| 103 |

+

if image_xlabels is None:

|

| 104 |

+

image_xlabels = ["" for _ in range(len(images))]

|

| 105 |

+

|

| 106 |

+

fig, axes = plt.subplots(1, len(images) + 1, figsize=figsize)

|

| 107 |

+

|

| 108 |

+

for i, image in enumerate(images):

|

| 109 |

+

ax = axes[i]

|

| 110 |

+

ax.imshow(image)

|

| 111 |

+

ax.set_xticks([])

|

| 112 |

+

ax.set_yticks([])

|

| 113 |

+

ax.set_xlabel(image_xlabels[i], fontsize=xlabelsize)

|

| 114 |

+

|

| 115 |

+

ax = axes[-1]

|

| 116 |

+

utt_per_line, col_per_line = split_caption_phrases(caption_phrases, colors, max_char_in_a_line=40)

|

| 117 |

+

y = 0.7

|

| 118 |

+

for U, C in zip(utt_per_line, col_per_line):

|

| 119 |

+

rainbow_text(

|

| 120 |

+

0., y,

|

| 121 |

+

U,

|

| 122 |

+

C,

|

| 123 |

+

size=23, ax=ax, fig=fig,

|

| 124 |

+

horizontalalignment='left',

|

| 125 |

+

verticalalignment='center',

|

| 126 |

+

# weight='bold'

|

| 127 |

+

)

|

| 128 |

+

y -= 0.11

|

| 129 |

+

|

| 130 |

+

ax.axis("off")

|

| 131 |

+

|

| 132 |

+

fig.tight_layout()

|

| 133 |

+

|

| 134 |

+

if show:

|

| 135 |

+

plt.show()
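
`show_images_and_caption` is meant to fall back to empty x-axis labels when the caller supplies none. A minimal sketch, separate from the committed code, of how that fallback can be written with a `None` sentinel so that the check fires for the default as well as for an explicitly empty list. `make_labels` is a hypothetical helper introduced only for illustration.

```python
from typing import List, Optional


def make_labels(n_images: int, labels: Optional[List[str]] = None) -> List[str]:
    """Return one x-axis label per image, defaulting to empty strings."""
    # Treat both None and an empty list as "no labels supplied".
    if not labels:
        labels = ["" for _ in range(n_images)]
    # Fail early instead of hitting an IndexError inside the plotting loop.
    if len(labels) != n_images:
        raise ValueError(f"expected {n_images} labels, got {len(labels)}")
    return labels


print(make_labels(3))
print(make_labels(2, ["original", "heatmap"]))
```

A list default such as `labels=[]` never equals `None`, so an `is None` check would be skipped and indexing the empty list would fail on the first image.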

|

clip_grounding/evaluation/clip_on_png.py

ADDED

|

@@ -0,0 +1,362 @@

"""Evaluates cross-modal correspondence of CLIP on PNG images."""

import os
import sys
from os.path import join, exists

import warnings
warnings.filterwarnings('ignore')

from clip_grounding.utils.paths import REPO_PATH
sys.path.append(join(REPO_PATH, "CLIP_explainability/Transformer-MM-Explainability/"))

import torch
import CLIP.clip as clip
from PIL import Image
import numpy as np
import cv2
import matplotlib.pyplot as plt
from captum.attr import visualization
from torchmetrics import JaccardIndex
from collections import defaultdict
from IPython.core.display import display, HTML
from skimage import filters

from CLIP_explainability.utils import interpret, show_img_heatmap, show_txt_heatmap, color, _tokenizer
from clip_grounding.datasets.png import PNG
from clip_grounding.utils.image import pad_to_square
from clip_grounding.utils.visualize import show_grid_of_images
from clip_grounding.utils.log import tqdm_iterator, print_update


# global usage
# specify device
device = "cuda" if torch.cuda.is_available() else "cpu"

# load CLIP model
model, preprocess = clip.load("ViT-B/32", device=device, jit=False)


def show_cam(mask):
    heatmap = cv2.applyColorMap(np.uint8(255 * mask), cv2.COLORMAP_JET)
    heatmap = np.float32(heatmap) / 255
    cam = heatmap
    cam = cam / np.max(cam)
    return cam


def interpret_and_generate(model, img, texts, orig_image, return_outputs=False, show=True):
    text = clip.tokenize(texts).to(device)
    R_text, R_image = interpret(model=model, image=img, texts=text, device=device)
    batch_size = text.shape[0]

    outputs = []
    for i in range(batch_size):
        text_scores, text_tokens_decoded = show_txt_heatmap(texts[i], text[i], R_text[i], show=show)
        image_relevance = show_img_heatmap(R_image[i], img, orig_image=orig_image, device=device, show=show)
        plt.show()
        outputs.append({"text_scores": text_scores, "image_relevance": image_relevance, "tokens_decoded": text_tokens_decoded})

    if return_outputs:
        return outputs


def process_entry_text_to_image(entry, unimodal=False):
    image = entry['image']
    text_mask = entry['text_mask']
    text = entry['text']
    orig_image = pad_to_square(image)

    img = preprocess(orig_image).unsqueeze(0).to(device)
    text_index = text_mask.argmax()
    texts = [text[text_index]] if not unimodal else ['']

    return img, texts, orig_image


def preprocess_ground_truth_mask(mask, resize_shape):
    mask = Image.fromarray(mask.astype(np.uint8) * 255)
    mask = pad_to_square(mask, color=0)
    mask = mask.resize(resize_shape)
    mask = np.asarray(mask) / 255.
    return mask


def apply_otsu_threshold(relevance_map):
    threshold = filters.threshold_otsu(relevance_map)
    otsu_map = (relevance_map > threshold).astype(np.uint8)
    return otsu_map
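
`apply_otsu_threshold` delegates the choice of threshold to `skimage.filters.threshold_otsu` and then binarizes the relevance map. As an illustration of what that step computes, here is a pure-Python sketch of Otsu's method: it histograms the values and picks the split that maximises the between-class variance. The names `otsu_threshold` and `binarize` are hypothetical, and the sketch returns the upper edge of the best bin, so its value may differ slightly from the one scikit-image returns.

```python
from typing import List, Sequence


def otsu_threshold(values: Sequence[float], nbins: int = 256) -> float:
    """Return a threshold that maximises the between-class variance."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo  # constant input: there is nothing to split
    width = (hi - lo) / nbins

    # Histogram of the values, with the maximum clamped into the last bin.
    hist = [0] * nbins
    for v in values:
        hist[min(int((v - lo) / width), nbins - 1)] += 1
    centres = [lo + (i + 0.5) * width for i in range(nbins)]

    total = len(values)
    total_sum = sum(c * h for c, h in zip(centres, hist))
    best_var, best_t = -1.0, lo
    w0, sum0 = 0, 0.0
    for i in range(nbins - 1):
        # Class 0 holds bins 0..i, class 1 holds the remaining bins.
        w0 += hist[i]
        sum0 += centres[i] * hist[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0, mean1 = sum0 / w0, (total_sum - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_t = between, lo + (i + 1) * width
    return best_t


def binarize(values: Sequence[float], threshold: float) -> List[int]:
    """Mark every value strictly above the threshold as foreground (1)."""
    return [1 if v > threshold else 0 for v in values]


scores = [0.05, 0.1, 0.12, 0.08, 0.9, 0.85, 0.95]
print(binarize(scores, otsu_threshold(scores)))
```

Because the threshold is derived from each map's own histogram, a map without a clear high-relevance cluster (for example the uniform noise of the `random` baseline) still gets split into two classes.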


def evaluate_text_to_image(method, dataset, debug=False):

    instance_level_metrics = defaultdict(list)
    entry_level_metrics = defaultdict(list)

    jaccard = JaccardIndex(num_classes=2)
    jaccard = jaccard.to(device)

    num_iter = len(dataset)
    if debug:
        # cap at 100 instances, without indexing past a smaller dataset
        num_iter = min(100, len(dataset))

    iterator = tqdm_iterator(range(num_iter), desc=f"Evaluating on {type(dataset).__name__} dataset")
    for idx in iterator:
        instance = dataset[idx]

        instance_iou = 0.
        for entry in instance:

            # preprocess the image and text
            unimodal = method == "clip-unimodal"
            test_img, test_texts, orig_image = process_entry_text_to_image(entry, unimodal=unimodal)

            if method in ["clip", "clip-unimodal"]:

                # compute the relevance scores
                outputs = interpret_and_generate(model, test_img, test_texts, orig_image, return_outputs=True, show=False)

                # use the image relevance score to compute IoU w.r.t. ground truth segmentation masks

                # NOTE: since we pass a single entry (1-sized batch), outputs[0] contains the required outputs
                relevance_map = outputs[0]["image_relevance"]
            elif method == "random":
                relevance_map = np.random.uniform(low=0., high=1., size=tuple(test_img.shape[2:]))
            else:
                raise ValueError(f"Unknown method: {method}")

            otsu_relevance_map = apply_otsu_threshold(relevance_map)

            ground_truth_mask = entry["image_mask"]
            ground_truth_mask = preprocess_ground_truth_mask(ground_truth_mask, relevance_map.shape)

            entry_iou = jaccard(
                torch.from_numpy(otsu_relevance_map).to(device),
                torch.from_numpy(ground_truth_mask.astype(np.uint8)).to(device),
            )
            entry_iou = entry_iou.item()
            # average over the entries of this instance
            # (len(entry) would count the keys of the entry dict instead)
            instance_iou += (entry_iou / len(instance))

            entry_level_metrics["iou"].append(entry_iou)

        # capture instance (image-sentence pair) level IoU
        instance_level_metrics["iou"].append(instance_iou)

    average_metrics = {k: np.mean(v) for k, v in entry_level_metrics.items()}

    return (
        average_metrics,
        instance_level_metrics,
        entry_level_metrics
    )
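
The loop above scores each entry by comparing the thresholded relevance map with the ground-truth mask. A minimal sketch of a foreground-only intersection-over-union on 0/1 masks, written in plain Python to show the quantity being measured. `binary_iou` is a hypothetical helper; `JaccardIndex(num_classes=2)` may average the IoU of both classes depending on the torchmetrics version, so its value need not equal this foreground-only number.

```python
from typing import Sequence


def binary_iou(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]) -> float:
    """Foreground intersection-over-union of two equally shaped 0/1 masks."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention chosen for this sketch: two empty masks agree perfectly.
    return 1.0 if union == 0 else intersection / union


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
print(binary_iou(pred, target))  # 2 shared pixels out of 4 in the union
```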


def process_entry_image_to_text(entry, unimodal=False):

    if not unimodal:
        if len(np.asarray(entry["image"]).shape) == 3:
            mask = np.repeat(np.expand_dims(entry['image_mask'], -1), 3, axis=-1)
        else:
            mask = np.asarray(entry['image_mask'])

        masked_image = (mask * np.asarray(entry['image'])).astype(np.uint8)
        masked_image = Image.fromarray(masked_image)
        orig_image = pad_to_square(masked_image)
        img = preprocess(orig_image).unsqueeze(0).to(device)
    else:
        orig_image_shape = max(np.asarray(entry['image']).shape[:2])
        orig_image = Image.fromarray(np.zeros((orig_image_shape, orig_image_shape, 3), dtype=np.uint8))
        # orig_image = Image.fromarray(np.random.randint(0, 256, (orig_image_shape, orig_image_shape, 3), dtype=np.uint8))
        img = preprocess(orig_image).unsqueeze(0).to(device)

    texts = [' '.join(entry['text'])]

    return img, texts, orig_image


def process_text_mask(text, text_mask, tokens):

    token_level_mask = np.zeros(len(tokens))

    for label, subtext in zip(text_mask, text):

        subtext_tokens = _tokenizer.encode(subtext)
        subtext_tokens_decoded = [_tokenizer.decode([a]) for a in subtext_tokens]

        if label == 1:
            start = tokens.index(subtext_tokens_decoded[0])
            end = tokens.index(subtext_tokens_decoded[-1])
            token_level_mask[start:end + 1] = 1

    return token_level_mask
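
`process_text_mask` locates the labelled phrase with two independent `tokens.index(...)` calls, each of which returns the first occurrence of a single token. When a token such as "the" occurs more than once in the sentence, the start and end can land in different phrases. The sketch below shows one possible alternative that matches the whole phrase as a contiguous sub-sequence and advances a cursor through the sentence. `find_subsequence` and `token_level_mask` are hypothetical names, and the sketch assumes the phrases appear in order as contiguous runs of the sentence tokens.

```python
from typing import List, Optional, Sequence


def find_subsequence(tokens: Sequence[str], phrase: Sequence[str], start: int = 0) -> Optional[int]:
    """Index of the first occurrence of `phrase` in `tokens` at or after `start`."""
    n, m = len(tokens), len(phrase)
    if m == 0:
        return None
    for i in range(start, n - m + 1):
        if list(tokens[i:i + m]) == list(phrase):
            return i
    return None


def token_level_mask(tokens: Sequence[str],
                     phrases: Sequence[Sequence[str]],
                     labels: Sequence[int]) -> List[int]:
    """Mark the tokens of every phrase whose label is 1."""
    mask = [0] * len(tokens)
    cursor = 0
    for phrase, label in zip(phrases, labels):
        pos = find_subsequence(tokens, phrase, cursor)
        if pos is None:
            continue  # phrase not found: leave the mask untouched
        if label == 1:
            mask[pos:pos + len(phrase)] = [1] * len(phrase)
        cursor = pos + len(phrase)  # later phrases are searched after this one
    return mask


tokens = ["the", "dog", "on", "the", "bed"]
phrases = [["the", "dog"], ["on"], ["the", "bed"]]
labels = [0, 0, 1]
print(token_level_mask(tokens, phrases, labels))
```

With first-occurrence lookups the same input would mark positions 0 through 4, because "the" is first found at index 0.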


def evaluate_image_to_text(method, dataset, debug=False, clamp_sentence_len=70):

    instance_level_metrics = defaultdict(list)
    entry_level_metrics = defaultdict(list)

    # entries are skipped if the text is longer than 77 tokens, which is the CLIP limit
    num_entries_skipped = 0
    num_total_entries = 0

    num_iter = len(dataset)
    if debug:
        # cap at 100 instances, without indexing past a smaller dataset
        num_iter = min(100, len(dataset))

    jaccard_image_to_text = JaccardIndex(num_classes=2).to(device)

    iterator = tqdm_iterator(range(num_iter), desc=f"Evaluating on {type(dataset).__name__} dataset")
    for idx in iterator:
        instance = dataset[idx]