initial commit

- .gitattributes +1 -0

- app.py +22 -0

- args.yaml +107 -0

- data.yaml +25 -0

- detection_pipeline.py +112 -0

- events.out.tfevents.1716932751.3799194233d7.8657.0 +3 -0

- output.jpg +0 -0

- poetry.lock +0 -0

- pyproject.toml +30 -0

- results/F1_curve.png +0 -0

- results/PR_curve.png +0 -0

- results/P_curve.png +0 -0

- results/R_curve.png +0 -0

- results/confusion_matrix.png +0 -0

- results/confusion_matrix_normalized.png +0 -0

- results/labels.jpg +0 -0

- results/labels_correlogram.jpg +0 -0

- results/results.csv +21 -0

- results/results.png +0 -0

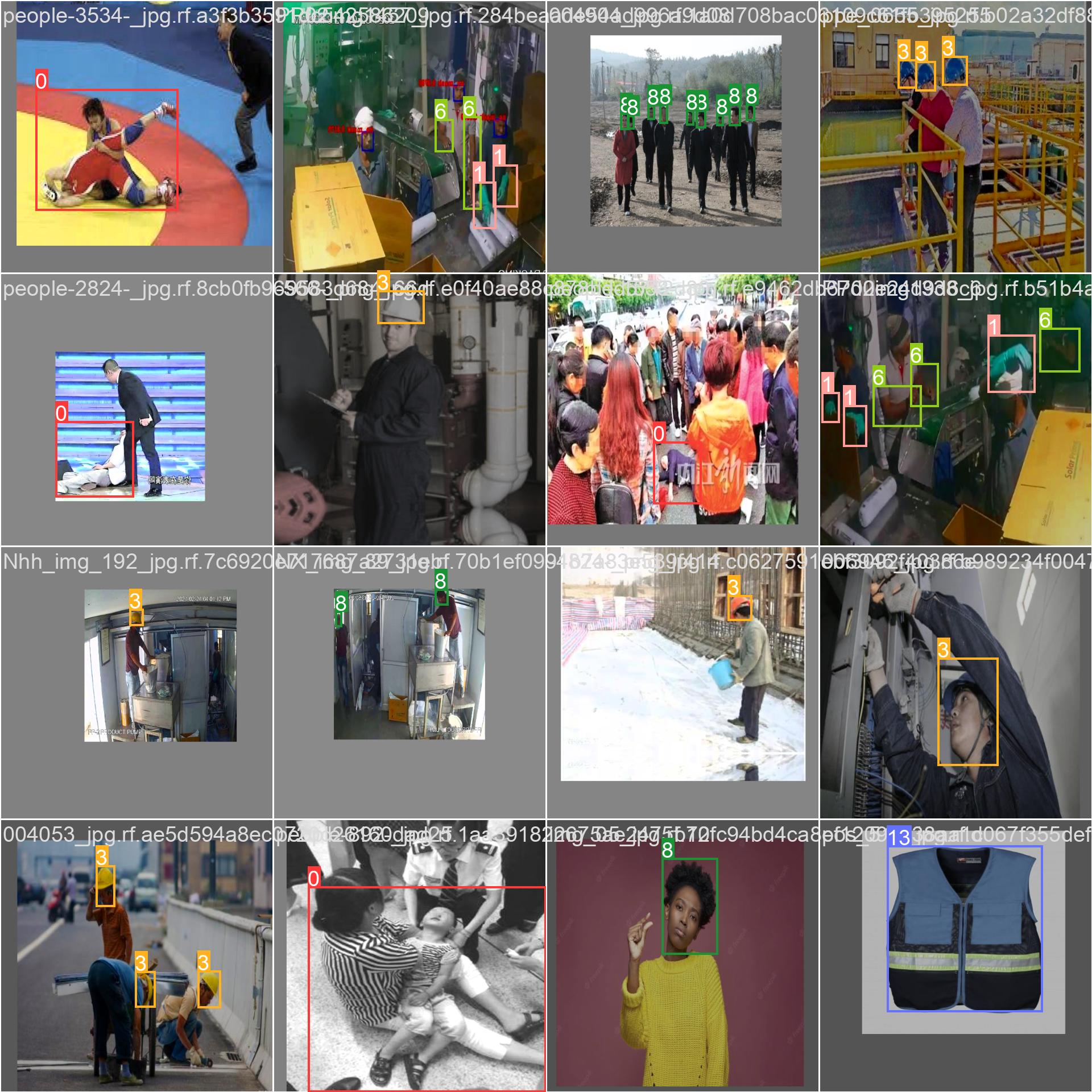

- results/train_batch0.jpg +0 -0

- results/train_batch1.jpg +0 -0

- results/train_batch2.jpg +0 -0

- results/train_batch4810.jpg +0 -0

- results/train_batch4811.jpg +0 -0

- results/train_batch4812.jpg +0 -0

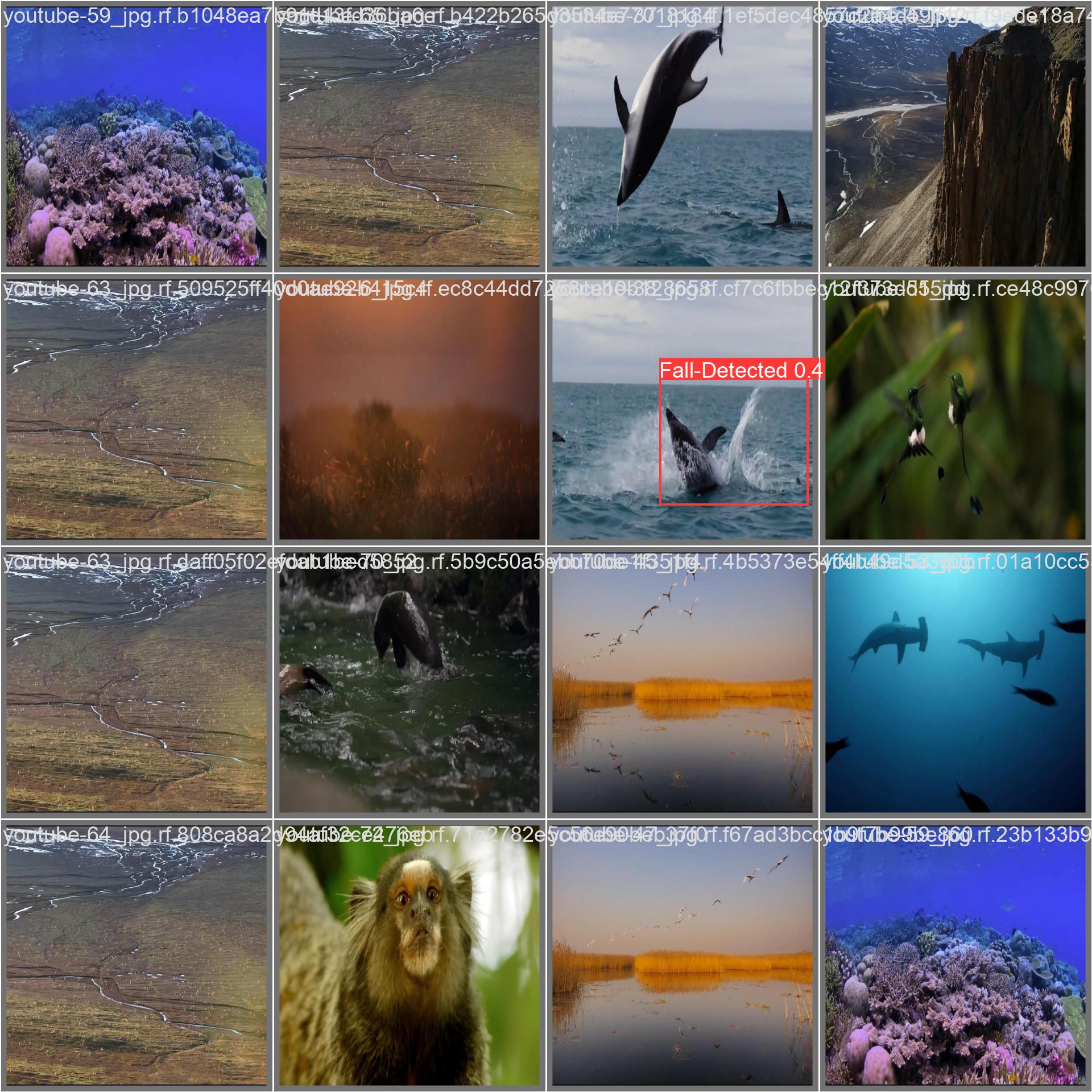

- results/val_batch0_labels.jpg +0 -0

- results/val_batch0_pred.jpg +0 -0

- results/val_batch1_labels.jpg +0 -0

- results/val_batch1_pred.jpg +0 -0

- results/val_batch2_labels.jpg +0 -0

- results/val_batch2_pred.jpg +0 -0

- sample/1.jpg +0 -0

- weights/best.onnx +3 -0

- weights/best.pt +3 -0

- weights/last.pt +3 -0

.gitattributes

CHANGED

    @@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +weights filter=lfs diff=lfs merge=lfs -text

app.py

ADDED

    @@ -0,0 +1,22 @@
    +import gradio as gr
    +
    +from detection_pipeline import DetectionModel
    +
    +model = DetectionModel()
    +
    +def predict(image, threshold):
    +    preds = model(image)
    +    preds = list(filter(lambda x: x[4] > threshold, preds))
    +
    +    output = model.visualize(image, preds)
    +    return output
    +
    +
    +
    +interface = gr.Interface(
    +    fn=predict,
    +    inputs=[gr.Image(label="Input"), gr.Slider(0, 1, .3, step=.05, label="Threshold")],
    +    outputs=gr.Image(label="Output")
    +)
    +
    +interface.launch()
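The `predict` function keeps only detections whose confidence (index 4) exceeds the slider threshold. A minimal standalone sketch of that filtering step, assuming detections are `[x1, y1, x2, y2, confidence, label]` lists as described in `detection_pipeline.py`; the helper name `filter_by_confidence` and the sample values are hypothetical:

```python
def filter_by_confidence(detections, threshold):
    """Keep detections whose confidence (index 4) is strictly above threshold."""
    return [det for det in detections if det[4] > threshold]


# Hypothetical detections: [x1, y1, x2, y2, confidence, label]
sample = [
    [10, 20, 110, 220, 0.91, "Hardhat"],
    [15, 30, 60, 90, 0.30, "Gloves"],
    [200, 40, 260, 120, 0.12, "NO-Mask"],
]

kept = filter_by_confidence(sample, 0.3)
print([det[5] for det in kept])
```

Because the comparison is strict, a detection whose confidence equals the threshold exactly is dropped, which is why the `Gloves` entry does not survive a threshold of 0.3.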

args.yaml

ADDED

    @@ -0,0 +1,107 @@
    +task: detect
    +mode: train
    +model: /content/weights/yolov10n.pt
    +data: /content/data.yaml
    +epochs: 20
    +time: null
    +patience: 100
    +batch: 64
    +imgsz: 640
    +save: true
    +save_period: -1
    +val_period: 1
    +cache: false
    +device: null
    +workers: 8
    +project: null
    +name: train
    +exist_ok: false
    +pretrained: true
    +optimizer: auto
    +verbose: true
    +seed: 0
    +deterministic: true
    +single_cls: false
    +rect: false
    +cos_lr: false
    +close_mosaic: 10
    +resume: false
    +amp: true
    +fraction: 1.0
    +profile: false
    +freeze: null
    +multi_scale: false
    +overlap_mask: true
    +mask_ratio: 4
    +dropout: 0.0
    +val: true
    +split: val
    +save_json: false
    +save_hybrid: false
    +conf: null
    +iou: 0.7
    +max_det: 300
    +half: false
    +dnn: false
    +plots: true
    +source: null
    +vid_stride: 1
    +stream_buffer: false
    +visualize: false
    +augment: false
    +agnostic_nms: false
    +classes: null
    +retina_masks: false
    +embed: null
    +show: false
    +save_frames: false
    +save_txt: false
    +save_conf: false
    +save_crop: false
    +show_labels: true
    +show_conf: true
    +show_boxes: true
    +line_width: null
    +format: torchscript
    +keras: false
    +optimize: false
    +int8: false
    +dynamic: false
    +simplify: false
    +opset: null
    +workspace: 4
    +nms: false
    +lr0: 0.01
    +lrf: 0.01
    +momentum: 0.937
    +weight_decay: 0.0005
    +warmup_epochs: 3.0
    +warmup_momentum: 0.8
    +warmup_bias_lr: 0.1
    +box: 7.5
    +cls: 0.5
    +dfl: 1.5
    +pose: 12.0
    +kobj: 1.0
    +label_smoothing: 0.0
    +nbs: 64
    +hsv_h: 0.015
    +hsv_s: 0.7
    +hsv_v: 0.4
    +degrees: 0.0
    +translate: 0.1
    +scale: 0.5
    +shear: 0.0
    +perspective: 0.0
    +flipud: 0.0
    +fliplr: 0.5
    +bgr: 0.0
    +mosaic: 1.0
    +mixup: 0.0
    +copy_paste: 0.0
    +auto_augment: randaugment
    +erasing: 0.4
    +crop_fraction: 1.0
    +cfg: null
    +tracker: botsort.yaml
    +save_dir: runs/detect/train
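With `cos_lr: false`, `epochs: 20` and `lrf: 0.01`, the learning rate is expected to decay along a straight line rather than a cosine. A rough sketch of such a schedule, assuming the form `base * ((1 - x / epochs) * (1 - lrf) + lrf)`. The base rate 0.000556 does not appear in `args.yaml` (with `optimizer: auto` the trainer may choose its own rate instead of `lr0`); it is inferred here by fitting the `lr/pg0` column of `results/results.csv`. The `epoch - 2` offset is likewise an observation from that log for epochs 5 to 20, not a documented rule, and the rows during and just after the 3 warmup epochs do not follow the same line:

```python
import math

EPOCHS = 20          # from args.yaml
LRF = 0.01           # from args.yaml (final lr as a fraction of the base)
BASE_LR = 0.000556   # assumption: inferred from the logged lr/pg0 values


def linear_lr(x, base=BASE_LR, epochs=EPOCHS, lrf=LRF):
    """Linearly decay from base (x = 0) to base * lrf (x = epochs)."""
    return base * ((1 - x / epochs) * (1 - lrf) + lrf)


# Compare with lr/pg0 logged for epochs 10 and 20 in results/results.csv.
for epoch, logged in [(10, 0.00033582), (20, 6.0604e-05)]:
    predicted = linear_lr(epoch - 2)
    print(epoch, predicted, math.isclose(predicted, logged, rel_tol=1e-4))
```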

data.yaml

ADDED

    @@ -0,0 +1,25 @@
    +names:
    +- Fall-Detected
    +- Gloves
    +- Goggles
    +- Hardhat
    +- Ladder
    +- Mask
    +- NO-Gloves
    +- NO-Goggles
    +- NO-Hardhat
    +- NO-Mask
    +- NO-Safety Vest
    +- Person
    +- Safety Cone
    +- Safety Vest
    +nc: 14
    +roboflow:
    +  license: CC BY 4.0
    +  project: personal-protective-equipment-combined-model
    +  url: https://universe.roboflow.com/roboflow-universe-projects/personal-protective-equipment-combined-model/dataset/4
    +  version: 4
    +  workspace: roboflow-universe-projects
    +test: ../test/images
    +train: Personal-Protective-Equipment---Combined-Model-4/train/images
    +val: Personal-Protective-Equipment---Combined-Model-4/valid/images
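`detection_pipeline.py` turns the `names` list into index-to-label lookups. A small sketch of that mapping using the class list above, hard-coded here so it runs without PyYAML; the constant and variable names are illustrative, and the final check confirms that `nc` matches the number of names:

```python
# Class names copied from data.yaml, in file order.
NAMES = [
    "Fall-Detected", "Gloves", "Goggles", "Hardhat", "Ladder", "Mask",
    "NO-Gloves", "NO-Goggles", "NO-Hardhat", "NO-Mask", "NO-Safety Vest",
    "Person", "Safety Cone", "Safety Vest",
]
NC = 14  # the nc field from data.yaml

# Same comprehension style as DetectionModel._load_labels.
label_to_index = {label: i for i, label in enumerate(NAMES)}
index_to_label = {i: label for i, label in enumerate(NAMES)}

assert len(NAMES) == NC, "nc should equal the number of class names"
print(index_to_label[3], label_to_index["Person"])
```

The class index emitted by the model is only meaningful relative to this order, so reordering `names` without retraining would silently relabel every detection.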

detection_pipeline.py

ADDED

    @@ -0,0 +1,112 @@
    +import onnx
    +import onnxruntime as ort
    +import numpy as np
    +
    +import cv2
    +import yaml
    +
    +import copy
    +
    +class DetectionModel():
    +    def __init__(self, model_path="weights/best.onnx"):
    +        self.current_input = None
    +        self.latest_output = None
    +        self.model = None
    +        self.model_ckpt = model_path
    +
    +        if self.__check_model():
    +            self.model = ort.InferenceSession(self.model_ckpt)
    +        else:
    +            raise Exception("Model couldn't be validated using ONNX, please check the checkpoint")
    +
    +        self._load_labels()
    +
    +    def __check_model(self):
    +        model = onnx.load(self.model_ckpt)
    +        try:
    +            onnx.checker.check_model(model)
    +            return True
    +        except:
    +            return False
    +
    +    def _preprepocess_input(self, image: np.ndarray) -> np.ndarray:
    +        """ Preprocess the input image
    +
    +        Resizes the image to 640x640, transposes the matrix so that it's CxHxW and normalizes the image.
    +        Then the result is converted to `np.float32` and returned with the extra `batch` dimension
    +
    +        Args:
    +            image (np.ndarray): The input image
    +
    +        Returns:
    +            processed_image (np.ndarray): The preprocessed image as 1x3x640x640 `np.float32` array
    +
    +        """
    +        processed_image = copy.deepcopy(image)
    +        processed_image = cv2.resize(processed_image, (640, 640))
    +        processed_image = processed_image.transpose(2, 0, 1)
    +        processed_image = (processed_image / 255.0).astype(np.float32)
    +        processed_image = np.expand_dims(processed_image, axis=0)
    +
    +        return processed_image
    +
    +
    +    def _postprocess_output(self, predictions) -> np.ndarray:
    +        """ Postprocess the output of the model
    +
    +        Args:
    +            predictions (np.ndarray): The output of the model as a `np.ndarray`
    +
    +        Returns:
    +            detections (np.ndarray): The detections as a `np.ndarray` with shape (N, 6) where N is the number of detections.
    +                The columns are as follows: [x1, y1, x2, y2, confidence, class]
    +
    +        """
    +        w_ratio = self.current_input.shape[1] / 640
    +        h_ratio = self.current_input.shape[0] / 640
    +
    +        detections = []
    +        for pred in predictions:
    +            # detections.append([int(pred[0]), int(pred[1]), int(pred[2]), int(pred[3]), pred[4], self.ix2l[pred[5]]])
    +            detections.append([int(pred[0] * w_ratio), int(pred[1] * h_ratio), int(pred[2] * w_ratio), int(pred[3] * h_ratio), pred[4], self.ix2l[pred[5]]])
    +
    +        return list(detections)
    +
    +    def _load_labels(self):
    +        with open("data.yaml", "r") as f:
    +            data = yaml.safe_load(f)
    +
    +        self.labels = data['names']
    +        self.l2ix = {l:i for i, l in enumerate(self.labels)}
    +        self.ix2l = {i:l for i, l in enumerate(self.labels)}
    +
    +    def __call__(self, image: np.ndarray):
    +
    +        processed_image = self._preprepocess_input(image)
    +
    +        self.latest_output = list(self.model.run(None, {"images": processed_image})[0][0])
    +        self.current_input = image
    +
    +        detections = self._postprocess_output(self.latest_output)
    +
    +        return detections
    +
    +    def visualize(self, input_image: np.ndarray, detections: list[list]) -> np.ndarray:
    +        """ Visualizes the detections on the current input image
    +
    +        Args:
    +            detections (list[list]): The detections as a list of lists
    +
    +        Returns:
    +            image (np.ndarray): The image with the detections drawn on it
    +
    +        """
    +        image = copy.deepcopy(input_image)
    +        for det in detections:
    +            x1, y1, x2, y2, conf, label = det
    +            cv2.rectangle(image, (x1, y1), (x2, y2), (0, 255, 0), 2)
    +            cv2.putText(image, f"{label}: {conf:.3f}", (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 0, 0), 2, cv2.LINE_AA)
    +
    +        image = cv2.resize(image, self.current_input.shape[:2][::-1])
    +
    +        return image
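`_postprocess_output` maps box corners from the 640x640 network input back to the original image by scaling x by `width / 640` and y by `height / 640`. A standalone sketch of that arithmetic in plain Python; the function name `rescale_box` and the sample image size are hypothetical:

```python
def rescale_box(box, orig_width, orig_height, input_size=640):
    """Scale [x1, y1, x2, y2] from the resized input back to the original image."""
    w_ratio = orig_width / input_size
    h_ratio = orig_height / input_size
    x1, y1, x2, y2 = box
    return [int(x1 * w_ratio), int(y1 * h_ratio), int(x2 * w_ratio), int(y2 * h_ratio)]


# A box predicted on the 640x640 input, for a hypothetical 1280x720 source image.
print(rescale_box([160.0, 320.0, 480.0, 640.0], orig_width=1280, orig_height=720))
```

The two ratios differ whenever the source image is not square, because the preprocessing step resizes directly to 640x640 without preserving the aspect ratio.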

events.out.tfevents.1716932751.3799194233d7.8657.0

ADDED

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:68b83913446010168055f71af86af8eff75de78eb49059d17819384f96a73829
    +size 19192

output.jpg

ADDED

poetry.lock

ADDED

The diff for this file is too large to render.

pyproject.toml

ADDED

    @@ -0,0 +1,30 @@
    +[tool.poetry]
    +name = "ppe-detector-yolov10"
    +version = "0.1.0"
    +description = ""
    +authors = ["Alper Balbay <[email protected]>"]
    +readme = "README.md"
    +
    +[tool.poetry.dependencies]
    +python = "^3.11"
    +onnxruntime = "^1.18.1"
    +gradio = "^4.37.2"
    +onnx = "^1.16.1"
    +opencv-contrib-python = "^4.10.0.84"
    +pillow = "^10.4.0"
    +supervision = "^0.21.0"
    +ultralytics = "^8.2.48"
    +pyyaml = "^6.0.1"
    +
    +
    +[tool.poetry.group.dev.dependencies]
    +pytest = "^8.2.2"
    +ipython = "^8.26.0"
    +ipykernel = "^6.29.5"
    +jupyter = "^1.0.0"
    +ruff = "^0.5.0"
    +pre-commit = "^3.7.1"
    +
    +[build-system]
    +requires = ["poetry-core"]
    +build-backend = "poetry.core.masonry.api"

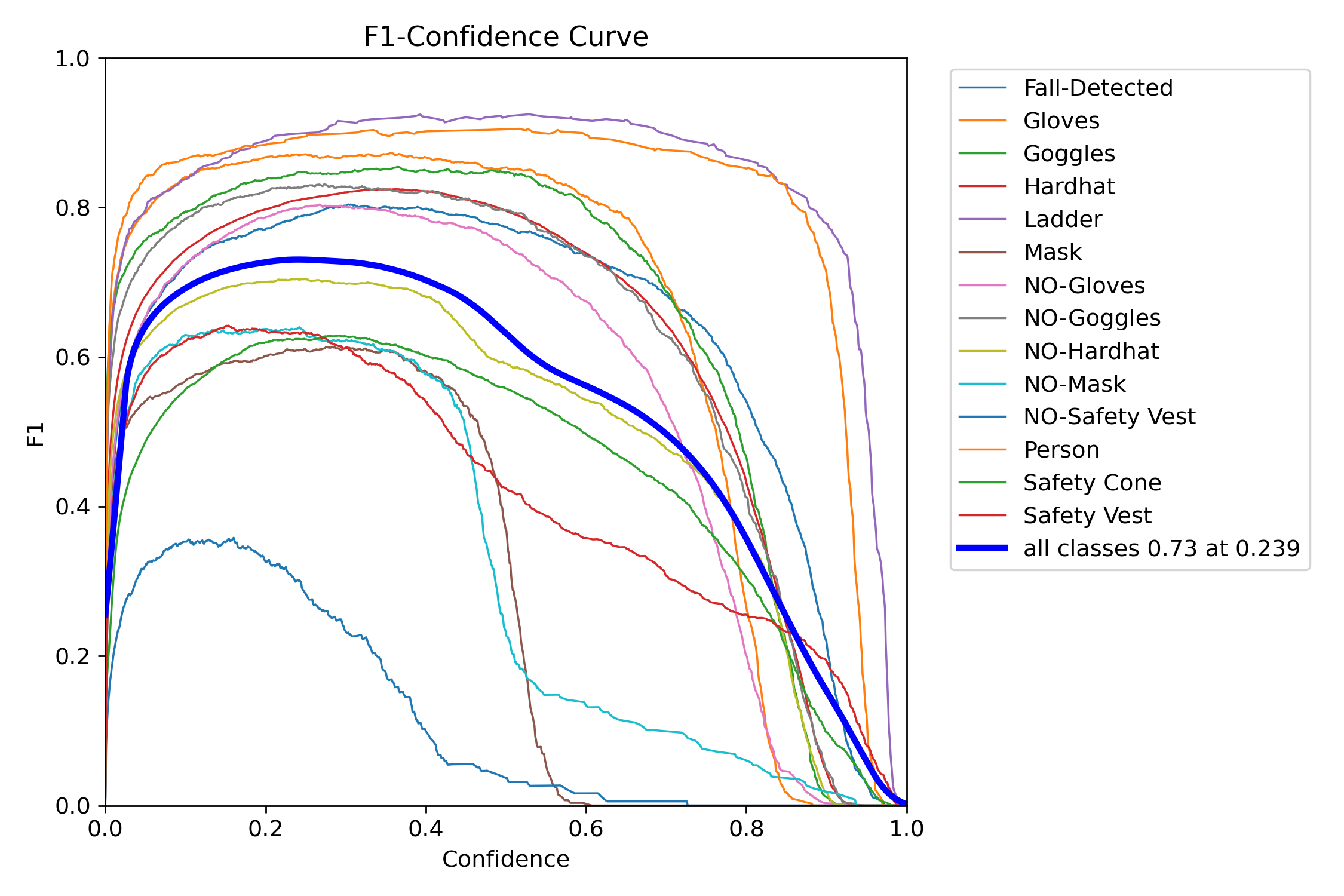

results/F1_curve.png

ADDED

|

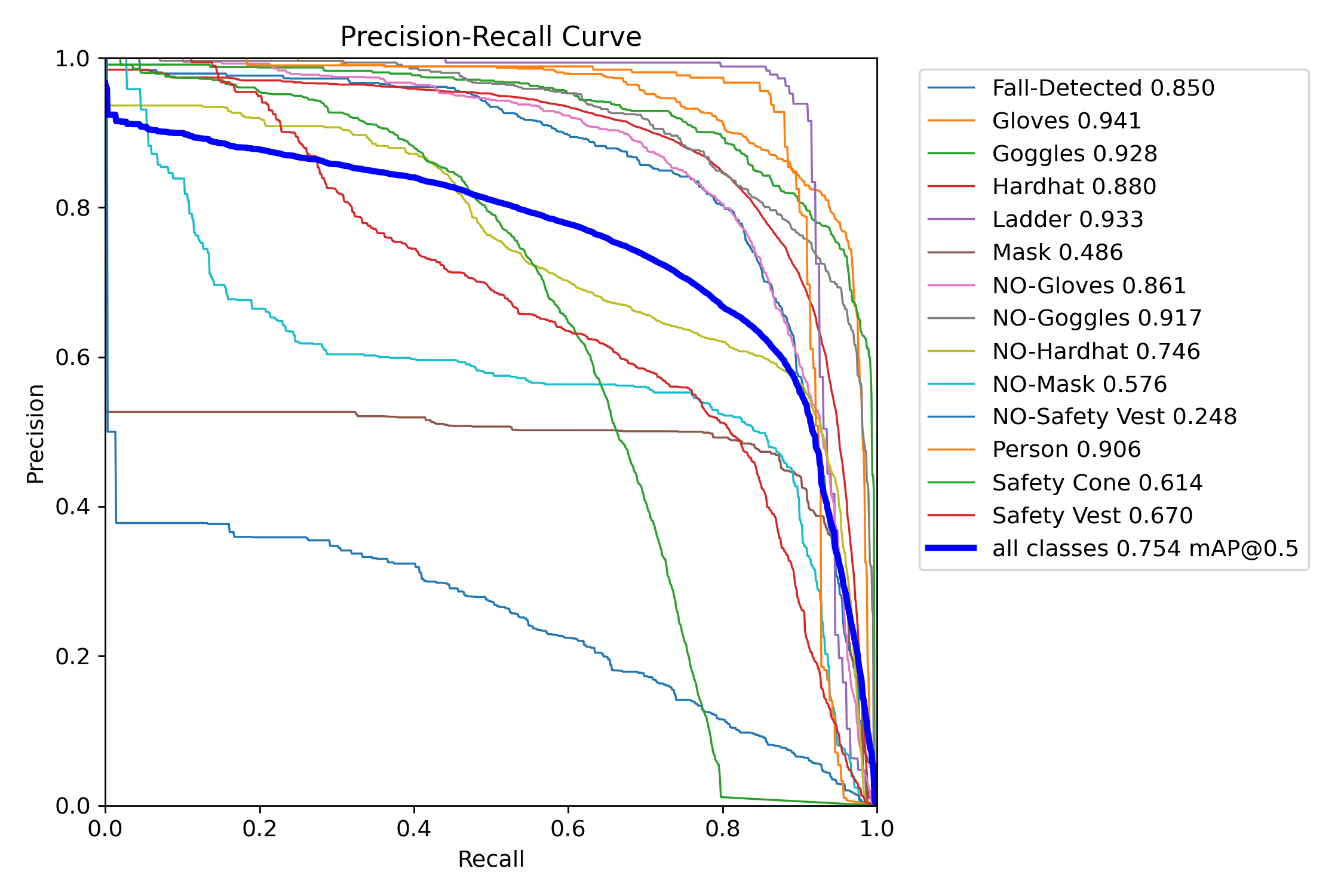

results/PR_curve.png

ADDED

|

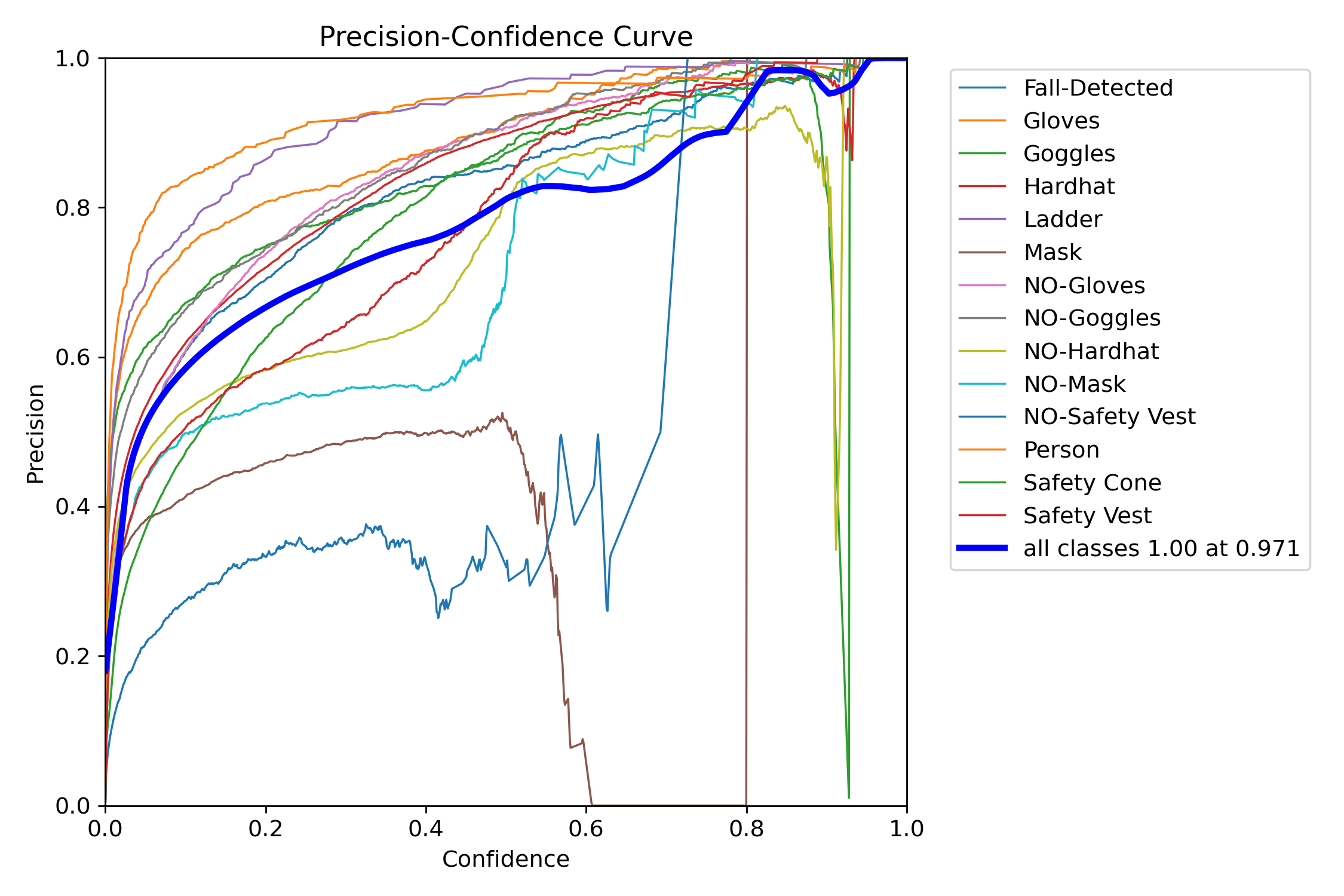

results/P_curve.png

ADDED

|

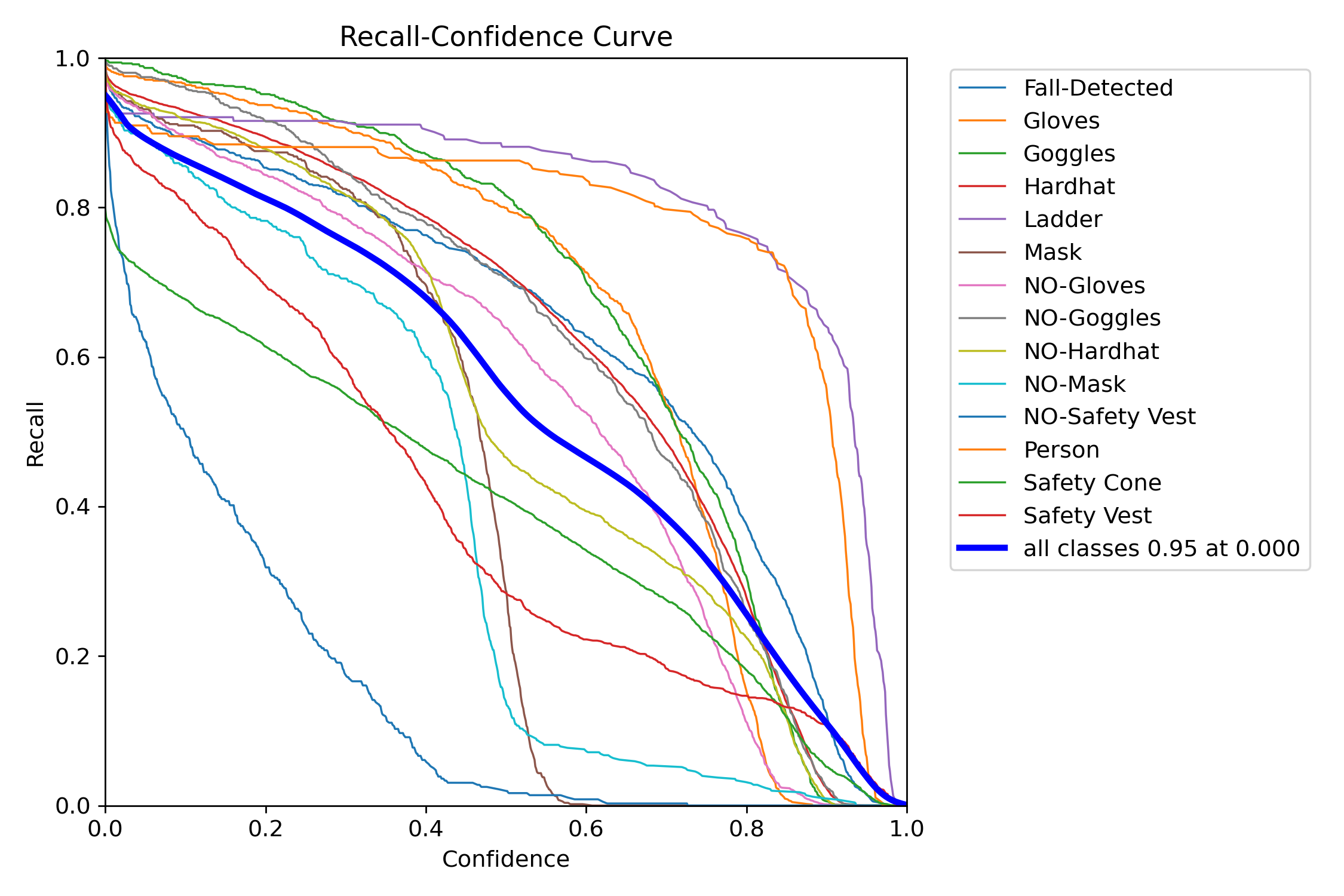

results/R_curve.png

ADDED

|

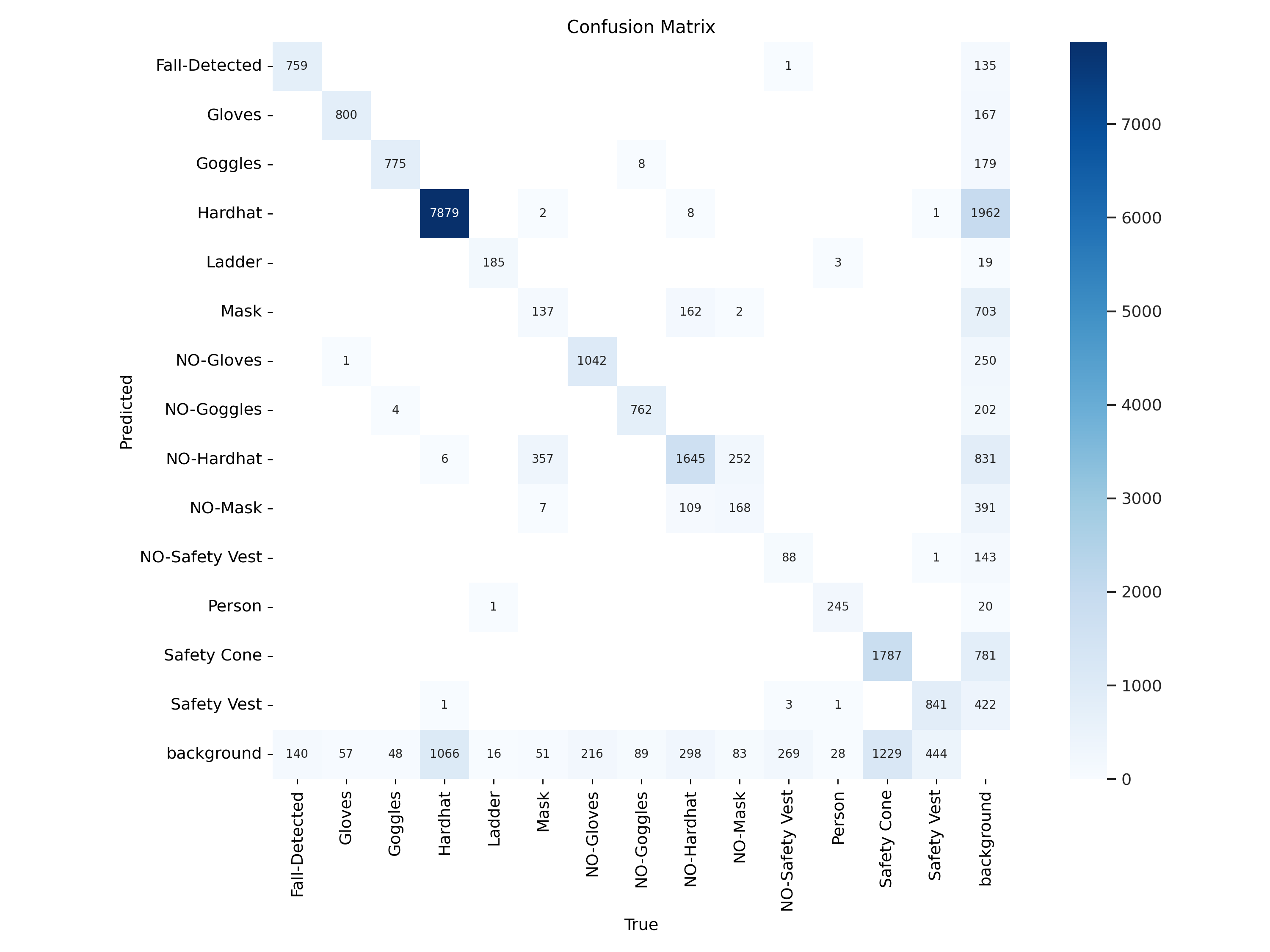

results/confusion_matrix.png

ADDED

|

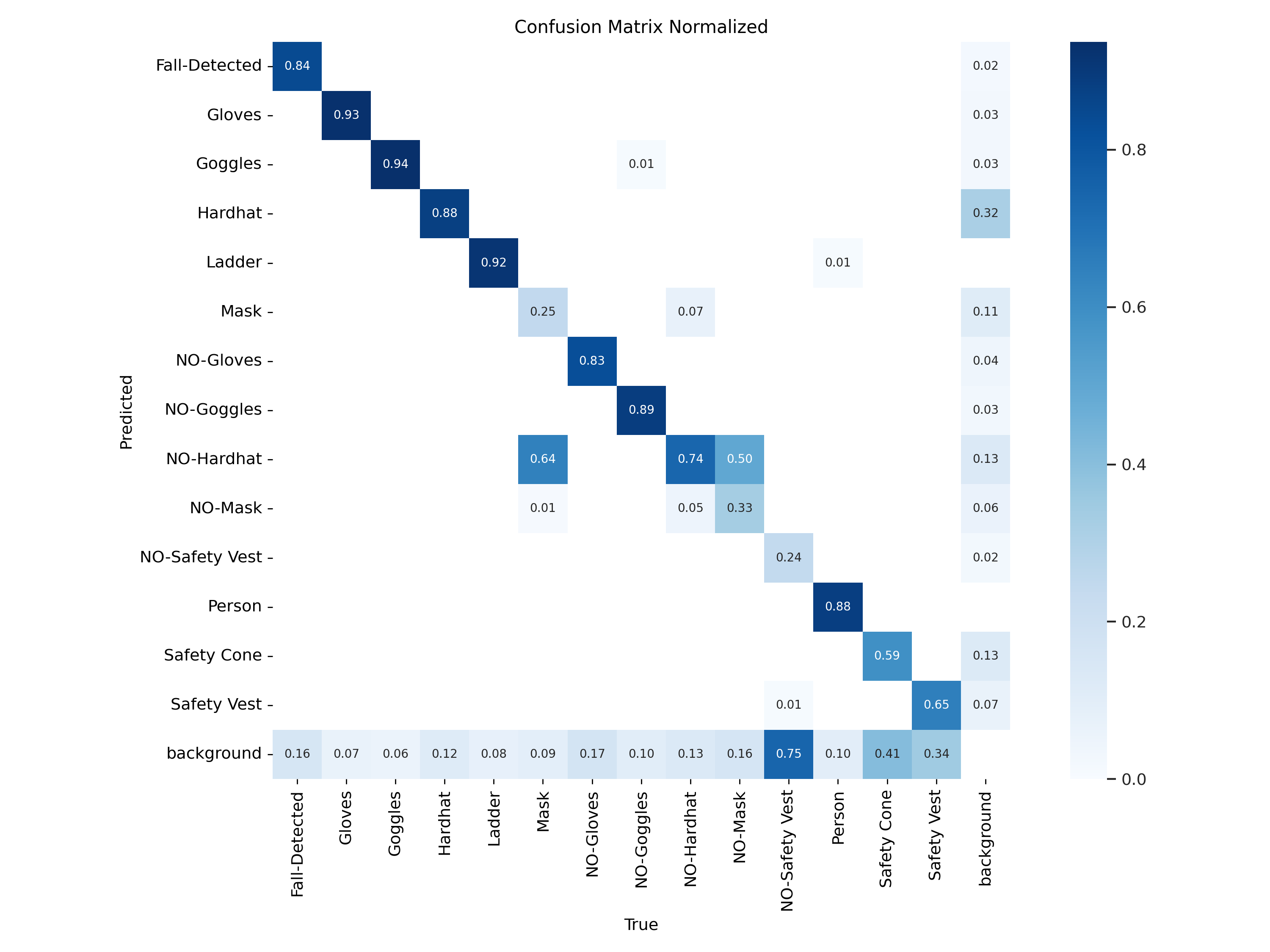

results/confusion_matrix_normalized.png

ADDED

|

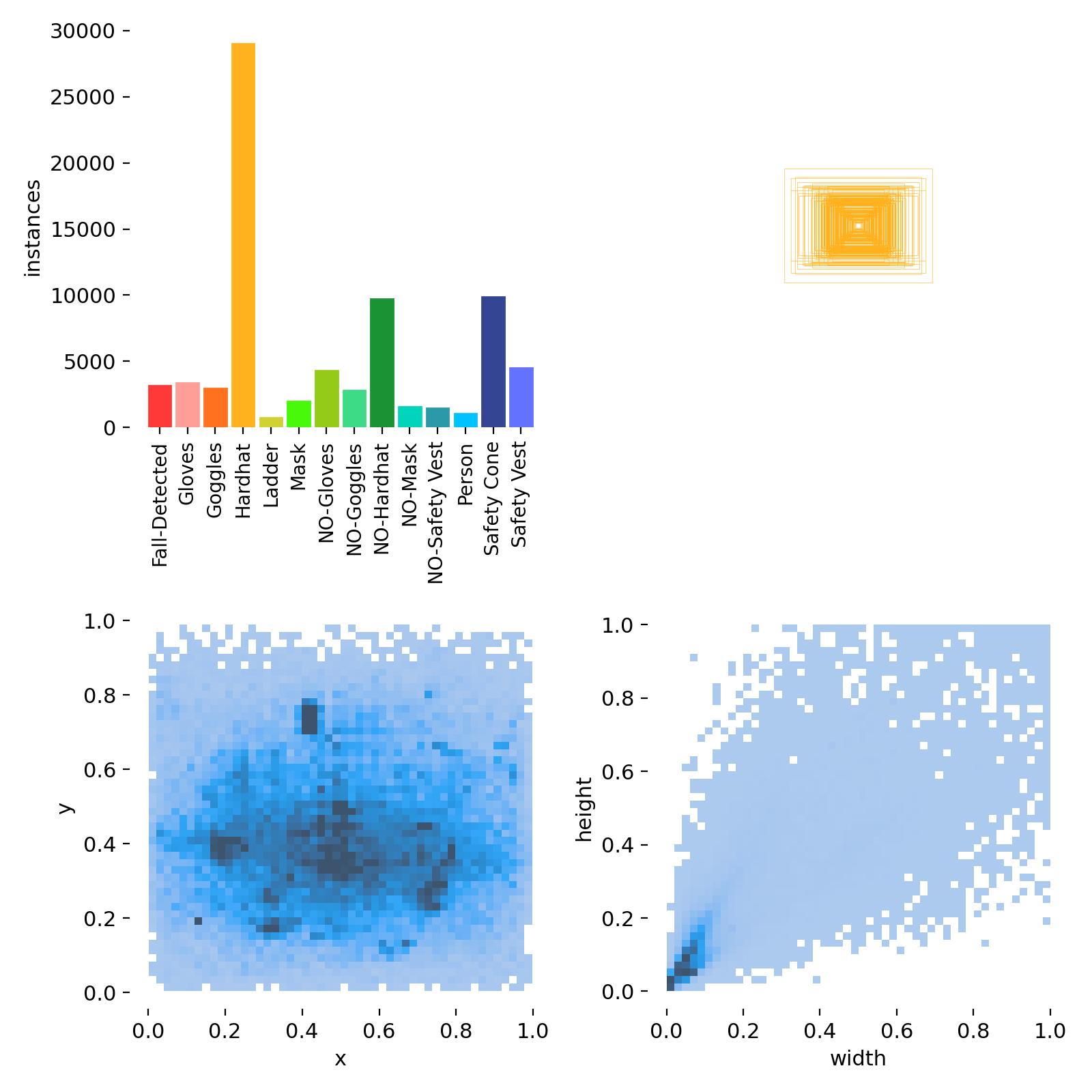

results/labels.jpg

ADDED

|

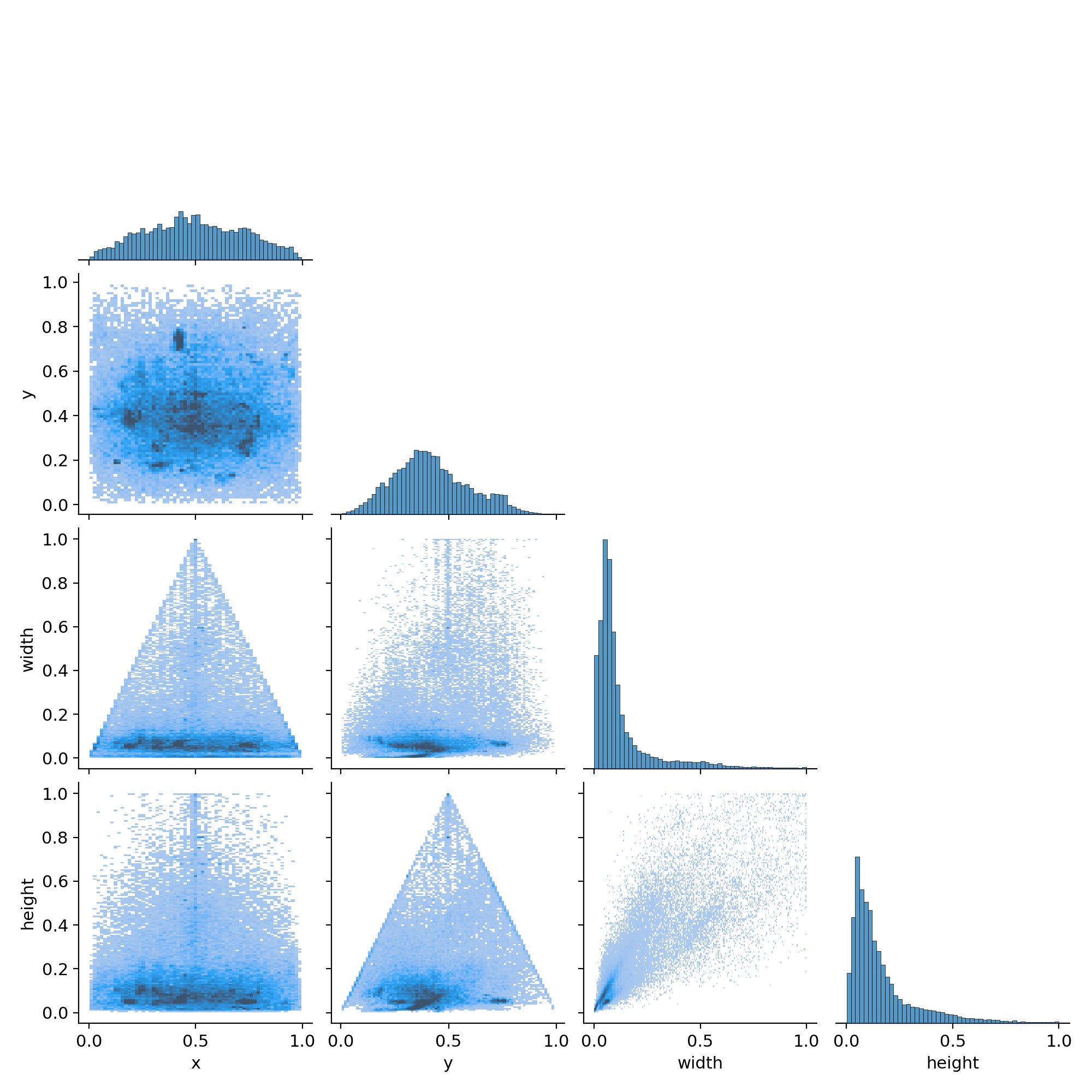

results/labels_correlogram.jpg

ADDED

|

results/results.csv

ADDED

    @@ -0,0 +1,21 @@
    +epoch, train/box_om, train/cls_om, train/dfl_om, train/box_oo, train/cls_oo, train/dfl_oo, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_om, val/cls_om, val/dfl_om, val/box_oo, val/cls_oo, val/dfl_oo, lr/pg0, lr/pg1, lr/pg2
    +1, 1.6175, 2.7954, 1.4461, 1.5534, 5.1155, 1.2799, 0.48128, 0.42329, 0.39035, 0.22623, 1.6007, 2.3219, 1.4647, 1.5281, 5.6513, 1.3244, 0.00018495, 0.00018495, 0.00018495
    +2, 1.5467, 1.791, 1.3574, 1.5459, 2.8324, 1.2449, 0.50376, 0.46524, 0.42232, 0.2272, 1.6174, 1.9051, 1.4696, 1.6003, 2.6558, 1.3655, 0.00035195, 0.00035195, 0.00035195
    +3, 1.5489, 1.5434, 1.3614, 1.5918, 1.9494, 1.2795, 0.56758, 0.5093, 0.49855, 0.26267, 1.611, 1.5642, 1.4715, 1.6232, 1.8324, 1.4041, 0.00050061, 0.00050061, 0.00050061
    +4, 1.5166, 1.4101, 1.3394, 1.5802, 1.7031, 1.2834, 0.62729, 0.54169, 0.53358, 0.292, 1.6274, 1.4076, 1.5022, 1.6425, 1.6105, 1.4478, 0.00047343, 0.00047343, 0.00047343
    +5, 1.4889, 1.3234, 1.3191, 1.5672, 1.57, 1.2735, 0.62211, 0.59476, 0.6077, 0.34404, 1.5323, 1.262, 1.4028, 1.5889, 1.3627, 1.3654, 0.00047343, 0.00047343, 0.00047343
    +6, 1.4626, 1.2463, 1.2983, 1.55, 1.4592, 1.2602, 0.66122, 0.61622, 0.62095, 0.36023, 1.513, 1.2064, 1.3778, 1.5598, 1.3171, 1.3557, 0.00044591, 0.00044591, 0.00044591
    +7, 1.4298, 1.1899, 1.2781, 1.5266, 1.3888, 1.2485, 0.61603, 0.66624, 0.64983, 0.38158, 1.4564, 1.0936, 1.3251, 1.51, 1.1819, 1.3098, 0.00041839, 0.00041839, 0.00041839
    +8, 1.4129, 1.1561, 1.2676, 1.5104, 1.3392, 1.2407, 0.61885, 0.66341, 0.6509, 0.3745, 1.5002, 1.099, 1.3369, 1.5551, 1.1736, 1.3251, 0.00039087, 0.00039087, 0.00039087
    +9, 1.3956, 1.1182, 1.2551, 1.4974, 1.2879, 1.2325, 0.65077, 0.69959, 0.68473, 0.41182, 1.4222, 1.0129, 1.2994, 1.48, 1.0704, 1.2851, 0.00036335, 0.00036335, 0.00036335
    +10, 1.3789, 1.0854, 1.242, 1.4823, 1.2438, 1.2192, 0.64959, 0.70791, 0.69474, 0.42239, 1.4016, 0.98037, 1.2826, 1.4588, 1.0296, 1.273, 0.00033582, 0.00033582, 0.00033582
    +11, 1.3798, 0.97678, 1.2634, 1.4729, 1.0571, 1.2425, 0.66872, 0.72864, 0.70739, 0.435, 1.381, 0.95833, 1.2755, 1.4412, 0.99441, 1.2626, 0.0003083, 0.0003083, 0.0003083
    +12, 1.3564, 0.94245, 1.2491, 1.4509, 1.0138, 1.2256, 0.66667, 0.74568, 0.72413, 0.44586, 1.3702, 0.91433, 1.2578, 1.441, 0.94525, 1.2481, 0.00028078, 0.00028078, 0.00028078
    +13, 1.3397, 0.91353, 1.2365, 1.433, 0.98027, 1.2159, 0.66883, 0.76455, 0.73376, 0.45962, 1.349, 0.88796, 1.2533, 1.4167, 0.92008, 1.2452, 0.00025326, 0.00025326, 0.00025326
    +14, 1.3208, 0.88717, 1.2241, 1.4155, 0.95617, 1.2, 0.66917, 0.77092, 0.73769, 0.46454, 1.3385, 0.87, 1.2441, 1.4019, 0.89797, 1.2303, 0.00022574, 0.00022574, 0.00022574
    +15, 1.3062, 0.86752, 1.2179, 1.3984, 0.92803, 1.1968, 0.66989, 0.77004, 0.74171, 0.46884, 1.3253, 0.85879, 1.2346, 1.3883, 0.88954, 1.2262, 0.00019821, 0.00019821, 0.00019821
    +16, 1.2904, 0.84195, 1.2058, 1.3888, 0.89869, 1.1847, 0.68683, 0.77113, 0.74443, 0.47411, 1.3159, 0.83833, 1.2319, 1.3847, 0.86035, 1.2219, 0.00017069, 0.00017069, 0.00017069
    +17, 1.2762, 0.82295, 1.2, 1.3719, 0.87611, 1.1774, 0.69727, 0.77272, 0.74916, 0.47828, 1.3081, 0.82849, 1.2272, 1.373, 0.84983, 1.2148, 0.00014317, 0.00014317, 0.00014317
    +18, 1.2618, 0.80402, 1.1896, 1.3544, 0.8532, 1.1675, 0.69688, 0.78053, 0.75226, 0.48302, 1.3011, 0.81901, 1.2186, 1.3651, 0.84, 1.2065, 0.00011565, 0.00011565, 0.00011565
    +19, 1.2466, 0.7869, 1.1784, 1.3404, 0.8356, 1.1586, 0.69074, 0.78705, 0.75501, 0.48633, 1.2951, 0.80962, 1.2114, 1.3592, 0.83344, 1.2037, 8.8126e-05, 8.8126e-05, 8.8126e-05
    +20, 1.2327, 0.76884, 1.1722, 1.3244, 0.81734, 1.1528, 0.69436, 0.7831, 0.75406, 0.48651, 1.2919, 0.80526, 1.2104, 1.3553, 0.82762, 1.2005, 6.0604e-05, 6.0604e-05, 6.0604e-05
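The log can be scanned for the best epoch with the standard `csv` module. A sketch using the last three rows copied from the file and only the columns needed; `skipinitialspace=True` handles the space after each comma, and the helper name `best_epoch` is illustrative:

```python
import csv
import io

# Three rows excerpted from results/results.csv, reduced to four columns.
LOG = """epoch, metrics/precision(B), metrics/mAP50(B), metrics/mAP50-95(B)
18, 0.69688, 0.75226, 0.48302
19, 0.69074, 0.75501, 0.48633
20, 0.69436, 0.75406, 0.48651
"""

rows = list(csv.DictReader(io.StringIO(LOG), skipinitialspace=True))


def best_epoch(rows, metric):
    """Return the epoch number whose value for metric is highest."""
    best = max(rows, key=lambda row: float(row[metric]))
    return int(best["epoch"])


print(best_epoch(rows, "metrics/mAP50(B)"), best_epoch(rows, "metrics/mAP50-95(B)"))
```

In this log the two metrics peak at different epochs: mAP50 is highest at epoch 19 and mAP50-95 at epoch 20, so which epoch counts as best depends on the metric chosen.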

results/results.png

ADDED

results/train_batch0.jpg

ADDED

results/train_batch1.jpg

ADDED

results/train_batch2.jpg

ADDED

results/train_batch4810.jpg

ADDED

results/train_batch4811.jpg

ADDED

results/train_batch4812.jpg

ADDED

results/val_batch0_labels.jpg

ADDED

results/val_batch0_pred.jpg

ADDED

results/val_batch1_labels.jpg

ADDED

results/val_batch1_pred.jpg

ADDED

results/val_batch2_labels.jpg

ADDED

results/val_batch2_pred.jpg

ADDED

sample/1.jpg

ADDED

weights/best.onnx

ADDED

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:cfdf167b393544b058f0d4bc74c772c209c98961e1bf234b4e11c228f50f4269
    +size 9260104

weights/best.pt

ADDED

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:48301bdc12155643eef9cc044d46457a2441d672d3633d138ce1ffa0267b9253
    +size 5764580

weights/last.pt

ADDED

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:1d4b7d938465330d47c1fa5b4f6f50b599be613f9c2737a90ed7f93e58629058
    +size 5764580
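Each weight file is committed as a three-line Git LFS pointer (`version`, `oid`, `size`) rather than the binary itself. A minimal sketch of parsing such a pointer and checking a blob against it with `hashlib`, using the `weights/best.onnx` pointer from this commit; the helper names are hypothetical and the blob in the last line is a stand-in, not the real model:

```python
import hashlib

POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:cfdf167b393544b058f0d4bc74c772c209c98961e1bf234b4e11c228f50f4269
size 9260104
"""


def parse_pointer(text):
    """Split each 'key value' line of an LFS pointer into a dict."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {"version": fields["version"], "algo": algo,
            "digest": digest, "size": int(fields["size"])}


def matches_pointer(blob, pointer):
    """True if blob has the size and SHA-256 digest recorded in the pointer."""
    return (len(blob) == pointer["size"]
            and hashlib.sha256(blob).hexdigest() == pointer["digest"])


pointer = parse_pointer(POINTER)
print(pointer["algo"], pointer["size"])
print(matches_pointer(b"not the real weights", pointer))
```

Checking the size first is cheap and rejects most mismatches before any hashing is done.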