Commit 4a75cb7

Parent(s): be09297

Upload 19 files

- FRCNN_MODEL_3Classes_100Epochs.pth +3 -0

- Training_jupyterFile.ipynb +0 -0

- app.py +133 -0

- img1.jpg +0 -0

- img10.jpg +0 -0

- img11.jpg +0 -0

- img12.jpg +0 -0

- img13.jpg +0 -0

- img14.jpg +0 -0

- img15.jpg +0 -0

- img16.jpg +0 -0

- img2.jpg +0 -0

- img3.jpg +0 -0

- img4.jpg +0 -0

- img5.jpg +0 -0

- img6.jpg +0 -0

- img8.jpg +0 -0

- img9.jpg +0 -0

- requirements.txt +11 -0

FRCNN_MODEL_3Classes_100Epochs.pth

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ea6c7bed92de7ef93e4c81ce566b87d246172fb3a643154580899b5031944e06
size 76062139
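The three lines above are a Git LFS pointer, not the weights themselves: Git stores this small text file, and the actual checkpoint (76,062,139 bytes, about 76 MB) lives in LFS storage, addressed by its SHA-256 `oid`. A minimal sketch of reading such a pointer and checking a downloaded file against it, assuming only the one-`key value`-per-line format shown here; `parse_lfs_pointer` and `matches_pointer` are hypothetical helper names, not part of Git LFS or of this repository:

```python
import hashlib
from pathlib import Path


def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer (one `key value` pair per line) into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid is written as "<hash algorithm>:<hex digest>"
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),  # size of the real file, in bytes
    }


def matches_pointer(path: Path, pointer: dict) -> bool:
    """Check whether a local file has the size and SHA-256 recorded in the pointer."""
    if path.stat().st_size != pointer["size"]:
        return False
    h = hashlib.sha256()
    with path.open("rb") as fh:
        # Hash in 1 MiB chunks so a large checkpoint need not fit in memory
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest() == pointer["oid"]


POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:ea6c7bed92de7ef93e4c81ce566b87d246172fb3a643154580899b5031944e06
size 76062139
"""

pointer = parse_lfs_pointer(POINTER)
print(pointer["size"])  # 76062139
```

If a clone was made without Git LFS installed, `FRCNN_MODEL_3Classes_100Epochs.pth` on disk is just this pointer text and `torch.load` in `app.py` will fail to deserialize it; `matches_pointer(Path("FRCNN_MODEL_3Classes_100Epochs.pth"), pointer)` is one way to check before starting the app.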

Training_jupyterFile.ipynb

ADDED

The diff for this file is too large to render.

app.py

ADDED

@@ -0,0 +1,133 @@

import streamlit as st
import torch
import torchvision
import torchvision.transforms as transforms
from torchvision import datasets, models
from torchvision.transforms import functional as FT
from torchvision import transforms as T
from torch import nn, optim
from torch.nn import functional as F
from torch.utils.data import DataLoader, sampler, random_split, Dataset
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
from torchvision.transforms import ToTensor
from PIL import Image, ImageDraw
from pycocotools.coco import COCO
import cv2
import numpy as np
import pandas as pd
import os


import tempfile
from tempfile import NamedTemporaryFile

dataset_path = "Dataset"

# load classes
coco = COCO(os.path.join(dataset_path, "train", "_annotations.coco.json"))
categories = coco.cats
n_classes = len(categories.keys())

# load the faster rcnn model
modeltest = models.detection.fasterrcnn_mobilenet_v3_large_fpn(num_classes=4)
in_features = modeltest.roi_heads.box_predictor.cls_score.in_features # we need to change the head
modeltest.roi_heads.box_predictor = models.detection.faster_rcnn.FastRCNNPredictor(in_features, n_classes)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Load the saved parameters into the model (map_location lets a GPU-trained checkpoint load on a CPU-only host)
modeltest.load_state_dict(torch.load("FRCNN_MODEL_3Classes_100Epochs.pth", map_location=device))
modeltest.to(device)

# Class names, indexed by the label ids the model predicts
classes = ['pole', 'cross_arm', 'pole', 'tag']

st.title(""" Object Detection Using Faster-RCNN For Electrical Domain """)

# st.subheader("Prediction of Object Detection")

images = ["img16.jpg","img1.jpg","img2.jpg","img3.jpg","img4.jpg","img5.jpg","img6.jpg","img8.jpg",
          "img10.jpg","img11.jpg","img12.jpg","img13.jpg","img14.jpg","img15.jpg","img9.jpg"]

with st.sidebar:
    st.write("Choose an Image from Sample Images ")
    st.image(images)

# with st.sidebar:
#     st.write("Choose an Image From The DropDown")
#     selected_image = st.selectbox("Select an image", images)


# with st.sidebar:
#     st.write("Choose an Image")
#     for image in images:
#         with Image.open(image) as img:
#             st.image(img, width=100, quality=90) # quality parameter is not there in image, it will give error

# with st.sidebar:
#     st.write("Choose an Image")
#     st.image(images,width=100)


# define the function to perform object detection on an image
def detect_objects(image_path):
    # load the image
    image = Image.open(image_path).convert('RGB')

    # convert the image to a tensor
    image_tensor = ToTensor()(image).to(device)

    # run the image through the model to get the predictions
    modeltest.eval()
    with torch.no_grad():
        predictions = modeltest([image_tensor])

    # filter out the predictions below the threshold
    threshold = 0.5
    scores = predictions[0]['scores'].cpu().numpy()
    boxes = predictions[0]['boxes'].cpu().numpy()
    labels = predictions[0]['labels'].cpu().numpy()
    mask = scores > threshold
    scores = scores[mask]
    boxes = boxes[mask]
    labels = labels[mask]

    # create a new image with the predicted objects outlined in rectangles
    draw = ImageDraw.Draw(image)
    for box, label in zip(boxes, labels):

        # draw the rectangle around the object
        draw.rectangle([(box[0], box[1]), (box[2], box[3])], outline='red')

        # write the object class at the top-left corner of the rectangle
        class_name = classes[label]
        draw.text((box[0], box[1]), class_name, fill='yellow')

    # show the image
    st.write("Objects detected in the image are: ")
    st.image(image, use_column_width=True)
    # st.image.show()

file = st.file_uploader('Upload an Image', type=(["jpeg", "jpg", "png"]))


if file is None:
    st.write("Please upload an image file")
else:
    image = Image.open(file)
    st.write("Input Image")
    st.image(image, use_column_width=True)
    with NamedTemporaryFile(dir='.', suffix='.') as f:
        f.write(file.getbuffer())
        f.flush()  # make sure the bytes are on disk before detect_objects reopens the file by name
        detect_objects(f.name)

st.subheader("Model Description : ")
st.write(""" The Faster R-CNN model with MobileNet V3 Large as the backbone and Feature Pyramid Network (FPN) architecture is a popular
object detection model that combines high detection accuracy with efficient computation. The MobileNet V3 Large backbone
is a lightweight neural network architecture that reduces the number of parameters while maintaining high accuracy,
making it suitable for mobile and embedded devices. The FPN architecture enhances the feature representation of the model
by aggregating features from multiple scales and improving spatial resolution. This combination of a lightweight backbone
with an efficient feature extraction architecture makes Faster R-CNN with MobileNet V3 Large FPN a popular choice for
object detection in real-time applications and on devices with limited computational resources.
""")
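`detect_objects` keeps only predictions whose score is strictly above 0.5 and turns each label id into a name with `classes[label]`. torchvision's detection models reserve label 0 for the background, so if `n_classes` read from the annotation file is 4 (three object classes plus background, as the checkpoint name suggests), predicted ids run from 1 to 3 (`cross_arm`, `pole`, `tag`) and the `'pole'` at index 0 is never used; that it stands for a dataset-level category placed at id 0 by the annotation export is an assumption. The same filtering and naming step as a torch-free sketch; `filter_detections` is a hypothetical helper, and the scores and boxes are made-up values for illustration only:

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def filter_detections(
    scores: Sequence[float],
    boxes: Sequence[Box],
    labels: Sequence[int],
    class_names: Sequence[str],
    threshold: float = 0.5,
) -> List[Tuple[str, float, Box]]:
    """Keep detections scoring strictly above `threshold` and name their labels.

    Mirrors `mask = scores > threshold` in detect_objects; label ids index
    directly into `class_names`, as `classes[label]` does in app.py.
    """
    kept = []
    for score, box, label in zip(scores, boxes, labels):
        if score > threshold:
            kept.append((class_names[label], score, box))
    return kept


classes = ['pole', 'cross_arm', 'pole', 'tag']  # same list as app.py

# Made-up model output, for illustration only
scores = [0.92, 0.41, 0.77]
boxes = [(10, 20, 110, 400), (5, 5, 50, 50), (60, 30, 200, 80)]
labels = [2, 3, 1]

for name, score, box in filter_detections(scores, boxes, labels, classes):
    print(f"{name}: {score:.2f} at {box}")
# pole: 0.92 at (10, 20, 110, 400)
# cross_arm: 0.77 at (60, 30, 200, 80)
```

Because the comparison is strict, a detection scoring exactly 0.5 is dropped, which matches the behavior of the mask in `app.py`.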

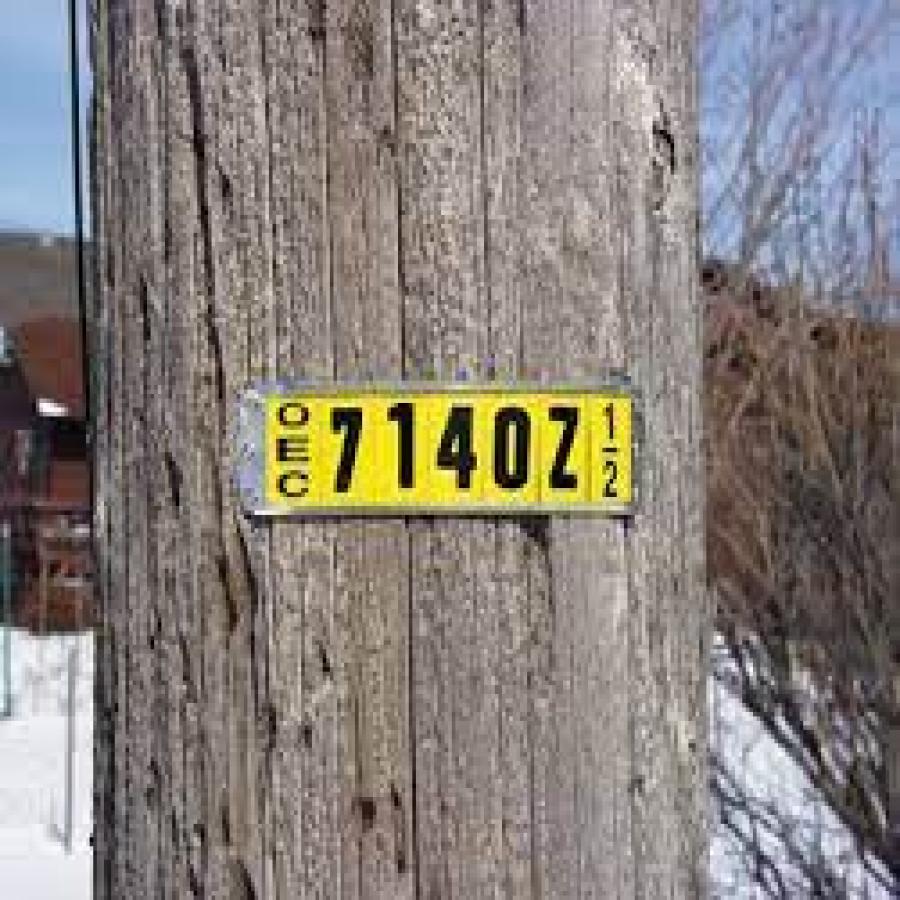

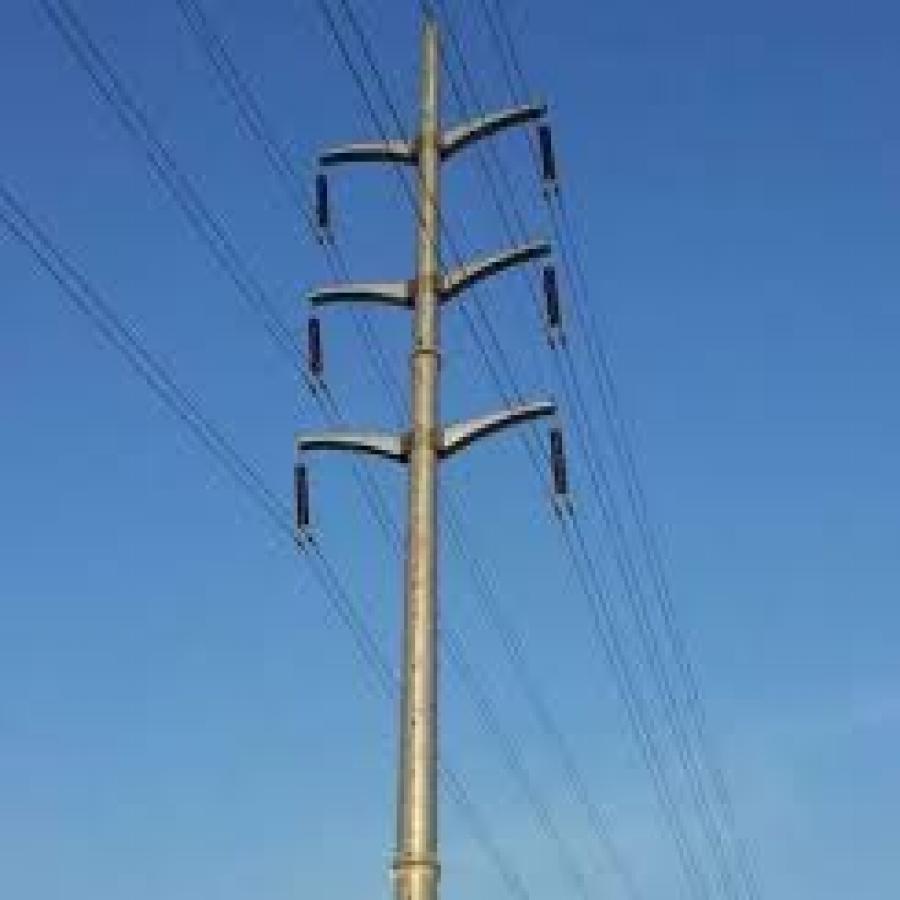

img1.jpg

ADDED

img10.jpg

ADDED

img11.jpg

ADDED

img12.jpg

ADDED

img13.jpg

ADDED

img14.jpg

ADDED

img15.jpg

ADDED

img16.jpg

ADDED

img2.jpg

ADDED

img3.jpg

ADDED

img4.jpg

ADDED

img5.jpg

ADDED

img6.jpg

ADDED

img8.jpg

ADDED

img9.jpg

ADDED

requirements.txt

ADDED

@@ -0,0 +1,11 @@

streamlit
torch
torchvision
matplotlib
transformers
pandas
numpy
seaborn
scikit-learn
opencv-python
pycocotools
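Comparing this list with the imports in `app.py`: `matplotlib`, `transformers`, `seaborn` and `scikit-learn` are listed but never imported by the app (they may be needed by `Training_jupyterFile.ipynb`, whose diff is not shown), while `PIL` is imported without a `Pillow` entry; it is installed anyway as a dependency of `torchvision`. A small sketch of that check using Python's `ast` module. The module-to-distribution mapping and the stdlib set are hand-written assumptions covering only this app, since import names and PyPI names can differ (`cv2` vs `opencv-python`, `PIL` vs `Pillow`); `imported_distributions` and `compare` are hypothetical names:

```python
import ast

# Import name -> PyPI distribution name (hand-written; covers this app only)
MODULE_TO_DIST = {"cv2": "opencv-python", "PIL": "Pillow", "sklearn": "scikit-learn"}
STDLIB = {"os", "tempfile"}  # stdlib modules app.py uses; not an exhaustive list


def imported_distributions(source: str) -> set:
    """Return the distributions a script imports, excluding known stdlib modules."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            names.add(node.module.split(".")[0])
    return {MODULE_TO_DIST.get(n, n) for n in names if n not in STDLIB}


def compare(source: str, requirements: str):
    """Return (imported but not listed, listed but not imported)."""
    listed = {line.strip() for line in requirements.splitlines() if line.strip()}
    used = imported_distributions(source)
    return used - listed, listed - used


APP_IMPORTS = """
import streamlit as st
import torch
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
from PIL import Image, ImageDraw
from pycocotools.coco import COCO
import cv2
import numpy as np
import pandas as pd
import os
from tempfile import NamedTemporaryFile
"""

REQUIREMENTS = """
streamlit
torch
torchvision
matplotlib
transformers
pandas
numpy
seaborn
scikit-learn
opencv-python
pycocotools
"""

missing, unused = compare(APP_IMPORTS, REQUIREMENTS)
print(sorted(missing))  # ['Pillow']
print(sorted(unused))   # ['matplotlib', 'scikit-learn', 'seaborn', 'transformers']
```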