Document RNN (#4)

- .github/workflows/ci-cd.yml +0 -1

- .gitignore +1 -0

- docs/source/img/architecture-rnn-ltr.png +0 -0

- docs/source/img/architecture-rnn-ltr.psd +0 -0

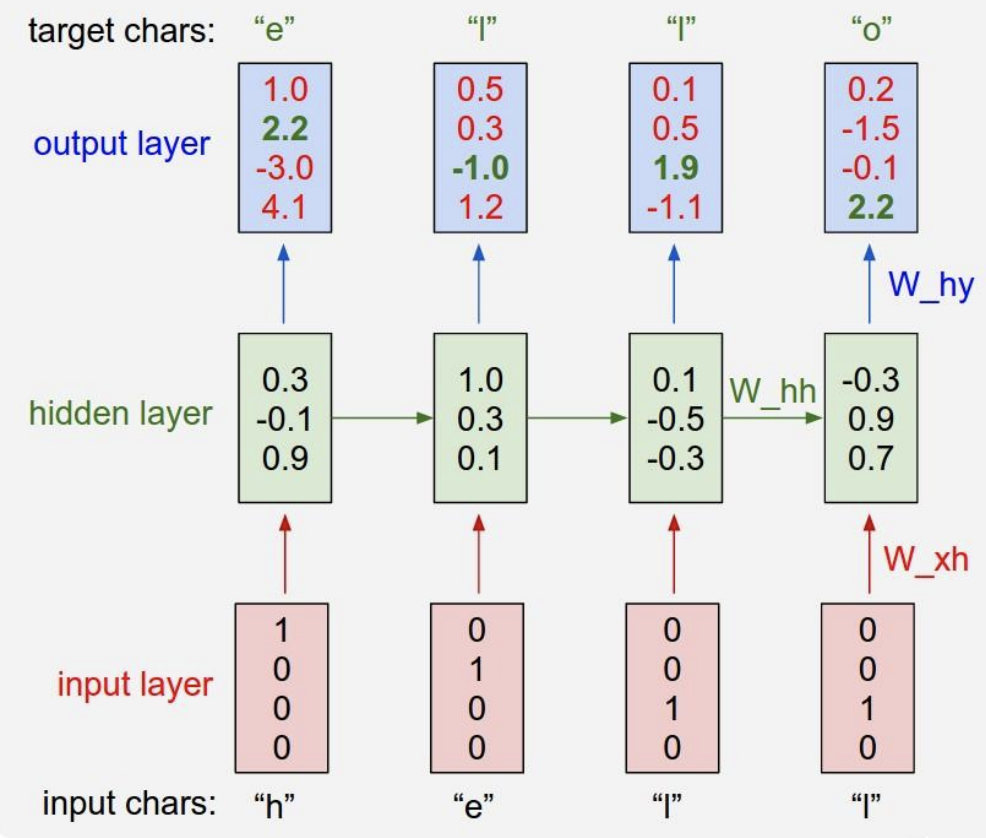

- docs/source/img/char-level-language-model.png +0 -0

- docs/source/img/description-block-rnn-ltr.png +0 -0

- docs/source/img/description-block-rnn-ltr.psd +0 -0

- docs/source/img/gradient-clipping.png +0 -0

- docs/source/img/rnn-4-black-boxes-connected.drawio +121 -0

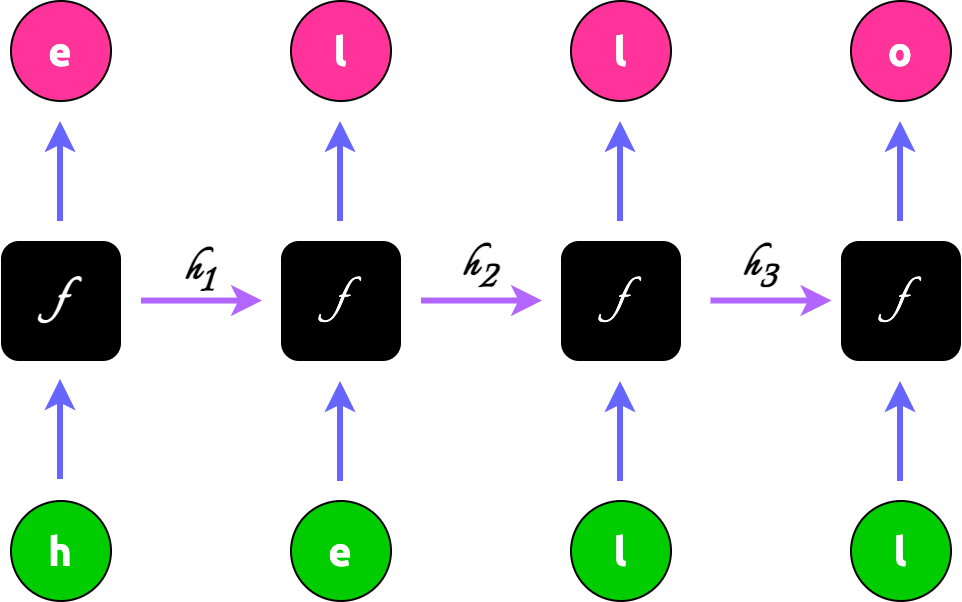

- docs/source/img/rnn-4-black-boxes-connected.png +0 -0

- docs/source/img/rnn-4-black-boxes.drawio +94 -0

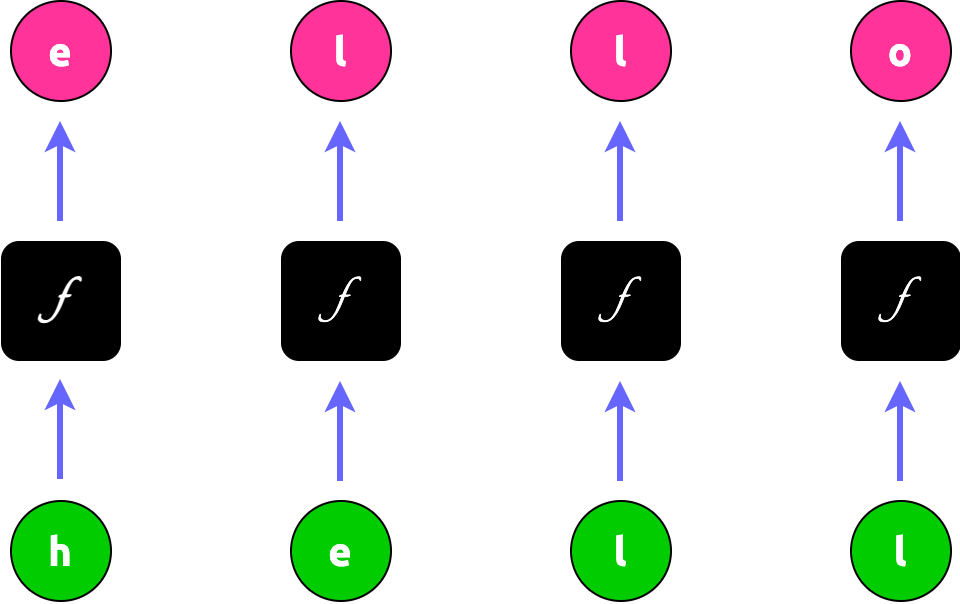

- docs/source/img/rnn-4-black-boxes.png +0 -0

- docs/source/img/rnn-multi-sequences.drawio +250 -0

- docs/source/img/rnn-multi-sequences.png +0 -0

- docs/source/img/rnn.drawio +149 -0

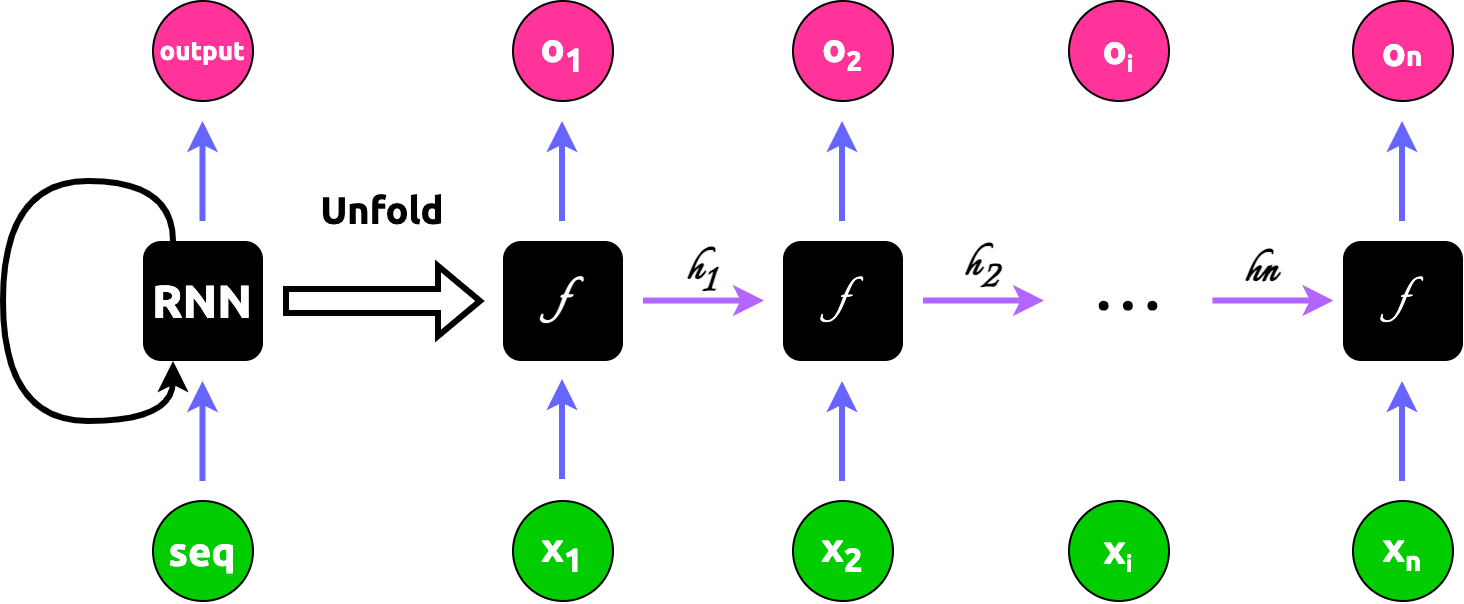

- docs/source/img/rnn.png +0 -0

- docs/source/lamassu.rst +1 -1

- docs/source/rnn/rnn.rst +612 -0

- docs/source/rnn/vanilla.rst +0 -176

- lamassu/rnn/example.py +50 -0

- lamassu/rnn/rnn.py +114 -0

- lamassu/rnn/vanilla.py +0 -25

- setup.py +1 -1

.github/workflows/ci-cd.yml (CHANGED)

```diff
@@ -31,7 +31,6 @@ jobs:
 with:
 python-version: "3.10"
 - name: Package up SDK
-if: github.ref == 'refs/heads/master'
 run: python setup.py sdist
 - name: Publish a Python distribution to PyPI
 if: github.ref == 'refs/heads/master'
```

.gitignore (CHANGED)

```diff
@@ -1,2 +1,3 @@
 .idea/
 .DS_Store
+__pycache__
```

docs/source/img/architecture-rnn-ltr.png (DELETED): Binary file (47.6 kB)

docs/source/img/architecture-rnn-ltr.psd (DELETED): Binary file (359 kB)

docs/source/img/char-level-language-model.png (ADDED)

docs/source/img/description-block-rnn-ltr.png (DELETED): Binary file (55.5 kB)

docs/source/img/description-block-rnn-ltr.psd (DELETED): Binary file (394 kB)

docs/source/img/gradient-clipping.png (DELETED): Binary file (4.71 kB)

docs/source/img/rnn-4-black-boxes-connected.drawio (ADDED)

@@ -0,0 +1,121 @@

```xml
<mxfile host="app.diagrams.net" modified="2024-03-19T01:01:04.926Z" agent="Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36" etag="OgWHmKqu6mVN4yDKoCwM" version="24.0.7" type="device">
  <diagram name="Page-1" id="gxr7cFC-hZQY0lpAcxoR">
    <mxGraphModel dx="816" dy="516" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="850" pageHeight="1100" math="0" shadow="0">
      <root>
        <mxCell id="0" />
        <mxCell id="1" parent="0" />
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-21" value="<font size="1" data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="" color="#ffffff"><b style="font-size: 27px;">f</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" vertex="1" parent="1">
          <mxGeometry x="40" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-22" value="<font color="#ffffff" face="Italianno" style="font-size: 27px;">f</font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" vertex="1" parent="1">
          <mxGeometry x="180" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-23" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" vertex="1" parent="1">
          <mxGeometry x="320" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-24" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" vertex="1" parent="1">
          <mxGeometry x="460" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-25" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="69.5" y="439" as="sourcePoint" />
            <mxPoint x="69.5" y="389" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-26" value="<font face="Ubuntu" style="font-size: 20px;"><b>h</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="45" y="450" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-27" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="69.5" y="310" as="sourcePoint" />
            <mxPoint x="69.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-28" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>e</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="45" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-29" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="185" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-30" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="325" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-31" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>o</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="465" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-32" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="209.5" y="310" as="sourcePoint" />
            <mxPoint x="209.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-33" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="349.5" y="310" as="sourcePoint" />
            <mxPoint x="349.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-34" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="489.5" y="310" as="sourcePoint" />
            <mxPoint x="489.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-35" value="<font face="Ubuntu" style="font-size: 20px;"><b>e</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="185" y="450" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-36" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="325" y="450" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-37" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="465" y="450" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-38" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="209.5" y="440" as="sourcePoint" />
            <mxPoint x="209.5" y="390" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-39" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="349.5" y="440" as="sourcePoint" />
            <mxPoint x="349.5" y="390" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-40" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="489.5" y="440" as="sourcePoint" />
            <mxPoint x="489.5" y="390" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-44" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="110" y="349.78" as="sourcePoint" />
            <mxPoint x="170.5" y="349.78" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-45" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="250" y="349.76" as="sourcePoint" />
            <mxPoint x="310.5" y="349.76" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-46" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="394.75" y="349.76" as="sourcePoint" />
            <mxPoint x="455.25" y="349.76" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-47" value="<font data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="font-size: 25px;"><span style="font-size: 25px;">h<sub style="font-size: 25px;">1</sub></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontSize=25;fontStyle=1" vertex="1" parent="1">
          <mxGeometry x="110" y="320" width="60" height="30" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-48" value="<font style="font-size: 25px;"><span style="font-size: 25px;"><span style="font-size: 25px;">h</span><span style="font-size: 25px;"><sub style="font-size: 25px;">2</sub></span></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontFamily=Italianno;fontSource=https%3A%2F%2Ffonts.googleapis.com%2Fcss%3Ffamily%3DItalianno;fontSize=25;fontStyle=1" vertex="1" parent="1">
          <mxGeometry x="250" y="320" width="60" height="30" as="geometry" />
        </mxCell>
        <mxCell id="o5WZRm4PuDRFcwwRBSCM-49" value="<font style="font-size: 25px;"><span style="font-size: 25px;"><span style="font-size: 25px;">h</span><span style="font-size: 25px;"><sub style="font-size: 25px;">3</sub></span></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontFamily=Italianno;fontSource=https%3A%2F%2Ffonts.googleapis.com%2Fcss%3Ffamily%3DItalianno;fontSize=25;fontStyle=1" vertex="1" parent="1">
          <mxGeometry x="390" y="320" width="60" height="30" as="geometry" />
        </mxCell>
      </root>
    </mxGraphModel>
  </diagram>
</mxfile>
```

docs/source/img/rnn-4-black-boxes-connected.png (ADDED)
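Reading the `rnn-4-black-boxes-connected` diagram: one cell `f` (the black boxes) is applied at four time steps, the green inputs h, e, l, l enter from below, the pink outputs e, l, l, o leave from the top, and the purple arrows pass the hidden states h1, h2, h3 from each step to the next. The following is a minimal Python sketch of that unrolled computation. It assumes a vanilla tanh (Elman-style) cell, one-hot inputs, a hidden size of 3 and random, untrained weights; the names `W_xh`, `W_hh`, `W_hy`, `f` and `unroll` are hypothetical and are not taken from `lamassu/rnn/rnn.py`, whose contents are not part of this view.

```python
import math
import random

VOCAB = ["h", "e", "l", "o"]


def one_hot(ch):
    """Encode a character as a one-hot vector over VOCAB."""
    return [1.0 if c == ch else 0.0 for c in VOCAB]


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def f(x, h_prev, W_xh, W_hh, W_hy):
    """One step of the shared cell (assumed vanilla tanh RNN, not lamassu's code)."""
    pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h_prev))]
    h = [math.tanh(v) for v in pre]
    y = matvec(W_hy, h)  # unnormalised scores, one per VOCAB entry
    return h, y


def unroll(text, W_xh, W_hh, W_hy, hidden_size):
    """Apply the same f at every step, carrying the hidden state forward."""
    h = [0.0] * hidden_size  # initial hidden state
    hidden_states, outputs = [], []
    for ch in text:
        h, y = f(one_hot(ch), h, W_xh, W_hh, W_hy)
        hidden_states.append(h)  # first three entries play the role of h1, h2, h3
        outputs.append(y)
    return hidden_states, outputs


random.seed(0)
HIDDEN = 3
W_xh = random_matrix(HIDDEN, len(VOCAB))
W_hh = random_matrix(HIDDEN, HIDDEN)
W_hy = random_matrix(len(VOCAB), HIDDEN)

inputs, targets = "hell", "ello"
hs, ys = unroll(inputs, W_xh, W_hh, W_hy, HIDDEN)
for ch, target, y in zip(inputs, targets, ys):
    predicted = VOCAB[y.index(max(y))]  # untrained, so predictions are arbitrary
    print(f"input {ch!r} -> predicted {predicted!r} (target {target!r})")
```

Because the hidden state entering the fourth step differs from the one entering the third, the two `l` inputs can yield different outputs; with trained weights this is what would let the model predict `l` after the first `l` and `o` after the second.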

docs/source/img/rnn-4-black-boxes.drawio (ADDED)

@@ -0,0 +1,94 @@

```xml
<mxfile host="app.diagrams.net" modified="2024-03-19T00:55:52.180Z" agent="Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36" etag="0mAcZEyuQtVV9Bg2w-Tf" version="24.0.7" type="device">
  <diagram name="Page-1" id="DUD_6-T85kScICrpKMMz">
    <mxGraphModel dx="1536" dy="972" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="850" pageHeight="1100" math="0" shadow="0">
      <root>
        <mxCell id="0" />
        <mxCell id="1" parent="0" />
        <mxCell id="LGyqkTtVOXbUGTZdzTyq-1" value="<font size="1" data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="" color="#ffffff"><b style="font-size: 27px;">f</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="20" y="300" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="LGyqkTtVOXbUGTZdzTyq-2" value="<font color="#ffffff" face="Italianno" style="font-size: 27px;">f</font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="160" y="300" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="LGyqkTtVOXbUGTZdzTyq-4" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="300" y="300" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="LGyqkTtVOXbUGTZdzTyq-5" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="440" y="300" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="LGyqkTtVOXbUGTZdzTyq-11" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="49.5" y="419" as="sourcePoint" />
            <mxPoint x="49.5" y="369" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-1" value="<font face="Ubuntu" style="font-size: 20px;"><b>h</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="25" y="430" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-2" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="49.5" y="290" as="sourcePoint" />
            <mxPoint x="49.5" y="240" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-3" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>e</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="25" y="180" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-4" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="165" y="180" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-5" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="305" y="180" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-6" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>o</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="445" y="180" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-8" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="189.5" y="290" as="sourcePoint" />
            <mxPoint x="189.5" y="240" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-9" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="329.5" y="290" as="sourcePoint" />
            <mxPoint x="329.5" y="240" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-10" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="469.5" y="290" as="sourcePoint" />
            <mxPoint x="469.5" y="240" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-11" value="<font face="Ubuntu" style="font-size: 20px;"><b>e</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="165" y="430" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-12" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="305" y="430" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-13" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" vertex="1" parent="1">
          <mxGeometry x="445" y="430" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-14" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="189.5" y="420" as="sourcePoint" />
            <mxPoint x="189.5" y="370" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-15" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="329.5" y="420" as="sourcePoint" />
            <mxPoint x="329.5" y="370" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="hK792VXiPIr8ubialXFB-16" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" edge="1" parent="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="469.5" y="420" as="sourcePoint" />
            <mxPoint x="469.5" y="370" as="targetPoint" />
          </mxGeometry>
        </mxCell>
      </root>
    </mxGraphModel>
  </diagram>
</mxfile>
```

docs/source/img/rnn-4-black-boxes.png (ADDED)
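The `rnn-4-black-boxes` diagram draws the same four `f` boxes with inputs h, e, l, l and outputs e, l, l, o, but without the horizontal hidden-state connections. If each box sees only the character directly below it, the "hell" → "ello" task cannot be solved, as this sketch illustrates. It assumes a hypothetical stateless linear map `f` with random weights; any deterministic function of the current character alone would behave the same way on the repeated `l`.

```python
import random

VOCAB = ["h", "e", "l", "o"]


def one_hot(ch):
    """Encode a character as a one-hot vector over VOCAB."""
    return [1.0 if c == ch else 0.0 for c in VOCAB]


def f(x, W):
    """Hypothetical stateless cell: the scores depend on the current character only."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


random.seed(0)
W = [[random.uniform(-1.0, 1.0) for _ in VOCAB] for _ in VOCAB]

inputs, targets = "hell", "ello"
outputs = [f(one_hot(ch), W) for ch in inputs]

# Steps 3 and 4 both receive "l", so their outputs are identical,
# yet the targets at those steps are "l" and "o".
print(outputs[2] == outputs[3], targets[2], targets[3])  # True l o
```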

docs/source/img/rnn-multi-sequences.drawio (ADDED)

@@ -0,0 +1,250 @@

```xml
<mxfile host="app.diagrams.net" modified="2024-03-19T01:28:19.045Z" agent="Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36" etag="1-yK62UkPGlKoTsEWqch" version="24.0.7" type="device">
  <diagram name="Page-1" id="6HRoGfWBaaDKhnXAU6vd">
    <mxGraphModel dx="2156" dy="1926" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="850" pageHeight="1100" math="0" shadow="0">
      <root>
        <mxCell id="0" />
        <mxCell id="1" parent="0" />
        <mxCell id="vckTE8xcX2gjwNGocpAb-1" value="<font size="1" data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="" color="#ffffff"><b style="font-size: 27px;">f</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="40" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-2" value="<font color="#ffffff" face="Italianno" style="font-size: 27px;">f</font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="180" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-3" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="320" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-4" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">
          <mxGeometry x="460" y="320" width="60" height="60" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-5" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="69.5" y="439" as="sourcePoint" />
            <mxPoint x="69.5" y="389" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-6" value="<font face="Ubuntu" style="font-size: 20px;"><b>h</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">
          <mxGeometry x="45" y="450" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-7" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="69.5" y="310" as="sourcePoint" />
            <mxPoint x="69.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-8" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>e</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">
          <mxGeometry x="45" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-9" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">
          <mxGeometry x="185" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-10" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>l</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">
          <mxGeometry x="325" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-11" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>o</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">
          <mxGeometry x="465" y="200" width="50" height="50" as="geometry" />
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-12" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="209.5" y="310" as="sourcePoint" />
            <mxPoint x="209.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-13" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
          <mxGeometry relative="1" as="geometry">
            <mxPoint x="349.5" y="310" as="sourcePoint" />
            <mxPoint x="349.5" y="260" as="targetPoint" />
          </mxGeometry>
        </mxCell>
        <mxCell id="vckTE8xcX2gjwNGocpAb-14" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">
```

|

| 59 |

+

<mxGeometry relative="1" as="geometry">

|

| 60 |

+

<mxPoint x="489.5" y="310" as="sourcePoint" />

|

| 61 |

+

<mxPoint x="489.5" y="260" as="targetPoint" />

|

| 62 |

+

</mxGeometry>

|

| 63 |

+

</mxCell>

|

| 64 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-15" value="<font face="Ubuntu" style="font-size: 20px;"><b>e</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 65 |

+

<mxGeometry x="185" y="450" width="50" height="50" as="geometry" />

|

| 66 |

+

</mxCell>

|

| 67 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-16" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 68 |

+

<mxGeometry x="325" y="450" width="50" height="50" as="geometry" />

|

| 69 |

+

</mxCell>

|

| 70 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-17" value="<font face="Ubuntu" style="font-size: 20px;"><b>l</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 71 |

+

<mxGeometry x="465" y="450" width="50" height="50" as="geometry" />

|

| 72 |

+

</mxCell>

|

| 73 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-18" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 74 |

+

<mxGeometry relative="1" as="geometry">

|

| 75 |

+

<mxPoint x="209.5" y="440" as="sourcePoint" />

|

| 76 |

+

<mxPoint x="209.5" y="390" as="targetPoint" />

|

| 77 |

+

</mxGeometry>

|

| 78 |

+

</mxCell>

|

| 79 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-19" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 80 |

+

<mxGeometry relative="1" as="geometry">

|

| 81 |

+

<mxPoint x="349.5" y="440" as="sourcePoint" />

|

| 82 |

+

<mxPoint x="349.5" y="390" as="targetPoint" />

|

| 83 |

+

</mxGeometry>

|

| 84 |

+

</mxCell>

|

| 85 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-20" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 86 |

+

<mxGeometry relative="1" as="geometry">

|

| 87 |

+

<mxPoint x="489.5" y="440" as="sourcePoint" />

|

| 88 |

+

<mxPoint x="489.5" y="390" as="targetPoint" />

|

| 89 |

+

</mxGeometry>

|

| 90 |

+

</mxCell>

|

| 91 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-21" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 92 |

+

<mxGeometry relative="1" as="geometry">

|

| 93 |

+

<mxPoint x="110" y="349.78" as="sourcePoint" />

|

| 94 |

+

<mxPoint x="170.5" y="349.78" as="targetPoint" />

|

| 95 |

+

</mxGeometry>

|

| 96 |

+

</mxCell>

|

| 97 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-22" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 98 |

+

<mxGeometry relative="1" as="geometry">

|

| 99 |

+

<mxPoint x="250" y="349.76" as="sourcePoint" />

|

| 100 |

+

<mxPoint x="310.5" y="349.76" as="targetPoint" />

|

| 101 |

+

</mxGeometry>

|

| 102 |

+

</mxCell>

|

| 103 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-23" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 104 |

+

<mxGeometry relative="1" as="geometry">

|

| 105 |

+

<mxPoint x="394.75" y="349.76" as="sourcePoint" />

|

| 106 |

+

<mxPoint x="455.25" y="349.76" as="targetPoint" />

|

| 107 |

+

</mxGeometry>

|

| 108 |

+

</mxCell>

|

| 109 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-24" value="<font data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="font-size: 25px;"><span style="font-size: 25px;">h<sub style="font-size: 25px;">1</sub></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontSize=25;fontStyle=1" parent="1" vertex="1">

|

| 110 |

+

<mxGeometry x="110" y="320" width="60" height="30" as="geometry" />

|

| 111 |

+

</mxCell>

|

| 112 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-25" value="<font style="font-size: 25px;"><span style="font-size: 25px;"><span style="font-size: 25px;">h</span><span style="font-size: 25px;"><sub style="font-size: 25px;">2</sub></span></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontFamily=Italianno;fontSource=https%3A%2F%2Ffonts.googleapis.com%2Fcss%3Ffamily%3DItalianno;fontSize=25;fontStyle=1" parent="1" vertex="1">

|

| 113 |

+

<mxGeometry x="250" y="320" width="60" height="30" as="geometry" />

|

| 114 |

+

</mxCell>

|

| 115 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-26" value="<font style="font-size: 25px;"><span style="font-size: 25px;"><span style="font-size: 25px;">h</span><span style="font-size: 25px;"><sub style="font-size: 25px;">3</sub></span></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontFamily=Italianno;fontSource=https%3A%2F%2Ffonts.googleapis.com%2Fcss%3Ffamily%3DItalianno;fontSize=25;fontStyle=1" parent="1" vertex="1">

|

| 116 |

+

<mxGeometry x="390" y="320" width="60" height="30" as="geometry" />

|

| 117 |

+

</mxCell>

|

| 118 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-27" value="<font face="Ubuntu" size="1" style="" color="#ffffff"><b style="font-size: 23px;">RNN</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 119 |

+

<mxGeometry x="-140" y="320" width="60" height="60" as="geometry" />

|

| 120 |

+

</mxCell>

|

| 121 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-28" value="<font face="Ubuntu" style="font-size: 20px;"><b>hell</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 122 |

+

<mxGeometry x="-135" y="450" width="50" height="50" as="geometry" />

|

| 123 |

+

</mxCell>

|

| 124 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-29" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>ello</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 125 |

+

<mxGeometry x="-135" y="200" width="50" height="50" as="geometry" />

|

| 126 |

+

</mxCell>

|

| 127 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-30" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 128 |

+

<mxGeometry relative="1" as="geometry">

|

| 129 |

+

<mxPoint x="-110.25999999999999" y="310" as="sourcePoint" />

|

| 130 |

+

<mxPoint x="-110.25999999999999" y="260" as="targetPoint" />

|

| 131 |

+

</mxGeometry>

|

| 132 |

+

</mxCell>

|

| 133 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-31" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 134 |

+

<mxGeometry relative="1" as="geometry">

|

| 135 |

+

<mxPoint x="-110.25999999999999" y="440" as="sourcePoint" />

|

| 136 |

+

<mxPoint x="-110.25999999999999" y="390" as="targetPoint" />

|

| 137 |

+

</mxGeometry>

|

| 138 |

+

</mxCell>

|

| 139 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-33" value="" style="shape=flexArrow;endArrow=classic;html=1;rounded=0;strokeWidth=3;" parent="1" edge="1">

|

| 140 |

+

<mxGeometry width="50" height="50" relative="1" as="geometry">

|

| 141 |

+

<mxPoint x="-70" y="350" as="sourcePoint" />

|

| 142 |

+

<mxPoint x="30" y="350" as="targetPoint" />

|

| 143 |

+

</mxGeometry>

|

| 144 |

+

</mxCell>

|

| 145 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-34" value="<b><font style="font-size: 19px;" face="Ubuntu">Unfold</font></b>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;" parent="1" vertex="1">

|

| 146 |

+

<mxGeometry x="-50" y="290" width="60" height="30" as="geometry" />

|

| 147 |

+

</mxCell>

|

| 148 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-35" value="<font size="1" data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="" color="#ffffff"><b style="font-size: 27px;">f</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 149 |

+

<mxGeometry x="40" y="-50" width="60" height="60" as="geometry" />

|

| 150 |

+

</mxCell>

|

| 151 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-36" value="<font color="#ffffff" face="Italianno" style="font-size: 27px;">f</font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 152 |

+

<mxGeometry x="180" y="-50" width="60" height="60" as="geometry" />

|

| 153 |

+

</mxCell>

|

| 154 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-37" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 155 |

+

<mxGeometry relative="1" as="geometry">

|

| 156 |

+

<mxPoint x="69.5" y="69" as="sourcePoint" />

|

| 157 |

+

<mxPoint x="69.5" y="19" as="targetPoint" />

|

| 158 |

+

</mxGeometry>

|

| 159 |

+

</mxCell>

|

| 160 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-38" value="<font face="Ubuntu" style="font-size: 20px;"><b>c</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 161 |

+

<mxGeometry x="45" y="80" width="50" height="50" as="geometry" />

|

| 162 |

+

</mxCell>

|

| 163 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-39" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 164 |

+

<mxGeometry relative="1" as="geometry">

|

| 165 |

+

<mxPoint x="69.5" y="-60" as="sourcePoint" />

|

| 166 |

+

<mxPoint x="69.5" y="-110" as="targetPoint" />

|

| 167 |

+

</mxGeometry>

|

| 168 |

+

</mxCell>

|

| 169 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-40" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>a</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 170 |

+

<mxGeometry x="45" y="-170" width="50" height="50" as="geometry" />

|

| 171 |

+

</mxCell>

|

| 172 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-41" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>t</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 173 |

+

<mxGeometry x="185" y="-170" width="50" height="50" as="geometry" />

|

| 174 |

+

</mxCell>

|

| 175 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-42" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 176 |

+

<mxGeometry relative="1" as="geometry">

|

| 177 |

+

<mxPoint x="209.5" y="-60" as="sourcePoint" />

|

| 178 |

+

<mxPoint x="209.5" y="-110" as="targetPoint" />

|

| 179 |

+

</mxGeometry>

|

| 180 |

+

</mxCell>

|

| 181 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-43" value="<font face="Ubuntu" style="font-size: 20px;"><b>a</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 182 |

+

<mxGeometry x="185" y="80" width="50" height="50" as="geometry" />

|

| 183 |

+

</mxCell>

|

| 184 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-44" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 185 |

+

<mxGeometry relative="1" as="geometry">

|

| 186 |

+

<mxPoint x="209.5" y="70" as="sourcePoint" />

|

| 187 |

+

<mxPoint x="209.5" y="20" as="targetPoint" />

|

| 188 |

+

</mxGeometry>

|

| 189 |

+

</mxCell>

|

| 190 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-45" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#B266FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 191 |

+

<mxGeometry relative="1" as="geometry">

|

| 192 |

+

<mxPoint x="110" y="-20.220000000000027" as="sourcePoint" />

|

| 193 |

+

<mxPoint x="170.5" y="-20.220000000000027" as="targetPoint" />

|

| 194 |

+

</mxGeometry>

|

| 195 |

+

</mxCell>

|

| 196 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-46" value="<font data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="font-size: 25px;"><span style="font-size: 25px;">h<sub style="font-size: 25px;">1</sub></span></font>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;fontSize=25;fontStyle=1" parent="1" vertex="1">

|

| 197 |

+

<mxGeometry x="110" y="-50" width="60" height="30" as="geometry" />

|

| 198 |

+

</mxCell>

|

| 199 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-47" value="<font face="Ubuntu" size="1" style="" color="#ffffff"><b style="font-size: 23px;">RNN</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 200 |

+

<mxGeometry x="-140" y="-50" width="60" height="60" as="geometry" />

|

| 201 |

+

</mxCell>

|

| 202 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-48" value="<font face="Ubuntu" style="font-size: 20px;"><b>ca</b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 203 |

+

<mxGeometry x="-135" y="80" width="50" height="50" as="geometry" />

|

| 204 |

+

</mxCell>

|

| 205 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-49" value="<font face="Ubuntu"><span style="font-size: 20px;"><b>at</b></span></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#FF3399;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 206 |

+

<mxGeometry x="-135" y="-170" width="50" height="50" as="geometry" />

|

| 207 |

+

</mxCell>

|

| 208 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-50" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 209 |

+

<mxGeometry relative="1" as="geometry">

|

| 210 |

+

<mxPoint x="-110.25999999999999" y="-60" as="sourcePoint" />

|

| 211 |

+

<mxPoint x="-110.25999999999999" y="-110" as="targetPoint" />

|

| 212 |

+

</mxGeometry>

|

| 213 |

+

</mxCell>

|

| 214 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-51" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 215 |

+

<mxGeometry relative="1" as="geometry">

|

| 216 |

+

<mxPoint x="-110.25999999999999" y="70" as="sourcePoint" />

|

| 217 |

+

<mxPoint x="-110.25999999999999" y="20" as="targetPoint" />

|

| 218 |

+

</mxGeometry>

|

| 219 |

+

</mxCell>

|

| 220 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-53" value="" style="shape=flexArrow;endArrow=classic;html=1;rounded=0;strokeWidth=3;" parent="1" edge="1">

|

| 221 |

+

<mxGeometry width="50" height="50" relative="1" as="geometry">

|

| 222 |

+

<mxPoint x="-70" y="-20" as="sourcePoint" />

|

| 223 |

+

<mxPoint x="30" y="-20" as="targetPoint" />

|

| 224 |

+

</mxGeometry>

|

| 225 |

+

</mxCell>

|

| 226 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-54" value="<b><font style="font-size: 19px;" face="Ubuntu">Unfold</font></b>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;" parent="1" vertex="1">

|

| 227 |

+

<mxGeometry x="-50" y="-80" width="60" height="30" as="geometry" />

|

| 228 |

+

</mxCell>

|

| 229 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-55" value="" style="endArrow=none;dashed=1;html=1;dashPattern=1 3;strokeWidth=3;rounded=0;" parent="1" edge="1">

|

| 230 |

+

<mxGeometry width="50" height="50" relative="1" as="geometry">

|

| 231 |

+

<mxPoint x="-225" y="104.82000000000001" as="sourcePoint" />

|

| 232 |

+

<mxPoint x="-150" y="105.18" as="targetPoint" />

|

| 233 |

+

</mxGeometry>

|

| 234 |

+

</mxCell>

|

| 235 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-56" value="" style="endArrow=none;dashed=1;html=1;dashPattern=1 3;strokeWidth=3;rounded=0;" parent="1" edge="1">

|

| 236 |

+

<mxGeometry width="50" height="50" relative="1" as="geometry">

|

| 237 |

+

<mxPoint x="-240" y="474.63" as="sourcePoint" />

|

| 238 |

+

<mxPoint x="-165" y="474.99" as="targetPoint" />

|

| 239 |

+

</mxGeometry>

|

| 240 |

+

</mxCell>

|

| 241 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-57" value="<b><font style="font-size: 19px;" face="Ubuntu">sequence 1</font></b>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;" parent="1" vertex="1">

|

| 242 |

+

<mxGeometry x="-345" y="90" width="110" height="30" as="geometry" />

|

| 243 |

+

</mxCell>

|

| 244 |

+

<mxCell id="vckTE8xcX2gjwNGocpAb-58" value="<b><font style="font-size: 19px;" face="Ubuntu">sequence 2</font></b>" style="text;html=1;align=center;verticalAlign=middle;whiteSpace=wrap;rounded=0;" parent="1" vertex="1">

|

| 245 |

+

<mxGeometry x="-360" y="460" width="110" height="30" as="geometry" />

|

| 246 |

+

</mxCell>

|

| 247 |

+

</root>

|

| 248 |

+

</mxGraphModel>

|

| 249 |

+

</diagram>

|

| 250 |

+

</mxfile>

|

docs/source/img/rnn-multi-sequences.png

ADDED

|

docs/source/img/rnn.drawio

ADDED

|

@@ -0,0 +1,149 @@

|


| 1 |

+

<mxfile host="app.diagrams.net" modified="2024-03-19T01:41:00.069Z" agent="Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36" etag="gBXA4TJMJ-xFoxQL8in4" version="24.0.7" type="device">

|

| 2 |

+

<diagram name="Page-1" id="DUD_6-T85kScICrpKMMz">

|

| 3 |

+

<mxGraphModel dx="1783" dy="590" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="850" pageHeight="1100" math="0" shadow="0">

|

| 4 |

+

<root>

|

| 5 |

+

<mxCell id="0" />

|

| 6 |

+

<mxCell id="1" parent="0" />

|

| 7 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-1" value="<font size="1" data-font-src="https://fonts.googleapis.com/css?family=Italianno" face="Italianno" style="" color="#ffffff"><b style="font-size: 27px;">f</b></font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 8 |

+

<mxGeometry x="40" y="320" width="60" height="60" as="geometry" />

|

| 9 |

+

</mxCell>

|

| 10 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-2" value="<font color="#ffffff" face="Italianno" style="font-size: 27px;">f</font>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 11 |

+

<mxGeometry x="180" y="320" width="60" height="60" as="geometry" />

|

| 12 |

+

</mxCell>

|

| 13 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-4" value="<span style="color: rgb(255, 255, 255); font-family: Italianno; font-size: 27px;">f</span>" style="rounded=1;whiteSpace=wrap;html=1;fillColor=#000000;strokeColor=none;fontColor=#FF6666;" parent="1" vertex="1">

|

| 14 |

+

<mxGeometry x="460" y="320" width="60" height="60" as="geometry" />

|

| 15 |

+

</mxCell>

|

| 16 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-5" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 17 |

+

<mxGeometry relative="1" as="geometry">

|

| 18 |

+

<mxPoint x="69.5" y="439" as="sourcePoint" />

|

| 19 |

+

<mxPoint x="69.5" y="389" as="targetPoint" />

|

| 20 |

+

</mxGeometry>

|

| 21 |

+

</mxCell>

|

| 22 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-6" value="<font face="Ubuntu" style="font-size: 20px;"><b>x<sub>1</sub></b></font>" style="ellipse;whiteSpace=wrap;html=1;aspect=fixed;fillColor=#00CC00;fontColor=#FFFFFF;" parent="1" vertex="1">

|

| 23 |

+

<mxGeometry x="45" y="450" width="50" height="50" as="geometry" />

|

| 24 |

+

</mxCell>

|

| 25 |

+

<mxCell id="Kn0003oJxsBQeWTrvDDb-7" style="edgeStyle=orthogonalEdgeStyle;rounded=0;orthogonalLoop=1;jettySize=auto;html=1;exitX=0.5;exitY=0;exitDx=0;exitDy=0;strokeWidth=3;strokeColor=#6666FF;fontColor=#FF6666;" parent="1" edge="1">

|

| 26 |

+

<mxGeometry relative="1" as="geometry">

|

| 27 |

+

<mxPoint x="69.5" y="310" as="sourcePoint" />

|

| 28 |

+

<mxPoint x="69.5" y="260" as="targetPoint" />

|

| 29 |

+

</mxGeometry>

|

| 30 |

+

</mxCell>

|

| 31 |

+