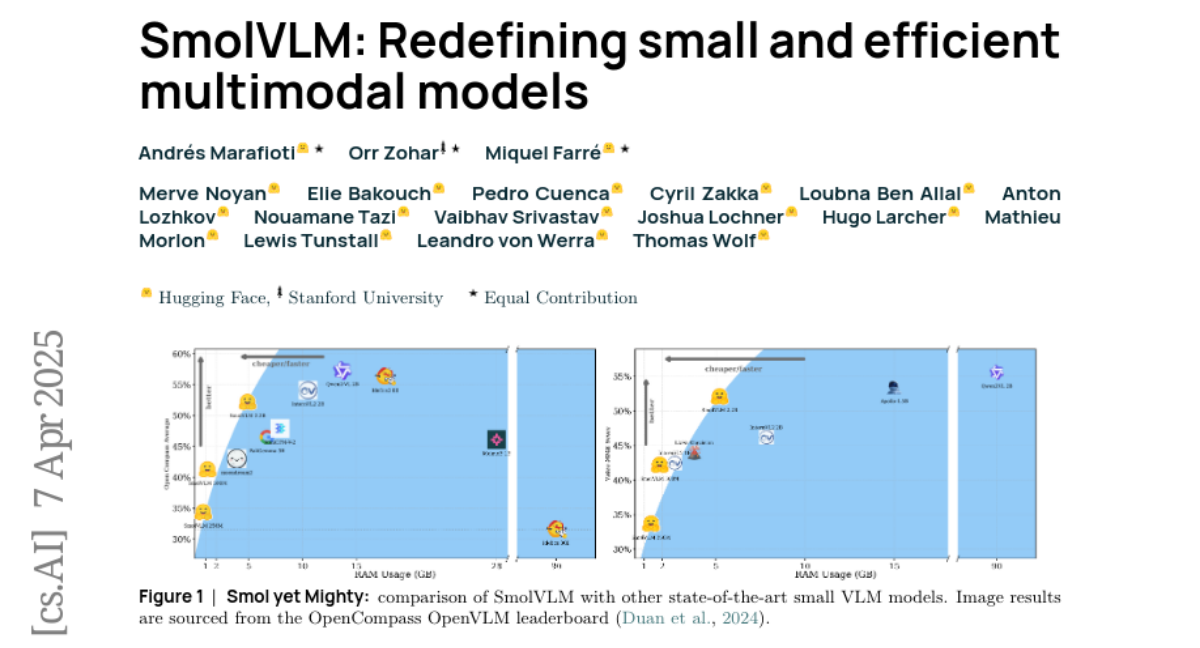

SmolVLM is now available on PocketPal — you can run it offline on your smartphone to interpret the world around you. 🌍📱

Also check out this real-time camera demo by @ngxson, powered by llama.cpp:

https://github.com/ngxson/smolvlm-realtime-webcam

https://x.com/pocketpal_ai
