init lucid

hheat committed · Commit a714244 · 1 parent: 0fd42d6

Files changed:

- .gitattributes +1 -0
- README.md +154 -0
- resources/Training_Instructions.md +64 -0
- resources/ours_qualitative.jpeg +0 -0
- resources/output.gif +3 -0
- resources/output_16.gif +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text


| 36 |

+

resources/*.gif filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,154 @@


| 1 |

+

# LucidFusion: Generating 3D Gaussians with Arbitrary Unposed Images

|

| 2 |

+

|

| 3 |

+

[Hao He](https://heye0507.github.io/)$^{\color{red}{\*}}$, [Yixun Liang](https://yixunliang.github.io/)$^{\color{red}{\*}}$, [Luozhou Wang](https://wileewang.github.io/), [Yuanhao Cai](https://github.com/caiyuanhao1998), [Xinli Xu](https://scholar.google.com/citations?user=lrgPuBUAAAAJ&hl=en&inst=1381320739207392350), [Hao-Xiang Guo](), [Xiang Wen](), [Yingcong Chen](https://www.yingcong.me)$^{\**}$

|

| 4 |

+

|

| 5 |

+

$\color{red}{\*}$: Equal contribution.

|

| 6 |

+

\**: Corresponding author.

|

| 7 |

+

|

| 8 |

+

Paper PDF (arXiv): coming soon | Project page: coming soon | Gradio demo: coming soon

|

| 9 |

+

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

<div align="center">

|

| 13 |

+

<img src="resources/output_16.gif" width="95%"/>

|

| 14 |

+

<br>

|

| 15 |

+

<p><i>Note: these animations are compressed for faster previewing.</i></p>

|

| 16 |

+

</div>

|

| 17 |

+

|

| 18 |

+

<div align=center>

|

| 19 |

+

<img src="resources/ours_qualitative.jpeg" width="95%"/>

|

| 20 |

+

|

| 21 |

+

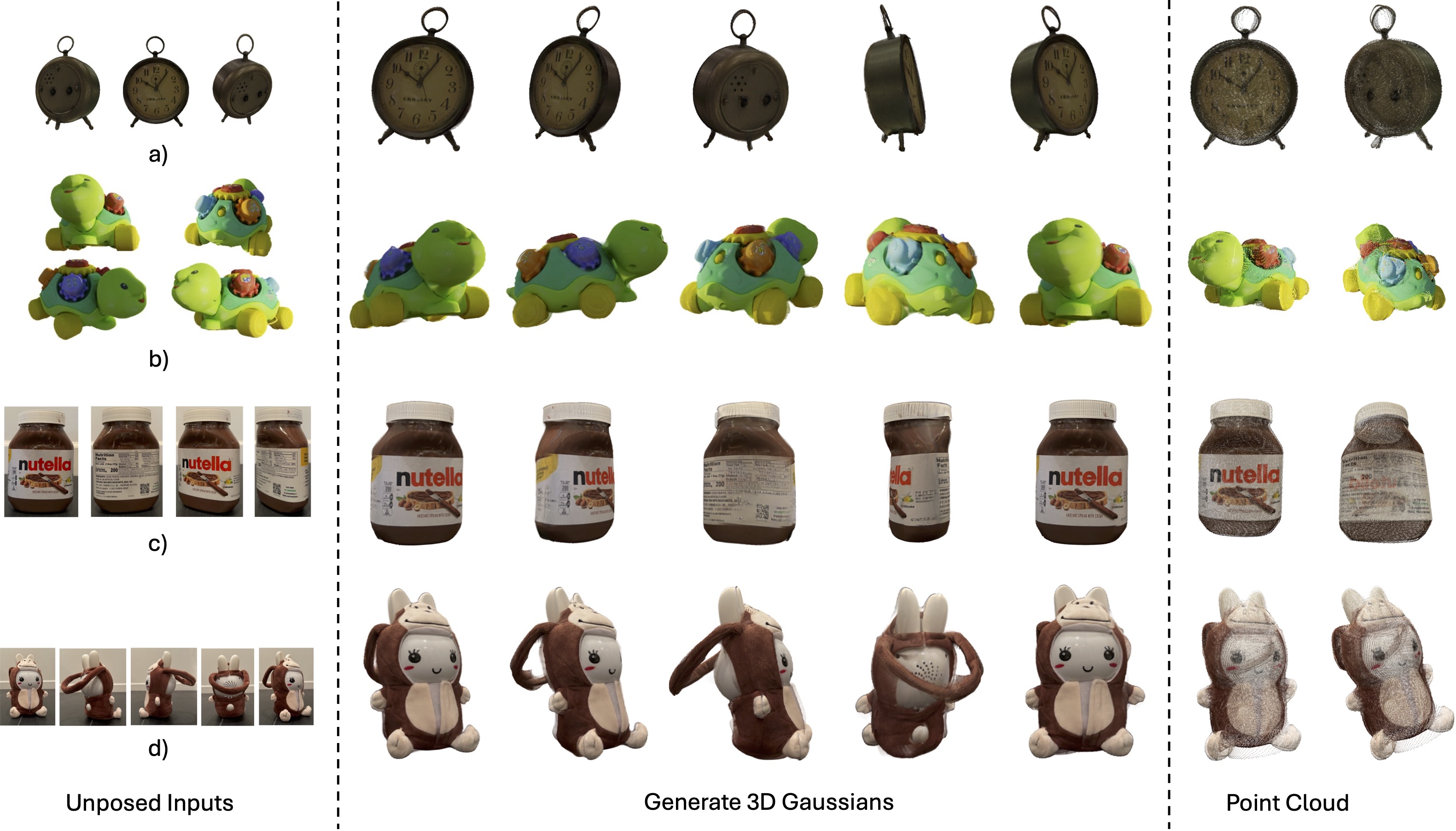

Examples of cross-dataset content creations with our framework, the *LucidFusion*, around **~13FPS** on A800.

|

| 22 |

+

|

| 23 |

+

</div>

|

| 24 |

+

|

| 25 |

+

## 🎏 Abstract

|

| 26 |

+

We present *LucidFusion*, a flexible end-to-end feed-forward framework that generates high-resolution 3D Gaussians from an arbitrary number of sparse, unposed multi-view images.

|

| 27 |

+

|

| 28 |

+

<details><summary>CLICK for the full abstract</summary>

|

| 29 |

+

|

| 30 |

+

> Recent large reconstruction models have made notable progress in generating high-quality 3D objects from single images. However, these methods often struggle with controllability, as they lack information from multiple views, leading to incomplete or inconsistent 3D reconstructions. To address this limitation, we introduce LucidFusion, a flexible end-to-end feed-forward framework that leverages the Relative Coordinate Map (RCM). Unlike traditional methods that link images to the 3D world through pose, LucidFusion utilizes RCM to align geometric features coherently across different views, making it highly adaptable for 3D generation from arbitrary, unposed images. Furthermore, LucidFusion seamlessly integrates with the original single-image-to-3D pipeline, producing detailed 3D Gaussians at a resolution of $512 \times 512$, making it well-suited for a wide range of applications.

|

| 31 |

+

|

| 32 |

+

</details>

|

| 33 |

+

|

| 34 |

+

## 🔧 Training Instructions

|

| 35 |

+

|

| 36 |

+

Our code is now released!

|

| 37 |

+

|

| 38 |

+

### Install

|

| 39 |

+

```

|

| 40 |

+

conda create -n LucidFusion python=3.9.19

|

| 41 |

+

conda activate LucidFusion

|

| 42 |

+

|

| 43 |

+

# Example: we use torch 2.3.1 + CUDA 11.8; we also tested the latest torch (2.4.1), which works with the latest xformers (0.0.28).

|

| 44 |

+

pip install torch==2.4.1 torchvision==0.19.1 torchaudio==2.4.1 --index-url https://download.pytorch.org/whl/cu118

|

| 45 |

+

|

| 46 |

+

# xformers is required! Please refer to https://github.com/facebookresearch/xformers for details.

|

| 47 |

+

# [linux only] cuda 11.8 version

|

| 48 |

+

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu118

|

| 49 |

+

|

| 50 |

+

# For 3D Gaussian Splatting, we use the version modified by LGM; for details, please refer to https://github.com/3DTopia/LGM

|

| 51 |

+

git clone --recursive https://github.com/ashawkey/diff-gaussian-rasterization

|

| 52 |

+

pip install ./diff-gaussian-rasterization

|

| 53 |

+

|

| 54 |

+

# Other dependencies

|

| 55 |

+

pip install -r requirements.txt

|

| 56 |

+

```
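After installation, a quick import check can catch a missing package before any demo is run. The following is a minimal sketch, not part of the repository: the helper name `check_modules` is hypothetical, and the import names in `REQUIRED_MODULES` are assumed to match the packages installed above.

```python
import importlib.util

# Import names assumed for the packages installed above (an assumption,
# not taken from the repository).
REQUIRED_MODULES = ["torch", "torchvision", "xformers", "diff_gaussian_rasterization"]


def check_modules(names=REQUIRED_MODULES):
    """Return {module_name: bool}, True when the module can be located."""
    # find_spec locates a top-level module without importing it.
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```

This only confirms that the modules can be found; it does not verify that the CUDA builds match your driver.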

|

| 57 |

+

|

| 58 |

+

### Pretrained Weights

|

| 59 |

+

|

| 60 |

+

Our pre-trained weights will be released soon; please check back!

|

| 61 |

+

|

| 62 |

+

Our current model loads a pre-trained diffusion model for its config. We use stable-diffusion-2-1-base; to download it, simply run:

|

| 63 |

+

```

|

| 64 |

+

python pretrained/download.py

|

| 65 |

+

```

|

| 66 |

+

You can skip this step if you already have stable-diffusion-2-1-base; simply update "model_key" in the scripts in the scripts/ folder to point to your local SD-2-1 path.
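The choice between a local copy and the Hub model could be sketched as below. This is an illustration under stated assumptions: `resolve_model_key` and `DEFAULT_MODEL_KEY` are hypothetical names, and the check relies on the diffusers convention that a pipeline folder contains a top-level `model_index.json`. It is not how the repository's scripts actually resolve "model_key".

```python
from pathlib import Path

# Hub ID of the base model named above.
DEFAULT_MODEL_KEY = "stabilityai/stable-diffusion-2-1-base"


def resolve_model_key(local_dir=None):
    """Return a local path if it looks like a diffusers checkpoint, else the Hub ID."""
    if local_dir is not None:
        path = Path(local_dir).expanduser()
        # diffusers pipelines keep a model_index.json at the top level.
        if (path / "model_index.json").is_file():
            return str(path)
    return DEFAULT_MODEL_KEY
```

The returned string is what you would paste into the "model_key" field of the scripts.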

|

| 67 |

+

|

| 68 |

+

## 🔥 Inference

|

| 69 |

+

A shell script is provided with example files.

|

| 70 |

+

To run, you first need to set up the pre-trained weights as follows:

|

| 71 |

+

|

| 72 |

+

```

|

| 73 |

+

cd LucidFusion

|

| 74 |

+

mkdir -p output/demo

|

| 75 |

+

|

| 76 |

+

# Download the pretrained weights and name the file best.ckpt

|

| 77 |

+

|

| 78 |

+

# Place the pretrained weights in LucidFusion/output/demo/best.ckpt

|

| 79 |

+

```
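The same setup can be scripted. The sketch below assumes you have already downloaded a checkpoint file somewhere on disk; `install_checkpoint` is a hypothetical helper, not part of the repository, and it simply copies the file to the location described above.

```python
import shutil
from pathlib import Path


def install_checkpoint(downloaded_ckpt, repo_root="."):
    """Copy a downloaded checkpoint to <repo_root>/output/demo/best.ckpt."""
    src = Path(downloaded_ckpt)
    if not src.is_file():
        raise FileNotFoundError(f"checkpoint not found: {src}")
    dest_dir = Path(repo_root) / "output" / "demo"
    # Create intermediate folders as needed, like `mkdir -p`.
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / "best.ckpt"
    shutil.copy2(src, dest)
    return dest
```

Run it from the LucidFusion folder, or pass the repository path as `repo_root`.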

|

| 80 |

+

We have also provided some preprocessed examples.

|

| 81 |

+

|

| 82 |

+

For GSO files, the example objects are "alarm", "chicken", "hat", "lunch_bag", "mario", and "shoe1".

|

| 83 |

+

|

| 84 |

+

To run the GSO demo:

|

| 85 |

+

```

|

| 86 |

+

# Adjust the "DEMO" field inside gso_demo.sh to load other examples.

|

| 87 |

+

|

| 88 |

+

bash scripts/gso_demo.sh

|

| 89 |

+

```

|

| 90 |

+

|

| 91 |

+

To run the image demo, use the script below; the masks for the provided examples were obtained with preprocess.py. The example objects are "nutella_new", "monkey_chair", and "dog_chair".

|

| 92 |

+

|

| 93 |

+

```

|

| 94 |

+

bash scripts/demo.sh

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

To run the diffusion demo as a single-image-to-multi-view setup, we use the pixel diffusion model trained in CRM, as described in the paper. You can also use other multi-view diffusion models to generate multi-view outputs from a single image.

|

| 98 |

+

|

| 99 |

+

For dependency issues, please see https://github.com/thu-ml/CRM

|

| 100 |

+

|

| 101 |

+

We also provide LGM's imagegen diffusion; to use it, simply set --crm=false in diffusion_demo.sh. You can also pass a different value to --seed.

|

| 102 |

+

|

| 103 |

+

```

|

| 104 |

+

bash scripts/diffusion_demo.sh

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

You can also try your own example! To do that:

|

| 109 |

+

|

| 110 |

+

1. Obtain images and place them in the examples folder:

|

| 111 |

+

```

|

| 112 |

+

LucidFusion

|

| 113 |

+

├── examples/

|

| 114 |

+

| ├── "your obj name"/

|

| 115 |

+

| | ├── "image_01.png"

|

| 116 |

+

| | ├── "image_02.png"

|

| 117 |

+

| | ├── ...

|

| 118 |

+

```

|

| 119 |

+

2. Run preprocess.py to extract the recentered image and its mask:

|

| 120 |

+

```

|

| 121 |

+

# Running the following creates two folders (images, masks) in the "your-obj-name" folder.

|

| 122 |

+

# Check that the extracted masks are correct.

|

| 123 |

+

python preprocess.py examples/your-obj-name --outdir examples/your-obj-name

|

| 124 |

+

```

|

| 125 |

+

|

| 126 |

+

3. Modify demo.sh to set DEMO="examples/your-obj-name", then run the script:

|

| 127 |

+

```

|

| 128 |

+

bash scripts/demo.sh

|

| 129 |

+

```
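The folder layout from steps 1 and 2 can be checked before launching the demo. The following is a minimal sketch: both helper names are hypothetical, the accepted extensions in `IMAGE_SUFFIXES` are an assumption, and the `images` and `masks` folder names come from the preprocess.py comment above.

```python
from pathlib import Path

# Assumed set of accepted image extensions (not taken from the repository).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_input_images(obj_dir):
    """Return the sorted image files placed directly in examples/<obj name>/ (step 1)."""
    return sorted(
        p for p in Path(obj_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def preprocess_outputs_ready(obj_dir):
    """True if the images/ and masks/ folders from step 2 exist and are non-empty."""
    root = Path(obj_dir)
    return all(
        (root / sub).is_dir() and any((root / sub).iterdir())
        for sub in ("images", "masks")
    )
```

For example, call `list_input_images("examples/your-obj-name")` before step 2 and `preprocess_outputs_ready("examples/your-obj-name")` before step 3.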

|

| 130 |

+

|

| 131 |

+

## 🤗 Gradio Demo

|

| 132 |

+

|

| 133 |

+

We are currently building an online demo of LucidFusion with Gradio. It is still under development and will be coming out soon!

|

| 134 |

+

|

| 135 |

+

## 🚧 Todo

|

| 136 |

+

|

| 137 |

+

- [x] Release the inference code

|

| 138 |

+

- [ ] Release our weights

|

| 139 |

+

- [ ] Release the Gradio Demo

|

| 140 |

+

- [ ] Release the Stage 1 and 2 training code

|

| 141 |

+

|

| 142 |

+

## 📍 Citation

|

| 143 |

+

If you find our work useful, please consider citing our paper.

|

| 144 |

+

```

|

| 145 |

+

TODO

|

| 146 |

+

```

|

| 147 |

+

|

| 148 |

+

## 💼 Acknowledgement

|

| 149 |

+

This work is built on many amazing research works and open-source projects:

|

| 150 |

+

- [gaussian-splatting](https://github.com/graphdeco-inria/gaussian-splatting) and [diff-gaussian-rasterization](https://github.com/graphdeco-inria/diff-gaussian-rasterization)

|

| 151 |

+

- [ZeroShape](https://github.com/zxhuang1698/ZeroShape)

|

| 152 |

+

- [LGM](https://github.com/3DTopia/LGM)

|

| 153 |

+

|

| 154 |

+

Thanks for their excellent work and great contributions to the 3D generation area.

|

resources/Training_Instructions.md

ADDED

|

@@ -0,0 +1,64 @@


| 1 |

+

# Preparation

|

| 2 |

+

This is the official implementation of *LucidFusion: Generating 3D Gaussians with Arbitrary Unposed Images*.

|

| 3 |

+

|

| 4 |

+

## Install

|

| 5 |

+

```

|

| 6 |

+

# xformers is required! Please refer to https://github.com/facebookresearch/xformers for details.

|

| 7 |

+

# For example, we use torch 2.3.1 + cuda 11.8

|

| 8 |

+

conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=11.8 -c pytorch -c nvidia

|

| 9 |

+

# [linux only] cuda 11.8 version

|

| 10 |

+

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu118

|

| 11 |

+

|

| 12 |

+

# For 3D Gaussian Splatting, we use the official installation. Please refer to https://github.com/graphdeco-inria/gaussian-splatting for details

|

| 13 |

+

git clone https://github.com/graphdeco-inria/gaussian-splatting --recursive

|

| 14 |

+

pip install ./gaussian-splatting/submodules/diff-gaussian-rasterization

|

| 15 |

+

|

| 16 |

+

# Other dependencies

|

| 17 |

+

conda env create -f environment.yml

|

| 18 |

+

conda activate LucidFusion

|

| 19 |

+

```

|

| 20 |

+

|

| 21 |

+

## Pretrained Weights

|

| 22 |

+

|

| 23 |

+

Our pre-trained weights will be released soon; please check back!

|

| 24 |

+

Our current model loads pre-trained diffusion model weights ([Stable Diffusion 2.1](https://huggingface.co/stabilityai/stable-diffusion-2-1/tree/main) by default) for training purposes.

|

| 25 |

+

|

| 26 |

+

## Inference

|

| 27 |

+

A shell script is provided with example files.

|

| 28 |

+

To run, you first need to set up the pretrained weights as follows:

|

| 29 |

+

|

| 30 |

+

```

|

| 31 |

+

cd LucidFusion

|

| 32 |

+

mkdir -p output/demo

|

| 33 |

+

|

| 34 |

+

# Download the pretrained weights and name the file best.ckpt

|

| 35 |

+

|

| 36 |

+

# Place the pretrained weights in LucidFusion/output/demo/best.ckpt

|

| 37 |

+

|

| 38 |

+

# The result will be saved in the LucidFusion/output/demo/<obj name>/

|

| 39 |

+

|

| 40 |

+

bash scripts/demo.sh

|

| 41 |

+

```

|

| 42 |

+

|

| 43 |

+

More example files will be released soon.

|

| 44 |

+

|

| 45 |

+

You can also try your own example! To do that:

|

| 46 |

+

|

| 47 |

+

First, obtain images and place them in the examples folder:

|

| 48 |

+

```

|

| 49 |

+

LucidFusion

|

| 50 |

+

├── examples/

|

| 51 |

+

| ├── "your obj name"/

|

| 52 |

+

| | ├── "image_01.png"

|

| 53 |

+

| | ├── "image_02.png"

|

| 54 |

+

| | ├── ...

|

| 55 |

+

```

|

| 56 |

+

Then run preprocess.py to extract the recentered image and its mask:

|

| 57 |

+

```

|

| 58 |

+

# Running the following creates two folders (images, masks) in the "your obj name" folder.

|

| 59 |

+

# Check that the extracted masks are correct.

|

| 60 |

+

python preprocess.py "examples/your obj name" --outdir "examples/your obj name"

|

| 61 |

+

|

| 62 |

+

# Modify demo.sh to set DEMO='examples/your obj name', then run the script

|

| 63 |

+

bash scripts/demo.sh

|

| 64 |

+

```
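Object names that contain spaces are easy to misquote on the command line. One way to sidestep shell quoting is to build the preprocess.py call as an argument list. This is a sketch only: `build_preprocess_cmd` is a hypothetical helper, and it assumes preprocess.py takes the input folder as a positional argument plus `--outdir`, as in the command above.

```python
import shlex
import sys


def build_preprocess_cmd(obj_name, examples_dir="examples"):
    """Build the preprocess.py invocation as an argument list (no shell quoting needed)."""
    obj_dir = f"{examples_dir}/{obj_name}"
    # Input folder and --outdir point at the same object folder.
    return [sys.executable, "preprocess.py", obj_dir, "--outdir", obj_dir]


cmd = build_preprocess_cmd("my obj")
# Shell-safe rendering of the arguments, useful for copy-pasting.
print(shlex.join(cmd[1:]))  # preprocess.py 'examples/my obj' --outdir 'examples/my obj'
```

Passing `cmd` to `subprocess.run(cmd, check=True)` from the repository root would then run the script with the name kept as a single argument.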

|

resources/ours_qualitative.jpeg

ADDED

|

resources/output.gif

ADDED

|


|

resources/output_16.gif

ADDED

|


|