Update README.md

README.md

@@ -1,3 +1,81 @@


| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

tags:

|

| 4 |

+

- contrastive learning

|

| 5 |

+

- CLAP

|

| 6 |

+

- audio classification

|

| 7 |

+

- zero-shot classification

|

| 8 |

+

---

|

| 9 |

+

|

| 10 |

+

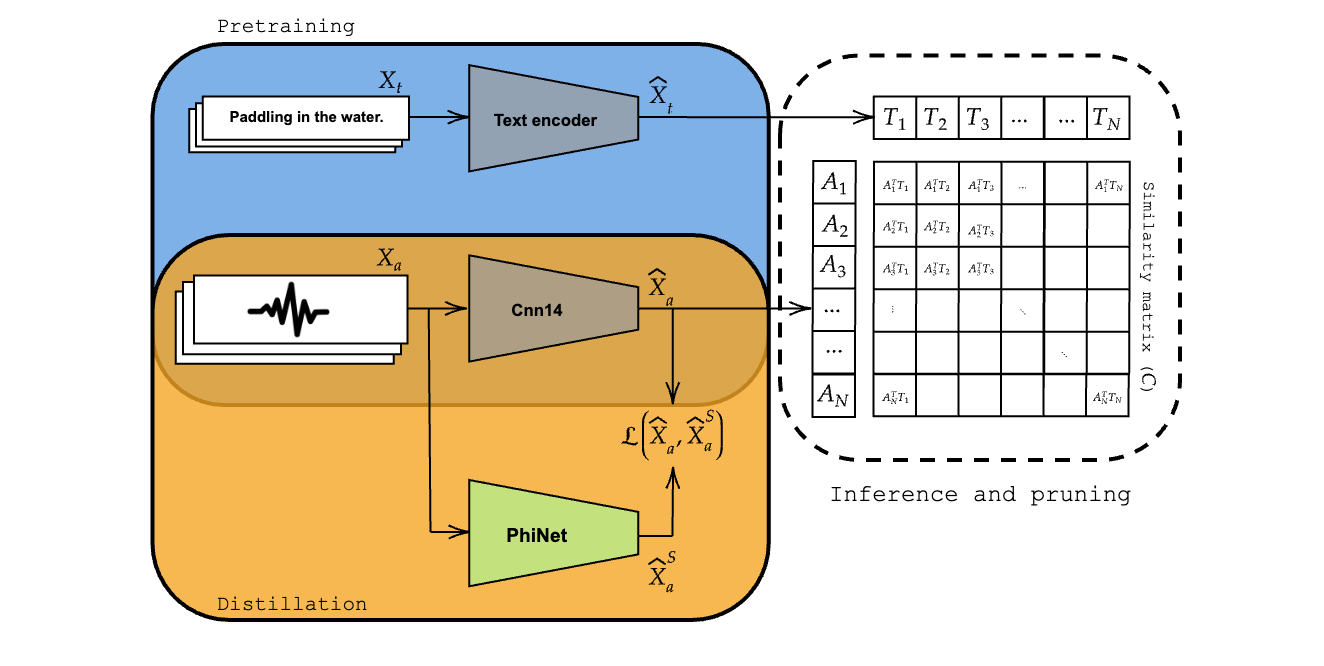

# tinyCLAP: Distilling Contrastive Language-Audio Pretrained models

|

| 11 |

+

|

| 12 |

+

[](https://arxiv.org/abs/2311.14517)

|

| 13 |

+

|

| 14 |

+

This repository contains the official implementation of [tinyCLAP](https://arxiv.org/abs/2311.14517).

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

## Requirements

To install the requirements:

```bash
pip install -r extra_requirements.txt
```

## Training

To train the model(s) in the paper, run this command:

```bash
MODEL_NAME=phinet_alpha_1.50_beta_0.75_t0_6_N_7

./run_tinyCLAP.sh $MODEL_NAME
```

Note that `MODEL_NAME` is formatted so that the script can automatically parse the configuration of the student model from it.
To change the student's parameters, change the corresponding values in the model name.

Please note:
- To use the original CLAP encoder in the distillation setting, replace the model name with `Cnn14`.
- To reproduce the PhiNet variants from the manuscript, refer to the hyperparameters listed in Table 1.
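As an illustration of how a model name can encode the student configuration, here is a minimal Python sketch. It assumes the naming scheme `phinet_alpha_<float>_beta_<float>_t0_<int>_N_<int>`, inferred only from the example name above. `PhiNetConfig` and `parse_model_name` are hypothetical names and are not part of this repository; the actual parsing done by `run_tinyCLAP.sh` may differ.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class PhiNetConfig:
    """Hypothetical container for the student hyperparameters."""
    alpha: float
    beta: float
    t0: int
    N: int


# Assumed naming scheme (inferred from the example name, not from the script):
#   phinet_alpha_<float>_beta_<float>_t0_<int>_N_<int>
_NAME_RE = re.compile(
    r"^phinet_alpha_(?P<alpha>\d+(?:\.\d+)?)"
    r"_beta_(?P<beta>\d+(?:\.\d+)?)"
    r"_t0_(?P<t0>\d+)"
    r"_N_(?P<N>\d+)$"
)


def parse_model_name(name: str) -> Optional[PhiNetConfig]:
    """Return the parsed config, or None for names that do not match
    the assumed PhiNet pattern (for example `Cnn14`)."""
    match = _NAME_RE.match(name)
    if match is None:
        return None
    return PhiNetConfig(
        alpha=float(match["alpha"]),
        beta=float(match["beta"]),
        t0=int(match["t0"]),
        N=int(match["N"]),
    )
```

Under these assumptions, `parse_model_name("phinet_alpha_1.50_beta_0.75_t0_6_N_7")` yields `alpha=1.5`, `beta=0.75`, `t0=6`, `N=7`, while `parse_model_name("Cnn14")` returns `None`.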

## Evaluation

The evaluation command differs slightly from dataset to dataset.
All the necessary commands are listed below.

### ESC50

```bash
python train_clap.py --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --esc_folder $PATH_TO_ESC
```

### UrbanSound8K

```bash
python train_clap.py --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --us8k_folder $PATH_TO_US8K
```

### TUT17

```bash
python train_clap.py --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --tut17_folder $PATH_TO_TUT17
```
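The three commands above differ only in the dataset folder flag. The following Python sketch shows one way to generate them for a given model name. The flag names are copied from the commands above; `DATASET_FLAGS` and `build_eval_command` are hypothetical helpers, not part of this repository, and the sketch only builds the command line without running it.

```python
import shlex

# Flag names are taken from the evaluation commands in this README;
# the dictionary keys are illustrative labels only.
DATASET_FLAGS = {
    "ESC50": "--esc_folder",
    "UrbanSound8K": "--us8k_folder",
    "TUT17": "--tut17_folder",
}


def build_eval_command(model_name: str, dataset: str, folder: str) -> list:
    """Build the argument list for one zero-shot evaluation run."""
    return [
        "python", "train_clap.py",
        "--experiment_name", f"tinyCLAP_{model_name}",
        "--zs_eval", "True",
        DATASET_FLAGS[dataset], folder,
    ]


def build_all_commands(model_name: str, folders: dict) -> dict:
    """Map each dataset label to a shell-quoted command string."""
    return {
        dataset: shlex.join(build_eval_command(model_name, dataset, path))
        for dataset, path in folders.items()
    }
```

The resulting argument list can be passed to `subprocess.run` if the evaluation should be launched from Python. Building a list instead of a single string avoids quoting problems when a dataset path contains spaces.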

## Pre-trained Models

You can download pretrained models here:

- [My awesome model](https://drive.google.com/mymodel.pth) trained on ImageNet using parameters x,y,z.

## Citing tinyCLAP

```
@inproceedings{paissan2024tinyclap,
  title={tinyCLAP: Distilling Contrastive Language-Audio Pretrained Models},
  author={Paissan, Francesco and Farella, Elisabetta},
  booktitle={Interspeech 2024},
  year={2024}
}
```