id (int64, 5 to 1.93M) | title (string, length 0 to 128) | description (string, length 0 to 25.5k) | collection_id (int64, 0 to 28.1k) | published_timestamp (timestamp[s]) | canonical_url (string, length 14 to 581) | tag_list (string, length 0 to 120) | body_markdown (string, length 0 to 716k) | user_username (string, length 2 to 30) |
|---|---|---|---|---|---|---|---|---|

1,913,015 | Computer Vision Meetup: Performance Optimisation for Multimodal LLMs | In this talk we’ll delve into Multi-Modal LLMs, exploring the fusion of language and vision in... | 0 | 2024-07-05T17:34:35 | https://dev.to/voxel51/computer-vision-meetup-performance-optimisation-for-multimodal-llms-k5l | ai, computervision, machinelearning, datascience | In this talk we’ll delve into Multi-Modal LLMs, exploring the fusion of language and vision in cutting-edge models. We'll highlight the challenges in handling diverse data heterogeneity, its architecture design, strategies for efficient training, and optimization techniques to enhance both performance and inference speed. Through case studies and future outlooks, we’ll illustrate the importance of these optimizations in advancing applications across various domains.

About the Speaker

[Neha Sharma](https://www.linkedin.com/in/hashux/) has a rich background in digital products and technology services, having delivered successful projects for industry giants like IBM and launched innovative products for tech startups. As a Product Manager at Ori, Neha specializes in developing cutting-edge AI solutions by actively engaging with AI-based use cases centered on the latest and most popular LLMs, demonstrating her commitment to staying at the forefront of AI technology.

Not a Meetup member? Sign up to attend the next event:

https://voxel51.com/computer-vision-ai-meetups/

Recorded on July 3, 2024 at the AI, Machine Learning and Computer Vision Meetup.

| jguerrero-voxel51 |

1,912,663 | How I stopped my procrastination: Insights into developer mindset | You’ve been at your job for years, you know how to write code good enough to get you by comfortably,... | 0 | 2024-07-05T17:33:52 | https://dev.to/middleware/how-i-stopped-my-procrastination-insights-into-developer-mindset-23hl | productivity, development, beginners, programming | You’ve been at your job for years, you know how to write code good enough to get you by comfortably, yet every now and then there’s a ticket, a ticket that lingers on-and-on on that kanban board, red with delays, reschedules and “is this done?” comments. “I’ll pick it up first thing in the morning” is what you told yourself yesterday, and the day is already dusking off.

<img src="https://media4.giphy.com/media/5q3NyUvgt1w9unrLJ9/200w.gif?cid=6c09b952va9i6h9jue6xqe50vw4o7n9vbwl2cfvn3jbwxnc1&ep=v1_gifs_search&rid=200w.gif&ct=g"/>

We as programmers and developers are likely to procrastinate, and there are good enough reasons for it. And being lazy might be one of them, but the documentation of our brains is much bigger than that.

## Why programmers are likely to procrastinate

### Cognitive overload 😵💫

As problem-solvers, we tend to use the ‘most-contextual’ part of the brain way too often, i.e. the **Frontal Cortex**.

The frontal cortex is responsible for executive functions, which involve analyzing information, identifying patterns, decision making, and most importantly, _doing-the-right-thing-even-if-it-is-the-hard-thing-to-do_ actions.

<img src="https://i.pinimg.com/originals/00/69/51/0069513d884baad8cee121e091fb51ad.gif"/>

For these seemingly-simple tasks, the frontal cortex needs **bucket-loads of energy**, exhibiting a very high metabolic rate.

We, as mere mortals with anti-bluelight glasses, have fixed reserves of energy, and when we expend them, compromises start to occur.

_Behave’s_ author Sapolsky points out that when the frontal cortex is overloaded, subjects become less prosocial, they lie more, are less charitable, and are more likely to cheat on their diets.

> “Willpower is more than just a metaphor; self-control is a finite resource”,

he says, stating that _doing-the-right-thing-even-if-it-is-the-hard-thing-to-do_ is not merely an emotional and moral choice, but is far more deeply tied to the physiology of the brain.

### I’m just a kid

We have all had our fair share of beginner-developer moments. When we plowed through nights and days trying to learn and retain how that _x_ language or _y_ framework functions. And the number of times we almost gave up.

<img src="https://gifdb.com/images/high/what-a-noob-puppet-misyfy7rmepive8a.gif"/>

But after a certain threshold, coding didn’t seem to have that big of a draining effect. And there’s a good reason for it.

When we practice and learn something, we start shifting the cognitive processes to the more reflexive (stemming from a reflex action, an automatic “muscle memory”) parts of the brain, like the cerebellum.

And once that is achieved, we reduce the burden of computation on the Frontal cortex, making the tasks less energy-taxing.

### Decision Fatigue

Cognitive tasks are not limited to logic-oriented, calculative, cautious tasks that one does as a programmer.

It also includes a seemingly simple task: **Decision making**, like choosing the right approach to write readable code, or something as simple as deciding which task to ship first.

And like cognitive loads, decision-fatigue is tied to your energy reserves too, and can ultimately affect your productivity.

## Process rather than Procrastinate

Now that we have broken down Procrastination, it's time to actually tackle it.

### 1. Germinate

To achieve anything, whether it’s your Jira ticket’s completion or a side project, one must start to build. _“Am I taking the most efficient, readable, perfectly orchestrated approach to solve this problem?”_ The friction of uncertainty and self-doubt usually makes it hard enough to even begin.

Opening your laptop is the first victory to overcome the code-block. And the only way to do that is to have something certain, some anchor that doesn’t give you any unease.

<img src="https://media4.giphy.com/media/ZXWWsIMwaHjiHMtNf4/200w.gif?cid=6c09b952ibhnhiqzxzxah6ue2m3owb9grbhswhlclie2nk62&ep=v1_gifs_search&rid=200w.gif&ct=g"/>

I’ve had times where I tried to find a perfect time window to start programming on a weekend; that was me trying to find a unicorn.

So I made it a point that at a certain hour in the morning, I will open Monkey Type and try to hit the day’s first 80+ words a minute.

It’s something I stole from Atomic Habits, and it helped immensely. This simple ritual in the first 5 minutes of my day consisted of adrenaline-rushed, muscle-memory reflexes that had nothing to do with uncertainty of my task.

Thus, I could be eased into opening my IDE to complete that Jira ticket, whose dread had haunted my jira board for a week.

### 2. Granular-ize

Something I learnt fairly recently is to plan in a way that your programming journey will have little to no decision making once you start with writing code.

Everything else can, and should, be pre-planned down to its smallest task-granules, making things as clear as they possibly can be.

<img src="https://media2.giphy.com/media/Ig9dsuczC9dDkOKrIa/200w.gif?cid=6c09b952ay2xn8tymf29sg01ctdyj2c08tiwrwybh1uymklh&ep=v1_gifs_search&rid=200w.gif&ct=g"/>

The concept of an **Engineering Requirements Document** (ERD) was something I looked down upon as corporate scut work for the upper management to feel included in the process.

Turns out I was wrong: it’s intended to make sure that all the decision fatigue occurs during the initial stages, so that shipping the tickets spread throughout the rest of the week puts relatively little strain on your frontal cortex’s energy reserves.

### 3. Game-ify

Updating my Jira tickets on my kanban board didn’t give me enough of a sense of ownership over the fruits of my labor. Nor was I able to track my personal progress: what I completed, what I shipped and what I spilled.

<img src="https://www.jenlivinglife.com/wp-content/uploads/2019/01/gyi7eys35a32b61551d35012060362_499x280.gif"/>

So I kept a set of my mini daily tasks in a local Microsoft To Do list. The satisfying chime of completing a task gave me a big boost in staying on track, without having to worry about what was left undone and what was yet to be picked up.

Since this was personal, I could add tasks like “get a brownie on 3 completions” and wait for it to ping up when I marked it as done.

Motivation is a cocktail of neurotransmitters; make sure you zap your brain with some hits every now and then.

Another tool that can help with this is Middleware’s Project Overview. It systematically showed me the reasons for my spills and overcommitment in a sprint, and it can give you an overview of your Jira Boards and Projects.

### 4. Gauge, Grapple and Give-up (not totally)

Friction in the building process goes far beyond decision fatigue. Fail at something for long enough and you are likely to not want to do that thing anymore.

<img src="https://64.media.tumblr.com/tumblr_lifd0k25wJ1qaakxao1_500.gif" />

It's wise to gauge the problem, allocate a fixed amount of time, and wrestle with the problem with all your might. But when you know that time is up, ask for help.

You need to make a voluntary decision to make the problem smaller than your hands, or add more hands to it.

There’s nothing wrong with an extra set of limbs, or brains. Homo erectus showed the first signs of ordered-socialization within communities, and then came homo sapiens with their big-brains of computation. Companionship precedes cognition, at least within evolution.

## Conclusion

We as developers often forget how human we are. I mean, we are running services that cater to millions of humans.

>_Who are we, but gods on keyboards_?

But, something as little as a missed breakfast can affect our productivity, and something as big as a system-failure can drive us to work harder. We are complex machines with simple values.

As a developer who would rather write a script for an hour than click 4 buttons in 4 seconds, I can vouch that it’s always wise to fix the process rather than the immediate problem. Fix habitual actions, and you fix procrastination.

| eforeshaan |

1,913,046 | เว็บตรง100 (Direct Web 100): Fun Without Going Through an Agent! | เว็บตรง100 (Direct Web 100): Fun Without Going Through an Agent! Discover the best online gaming experience with... | 0 | 2024-07-05T17:33:09 | https://dev.to/ric_moa_cbb48ce3749ff149c/ewbtrng100-khwaamsnukaebbaimtngphaaneeynt-p92 | เว็บตรง100 (Direct Web 100): Fun Without Going Through an Agent!

Discover the best online gaming experience with [เว็บตรง100](https://hhoc.org/), with no agent in between! Safety and transparency are guaranteed, along with great-value promotions and bonuses that will keep you excited every day. Sign-up is easy, and deposits and withdrawals are fast, 24 hours a day. Don't miss out! Join us today for endless fun! | ric_moa_cbb48ce3749ff149c |

|

1,911,847 | Finding Your True North: A Concise Guide to Authenticity | Authenticity. It's a word thrown around a lot these days, but what does it truly mean? At its core,... | 27,967 | 2024-07-05T17:31:02 | https://dev.to/rishiabee/finding-your-true-north-a-concise-guide-to-authenticity-5cbp | authenticity, leadership, management | Authenticity. It's a word thrown around a lot these days, but what does it truly mean? At its core, authenticity is about being genuine, acting with integrity, and living according to your inner compass. It's the unwavering sense of "you" that shines through in your actions, decisions, and even your style.

---

> "**The only person you are destined to become is the person you decide to be.**" - Ralph Waldo Emerson _(This quote emphasizes the power of self-discovery and living according to your own choices.)_

> "**The greatest danger for most of us is not that our aim is too high and we miss it, but that it is too low and we reach it.**" - Michelangelo _(This quote reminds us that true fulfillment comes from striving to be our authentic selves, even if it's challenging.)_

---

So, how can you cultivate your own authentic self? Here's your roadmap:

- **Craft a Personal Manifesto:**

Take time to reflect on your values, your passions, and your non-negotiables. Write them down! This personal manifesto will serve as your guiding light.

- **Embrace Vulnerability:**

Let people see the real you, flaws and all. Authenticity thrives on connection, and connection requires vulnerability. Share your passions, your struggles, and your true opinions.

- **Consistency is Key:**

While your style or hobbies may evolve, your core values should remain constant. This consistency builds trust and allows others to understand who you are at your core.

- **Set Boundaries:**

Respecting your limitations and saying "no" is crucial for authenticity. Don't spread yourself thin trying to please everyone. Prioritize what aligns with your values and protects your well-being.

- **Know When to Stop:**

Don't force a certain image or behavior. Authenticity isn't about maintaining a facade. It's about allowing your true self to shine through, even when it's messy or inconvenient.

- **Transparency Matters:**

Be honest and upfront in your communication. Authenticity doesn't mean blurting out every thought, but it does mean striving for genuineness.

- **Embrace Your Range:**

You can be both a nature enthusiast and a techie, a lover of classical music and a dab hand at baking. Embrace the multifaceted nature of your personality!

Remember, authenticity is a journey, not a destination. There will be stumbles and moments of doubt. But by staying true to your core values and letting your genuine self shine through, you'll find the path to your own unique and powerful form of authenticity.

---

> "**To thine own self be true.**" - William Shakespeare _(A timeless quote reminding us to stay faithful to our authentic selves.)_

---

## The Stories Within

Consider Sarah, a graphic designer with a whimsical, artistic streak. Her colleagues know her for her vibrant clothing choices and her infectious laugh. But when it comes to client work, Sarah is a meticulous professional, delivering polished designs that prioritize functionality. This is authenticity in action. Sarah embraces her full self, both the playful and the serious, without compromising her core values of creativity and excellence.

Now, there's Michael, a history teacher known for his dry wit and passion for obscure historical events. At home, however, Michael is a devoted family man, indulging in silly movie nights with his kids. This apparent contradiction isn't inauthenticity; it's simply the multifaceted nature of a person. Michael's dedication to his students and his family stems from the same core value: a love for learning and connection.

---

> "**Authenticity is a collection of choices that reflect your inner values.**" - Frederic Buechner _(This quote highlights that authenticity is an active process based on your core beliefs.)_

---

## Be You, Unapologetically: Bullet points to Authenticity

**Know Your Values:** Craft a personal manifesto that reflects your core principles and what matters most to you.

**Embrace Vulnerability:** Let people see the real you, strengths and weaknesses. Build connections through open communication.

**Live with Consistency:** While your style may change, your core values should remain constant. This builds trust and allows others to understand who you are.

**Set Boundaries:** Respect your limits and say "no" when needed. Focus your energy on what aligns with your values and protects your well-being.

**Be Transparent:** Strive for honesty and genuineness in your communication.

**Embrace Your Multifaceted Self:** You can have a range of interests and passions. Authenticity celebrates the unique blend that makes you, you.

**The Journey Matters:** Authenticity is a continuous process. There will be challenges, but staying true to yourself is the path to a fulfilling life.

---

> "**Authenticity is the new rebellion.**" - Margaret Mead _(This quote suggests that living authentically can be a form of nonconformity in a world that often pressures us to fit in.)_

--- | rishiabee |

1,912,818 | BLOG ON HOW TO HOST STATIC WEBSITE ON AZURE BLOB STORAGE | You will need to download Visual Studio Code. It is a code editor. It is static because it is fixed... | 0 | 2024-07-05T17:30:52 | https://dev.to/free2soar007/blog-on-how-to-host-static-website-on-azure-blob-storage-4jo0 | You will need to download Visual Studio Code. It is a code editor.

The website is static because its content is fixed, i.e. visitors cannot interact with it.

STEPS

a. Download a sample website and save.

b. Open Visual Studio Code

i. To open Visual Studio Code, search for it in the search bar at the bottom left, beside the Windows icon.

ii. Click on File at the top left.

iii. Select Open Folder from the dropdown.

iv. Go to the Downloads folder and find the folder you want to work on.

Click on it and choose Select Folder.

v. Click on index.html in Visual Studio Code to open it.

vi. Then, edit the portions written in white. They sit between the tags. Take extra care not to delete or tamper with the surrounding code.

The portions written in white are what renders on the page, i.e. what we can see when the page is opened in the browser.

After editing, save the file.

2. Go to Azure Portal

i. Login to Azure

ii. Create a Storage Account. Search for Storage Accounts in search bar, and select it

iii. Click on +Create on the top left of the page

iv. Edit the Basic page, leave the others at default. Then, review and create.

After successfully creating the storage account, click on 'Go to resource'.

v. Locate the static website.

The static website setting can be found either by clicking 'Capabilities' or by clicking 'Data Management' in the left pane.

Click on Data Management, then click on 'Static website'.

vi. After clicking 'Static website', enable it. Then, enter the index document name and the error document path, and click Save.

The index document is the root page of the site; from the index, you can link to the other pages.

vii. After saving, a container named `$web` will be created in the storage account, and the 'Primary Endpoint' and 'Secondary Endpoint' links will be displayed. These links, when opened in the browser, will take you to your website.

viii. Go to Data Storage

Click on Containers. This will list the recently created `$web` container.

ix. Click on the `$web` container, click 'Upload', and select the files to be uploaded from the folder, or drag and drop them onto the upload pane. Then, click on Upload.

x. To access the website, go to the overview page.

Select Data Management, Click on Static website, and copy the 'Primary Endpoint', and paste it in your browser.

https://anthonywebsite.z19.web.core.windows.net/

| free2soar007 |

|

1,913,044 | The Future of Digital Marketing: Trends to Watch in 2024 | As the digital landscape continues to evolve, businesses must stay ahead of the curve to remain... | 0 | 2024-07-05T17:27:48 | https://dev.to/amandigitalswag/the-future-of-digital-marketing-trends-to-watch-in-2024-3lbi | amandigitalswag, digitalmarketing, bestdigitalmarketingcompany | As the digital landscape continues to evolve, businesses must stay ahead of the curve to remain competitive. Here are some of the key trends shaping the future of digital marketing in 2024:

1. AI and Machine Learning Integration

Artificial Intelligence (AI) and Machine Learning (ML) are no longer just buzzwords; they are integral parts of digital marketing strategies. AI-driven tools can analyze vast amounts of data to provide insights into customer behavior, predict trends, and automate tasks. Chatbots and virtual assistants powered by AI enhance customer service by providing instant responses and personalized experiences.

2. Personalization at Scale

Consumers expect brands to understand their preferences and deliver personalized experiences. Advanced data analytics and AI enable marketers to create highly targeted campaigns that resonate with individual users. Personalization extends beyond email marketing to include dynamic website content, personalized product recommendations, and tailored social media ads.

3. Voice Search Optimization

With the increasing use of smart speakers and voice-activated devices, optimizing for voice search is becoming crucial. Voice search queries are typically longer and more conversational, requiring a shift in SEO strategies. Marketers need to focus on natural language processing and optimizing content for voice-specific keywords.

4. Video Marketing Dominance

Video content continues to dominate digital marketing, with platforms like YouTube, TikTok, and Instagram Reels leading the charge. Live streaming, interactive videos, and short-form content are particularly effective in capturing audience attention. Marketers should invest in high-quality video production and leverage storytelling to engage viewers.

5. Sustainability and Social Responsibility

Consumers are increasingly concerned about the ethical and environmental impact of their purchases. Brands that demonstrate a commitment to sustainability and social responsibility can build stronger connections with their audience. Transparency in supply chains, eco-friendly practices, and social advocacy are becoming important aspects of brand identity.

6. Augmented Reality (AR) and Virtual Reality (VR)

AR and VR technologies are transforming the way consumers interact with brands. From virtual try-ons to immersive brand experiences, these technologies offer innovative ways to engage customers. As AR and VR become more accessible, marketers should explore how these tools can enhance their campaigns.

7. Privacy and Data Security

With increasing concerns about data privacy, marketers must prioritize transparency and compliance with regulations such as GDPR and CCPA. Building trust with consumers through clear data policies and secure data handling practices is essential. Brands that respect user privacy will stand out in a crowded market.

8. Influencer Marketing Evolution

Influencer marketing continues to be a powerful strategy, but its landscape is evolving. Micro-influencers and nano-influencers are gaining traction due to their highly engaged and loyal followings. Authenticity and genuine connections are key, as consumers are becoming more discerning about influencer endorsements.

9. Omnichannel Marketing

An omnichannel approach ensures a seamless customer experience across multiple touchpoints, including online and offline channels. Integrated marketing strategies that provide consistent messaging and personalized interactions can drive higher engagement and conversions. Brands should focus on creating cohesive journeys that cater to the preferences of their target audience.

10. Blockchain for Digital Advertising

Blockchain technology offers potential solutions to some of the challenges faced in digital advertising, such as ad fraud and transparency issues. By providing a decentralized and secure way to verify transactions, blockchain can enhance the credibility and effectiveness of digital ad campaigns.

Conclusion

The [digital marketing](https://amandigitalswag.in/shantipuram/) landscape is rapidly changing, driven by technological advancements and shifting consumer behaviors. Staying informed about these trends and adapting strategies accordingly will be crucial for businesses aiming to thrive in 2024 and beyond. By embracing innovation and focusing on customer-centric approaches, marketers can navigate the complexities of the digital world and achieve long-term success.

[AmanDigitalSwag](https://amandigitalswag.in/shantipuram/) offers the [Best Digital Marketing](https://amandigitalswag.in/shantipuram/). Save 30% with the [Top Online Marketing Agency](https://amandigitalswag.in/shantipuram/)! Experience rapid growth and outstanding service. For more information, visit us at:

https://amandigitalswag.in/shantipuram/

| amandigitalswag |

1,913,042 | Laravel logging | To help you learn more about what's happening within your application, Laravel provides robust... | 0 | 2024-07-05T17:20:30 | https://dev.to/developeralamin/laravel-logging-32jm | To help you learn more about what's happening within your application, Laravel provides robust logging services that allow you to log messages to files, the system error log, and even to Slack to notify your entire team.

The log files are stored in this folder:

```

storage/logs

```

We can create a custom Laravel log channel. Go to `config/logging.php` and add it to the `channels` array:

```

// The 'single' channel that exists by default
'single' => [
    'driver' => 'single',
    'path' => storage_path('logs/laravel.log'),
    'level' => env('LOG_LEVEL', 'debug'),
    'replace_placeholders' => true,
],

// A custom channel that writes to its own file
'custom' => [
    'driver' => 'single',
    'path' => storage_path('logs/custom.log'),
    'level' => env('LOG_LEVEL', 'debug'),
    'replace_placeholders' => true,
],

```

If we want every log entry to be written to our custom log file, edit the `.env` file and replace `LOG_CHANNEL=stack` with:

```
LOG_CHANNEL=custom
```

| developeralamin |

|

1,913,011 | Deadline Extended for the Wix Studio Challenge | Due to the fourth of July holiday in the U.S. and some confusion around submission guidelines, we... | 0 | 2024-07-05T17:17:08 | https://dev.to/devteam/deadline-extended-for-the-wix-studio-challenge-2c7f | wixstudiochallenge, webdev, devchallenge, javascript | Due to the fourth of July holiday in the U.S. and some confusion around submission guidelines, we have decided to extend the deadline for the Wix Studio Challenge and give participants an extra week to work on their projects.

**<mark>The NEW deadline for the Wix Studio Challenge is July 14.</mark> We will announce winners on July 16.**

As such, we'll take this opportunity to clarify some elements of the challenge below.

### All projects must be created with the **Wix Studio Editor**

The Wix Studio Editor and the standard Wix Editor are different platforms. To ensure you are working in Wix Studio, new users should take the following steps:

1. Navigate to the [Wix Studio page](https://www.wix.com/studio)

2. Click "Start Creating"

3. Create an account

4. Select "For a client, as a freelancer or an agency" and click continue

5. Click "Go to Wix Studio"

6. Click "As a freelancer"

7. Select "Web Development" and click continue

8. Click "Start Creating"

### Usage of Wix Apps and Design Templates

You may use any App on the Wix App Market including those made by Wix (i.e. Wix Apps), but you must start your project from a blank template. Please do not use any pre-built visual design templates.

### Submission Template

The submission template should have pre-filled prompts for you to fill out. On the off chance that it does not, please ensure your submission includes the following:

- An overview of your project

- A link to your Wix Studio app

- Screenshots of your project

- Insight into how you leveraged Wix Studio’s JavaScript development capabilities

- List of APIs and libraries you utilized

- List of teammate usernames if you worked on a team

Here is the template again for anyone who was having trouble previously:

{% cta https://dev.to/new?prefill=---%0Atitle%3A%20%0Apublished%3A%20%0Atags%3A%20%20devchallenge%2C%20wixstudiochallenge%2C%20webdev%2C%20javascript%0A---%0A%0A*This%20is%20a%20submission%20for%20the%20%5BWix%20Studio%20Challenge%20%5D(https%3A%2F%2Fdev.to%2Fchallenges%2Fwix).*%0A%0A%0A%23%23%20What%20I%20Built%0A%3C!--%20Share%20an%20overview%20about%20your%20project.%20--%3E%0A%0A%23%23%20Demo%0A%3C!--%20Share%20a%20link%20to%20your%20Wix%20Studio%20app%20and%20include%20some%20screenshots%20here.%20--%3E%0A%0A%23%23%20Development%20Journey%0A%3C!--%20Tell%20us%20how%20you%20leveraged%20Wix%20Studio%E2%80%99s%20JavaScript%20development%20capabilities--%3E%0A%0A%3C!--%20Which%20APIs%20and%20Libraries%20did%20you%20utilize%3F%20--%3E%0A%0A%3C!--%20Team%20Submissions%3A%20Please%20pick%20one%20member%20to%20publish%20the%20submission%20and%20credit%20teammates%20by%20listing%20their%20DEV%20usernames%20directly%20in%20the%20body%20of%20the%20post.%20--%3E%0A%0A%0A%3C!--%20Don%27t%20forget%20to%20add%20a%20cover%20image%20(if%20you%20want).%20--%3E%0A%0A%0A%3C!--%20Thanks%20for%20participating!%20%E2%86%92 %}

Wix Studio Challenge Submission Template

{% endcta %}

### Challenge Overview

If you’re new to the challenge or couldn’t participate because of our previous deadline, consider this your lucky day!

**We challenge you to build an innovative eCommerce experience with Wix Studio.** [Ania Kubów](https://www.youtube.com/@AniaKubow) is our special guest judge, and we have $3,000 up for grabs for one talented winner!

You can find all challenge details in our original announcement post:

{% link https://dev.to/devteam/join-us-for-the-wix-studio-challenge-with-special-guest-judge-ania-kubow-3000-in-prizes-3ial %}

We hope this extra time gives all participants the opportunity to build something they are truly proud of! We can’t wait to see your submissions.

Happy Coding!

| thepracticaldev |

1,913,040 | Understanding Web Hosting: A Comprehensive Guide | Web hosting is a fundamental component of the internet, serving as the backbone for websites and... | 0 | 2024-07-05T17:12:30 | https://dev.to/leo_jack_4f2533dc77f81d90/understanding-web-hosting-a-comprehensive-guide-2h9d | webtesting, webhostingcompany | Web hosting is a fundamental component of the internet, serving as the backbone for websites and online applications. For anyone looking to establish an online presence, understanding web hosting is crucial. This guide will delve into what web hosting is, the types of web hosting available, and how to choose the right web hosting service for your needs.

**What is Web Hosting?**

**[Web hosting](https://www.riteanswers.com/)** refers to the service that allows individuals and organizations to make their websites accessible via the World Wide Web. Web hosting providers supply the technologies and services needed for a website or webpage to be viewed on the internet. Websites are hosted, or stored, on special computers called servers. When internet users want to view your website, all they need to do is type your website address or domain into their browser. Their computer will then connect to your server and your web pages will be delivered to them through the browser.

**Types of Web Hosting**

**Shared Hosting**: This is the most common and affordable type of web hosting. In shared hosting, multiple websites share a single server's resources, including bandwidth, storage, and processing power. It's ideal for small websites and blogs with low to moderate traffic.

**VPS Hosting**: Virtual Private Server (VPS) hosting provides a middle ground between shared hosting and dedicated hosting. A VPS hosting environment mimics a dedicated server within a shared hosting environment. It is suitable for websites that have grown beyond the limitations of shared hosting.

**Dedicated Hosting**: With dedicated hosting, you get an entire server to yourself. This type of hosting is best for websites with high traffic volumes or those requiring substantial resources. It offers the highest level of performance, security, and control.

**Cloud Hosting**: Cloud hosting uses a network of servers to ensure maximum uptime and scalability. This type of hosting is perfect for websites that experience fluctuating traffic levels, as it allows for resources to be allocated dynamically based on demand.

**Managed Hosting**: In managed hosting, the hosting provider takes care of all server-related issues, including maintenance, security, and updates. This option is excellent for those who do not have the technical expertise to manage a server or who prefer to focus on their core business activities.

**Choosing the Right Web Hosting Service**

When selecting a **[web hosting service](https://www.riteanswers.com/)**, consider the following factors:

**Performance**: Look for hosting services that guarantee high uptime and fast load times.

**Security**: Ensure that the hosting provider offers robust security measures, including SSL certificates and regular backups.

**Support**: Opt for providers that offer 24/7 customer support to help resolve any issues promptly.

**Scalability**: Choose a hosting service that can grow with your website, offering options to upgrade resources as needed.

**Cost**: Compare the pricing plans of different providers to find one that offers good value for your specific requirements.

Understanding the various aspects of web hosting will enable you to make an informed decision that best suits your website's needs. Whether you're starting a personal blog or running a large e-commerce site, the right web hosting service is critical to your online success. | leo_jack_4f2533dc77f81d90 |

1,913,039 | 2058. Find the Minimum and Maximum Number of Nodes Between Critical Points | 2058. Find the Minimum and Maximum Number of Nodes Between Critical Points Medium A critical point... | 27,523 | 2024-07-05T17:09:40 | https://dev.to/mdarifulhaque/2058-find-the-minimum-and-maximum-number-of-nodes-between-critical-points-13c3 | php, leetcode, algorithms, programming | 2058\. Find the Minimum and Maximum Number of Nodes Between Critical Points

Medium

A **critical point** in a linked list is defined as **either** a **local maxima** or a **local minima**.

A node is a **local maxima** if the current node has a value **strictly greater** than the previous node and the next node.

A node is a **local minima** if the current node has a value **strictly smaller** than the previous node and the next node.

Note that a node can only be a local maxima/minima if there exists **both** a previous node and a next node.

Given a linked list `head`, return _an array of length 2 containing `[minDistance, maxDistance]` where `minDistance` is the **minimum distance** between **any two distinct** critical points and `maxDistance` is the **maximum distance** between **any two distinct** critical points. If there are **fewer** than two critical points, return `[-1, -1]`_.

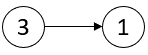

**Example 1:**

- **Input:** head = [3,1]

- **Output:** [-1,-1]

- **Explanation:** There are no critical points in [3,1].

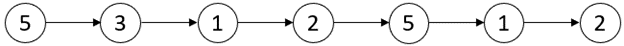

**Example 2:**

- **Input:** head = [5,3,1,2,5,1,2]

- **Output:** [1,3]

- **Explanation:** There are three critical points:

- [5,3,1,2,5,1,2]: The third node is a local minima because 1 is less than 3 and 2.

- [5,3,1,2,5,1,2]: The fifth node is a local maxima because 5 is greater than 2 and 1.

- [5,3,1,2,5,1,2]: The sixth node is a local minima because 1 is less than 5 and 2.

- The minimum distance is between the fifth and the sixth node. minDistance = 6 - 5 = 1.

- The maximum distance is between the third and the sixth node. maxDistance = 6 - 3 = 3.

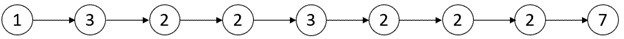

**Example 3:**

- **Input:** head = [1,3,2,2,3,2,2,2,7]

- **Output:** [3,3]

- **Explanation:** There are two critical points:

- [1,3,2,2,3,2,2,2,7]: The second node is a local maxima because 3 is greater than 1 and 2.

- [1,3,2,2,3,2,2,2,7]: The fifth node is a local maxima because 3 is greater than 2 and 2.

- Both the minimum and maximum distances are between the second and the fifth node.

- Thus, minDistance and maxDistance are both 5 - 2 = 3.

- Note that the last node is not considered a local maxima because it does not have a next node.

**Constraints:**

- The number of nodes in the list is in the range <code>[2, 10<sup>5</sup>]</code>.

- <code>1 <= Node.val <= 10<sup>5</sup></code>

**Solution:**

```php
/**
 * Definition for a singly-linked list.
 * class ListNode {
 *     public $val = 0;
 *     public $next = null;
 *     function __construct($val = 0, $next = null) {
 *         $this->val = $val;
 *         $this->next = $next;
 *     }
 * }
 */
class Solution {

    /**
     * @param ListNode $head
     * @return Integer[]
     */
    function nodesBetweenCriticalPoints($head) {
        $result = [-1, -1];
        // Initialize minimum distance to the maximum possible value
        $minDistance = PHP_INT_MAX;
        // Pointers to track the previous node, current node, and indices
        $previousNode = $head;
        $currentNode = $head->next;
        $currentIndex = 1;
        $previousCriticalIndex = 0;
        $firstCriticalIndex = 0;

        while ($currentNode->next != null) {
            // Check if the current node is a local maxima or minima
            if (($currentNode->val < $previousNode->val &&
                 $currentNode->val < $currentNode->next->val) ||
                ($currentNode->val > $previousNode->val &&
                 $currentNode->val > $currentNode->next->val)) {
                // If this is the first critical point found
                if ($previousCriticalIndex == 0) {
                    $previousCriticalIndex = $currentIndex;
                    $firstCriticalIndex = $currentIndex;
                } else {
                    // Calculate the minimum distance between critical points
                    $minDistance = min($minDistance, $currentIndex - $previousCriticalIndex);
                    $previousCriticalIndex = $currentIndex;
                }
            }
            // Move to the next node and update indices
            $currentIndex++;
            $previousNode = $currentNode;
            $currentNode = $currentNode->next;
        }

        // If at least two critical points were found
        if ($minDistance != PHP_INT_MAX) {
            $maxDistance = $previousCriticalIndex - $firstCriticalIndex;
            $result = [$minDistance, $maxDistance];
        }

        return $result;
    }
}
```
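
For readers who want to try the same single-pass idea outside PHP, here is a minimal Python sketch. The `ListNode` class, the `build_list` helper, and the function name are my own illustrative choices, not part of the original solution. The key observation is the same: the minimum distance always lies between two consecutive critical points, and the maximum distance is between the first and the last one.

```python
from typing import List, Optional


class ListNode:
    """Minimal singly-linked list node (illustrative, mirrors the LeetCode definition)."""

    def __init__(self, val: int = 0, next: "Optional[ListNode]" = None):
        self.val = val
        self.next = next


def nodes_between_critical_points(head: ListNode) -> List[int]:
    first = last = -1              # indices of the first and most recent critical points
    min_distance = float("inf")
    prev, curr, index = head, head.next, 1
    # A node needs both a previous and a next node to be a critical point.
    while curr is not None and curr.next is not None:
        is_min = curr.val < prev.val and curr.val < curr.next.val
        is_max = curr.val > prev.val and curr.val > curr.next.val
        if is_min or is_max:
            if first == -1:
                first = index
            else:
                # Only consecutive critical points can give the minimum distance.
                min_distance = min(min_distance, index - last)
            last = index
        prev, curr, index = curr, curr.next, index + 1
    if min_distance == float("inf"):
        return [-1, -1]            # fewer than two critical points
    return [min_distance, last - first]


def build_list(values: List[int]) -> Optional[ListNode]:
    """Hypothetical helper: build a linked list from a Python list."""
    head = None
    for value in reversed(values):
        head = ListNode(value, head)
    return head


print(nodes_between_critical_points(build_list([5, 3, 1, 2, 5, 1, 2])))  # [1, 3]
```

The loop visits each node once, so the running time is linear in the length of the list and only a handful of variables are kept.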

- **[LinkedIn](https://www.linkedin.com/in/arifulhaque/)**

- **[GitHub](https://github.com/mah-shamim)**

| mdarifulhaque |

1,913,038 | Solving Robotic or Distorted Voice in Discord: A Comprehensive Guide with Free Phone Numbers for Discord | Have you ever been in the middle of an intense gaming session or an important chat on Discord, only... | 0 | 2024-07-05T17:09:19 | https://dev.to/legitsms/solving-robotic-or-distorted-voice-in-discord-a-comprehensive-guide-with-free-phone-numbers-for-discord-1f6e | webdev, beginners, javascript, programming | Have you ever been in the middle of an intense gaming session or an important chat on Discord, only to have your voice sound like a robot or become annoyingly distorted? You're not alone. This issue can be incredibly frustrating, but fortunately, there are several solutions you can try to resolve it. In this article, we'll dive into why this problem occurs and offer practical, easy-to-follow steps to fix it. Plus, we’ll cover some related tips, such as how to use free phone numbers for Discord and free phone numbers for Discord verification, which can come in handy for troubleshooting and enhancing your overall Discord experience.

Understanding the Issue: Why Does Your Voice Sound Robotic or Distorted on Discord?

Before we jump into the solutions, it’s essential to understand why this problem occurs. A robotic or distorted voice on Discord is typically due to one or more of the following reasons:

- Network Issues: Poor internet connection or high latency can disrupt the audio quality.

- Hardware Problems: Issues with your microphone or headset.

- Software Conflicts: Other applications or settings interfering with Discord.

- Server Problems: Issues with the Discord servers or the specific voice channel you’re using.

Fixing Network Issues

Check Your Internet Connection

Your internet connection is the backbone of a smooth Discord experience. Here's what you can do:

1. Speed Test: Run an internet speed test to ensure you have a stable and fast connection.

2. Wired Connection: If possible, use a wired connection instead of Wi-Fi to reduce latency.

3. Restart Router: Sometimes, a simple router restart can resolve temporary connectivity issues.

Optimize Discord’s Voice Settings

Discord has several settings that can be tweaked to improve voice quality:

1. Open Discord and go to User Settings (cog icon).

2. Select Voice & Video.

3. Under Input Sensitivity, enable Automatically determine input sensitivity.

4. Set the Input Mode to Voice Activity.

5. Adjust the Quality of Service (QoS) setting by disabling Enable Quality of Service High Packet Priority.

Addressing Hardware Problems

Microphone and Headset Check

Ensure your microphone and headset are functioning correctly:

1. Check Connections: Ensure all cables are securely connected.

2. Test with Other Apps: Use your microphone and headset with another application to see if the issue persists.

3. Update Drivers: Make sure your audio drivers are up to date. You can do this through Device Manager on Windows or via your hardware manufacturer’s website.

Reduce Background Noise

Background noise can cause distortion:

1. Use Noise-Cancelling Features: Many modern headsets come with noise-cancelling features. Enable these if available.

2. Discord Noise Suppression: Enable Krisp, Discord’s built-in noise suppression feature, by going to User Settings > Voice & Video > Advanced and toggling Noise Suppression.

Resolving Software Conflicts

Close Background Applications

Other applications running in the background can interfere with Discord:

1. Close Unnecessary Programs: Use Task Manager (Ctrl + Shift + Esc) to close any unnecessary applications.

2. Check for Conflicts: Ensure no other voice chat applications are running that might conflict with Discord.

Update Discord

Always use the latest version of Discord:

1. Check for Updates: Discord usually updates automatically, but you can check manually by restarting the app.

Adjust Quality of Service Settings

QoS settings can sometimes cause issues:

1. Disable QoS: Go to User Settings > Voice & Video > Quality of Service and disable Enable Quality of Service High Packet Priority.

Server Problems and Solutions

Switch Servers or Regions

Sometimes, the issue might be with the Discord server or region you are connected to:

1. Change Server Region: If you are an admin of the server, go to Server Settings > Overview > Server Region and change to a different region.

2. Join a Different Voice Channel: Sometimes, simply switching to another voice channel can resolve the issue.

Enhancing Your Discord Experience: Using Free Phone Numbers for Verification

Verification is an essential step for securing your Discord account and avoiding spammers. Here’s how to use free phone numbers effectively.

Why Use Free Phone Numbers for Discord Verification?

Using a temp number for Discord can be beneficial:

1. Privacy: Protect your number.

2. Convenience: Easily get past verification steps without using your real number.

How to Get a Temp Phone Number for Discord

1. Online Services: Use services like LegitSMS.com as the best and most reliable provider of free phone numbers for Discord.

2. Temporary Number Apps: Apps like Hushed or Burner provide temporary phone numbers.

3. Comprehensive Guide: Read this blog post about using temp phone numbers for Discord registration and verification for more detailed instructions.

Verification Process

1. Get a Number: Obtain a temp number for Discord from one of the services mentioned.

2. Enter Number in Discord: Go to User Settings > My Account > Phone and enter the temporary number.

3. Receive Code: Check the temporary number service for the verification code.

4. Enter Code: Enter the code in Discord to complete verification.

Conclusion

Dealing with a robotic or distorted voice on Discord can be annoying, but by following the steps outlined above, you can significantly improve your audio quality and overall Discord experience. Whether it’s optimizing your network settings, checking your hardware, resolving software conflicts, or even using a temp phone number for Discord verification, these solutions cover all the bases. Now, you can enjoy clear, uninterrupted conversations on Discord, just the way it should be.

FAQs

1. What causes a robotic voice on Discord?

- A robotic voice on Discord is usually caused by network issues, hardware problems, or software conflicts.

2. How can I fix my distorted voice on Discord?

- To fix a distorted voice, check your internet connection, update your audio drivers, adjust Discord’s voice settings, and ensure no background applications are interfering.

3. Can I use a temporary phone number for Discord verification?

- Yes, using a temporary phone number for Discord verification is a great way to protect your privacy and easily complete the verification process.

4. Why should I disable QoS on Discord?

- Disabling QoS can help if you are experiencing audio issues, as sometimes QoS settings can cause problems with certain network configurations.

5. What should I do if changing servers doesn't fix my voice issues on Discord?

- If changing servers doesn’t help, try restarting your router, closing background applications, updating Discord, or using a wired connection to improve stability.

For more information on free phone numbers and FAQs, visit LegitSMS FAQ. If you need further assistance with Discord, check out the official Discord website at https://discord.com/. | legitsms |

1,913,037 | Top Engineering College in Dharmapuri: Shreenivasa Engineering College | When it comes to pursuing engineering education in Dharmapuri, Shreenivasa Engineering College stands... | 0 | 2024-07-05T17:09:11 | https://dev.to/shreenivasa_123/top-engineering-college-in-dharmapuri-shreenivasa-engineering-college-289b | college, education, engineering | When it comes to pursuing engineering education in Dharmapuri, Shreenivasa Engineering College stands out as the [top engineering college in Dharmapuri](https://shreenivasa.info/). Our institution is renowned for its commitment to academic excellence, cutting-edge facilities, and a student-centric approach. With a diverse range of engineering programs, we cater to the evolving needs of the industry and ensure that our graduates are well-equipped to face future challenges.

At Shreenivasa Engineering College, we pride ourselves on our world-class faculty who are not only experts in their respective fields but also passionate about imparting knowledge. Our state-of-the-art laboratories and research centers provide students with hands-on experience, fostering innovation and practical skills. Additionally, our college boasts an impressive placement record, with numerous top-tier companies recruiting our graduates every year.

Our curriculum is designed to be both rigorous and flexible, allowing students to specialize in areas of interest while gaining a solid foundation in core engineering principles. We emphasize project-based learning, encouraging students to engage in real-world problem-solving and collaborative projects. This approach not only enhances technical skills but also develops critical thinking and teamwork abilities.

Shreenivasa Engineering College also offers a vibrant campus life, with a wide range of extracurricular activities and student organizations. From technical clubs to cultural events, there are numerous opportunities for students to explore their interests and develop leadership skills. Our supportive campus environment ensures that every student can thrive both academically and personally.

As the top engineering college in Dharmapuri, Shreenivasa Engineering College is dedicated to shaping the engineers of tomorrow. We continuously update our programs to keep pace with technological advancements and industry trends. Our strong industry connections and alumni network provide students with valuable mentorship and career opportunities.

Choosing Shreenivasa Engineering College means choosing a future filled with promise and potential. Join us to embark on an exciting journey of discovery and innovation. With our comprehensive education and supportive community, you will be well-prepared to make significant contributions to the engineering field.

Discover why Shreenivasa Engineering College is recognized as the top engineering college in Dharmapuri. Enroll today and become part of an institution that is committed to excellence and success.

| shreenivasa_123 |

1,913,036 | PYTHON THE BEAST! | Alright Python is an object-oriented programing language. It makes development of new applications... | 0 | 2024-07-05T17:08:54 | https://dev.to/daphneynep/python-the-beast-24mp | Alright, Python is an object-oriented programming language. Its high-level built-in data structures and dynamic typing make it useful for developing new applications. It's also useful for scripting or as “glue” code to combine existing components written in different languages. OK, as I dive deeper into Python, learning the modules and doing some of the labs, I'm getting nervous because it isn't clicking for me yet! I mean, most people say Python is their favorite programming language. Maybe that's because its “simple and easy to learn syntax emphasizes readability and therefore reduces the cost and complication of long-term program maintenance” (Flatiron School, Intro to Python).

Why didn’t I know Python was so difficult? As much as it makes sense, it’s also confusing at the same time. Sounds crazy, right? I just feel like there are a lot of layers that keep coming one after the other as you finish each part; it’s like a mystery. It’s crazy, but I really feel challenged to keep learning and to gain a better understanding of how to code with Python. For example, I am currently stuck on something like this:

**# Wrong:**

    import math  # needed for math.sqrt

    def foo(x):
        if x >= 0:
            return math.sqrt(x)

    def bar(x):
        if x < 0:
            return
        return math.sqrt(x)

**# Correct:**

    def foo(x):
        if x >= 0:
            return math.sqrt(x)
        else:
            return None

    def bar(x):
        if x < 0:
            return None
        return math.sqrt(x)

When I try to follow the correct way to write my code, it doesn’t seem to work. What I am working on is a bit different from the code above, but the idea is the same. I’m not sure what I am doing wrong yet, but I’ll keep running it again and again until I get it right.
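
One thing that helped me think about this: as far as I can tell, the "wrong" and "correct" versions return exactly the same values. A function that falls off the end, or that uses a bare `return`, gives back `None` implicitly. The difference is only about being consistent and explicit. Here is a small sketch to check that; the names `foo_implicit` and `foo_explicit` are mine, just for the comparison.

```python
import math


def foo_implicit(x):
    # Falls off the end when x < 0, so Python returns None implicitly.
    if x >= 0:
        return math.sqrt(x)


def foo_explicit(x):
    # Same behavior, but every branch states what it returns.
    if x >= 0:
        return math.sqrt(x)
    else:
        return None


for value in (9, -1):
    print(value, foo_implicit(value), foo_explicit(value))
# 9 3.0 3.0
# -1 None None
```

So if the explicit version "doesn't work" in a lab, the cause is probably somewhere else, for example indentation or a missing `import math`.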

| daphneynep |

|

1,901,749 | 🌀Huracán: The educational project for engineers learning ZK | Learning about ZK today is no easy task. It is a new technology without much documentation.... | 0 | 2024-07-05T17:06:48 | https://dev.to/turupawn/huracan-el-proyecto-educativo-para-ingenieros-aprendiendo-zk-2dl4 | ---

title: 🌀Huracán: The educational project for engineers learning ZK

published: true

description:

tags:

# cover_image: https://direct_url_to_image.jpg

# Use a ratio of 100:42 for best results.

# published_at: 2024-06-26 16:56 +0000

---

Learning about ZK today is no easy task. It is a new technology without much documentation. Huracán was born from my own need to learn ZK in a practical way, aimed at developers and engineers.

Huracán is a fully functional project, capable of making private transactions on Ethereum and EVM blockchains. It is based on privacy projects currently in operation, but with the minimum amount of code to ease the learning process. We will cover how this technology can adapt to new use cases and future regulations. In addition, at the end of the article I share what is needed to take this project from testnet experiments to real production use.

After finishing this guide you will be able to go to other projects of the same kind and understand how they are built.

Prefer to see the full code? [Head over to GitHub](https://github.com/Turupawn/Huracan) to find all the code from this guide.

_How Huracán is built:_

* Circuit written in Circom

* Hashing with Poseidon

* Deposit and withdrawal logic contracts in Solidity

* Merkle tree construction in JS and Solidity, verification in Circom

* Frontend with vanilla HTML and JS

* web3.js for web3 interaction and snarkjs for proving in the browser (zk-WASM)

* Relayer with ethers.js 6 and Express to preserve users' anonymity

## Table of contents

1. [How Huracán works](#1-cómo-funciona-huracán)

1. [The circuit](#2-el-circuito)

1. [The contracts](#3-los-contratos)

1. [The frontend](#4-el-frontend)

1. [The relayer](#5-el-relayer)

1. [How to take Huracán to production](#6-cómo-llevar-huracán-a-producción)

1. [Ideas to go deeper](#7-ideas-para-profundizar)

## 1. Cómo funciona Huracán

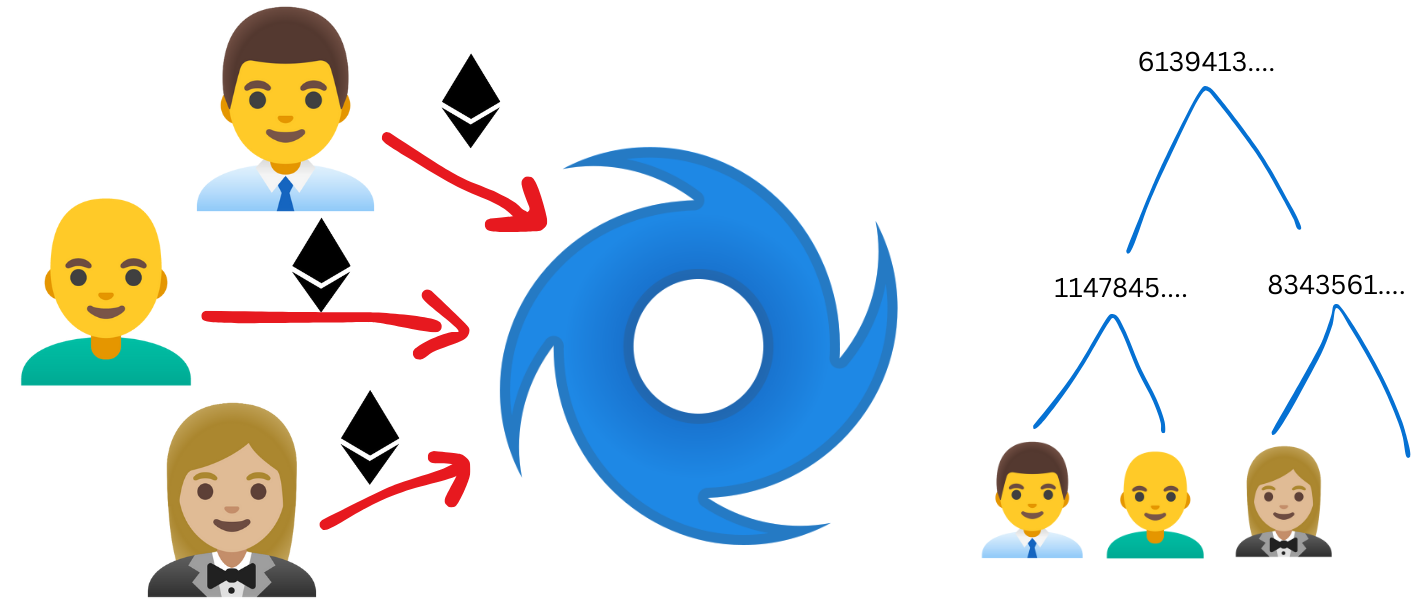

Huracán is a DeFi tool that protects its users' identity using a technique known as _anonymous inclusion proofs_ to build what we commonly call a _mixer_. The system can prove that a user has deposited ether into a contract without revealing which of the depositors it was.

<p><small><em>Each user who deposits ether into Huracán is placed as a leaf in a Merkle tree inside the contract</em></small></p>

To achieve this, we need a smart contract where funds are deposited; each deposit is added to a Merkle tree in which every leaf represents a depositor. We also need a circuit that generates the inclusion proofs that keep the user anonymous when withdrawing funds, and a relayer that executes the transaction on behalf of the anonymous user to protect their privacy.

<p><small><em>Users can later withdraw their funds by proving they are part of the Merkle tree without revealing which leaf belongs to them</em></small></p>
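
To make the idea of an inclusion proof concrete, here is a minimal Python sketch of a Merkle tree and a membership check. It is an illustration under stated assumptions, not Huracán's code: SHA-256 stands in for Poseidon, the helper names are hypothetical, and nothing here is zero-knowledge (the verifier sees the leaf). In Huracán the same recomputation happens inside the circuit, so the leaf and the path stay private.

```python
import hashlib


def hash_pair(left: int, right: int) -> int:
    """Stand-in for Poseidon(2): SHA-256 over two 32-byte big-endian integers."""
    data = left.to_bytes(32, "big") + right.to_bytes(32, "big")
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def build_levels(leaves):
    """Return every level of the tree, from the leaves up to the root.

    Assumes the number of leaves is a power of two.
    """
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        levels.append([hash_pair(current[i], current[i + 1])
                       for i in range(0, len(current), 2)])
    return levels


def merkle_proof(levels, index):
    """Collect sibling hashes (path elements) and left/right bits (path indices)."""
    path_elements, path_indices = [], []
    for level in levels[:-1]:
        path_elements.append(level[index ^ 1])  # sibling of the current node
        path_indices.append(index & 1)          # 1 if the current node is a right child
        index //= 2
    return path_elements, path_indices


def root_from_proof(leaf, path_elements, path_indices):
    """Recompute the root from a leaf and its path."""
    node = leaf
    for sibling, is_right in zip(path_elements, path_indices):
        node = hash_pair(sibling, node) if is_right else hash_pair(node, sibling)
    return node


leaves = [11, 22, 33, 44]          # toy commitments
levels = build_levels(leaves)
root = levels[-1][0]
elements, indices = merkle_proof(levels, 2)
print(root_from_proof(33, elements, indices) == root)  # True
```

The proof for one leaf contains only one sibling per level, so its size grows with the depth of the tree rather than with the number of depositors.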

Below you will find the code, a brief explanation, and the supporting material needed to build and launch your own privacy project.

## 2. El circuito

_Supporting material: [Smart Contracts privados con Solidity y Circom](https://dev.to/turupawn/smart-contracts-privados-con-solidity-y-circom-3a8h)_

The circuit checks that you are part of the Merkle tree, that is, that you are one of the depositors, without revealing which one: you keep the parameters private but generate an inclusion proof that a smart contract can verify. Which private parameters? During the deposit, we hash a private key and a nullifier to create a new leaf in the tree. The private key is a private parameter that later lets us prove that we own that leaf. The nullifier is another parameter whose hash is passed to the Solidity contract when redeeming the funds, which prevents a user from withdrawing the same deposit twice (double spend). The remaining private parameters are the helper data the circuit needs to rebuild the tree and check that we are part of it.
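
The commitment and nullifier bookkeeping described above can be sketched in a few lines of Python. This is a toy model under explicit assumptions: SHA-256 replaces Poseidon, `ToyPool` is a hypothetical stand-in for the contract, and the zero-knowledge proof check is omitted entirely. It only shows why publishing the nullifier hash blocks a second withdrawal.

```python
import hashlib


def toy_hash(*values: int) -> int:
    """Stand-in for Poseidon: SHA-256 over 32-byte big-endian integers."""
    h = hashlib.sha256()
    for value in values:
        h.update(value.to_bytes(32, "big"))
    return int.from_bytes(h.digest(), "big")


class ToyPool:
    """Hypothetical model of the contract's bookkeeping (no proof verification)."""

    def __init__(self):
        self.commitments = []
        self.spent_nullifier_hashes = set()

    def deposit(self, commitment: int) -> None:
        self.commitments.append(commitment)

    def withdraw(self, nullifier_hash: int) -> bool:
        # The real contract would also verify a proof that links this
        # nullifier hash to some leaf in the tree without revealing which.
        if nullifier_hash in self.spent_nullifier_hashes:
            return False  # double spend rejected
        self.spent_nullifier_hashes.add(nullifier_hash)
        return True


private_key, nullifier = 123456789, 987654321
commitment = toy_hash(private_key, nullifier)   # the leaf stored at deposit time
nullifier_hash = toy_hash(nullifier)            # revealed only at withdrawal time

pool = ToyPool()
pool.deposit(commitment)
print(pool.withdraw(nullifier_hash))  # True
print(pool.withdraw(nullifier_hash))  # False
```

Because the commitment mixes in the private key, an observer who sees the nullifier hash at withdrawal time cannot recompute the commitment and match it to a deposit.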

We start by installing the `circomlib` library, which contains the Poseidon circuits we will use in this tutorial.

```bash

git clone https://github.com/iden3/circomlib.git

```

Now we create our `proveWithdrawal` circuit, which proves that we have deposited into the contract without revealing who we are.

`proveWithdrawal.circom`

```js
pragma circom 2.0.0;

include "circomlib/circuits/poseidon.circom";

template switchPosition() {
    signal input in[2];
    signal input s;
    signal output out[2];

    s * (1 - s) === 0;
    out[0] <== (in[1] - in[0])*s + in[0];
    out[1] <== (in[0] - in[1])*s + in[1];
}

template commitmentHasher() {
    signal input privateKey;
    signal input nullifier;
    signal output commitment;
    signal output nullifierHash;

    component commitmentHashComponent;
    commitmentHashComponent = Poseidon(2);
    commitmentHashComponent.inputs[0] <== privateKey;
    commitmentHashComponent.inputs[1] <== nullifier;
    commitment <== commitmentHashComponent.out;

    component nullifierHashComponent;
    nullifierHashComponent = Poseidon(1);
    nullifierHashComponent.inputs[0] <== nullifier;
    nullifierHash <== nullifierHashComponent.out;
}

template proveWithdrawal(levels) {
    signal input root;
    signal input recipient;
    signal input privateKey;
    signal input nullifier;
    signal input pathElements[levels];
    signal input pathIndices[levels];
    signal output nullifierHash;

    signal leaf;
    component commitmentHasherComponent;
    commitmentHasherComponent = commitmentHasher();
    commitmentHasherComponent.privateKey <== privateKey;
    commitmentHasherComponent.nullifier <== nullifier;
    leaf <== commitmentHasherComponent.commitment;
    nullifierHash <== commitmentHasherComponent.nullifierHash;

    component selectors[levels];
    component hashers[levels];
    signal computedPath[levels];

    for (var i = 0; i < levels; i++) {
        selectors[i] = switchPosition();
        selectors[i].in[0] <== i == 0 ? leaf : computedPath[i - 1];
        selectors[i].in[1] <== pathElements[i];
        selectors[i].s <== pathIndices[i];

        hashers[i] = Poseidon(2);
        hashers[i].inputs[0] <== selectors[i].out[0];
        hashers[i].inputs[1] <== selectors[i].out[1];
        computedPath[i] <== hashers[i].out;
    }

    root === computedPath[levels - 1];
}

component main {public [root, recipient]} = proveWithdrawal(2);
```
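
The `switchPosition` template is the least obvious part of the circuit: it swaps its two inputs using only arithmetic, because circuits have no `if` over signals. The Python sketch below mirrors that arithmetic so you can check it by hand. It is an illustration only; the function name is mine, and I am assuming the BN254 scalar field modulus that Circom uses by default.

```python
# Assumed: scalar field modulus of BN254 (bn128), Circom's default curve.
P = 21888242871839275222246405745257275088548364400416034343698204186575808495617


def switch_position(in0: int, in1: int, s: int):
    """Mirror of the switchPosition template over the field modulo P."""
    # s * (1 - s) === 0 forces s to be 0 or 1.
    if (s * (1 - s)) % P != 0:
        raise ValueError("s must be 0 or 1")
    out0 = ((in1 - in0) * s + in0) % P   # s = 0 -> in0, s = 1 -> in1
    out1 = ((in0 - in1) * s + in1) % P   # s = 0 -> in1, s = 1 -> in0
    return out0, out1


print(switch_position(5, 9, 0))  # (5, 9): the current node stays on the left
print(switch_position(5, 9, 1))  # (9, 5): the current node moves to the right
```

Each entry of `pathIndices` therefore says whether the node computed so far is the left (0) or right (1) input of the next hash on the way up to the root.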

To compile the circuit we need both circom and snarkjs installed. If you don't have them, use the Circom installation guide.

{% collapsible Circom installation guide %}

Run these commands to install circom and snarkjs.

```bash

curl --proto '=https' --tlsv1.2 https://sh.rustup.rs -sSf | sh

git clone https://github.com/iden3/circom.git

cd circom

cargo build --release

cargo install --path circom

npm install -g snarkjs

```

{% endcollapsible %}

Run these commands to generate the trusted setup and the artifact files we will use later in the frontend.

```bash

circom proveWithdrawal.circom --r1cs --wasm --sym

snarkjs powersoftau new bn128 12 pot12_0000.ptau -v

snarkjs powersoftau contribute pot12_0000.ptau pot12_0001.ptau --name="First contribution" -v

snarkjs powersoftau prepare phase2 pot12_0001.ptau pot12_final.ptau -v

snarkjs groth16 setup proveWithdrawal.r1cs pot12_final.ptau proveWithdrawal_0000.zkey

snarkjs zkey contribute proveWithdrawal_0000.zkey proveWithdrawal_0001.zkey --name="1st Contributor Name" -v

snarkjs zkey export verificationkey proveWithdrawal_0001.zkey verification_key.json

```

Now we can generate the verifier contract in `verifier.sol`.

```bash

snarkjs zkey export solidityverifier proveWithdrawal_0001.zkey verifier.sol

```

## 3. Los contratos

_Supporting material: [Solidity en 15 minutos](https://dev.to/turupawn/como-lanzar-un-dex-paso-a-pasox-2cnm)_

The contracts are the transparent guarantee that everything has worked correctly. They let us keep track of how much has been deposited and also verify that the proofs are valid before releasing the funds. It is important to note that everything that happens in the smart contracts is public; they are the part of our system that is not anonymous.

We will use three contracts. The first is the verifier contract we just generated in the `verifier.sol` file; deploy it now.

The second contract is Poseidon. If you are on Scroll Sepolia, simply use the one I already deployed at `0x52f28FEC91a076aCc395A8c730dCa6440B6D9519`. If you want to use another blockchain, expand the section below and follow the steps:

{% collapsible Deploy the Poseidon contract %}

The version of Poseidon used in our circuit and in our contract must be exactly compatible. We therefore use the version in `circomlibjs` as shown; just make sure to put your private key and RPC URL in place of `TULLAVEPRIVADA` and `TUURLRPC`.

```bash

git clone https://github.com/iden3/circomlibjs.git

node --input-type=module --eval "import { writeFileSync } from 'fs'; import('./circomlibjs/src/poseidon_gencontract.js').then(({ createCode }) => { const output = createCode(2); writeFileSync('poseidonBytecode', output); })"

cast send --rpc-url TUURLRPC --private-key TULLAVEPRIVADA --create $(cat poseidonBytecode)

```

{% endcollapsible %}

Now deploy the `Huracan` contract, passing the Poseidon contract address and the verifier contract address, in that order, as constructor parameters.

```js
// SPDX-License-Identifier: MIT
pragma solidity >=0.7.0 <0.9.0;

interface IPoseidon {
    function poseidon(uint[2] memory inputs) external returns(uint[1] memory output);
}

interface ICircomVerifier {
    function verifyProof(uint[2] calldata _pA, uint[2][2] calldata _pB, uint[2] calldata _pC, uint[3] calldata _pubSignals) external view returns (bool);
}

contract Huracan {
    ICircomVerifier circomVerifier;
    uint nextIndex;
    uint public constant LEVELS = 2;
    uint public constant MAX_SIZE = 4;
    uint public NOTE_VALUE = 0.001 ether;
    uint[] public filledSubtrees = new uint[](LEVELS);
    uint[] public emptySubtrees = new uint[](LEVELS);
    address POSEIDON_ADDRESS;
    uint public root;

    mapping(uint => uint) public commitments;
    mapping(uint => bool) public nullifiers;

    event Deposit(uint index, uint commitment);

    constructor(address poseidonAddress, address circomVeriferAddress) {
        POSEIDON_ADDRESS = poseidonAddress;
        circomVerifier = ICircomVerifier(circomVeriferAddress);

        for (uint32 i = 1; i < LEVELS; i++) {
            emptySubtrees[i] = IPoseidon(POSEIDON_ADDRESS).poseidon([
                emptySubtrees[i-1],
                0
            ])[0];
        }
    }

    function deposit(uint commitment) public payable {
        require(msg.value == NOTE_VALUE, "Invalid value sent");
        require(nextIndex != MAX_SIZE, "Merkle tree is full. No more leaves can be added");

        uint currentIndex = nextIndex;
        uint currentLevelHash = commitment;
        uint left;
        uint right;

        for (uint32 i = 0; i < LEVELS; i++) {
            if (currentIndex % 2 == 0) {
                left = currentLevelHash;
                right = emptySubtrees[i];
                filledSubtrees[i] = currentLevelHash;
            } else {
                left = filledSubtrees[i];
                right = currentLevelHash;
            }
            currentLevelHash = IPoseidon(POSEIDON_ADDRESS).poseidon([left, right])[0];
            currentIndex /= 2;
        }

        root = currentLevelHash;
        emit Deposit(nextIndex, commitment);
        commitments[nextIndex] = commitment;
        nextIndex = nextIndex + 1;
    }

    function withdraw(uint[2] calldata _pA, uint[2][2] calldata _pB, uint[2] calldata _pC, uint[3] calldata _pubSignals) public {
        // The proof result must be checked, otherwise any input would release funds
        require(circomVerifier.verifyProof(_pA, _pB, _pC, _pubSignals), "Invalid proof");

        uint nullifierHash = _pubSignals[0];
        uint rootPublicInput = _pubSignals[1];
        address recipient = address(uint160(_pubSignals[2]));

        require(root == rootPublicInput, "Invalid merkle root");
        require(!nullifiers[nullifierHash], "Nullifier already used");

        nullifiers[nullifierHash] = true;

        (bool sent, bytes memory data) = recipient.call{value: NOTE_VALUE}("");
        require(sent, "Failed to send Ether");
        data;
    }
}
```
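
The `deposit` function updates the root without storing the whole tree: it keeps one "filled" hash per level and combines it with precomputed empty-subtree hashes. The Python sketch below mirrors that logic and compares it with a naive full rebuild. Treat it as a model under assumptions: SHA-256 stands in for Poseidon, the class and function names are mine, and I only check the two-level tree that the contract uses.

```python
import hashlib

LEVELS = 2  # same depth as the contract: at most 2 ** 2 = 4 leaves


def hash_pair(left: int, right: int) -> int:
    """Stand-in for Poseidon(2): SHA-256 over two 32-byte big-endian integers."""
    data = left.to_bytes(32, "big") + right.to_bytes(32, "big")
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


class IncrementalTree:
    """Mirror of the contract's deposit() bookkeeping (illustrative only)."""

    def __init__(self, levels: int = LEVELS):
        self.levels = levels
        self.next_index = 0
        self.filled_subtrees = [0] * levels
        self.empty_subtrees = [0] * levels
        for i in range(1, levels):
            # Same rule as the constructor: hash the previous empty value with 0.
            self.empty_subtrees[i] = hash_pair(self.empty_subtrees[i - 1], 0)
        self.root = 0

    def insert(self, commitment: int) -> int:
        if self.next_index == 2 ** self.levels:
            raise ValueError("Merkle tree is full")
        index, node = self.next_index, commitment
        for i in range(self.levels):
            if index % 2 == 0:
                # Left child: pair with the empty subtree and remember this hash.
                left, right = node, self.empty_subtrees[i]
                self.filled_subtrees[i] = node
            else:
                # Right child: pair with the left sibling stored earlier.
                left, right = self.filled_subtrees[i], node
            node = hash_pair(left, right)
            index //= 2
        self.root = node
        self.next_index += 1
        return self.root


def full_root(leaves, levels: int = LEVELS) -> int:
    """Naive reference: pad with zeros and hash the whole tree."""
    nodes = list(leaves) + [0] * (2 ** levels - len(leaves))
    while len(nodes) > 1:
        nodes = [hash_pair(nodes[i], nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return nodes[0]


tree = IncrementalTree()
deposits = [101, 202, 303, 404]
for count, commitment in enumerate(deposits, start=1):
    print(tree.insert(commitment) == full_root(deposits[:count]))  # True each time
```

Each insert touches one hash per level, which is what keeps the gas cost of a deposit proportional to the tree depth instead of the number of leaves.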

## 4. El frontend

_Material de apoyo: [Interfaces con privacidad en Solidity y zk-WASM](https://dev.to/turupawn/interfaces-con-privacidad-en-solidity-y-zk-wasm-1amg)_

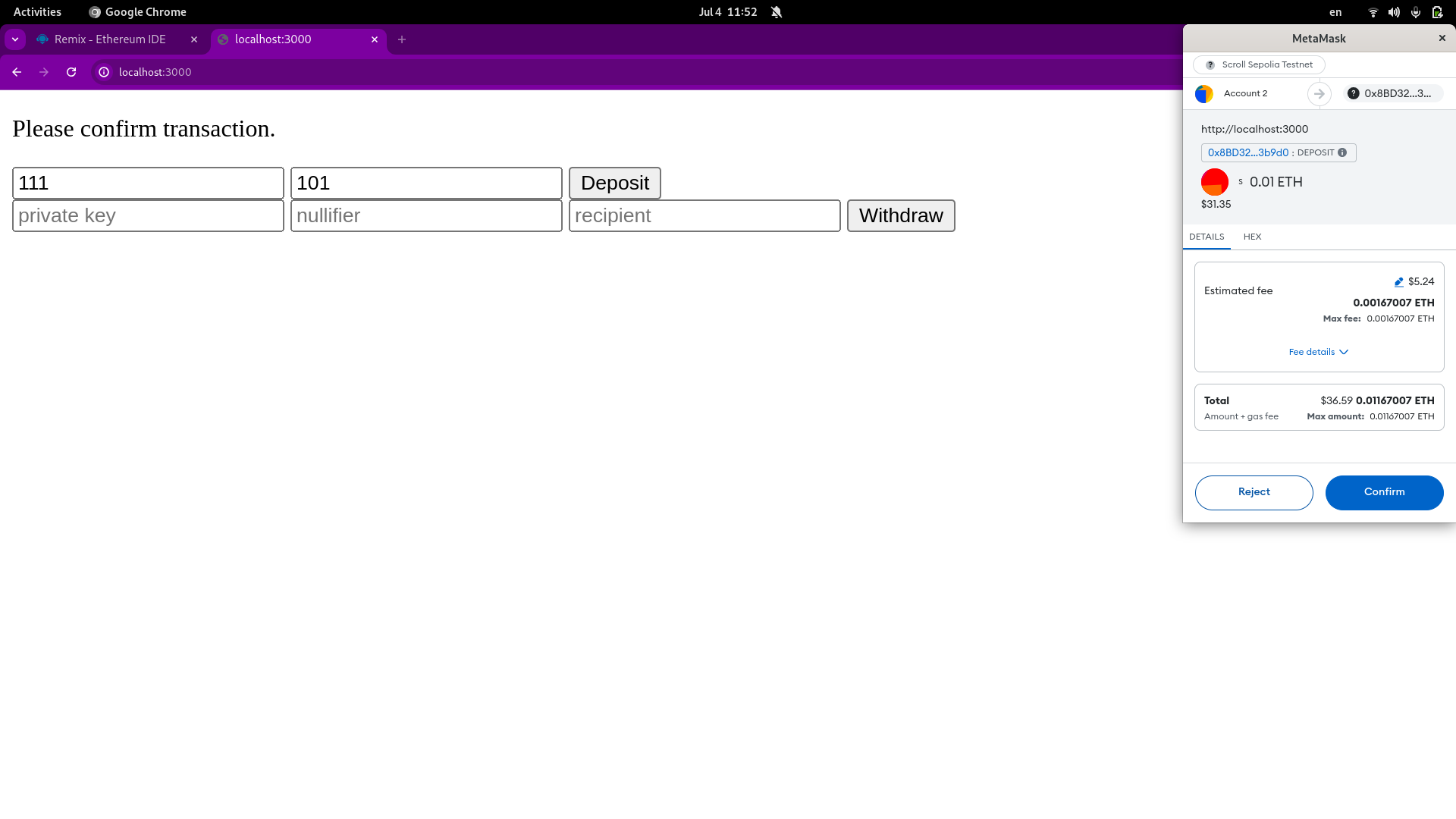

El frontend es la interfaz gráfica con la que estaremos interactuando. En esta demostración estaremos usando html y js vainilla para que los desarrolladores podamos adaptarla a cualquier frontend framework que estemos usando. Una característica muy importante del frontend es que debe ser capaz de producir las pruebas zk sin soltar información privada en el internet. Por eso es importante la tecnología zk-WASM que permite de una manera eficiente construir pruebas en nuestro navegador.

Ahora crea la siguiente estructura de archivos:

```
js/
  blockchain_stuff.js
  zk_stuff.js
  snarkjs.min.js
json_abi/
  Huracan.json
  Poseidon.json
zk_artifacts/
  myCircuit_final.zkey
  myCircuit.wasm
index.html
```

* `js/snarkjs.min.js`: download this file, which contains the snarkjs library

* `json_abi/Huracan.json`: the ABI of the `Huracan` contract we just deployed; in Remix, for example, you can get it by clicking the "ABI" button in the compilation tab.

* `json_abi/Poseidon.json`: use [this](https://gist.github.com/Turupawn/b89999eb8b00d7507908d6fbf6aa7f0b)

* `zk_artifacts`: place the previously generated artifacts in this folder. Note: rename myCircuit_0002.zkey to myCircuit_final.zkey

* `index.html`, `js/blockchain_stuff.js` and `js/zk_stuff.js` are detailed below

The HTML file contains the interface users need to interact with Huracán.

`index.html`

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
</head>
<body>
  <input id="connect_button" type="button" value="Connect" onclick="connectWallet()" style="display: none">
  <p id="account_address" style="display: none"></p>
  <p id="web3_message"></p>
  <p id="contract_state"></p>

  <input type="input" value="" id="depositPrivateKey" placeholder="private key">
  <input type="input" value="" id="depositNullifier" placeholder="nullifier">
  <input type="button" value="Deposit" onclick="_deposit()">
  <br>
  <input type="input" value="" id="withdrawPrivateKey" placeholder="private key">
  <input type="input" value="" id="withdrawNullifier" placeholder="nullifier">
  <input type="input" value="" id="withdrawRecipient" placeholder="recipient">
  <input type="button" value="Withdraw" onclick="_withdraw()">
  <br>

  <script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/web3/1.3.5/web3.min.js"></script>
  <script type="text/javascript" src="js/zk_stuff.js"></script>
  <script type="text/javascript" src="js/blockchain_stuff.js"></script>
  <script type="text/javascript" src="js/snarkjs.min.js"></script>
  <script>
    // Read the deposit form and call deposit() from blockchain_stuff.js
    function _deposit()
    {
      const depositPrivateKey = document.getElementById("depositPrivateKey").value
      const depositNullifier = document.getElementById("depositNullifier").value
      deposit(depositPrivateKey, depositNullifier)
    }

    // Read the withdraw form and call withdraw() from blockchain_stuff.js
    function _withdraw()
    {
      const withdrawPrivateKey = document.getElementById("withdrawPrivateKey").value
      const withdrawNullifier = document.getElementById("withdrawNullifier").value
      const withdrawRecipient = document.getElementById("withdrawRecipient").value
      withdraw(withdrawPrivateKey, withdrawNullifier, withdrawRecipient)
    }
  </script>
</body>
</html>
```

Next we add all the web3-related logic: connecting to the browser wallet, reading contract state, and calling contract functions.

`js/blockchain_stuff.js`

```js

const NETWORK_ID = 534351

const HURACAN_ADDRESS = "0x8BD32BDC921f5239c0f5d9eaf093B49A67C3b9d0"

const HURACAN_ABI_PATH = "./json_abi/Huracan.json"

const POSEIDON_ADDRESS = "0x52f28FEC91a076aCc395A8c730dCa6440B6D9519"

const POSEIDON_ABI_PATH = "./json_abi/Poseidon.json"

const RELAYER_URL = "http://localhost:8080"

var huracanContract

var poseidonContract

var accounts

var web3

let leaves

function metamaskReloadCallback() {

window.ethereum.on('accountsChanged', (accounts) => {

document.getElementById("web3_message").textContent="Account changed, refreshing...";

window.location.reload()

})

window.ethereum.on('chainChanged', (chainId) => {

document.getElementById("web3_message").textContent="Network changed, refreshing...";

window.location.reload()

})

}

const getWeb3 = async () => {

return new Promise((resolve, reject) => {

if(document.readyState=="complete")

{

if (window.ethereum) {

const web3 = new Web3(window.ethereum)


resolve(web3)

} else {

reject("must install MetaMask")

document.getElementById("web3_message").textContent="Error: Please install Metamask";

}

}else

{

window.addEventListener("load", async () => {

if (window.ethereum) {

const web3 = new Web3(window.ethereum)

resolve(web3)

} else {

reject("must install MetaMask")

document.getElementById("web3_message").textContent="Error: Please install Metamask";

}

});

}

});

};

const getContract = async (web3, address, abi_path) => {

const response = await fetch(abi_path);

const data = await response.json();

const netId = await web3.eth.net.getId();

const contract = new web3.eth.Contract(

data,

address

);

return contract

}

async function loadDapp() {

metamaskReloadCallback()

document.getElementById("web3_message").textContent="Please connect to Metamask"

var awaitWeb3 = async function () {

web3 = await getWeb3()

web3.eth.net.getId((err, netId) => {

if (netId == NETWORK_ID) {

var awaitContract = async function () {

huracanContract = await getContract(web3, HURACAN_ADDRESS, HURACAN_ABI_PATH)

poseidonContract = await getContract(web3, POSEIDON_ADDRESS, POSEIDON_ABI_PATH)

document.getElementById("web3_message").textContent="You are connected to Metamask"

onContractInitCallback()

web3.eth.getAccounts(function(err, _accounts){

accounts = _accounts

if (err != null)

{

console.error("An error occurred: "+err)

} else if (accounts.length > 0)

{

onWalletConnectedCallback()

document.getElementById("account_address").style.display = "block"

} else

{

document.getElementById("connect_button").style.display = "block"

}

});

};

awaitContract();

} else {

document.getElementById("web3_message").textContent="Please connect to Scroll Sepolia";

}

});

};

awaitWeb3();

}

async function connectWallet() {

await window.ethereum.request({ method: "eth_requestAccounts" })

accounts = await web3.eth.getAccounts()

onWalletConnectedCallback()

}

loadDapp()

const onContractInitCallback = async () => {

document.getElementById("web3_message").textContent="Reading merkle tree data...";

leaves = []

let i =0

let maxSize = await huracanContract.methods.MAX_SIZE().call()

for(let i=0; i<maxSize; i++)

{

leaves.push(await huracanContract.methods.commitments(i).call())

}

document.getElementById("web3_message").textContent="All ready!";

}

const onWalletConnectedCallback = async () => {

}

//// Functions ////

const deposit = async (depositPrivateKey, depositNullifier) => {

let commitment = await poseidonContract.methods.poseidon([depositPrivateKey,depositNullifier]).call()

let value = await huracanContract.methods.NOTE_VALUE().call()

document.getElementById("web3_message").textContent="Please confirm transaction.";

const result = await huracanContract.methods.deposit(commitment)

.send({ from: accounts[0], gas: 0, value: value })

.on('transactionHash', function(hash){

document.getElementById("web3_message").textContent="Executing...";

})

.on('receipt', function(receipt){

document.getElementById("web3_message").textContent="Success."; })

.catch((revertReason) => {

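// The receipt is missing when the user rejects the transaction, so fall back to the error message
console.log("ERROR! Transaction reverted: " + (revertReason.receipt ? revertReason.receipt.transactionHash : revertReason.message))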

});

}

const withdraw = async (privateKey, nullifier, recipient) => {

document.getElementById("web3_message").textContent="Generating proof...";

let commitment = await poseidonContract.methods.poseidon([privateKey,nullifier]).call()

let index = null

for(let i=0; i<leaves.length;i++)

{

if(commitment == leaves[i])

{

index = i

}

}

if(index == null)

{

console.log("Commitment not found in merkle tree")

return

}

let root = await huracanContract.methods.root().call()

let proof = await getWithdrawalProof(index, privateKey, nullifier, recipient, root)

await sendProofToRelayer(proof.pA, proof.pB, proof.pC, proof.publicSignals)

}

const sendProofToRelayer = async (pA, pB, pC, publicSignals) => {

fetch(RELAYER_URL + "/relay?pA=" + pA + "&pB=" + pB + "&pC=" + pC + "&publicSignals=" + publicSignals)

.then(res => res.json())

.then(out =>

console.log(out))

.catch(err => console.error(err));

}

```
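`sendProofToRelayer` builds its query string by concatenating arrays, which relies on JavaScript's implicit array-to-string conversion. Below is a minimal sketch of the same request built with `URLSearchParams`, so the encoding is explicit. The helper name `buildRelayUrl` and the sample values are assumptions for illustration only. Nested arrays such as `pB` are flattened into one comma-separated list either way, so the relayer has to regroup them.

```js
// Hypothetical helper (not in the original code): builds the /relay URL
// with explicit URL encoding instead of string concatenation.
function buildRelayUrl(relayerUrl, pA, pB, pC, publicSignals) {
  const params = new URLSearchParams({
    pA: pA.join(","),
    pB: pB.flat().join(","), // [[a, b], [c, d]] -> "a,b,c,d"
    pC: pC.join(","),
    publicSignals: publicSignals.join(","),
  });
  return `${relayerUrl}/relay?${params.toString()}`;
}

// Made-up values; real proofs contain large decimal strings.
const url = buildRelayUrl(
  "http://localhost:8080",
  ["1", "2"],
  [["3", "4"], ["5", "6"]],
  ["7", "8"],
  ["9", "10", "11"]
);
console.log(url);
```

On the receiving side, a standard query-string parser decodes each parameter back to the same comma-separated text that the original concatenation produces.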

Finally, the file that holds the ZK-related logic. It is responsible for generating the ZK proofs.

`js/zk_stuff.js`

```js

async function getMerklePath(leaves) {

if (leaves.length === 0) {

throw new Error('Leaves array is empty');

}

let layers = [leaves];

// Build the Merkle tree

while (layers[layers.length - 1].length > 1) {

const currentLayer = layers[layers.length - 1];

const nextLayer = [];

for (let i = 0; i < currentLayer.length; i += 2) {

const left = currentLayer[i];

const right = currentLayer[i + 1] ? currentLayer[i + 1] : left; // Handle odd number of nodes

nextLayer.push(await poseidonContract.methods.poseidon([left,right]).call())

}

layers.push(nextLayer);

}

const root = layers[layers.length - 1][0];

function getPath(leafIndex) {

let pathElements = [];

let pathIndices = [];

let currentIndex = leafIndex;

for (let i = 0; i < layers.length - 1; i++) {

const currentLayer = layers[i];

const isLeftNode = currentIndex % 2 === 0;

const siblingIndex = isLeftNode ? currentIndex + 1 : currentIndex - 1;

pathIndices.push(isLeftNode ? 0 : 1);

pathElements.push(siblingIndex < currentLayer.length ? currentLayer[siblingIndex] : currentLayer[currentIndex]);

currentIndex = Math.floor(currentIndex / 2);

}

return {

PathElements: pathElements,

PathIndices: pathIndices

};

}

// You can get the path for any leaf index by calling getPath(leafIndex)

return {

getMerklePathForLeaf: getPath,

root: root

};

}

function addressToUint(address) {

const hexString = address.replace(/^0x/, '');

const uint = BigInt('0x' + hexString);

return uint;

}

async function getWithdrawalProof(index, privateKey, nullifier, recipient, root) {

let merklePath = await getMerklePath(leaves)

let pathElements = merklePath.getMerklePathForLeaf(index).PathElements;

let pathIndices = merklePath.getMerklePathForLeaf(index).PathIndices;

let proverParams = {

"privateKey": privateKey,

"nullifier": nullifier,

"recipient": addressToUint(recipient),

"root": root,

"pathElements": pathElements,

"pathIndices": pathIndices

}

const { proof, publicSignals } = await snarkjs.groth16.fullProve(

proverParams,

"../zk_artifacts/proveWithdrawal.wasm", "../zk_artifacts/proveWithdrawal_final.zkey"

);

let pA = proof.pi_a

pA.pop()

let pB = proof.pi_b

pB.pop()

let pC = proof.pi_c

pC.pop()

document.getElementById("web3_message").textContent="Proof generated please confirm transaction.";

return {

pA: pA,

pB: pB,

pC: pC,

publicSignals: publicSignals

}

}

```
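To check that the sibling path returned by `getMerklePath` is consistent, you can hash it back up to the root. The sketch below repeats the same layer-building and path logic, but synchronously and with a toy hash. `toyHash`, `buildLayers` and `rootFromPath` are hypothetical names introduced here, and the toy hash is only a stand-in: the article's code uses Poseidon through a contract call.

```js
// Stand-in hash for illustration only. It is NOT Poseidon and is not secure.
const toyHash = (left, right) =>
  (BigInt(left) * 31n + BigInt(right) * 17n + 7n) % 1000003n;

// Build every layer of the tree, duplicating the last node of odd-sized layers.
function buildLayers(leaves, hash) {
  const layers = [leaves.map(BigInt)];
  while (layers[layers.length - 1].length > 1) {
    const current = layers[layers.length - 1];
    const next = [];
    for (let i = 0; i < current.length; i += 2) {
      const left = current[i];
      const right = i + 1 < current.length ? current[i + 1] : left;
      next.push(hash(left, right));
    }
    layers.push(next);
  }
  return layers;
}

// Collect the sibling at each level (pathElements) and whether the current
// node is a left (0) or right (1) child (pathIndices).
function getPath(layers, leafIndex) {
  const pathElements = [];
  const pathIndices = [];
  let index = leafIndex;
  for (let level = 0; level < layers.length - 1; level++) {
    const layer = layers[level];
    const isLeft = index % 2 === 0;
    const sibling = isLeft ? index + 1 : index - 1;
    pathIndices.push(isLeft ? 0 : 1);
    pathElements.push(sibling < layer.length ? layer[sibling] : layer[index]);
    index = Math.floor(index / 2);
  }
  return { pathElements, pathIndices };
}

// Recompute the root from a leaf and its path, as a verifier would.
function rootFromPath(leaf, { pathElements, pathIndices }, hash) {
  let node = BigInt(leaf);
  for (let i = 0; i < pathElements.length; i++) {
    node = pathIndices[i] === 0
      ? hash(node, pathElements[i])
      : hash(pathElements[i], node);
  }
  return node;
}

const sampleLeaves = [11n, 22n, 33n];
const layers = buildLayers(sampleLeaves, toyHash);
const root = layers[layers.length - 1][0];
const path = getPath(layers, 2);
console.log(rootFromPath(sampleLeaves[2], path, toyHash) === root); // true
```

Recomputing the root from the leaf and its path is the same membership check that a Merkle inclusion circuit performs over `pathElements` and `pathIndices`.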

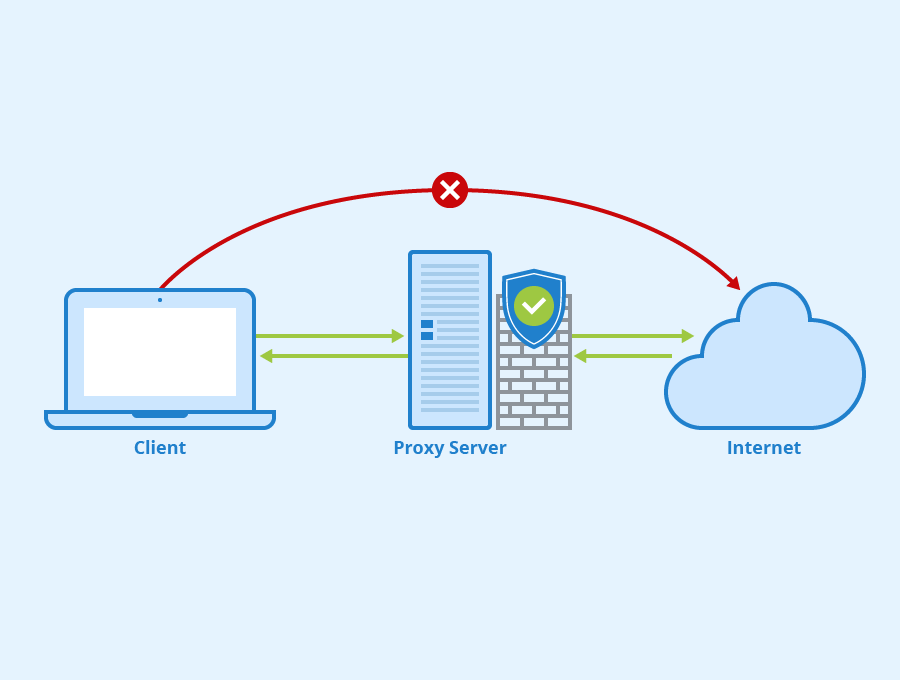

### 5. The relayer

What good is a zk anonymity proof if we end up submitting it ourselves? Doing so would give up privacy, because everything on Ethereum is public. That is why we need a relayer: an intermediary that executes the on-chain transaction on behalf of the anonymous user.

We start by creating the backend file.

`relayer.mjs`

```js

import fs from "fs"

import cors from "cors"

import express from "express"

import { ethers } from 'ethers';

const app = express()

app.use(cors())

const JSON_CONTRACT_PATH = "./json_abi/Huracan.json"

const CHAIN_ID = "534351"

const PORT = 8080

var contract

var provider

var signer

const { RPC_URL, HURACAN_ADDRESS, RELAYER_PRIVATE_KEY, RELAYER_ADDRESS } = process.env;

const loadContract = async (data) => {

data = JSON.parse(data);

contract = new ethers.Contract(HURACAN_ADDRESS, data, signer);

}

async function initAPI() {

provider = new ethers.JsonRpcProvider(RPC_URL);

signer = new ethers.Wallet(RELAYER_PRIVATE_KEY, provider);

fs.readFile(JSON_CONTRACT_PATH, 'utf8', function (err,data) {

if (err) {

return console.log(err);

}

loadContract(data)

});

app.listen(PORT, () => {

console.log(`Listening to port ${PORT}`)

})

}

async function relayMessage(pA, pB, pC, publicSignals)

{

console.log(pA)

console.log(pB)

console.log(pC)

console.log(publicSignals)

const transaction = {

from: RELAYER_ADDRESS,

to: HURACAN_ADDRESS,

value: '0',

gasPrice: "700000000", // 0.7 gwei

nonce: await provider.getTransactionCount(RELAYER_ADDRESS),

chainId: CHAIN_ID,

data: contract.interface.encodeFunctionData(

"withdraw",[pA, pB, pC, publicSignals]

)

};