hexsha

stringlengths 40

40

| size

int64 5

1.04M

| ext

stringclasses 6

values | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 3

344

| max_stars_repo_name

stringlengths 5

125

| max_stars_repo_head_hexsha

stringlengths 40

78

| max_stars_repo_licenses

sequencelengths 1

11

| max_stars_count

int64 1

368k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 3

344

| max_issues_repo_name

stringlengths 5

125

| max_issues_repo_head_hexsha

stringlengths 40

78

| max_issues_repo_licenses

sequencelengths 1

11

| max_issues_count

int64 1

116k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 3

344

| max_forks_repo_name

stringlengths 5

125

| max_forks_repo_head_hexsha

stringlengths 40

78

| max_forks_repo_licenses

sequencelengths 1

11

| max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | content

stringlengths 5

1.04M

| avg_line_length

float64 1.14

851k

| max_line_length

int64 1

1.03M

| alphanum_fraction

float64 0

1

| lid

stringclasses 191

values | lid_prob

float64 0.01

1

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

ce18a63e359a5770113f245fe115b88cfc882651 | 104 | md | Markdown | krm-functions/sig-cli/README.md | natasha41575/krm-functions-registry | 8d72cd032ee0642f17875439256bbfa1e8f3901a | [

"Apache-2.0"

] | 1 | 2022-01-18T22:34:25.000Z | 2022-01-18T22:34:25.000Z | krm-functions/sig-cli/README.md | natasha41575/krm-functions-registry | 8d72cd032ee0642f17875439256bbfa1e8f3901a | [

"Apache-2.0"

] | 10 | 2021-12-07T22:56:49.000Z | 2022-03-17T20:57:14.000Z | krm-functions/sig-cli/README.md | natasha41575/krm-functions-registry | 8d72cd032ee0642f17875439256bbfa1e8f3901a | [

"Apache-2.0"

] | 2 | 2022-01-26T05:48:18.000Z | 2022-02-08T00:20:31.000Z | # KRM functions - SIG CLI

This directory contains in-tree SIG-CLI sponsored and authored KRM functions. | 34.666667 | 77 | 0.798077 | eng_Latn | 0.995109 |

ce1a9f95b0efcb99afd58f015bf26468d1928ee5 | 2,393 | md | Markdown | src/pages/blog/becoming-an-allstar.md | WaylonWalker/cuttin-scrap | b3a0c9365e44dcac2d0cde7017e0cda245428cbe | [

"MIT"

] | null | null | null | src/pages/blog/becoming-an-allstar.md | WaylonWalker/cuttin-scrap | b3a0c9365e44dcac2d0cde7017e0cda245428cbe | [

"MIT"

] | null | null | null | src/pages/blog/becoming-an-allstar.md | WaylonWalker/cuttin-scrap | b3a0c9365e44dcac2d0cde7017e0cda245428cbe | [

"MIT"

] | null | null | null | ---

templateKey: 'blog-post'

path: /becoming-an-allstar

title: Becoming an All-star!

author: Rhiannon Walker

date: 2017-01-30

---

_January 30, 2017_

When your life gets put into risk and dying becomes closer than living, everything changes. The hugs mean just that much more. The "I love yous" stick. What goes in your body or surrounds your body now means the world! When your body fails you and medicine revives you, you are not yet a super hero.

I feel like Deadpool in the hyperbaric chamber. My body is going through all these chemical changes so that I can become an all-star! Make no mistake all-star qualities are arriving by the minute. Little things don't mean as much to me, like getting the laundry done. Instead I would rather spend the extra time reading one more book at bedtime to my kids. I now think eating dessert should come first, because you NEVER know.

What happened you ask that forced this change? Tuesday happened, and by Tuesday night I was taken by ambulance to Barnes hospital. It took 12 rounds of IV antibiotics to save me. I'm not going to go into the details, because they are not important to the general public, but what is important is that I survived. I am still here for my kids, family, and friends.

So how do you change your life for the positive? How do you hang on with everything to see the next day? It's a feeling. It's putting on a new pair of glasses with the correct prescription strength. It's putting faith in your medical team, and trusting your gut.

Mostly what got me through that night was saying over, and over again, "Wyatt and Ayla need their Mommy!"

Take care of your body. Take care of those you love. It's not worth the risk of what you'd leave behind.

10 positives as promised:

1. Medicine - truly did save my life

2. My Children

3. Waylon - Man I love him, that was a lot to put on him, and a scary drive alone down to St. Louis.

4. My age - I am confident that with my age, I will prevail and kick Cancer's behind.

5. Netflix and Puzzles - there is only so much you can do in a hospital bed.

6. My Guardian Angel - because of her I believe I am here. Miss you Mom.

7. Leggings

8. Family and friends' love and support.

9. Excedrin - these migraines have been horrendous lately.

10. Our shower - man it felt great to take a shower once I got home!

With Love,

Rhiannon | 37.984127 | 432 | 0.748433 | eng_Latn | 0.999725 |

ce1aafac82a6aa3ab9aab1d8a30eee23162da238 | 250 | md | Markdown | README.md | Markek1/Rubiks-cube-simulator | ac087a7c51d791c92b6103bb37164b887aa9f6ea | [

"MIT"

] | null | null | null | README.md | Markek1/Rubiks-cube-simulator | ac087a7c51d791c92b6103bb37164b887aa9f6ea | [

"MIT"

] | null | null | null | README.md | Markek1/Rubiks-cube-simulator | ac087a7c51d791c92b6103bb37164b887aa9f6ea | [

"MIT"

] | null | null | null | # Rubiks-cube-simulator

Features:

* Rotation

* Translation of standard notation into rotations (and the reverse)

* Graphical visualization of the cube

| 31.25 | 96 | 0.796 | eng_Latn | 0.661693 |

ce1ce0d4fde85e20b4359787b222a0d7df34f78e | 3,359 | md | Markdown | README.md | zhming0/k8s-eip | 1842c4d74daa6df22155aaa459389c78e840e6a4 | [

"MIT"

] | 1 | 2020-04-18T14:25:16.000Z | 2020-04-18T14:25:16.000Z | README.md | zhming0/k8s-eip | 1842c4d74daa6df22155aaa459389c78e840e6a4 | [

"MIT"

] | null | null | null | README.md | zhming0/k8s-eip | 1842c4d74daa6df22155aaa459389c78e840e6a4 | [

"MIT"

] | null | null | null | # K8S-EIP

[](https://circleci.com/gh/zhming0/k8s-eip)

Bind a group of AWS Elastic IPs to a group of Kubernetes nodes that match the given criteria.

## Huh? What is this?

### Q: What is k8s-eip trying to solve?

I don't want to create many unnecessary ELBs just for my toy cluster created by kops.

### Q: Can't you just use `nginx-ingress` so you just create one ELB for many services?

Nah, I don't want to pay that $18/month either. I don't want any ELB.

### Q: What a miser! But how?

`k8s-eip` for you.

It's similar to the [kube-ip](https://github.com/doitintl/kubeip) but for AWS and less mature.

It would bind a group of specified Elastic IPs to a number of K8S nodes on AWS.

It runs periodically, so **you can't use it for HA/critical use cases**.

### Q: Would it trigger the scary Elastic IP remap fee?

As a project with a goal of saving money, surely no :).

Actually, it will **try** not to.
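To make "try not to" a bit more concrete, here is a minimal Python sketch of one way to avoid needless remaps. It is not taken from the k8s-eip source: the function name `plan_bindings`, its arguments, and the node names are all hypothetical, and the real tool may decide differently. The idea is simply to keep every existing binding that is still valid and only pair up whatever is left over.

```python
def plan_bindings(ips, nodes, current):
    """Return {ip: node}, keeping valid existing bindings and filling gaps.

    ips     -- Elastic IPs we manage (hypothetical input)
    nodes   -- nodes currently matching the label selector (hypothetical input)
    current -- existing {ip: node} associations (hypothetical input)
    """
    wanted = set(nodes)
    # Keep every binding whose IP is managed and whose node still matches,
    # so those IPs are never remapped.
    plan = {ip: node for ip, node in current.items()
            if ip in ips and node in wanted}
    free_ips = [ip for ip in ips if ip not in plan]
    bound_nodes = set(plan.values())
    free_nodes = [node for node in nodes if node not in bound_nodes]
    # Pair leftover IPs with leftover nodes; extras on either side stay unbound.
    for ip, node in zip(free_ips, free_nodes):
        plan[ip] = node
    return plan


current = {"8.8.8.8": "node-a"}
plan = plan_bindings(["8.8.8.8", "1.1.1.1"], ["node-a", "node-b"], current)
print(plan)
```

In this sketch `8.8.8.8` stays on `node-a`, and only the unused `1.1.1.1` gets associated, which is the kind of behaviour that keeps remap counts low.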

## How to use it?

### Prerequisite

* You are an admin of k8s cluster on AWS and you have `kubectl` configured properly.

Quick test: `kubectl cluster-info`

* You have credentials for an IAM user with the following permissions:

```json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ec2:DescribeInstances",

"ec2:AssociateAddress"

],

"Resource": "*"

}

]

}

```

* You have labeled the targeted nodes

Via: `kubectl`

```

kubectl label node YOUR_NODE_NAME bastion=true

```

Via: `kops`

```yaml

---

...

kind: InstanceGroup

...

spec:

...

nodeLabels:

...

bastion: "true" # Or anything you want

...

```

### Using Helm v3

First, prepare a YAML file like this:

```yaml

awsAccessKeyId: "XXXXXXX" # AWS_ACCESS_KEY_ID of the IAM user account

awsSecretAccessKey: "XXXXXXXXXXXXXXXX" # secret access key of the IAM user account

awsRegion: us-east-1

# Elastic IPs that you own and want to attach to targeting nodes

ips: "8.8.8.8,1.1.1.1" # example

# The label on Nodes that you want to have elastic IPs attached

labelSelector: "bastion=true" # example

```

Then

```bash

# -f: the yaml file that you prepared
# -n: this could be any namespace; kube-system is a good idea
# k8s-eip: the release name, which can be anything
helm upgrade -i \
  -f values.yaml \
  -n kube-system \
  k8s-eip \
  https://github.com/zhming0/k8s-eip/releases/download/v0.0.1/k8s-eip-0.0.1.tgz

```

Note:

- *Helm is flexible.

There are many ways to supply values.

Please refer to [their doc](https://helm.sh/docs/intro/using_helm/#customizing-the-chart-before-installing)

for other options.*

- use `--dry-run` to preview the changes

### Using Helm v2

Nope :)

### Kubectl directly

Good luck :)

## Project status

As you can see, this is at an early stage and is not actively maintained, as I don't believe it creates much value.

Any help is still appreciated though.

Some potential work could be:

- Use K8S's `watch` API to replace/enhance periodic run

- Improve determinism, reduce unnecessary Elastic IPs mapping even when

there are many changes happening to k8s nodes.

| 26.65873 | 130 | 0.693063 | eng_Latn | 0.959586 |

ce1fd593a185c3d62e66e1af2256b006b92ab093 | 316 | md | Markdown | brainstorm.md | cmsteffen-code/pscan | f93fb593277b57dea7158553c04dd6462fa1427a | [

"MIT"

] | null | null | null | brainstorm.md | cmsteffen-code/pscan | f93fb593277b57dea7158553c04dd6462fa1427a | [

"MIT"

] | null | null | null | brainstorm.md | cmsteffen-code/pscan | f93fb593277b57dea7158553c04dd6462fa1427a | [

"MIT"

] | null | null | null | I want to design an asynchronous scan.

1. Create a sniffer to handle incoming TCP packets from the specific host.

2. Send out a spray of SYN packets to the target ports.

3. Capture and log any RST or SYN/ACK packets.

4. Send RST/ACK responses.

5. After the time-out is reached, end the scan.

6. Reveal the results.
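The bookkeeping behind steps 3 to 6 can be sketched in Python without touching the network. This is only an illustration under assumptions: the names `classify` and `summarize` are hypothetical, the replies are hand-written `(port, flags)` pairs rather than sniffed packets, and labelling ports that never answered as "filtered" is a convention borrowed from common scanners, not something stated above.

```python
# TCP flag bits as defined in the TCP header.
SYN, RST, ACK = 0x02, 0x04, 0x10


def classify(flags):
    """Map the flags of a reply to a port state, or None if it is irrelevant."""
    if flags & RST:
        return "closed"          # RST (with or without ACK): nothing listening
    if flags & SYN and flags & ACK:
        return "open"            # SYN/ACK: the port accepted our SYN
    return None


def summarize(target_ports, replies):
    """Build the final report once the time-out has been reached."""
    # Assumption: a port with no logged reply is reported as "filtered".
    results = {port: "filtered" for port in target_ports}
    for port, flags in replies:
        state = classify(flags)
        if port in results and state:
            results[port] = state
    return results


# Hand-written replies standing in for what the sniffer would capture.
replies = [(22, SYN | ACK), (23, RST | ACK), (9999, SYN | ACK)]
report = summarize([22, 23, 80], replies)
print(report)
```

The reply for port 9999 is ignored because that port was never targeted, which mirrors the sniffer only caring about the ports that were sprayed.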

| 35.111111 | 74 | 0.759494 | eng_Latn | 0.995527 |

ce20568b3670bc95333ab582b80b1c56f95958e1 | 51 | md | Markdown | README.md | AlexanderSilvaB/cpplanning | 643af74a8e7067a19cf98a37c38ee346e741ef31 | [

"MIT"

] | null | null | null | README.md | AlexanderSilvaB/cpplanning | 643af74a8e7067a19cf98a37c38ee346e741ef31 | [

"MIT"

] | null | null | null | README.md | AlexanderSilvaB/cpplanning | 643af74a8e7067a19cf98a37c38ee346e741ef31 | [

"MIT"

] | null | null | null | # cpplanning

A path planning algorithms playground

| 17 | 37 | 0.843137 | eng_Latn | 0.960584 |

ce207ca858dba9405bbd6a1184993839ec90ab78 | 598 | md | Markdown | README.md | lordkevinmo/Car-data-analysis | 69ce2aa522a855e7654948d362fc95a1e7363fc5 | [

"MIT"

] | null | null | null | README.md | lordkevinmo/Car-data-analysis | 69ce2aa522a855e7654948d362fc95a1e7363fc5 | [

"MIT"

] | null | null | null | README.md | lordkevinmo/Car-data-analysis | 69ce2aa522a855e7654948d362fc95a1e7363fc5 | [

"MIT"

] | null | null | null | # Car-data-analysis

A data analysis of the relationship between a car's price and its various characteristics, such as engine power. The dataset comes from the following link: https://archive.ics.uci.edu/ml/machine-learning-databases/autos/imports-85.data

The analysis was carried out in Python using the open source Anaconda distribution https://www.anaconda.com/, which includes the tools needed for data science. For this analysis I used the Spyder integrated development environment along with the pandas, matplotlib, numpy, scipy and scikit-learn libraries.

| 119.6 | 310 | 0.80602 | fra_Latn | 0.986171 |

ce2180179f802e0e9140e0a3571231e0d685cab0 | 2,215 | md | Markdown | docs/parallel/chapter5/03_How_to_create_a_task_with_Celery.md | studying-notes/py-notes | 050f5b1ac329835bf1c09496267592d7089dfcc0 | [

"MIT"

] | 1 | 2021-07-10T20:40:55.000Z | 2021-07-10T20:40:55.000Z | docs/parallel/chapter5/03_How_to_create_a_task_with_Celery.md | studying-notes/py-notes | 050f5b1ac329835bf1c09496267592d7089dfcc0 | [

"MIT"

] | null | null | null | docs/parallel/chapter5/03_How_to_create_a_task_with_Celery.md | studying-notes/py-notes | 050f5b1ac329835bf1c09496267592d7089dfcc0 | [

"MIT"

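] | null | null | null | # How to create a task with Celery

In this section, we show how to create a task with the Celery module. Celery provides the following methods for calling a task:

- `apply_async(args[, kwargs[, ...]])`: sends a task message

- `delay(*args, **kwargs)`: a shortcut for sending a task message, but it does not support setting execution options

The `delay` method is more convenient because it can be called like an ordinary function: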

```python

task.delay(arg1, arg2, kwarg1='x', kwarg2='y')

```

To use `apply_async`, you would write:

```python

task.apply_async(args=[arg1, arg2], kwargs={'kwarg1': 'x', 'kwarg2': 'y'})

```

##

We run a task with the following two simple scripts:

```python

# addTask.py: Executing a simple task

from celery import Celery

app = Celery('addTask', broker='amqp://guest@localhost//')

@app.task

def add(x, y):

return x + y

```

The second script is as follows:

```python

# addTask_main.py : RUN the AddTask example with

import addTask

if __name__ == '__main__':

result = addTask.add.delay(5,5)

```

To reiterate, the RabbitMQ service starts automatically after installation, so here we only need to start the Celery worker, with the following command:

```bash

celery -A addTask worker --loglevel=info --pool=solo

```

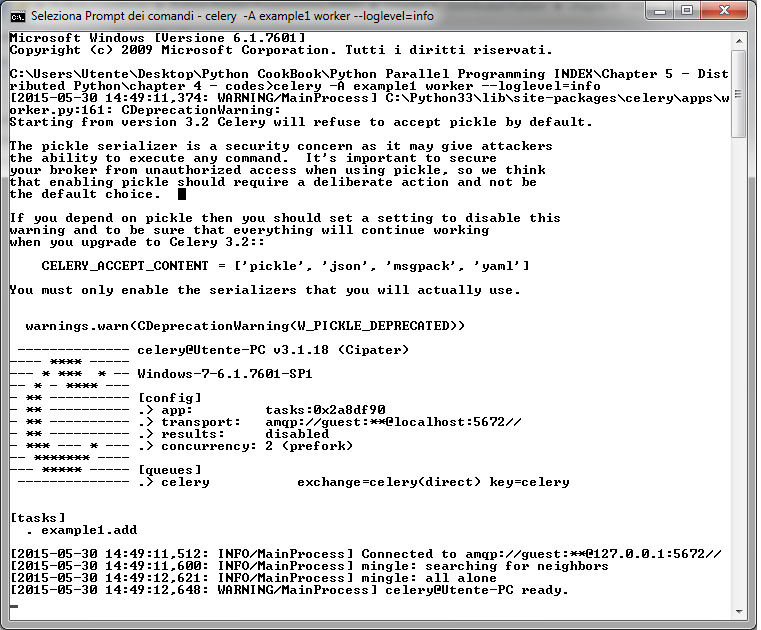

命令的输出如下:

其中,有个警告告诉我们关闭 pickle 序列化工具,可以避免一些安全隐患。pickle 作为默认的序列化工作是因为它用起来很方便(通过它可以将很复杂的 Python 对象当做函数变量传给任务)。不管你用不用 pickle ,如果想要关闭警告的话,可以设置 `CELERY_ACCEPT_CONTENT` 变量。详细信息可以参考:http://celery.readthedocs.org/en/latest/configuration.html 。

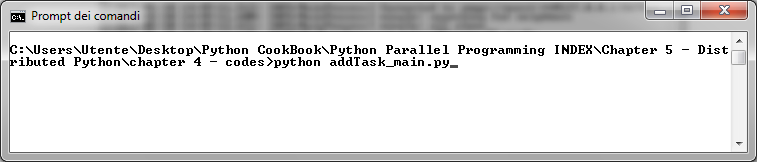

现在,让我们执行 `addTask_main.py` 脚本来添加一个任务:

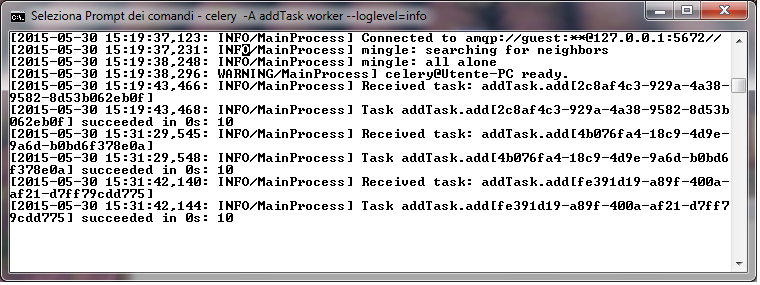

最后,第一个命令的输出会显示:

在最后一行,可以看到结果是10,和我们的期望一样。

##

Let's first look at the `addTask.py` script. In the first two lines of code, we create a Celery application instance that uses the RabbitMQ service as the message broker:

```python

from celery import Celery

app = Celery('addTask', broker='amqp://guest@localhost//')

```

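The first argument to `Celery` is the name of the current module (`addTask.py`); the second is the broker information, a URL used to connect to the broker (RabbitMQ). We then declare the task. Every task must be decorated with `@app.task`.

This decorator tells Celery which functions can be scheduled through the task queue. After the decorator, we define the task that the worker can execute. Our first task is simple: it just computes the sum of two numbers: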

```python

@app.task

def add(x, y):

return x + y

```

In the second script, `addTask_main.py`, we call the task with the `delay()` method:

```python

if __name__ == '__main__':

result = addTask.add.delay(5,5)

```

Remember that this method is just a shortcut for `apply_async()`, which gives us finer control over task execution.

If RabbitMQ runs with its default configuration, Celery can also connect using just the `amqp://` scheme.

| 23.56383 | 226 | 0.690293 | yue_Hant | 0.585692 |

ce23f6bf9344840a3c8f204e8eba70f2b9dc2bba | 1,305 | md | Markdown | curriculum/challenges/italian/14-responsive-web-design-22/learn-html-by-building-a-cat-photo-app/5dc174fcf86c76b9248c6eb2.md | palash-signoz/freeCodeCamp | db33f49b7b775df55e465243f244d648cd75aff5 | [

"BSD-3-Clause"

] | 1 | 2021-11-26T13:27:53.000Z | 2021-11-26T13:27:53.000Z | curriculum/challenges/italian/14-responsive-web-design-22/learn-html-by-building-a-cat-photo-app/5dc174fcf86c76b9248c6eb2.md | palash-signoz/freeCodeCamp | db33f49b7b775df55e465243f244d648cd75aff5 | [

"BSD-3-Clause"

] | 169 | 2020-10-13T16:49:51.000Z | 2020-12-08T22:53:48.000Z | curriculum/challenges/italian/14-responsive-web-design-22/learn-html-by-building-a-cat-photo-app/5dc174fcf86c76b9248c6eb2.md | palash-signoz/freeCodeCamp | db33f49b7b775df55e465243f244d648cd75aff5 | [

"BSD-3-Clause"

] | null | null | null | ---

id: 5dc174fcf86c76b9248c6eb2

title: Step 1

challengeType: 0

dashedName: step-1

---

# --description--

HTML elements have an opening tag like `<h1>` and a closing tag like `</h1>`.

Find the `h1` element and change the text between its opening and closing tags to `CatPhotoApp`.

# --hints--

The text `CatPhotoApp` should be present in the code. Check your spelling.

```js

assert(code.match(/catphotoapp/i));

```

The `h1` element should have an opening tag. Opening tags have this syntax: `<elementName>`.

```js

assert(document.querySelector('h1'));

```

The `h1` element should have a closing tag. Closing tags have a `/` character right after the `<` character.

```js

assert(code.match(/<\/h1\>/));

```

You have more than one `h1` element. Remove the extra `h1` element.

```js

assert(document.querySelectorAll('h1').length === 1);

```

The text of the `h1` element should be `CatPhotoApp`. You have either omitted the text, made a typo, or placed the text outside the `h1` element's opening and closing tags.

```js

assert(document.querySelector('h1').innerText.toLowerCase() === 'catphotoapp');

```

# --seed--

## --seed-contents--

```html

<html>

<body>

--fcc-editable-region--

<h1>Hello World</h1>

--fcc-editable-region--

</body>

</html>

```

| 21.75 | 163 | 0.687356 | ita_Latn | 0.980578 |

ce23f94fc7ede3c2f91bab6852fb8e56d9dff252 | 337 | md | Markdown | numpy/linear-algebra/README.md | kahilah/hpc-python | 5d2efa08076ed2706c81ca255c7e4574c937557c | [

"MIT"

] | 1 | 2021-12-16T08:55:28.000Z | 2021-12-16T08:55:28.000Z | numpy/linear-algebra/README.md | kahilah/hpc-python | 5d2efa08076ed2706c81ca255c7e4574c937557c | [

"MIT"

] | null | null | null | numpy/linear-algebra/README.md | kahilah/hpc-python | 5d2efa08076ed2706c81ca255c7e4574c937557c | [

"MIT"

] | 1 | 2021-12-05T02:40:42.000Z | 2021-12-05T02:40:42.000Z | ## Linear algebra

1. Construct two symmetric 2x2 matrices **A** and **B**.

*Hint: a symmetric matrix can be constructed easily from a square matrix

as **Asym** = **A** + **A**^T*

2. Calculate the matrix product **C** = **A** * **B** using `numpy.dot()`.

3. Calculate the eigenvalues of matrix **C** with `numpy.linalg.eigvals()`.

| 42.125 | 75 | 0.64095 | eng_Latn | 0.978426 |

ce25958f53fe160e39513af2ba6b96b83d0d3c82 | 356 | md | Markdown | www/src/data/StarterShowcase/startersData/gatsby-starter-timeline-theme.md | rrs94/gatsby | 2a7a8710ff579dcf4f3489d4e7b995dfb8de298c | [

"MIT"

] | 1 | 2020-07-07T15:10:20.000Z | 2020-07-07T15:10:20.000Z | www/src/data/StarterShowcase/startersData/gatsby-starter-timeline-theme.md | rrs94/gatsby | 2a7a8710ff579dcf4f3489d4e7b995dfb8de298c | [

"MIT"

] | 2 | 2018-05-15T16:07:33.000Z | 2018-05-19T21:40:24.000Z | www/src/data/StarterShowcase/startersData/gatsby-starter-timeline-theme.md | rrs94/gatsby | 2a7a8710ff579dcf4f3489d4e7b995dfb8de298c | [

"MIT"

] | 1 | 2018-08-16T06:09:40.000Z | 2018-08-16T06:09:40.000Z | ---

date: January 3, 2018

demo: http://portfolio-v3.surge.sh/

repo: https://github.com/amandeepmittal/gatsby-portfolio-v3

description: n/a

tags:

- portfolio

features:

- Single Page, Timeline View

- A portfolio for developers and product launchers

- Bring in Data, plug-n-play

- Responsive Design, optimized for Mobile devices

- Seo Friendly

- Uses Flexbox

---

| 22.25 | 59 | 0.755618 | eng_Latn | 0.528331 |

ce2a1f2a5f0fec548207aa9e899fb860559eda21 | 3,775 | md | Markdown | docs/ubuntu_18_04_lts_setup.md | graphistry/graphistry-cli | 1c92ba124998f988ac13b8f299f20145c9d1543c | [

"BSD-3-Clause"

] | 13 | 2018-05-13T00:30:00.000Z | 2022-01-09T06:38:18.000Z | docs/ubuntu_18_04_lts_setup.md | graphistry/graphistry-cli | 1c92ba124998f988ac13b8f299f20145c9d1543c | [

"BSD-3-Clause"

] | 7 | 2018-08-01T17:18:29.000Z | 2021-04-07T18:25:21.000Z | docs/ubuntu_18_04_lts_setup.md | graphistry/graphistry-cli | 1c92ba124998f988ac13b8f299f20145c9d1543c | [

"BSD-3-Clause"

] | 3 | 2018-10-02T17:16:21.000Z | 2021-07-30T20:07:25.000Z | # Ubuntu 18.04 LTS manual configuration

For the latest tested version of the scripts, see your Graphistry release's folder `etc/scripts`.

# Warning

We do *not* recommend manually installing the environment dependencies. Instead, use a Graphistry-managed Cloud Marketplace instance, a prebuilt cloud image, or another partner-supplied starting point.

However, sometimes a manual installation is necessary. Use this script as a reference. For more recent versions, check your Graphistry distribution's `etc/scripts` folder, and its links to best practices.

# About

The reference script below was last tested with an Azure Ubuntu 18.04 LTS AMI on NC series.

* Nvidia driver 430.26

* CUDA 10.2

* Docker CE 19.03.1

* docker-compose 1.24.1

* nvidia-container 1.0.4

# Manual environment configuration

Each subsection ends with a test command.

```

###################

# #

# <3 <3 <3 <3 #

# #

###################

sudo apt update

sudo apt upgrade -y

###################

# #

# Nvidia driver #

# #

###################

sudo add-apt-repository -y ppa:graphics-drivers/ppa

sudo apt-get update

sudo apt install -y nvidia-driver-430

sudo reboot

nvidia-smi

###################

# #

# Docker 19.03+ #

# #

###################

#apt is 18, so go official

sudo apt-get install -y \

apt-transport-https \

ca-certificates \

curl \

software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo apt-key fingerprint 0EBFCD88

sudo add-apt-repository \

"deb [arch=amd64] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) \

stable"

sudo apt-get update

sudo apt install -y docker-ce=5:19.03.1~3-0~ubuntu-bionic

sudo systemctl start docker

sudo systemctl enable docker

sudo docker --version

sudo docker run hello-world

####################

# #

# docker-compose #

# #

####################

sudo curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

docker-compose --version

####################

# #

# nvidia runtime #

# #

####################

### Sometimes needed

#curl -s -L https://nvidia.github.io/nvidia-container-runtime/gpgkey | sudo apt-key add - && sudo apt update

#distribution=$(. /etc/os-release;echo $ID$VERSION_ID) && curl -s -L https://nvidia.github.io/nvidia-container-runtime/$distribution/nvidia-container-runtime.list | sudo tee /etc/apt/sources.list.d/nvidia-container-runtime.list && sudo apt-get update

#sudo apt-get install -y nvidia-container-runtime

distribution=$(. /etc/os-release;echo $ID$VERSION_ID) && echo $distribution

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

#_not_ default runtime

sudo docker run --gpus all nvidia/cuda:9.0-base nvidia-smi

####################

# #

# nvidia default #

# #

####################

# Nvidia docker as default runtime (needed for docker-compose)

sudo apt install -y vim

sudo vim /etc/docker/daemon.json

{

"default-runtime": "nvidia",

"runtimes": {

"nvidia": {

"path": "/usr/bin/nvidia-container-runtime",

"runtimeArgs": []

}

}

}

sudo systemctl restart docker

sudo docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi

sudo docker run --rm nvidia/cuda nvidia-smi

```
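Hand-editing `/etc/docker/daemon.json` in vim can silently drop settings that are already in the file. As a rough illustration only, the Python sketch below merges the same `default-runtime` and `runtimes` entries shown in the script into an existing configuration dictionary. The helper name `with_nvidia_default` and the sample `log-driver` entry are assumptions made for the example, not part of the Graphistry scripts, and the sketch prints the result instead of writing the file.

```python
import json


def with_nvidia_default(config):
    """Return a copy of a daemon.json dict with nvidia as the default runtime."""
    merged = dict(config)
    # Copy the runtimes table so the caller's dictionary is left untouched.
    runtimes = dict(merged.get("runtimes", {}))
    runtimes["nvidia"] = {
        "path": "/usr/bin/nvidia-container-runtime",
        "runtimeArgs": [],
    }
    merged["runtimes"] = runtimes
    merged["default-runtime"] = "nvidia"
    return merged


# Illustrative pre-existing setting; a real daemon.json may be empty or absent.
existing = {"log-driver": "json-file"}
updated = with_nvidia_default(existing)
print(json.dumps(updated, indent=2))
```

After writing such a file, Docker still needs the `sudo systemctl restart docker` step from the script for the change to take effect.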

| 27.554745 | 250 | 0.635497 | eng_Latn | 0.322825 |

ce2a36c64b5d436b4b120a3e3b89ee729197a546 | 32 | md | Markdown | README.md | lRoshi/trabajo | b724d31dd8ed2cb65100fa7293ed381db03d4b1e | [

"Unlicense"

] | null | null | null | README.md | lRoshi/trabajo | b724d31dd8ed2cb65100fa7293ed381db03d4b1e | [

"Unlicense"

] | null | null | null | README.md | lRoshi/trabajo | b724d31dd8ed2cb65100fa7293ed381db03d4b1e | [

"Unlicense"

] | null | null | null | # trabajo

Work for the teacher

| 10.666667 | 21 | 0.78125 | spa_Latn | 0.999977 |

ce2acee5a8e9969bd3a40c162ebf875038698d40 | 6,631 | md | Markdown | README.md | GinSanaduki/Let_us_RSA_on_Bourne_Shell | ef851ce556ab5d1f32709c1336c17ea12ef2a2f4 | [

"BSD-3-Clause"

] | null | null | null | README.md | GinSanaduki/Let_us_RSA_on_Bourne_Shell | ef851ce556ab5d1f32709c1336c17ea12ef2a2f4 | [

"BSD-3-Clause"

] | null | null | null | README.md | GinSanaduki/Let_us_RSA_on_Bourne_Shell | ef851ce556ab5d1f32709c1336c17ea12ef2a2f4 | [

"BSD-3-Clause"

] | null | null | null | # Let_us_RSA_on_Bourne_Shell

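Experience how RSA works on UNIX (ALL UNIX COMMAND)

* Once this is tidied up I'll write a more decent shell script, so for now it's just written down in Markdown.

* This is based on

https://qiita.com/jabba/items/e5d6f826d9a8f2cefd60

How public-key cryptography and RSA work - Qiita

and

https://blog.desumachi.tk/2017/10/17/%E3%82%B7%E3%82%A7%E3%83%AB%E8%8A%B8%E3%81%A7%E6%9C%80%E5%B0%8F%E5%85%AC%E5%80%8D%E6%95%B0%E3%83%BB%E6%9C%80%E5%A4%A7%E5%85%AC%E7%B4%84%E6%95%B0%E3%82%92%E6%B1%82%E3%82%81%E3%82%8B/

Finding the least common multiple and greatest common divisor with shell one-liners – ですまち日記帳

which I used as references.

The original is written in Ruby; this version is written mostly in awk (gawk with MPFR support, because the numbers overflow otherwise).

Suppose we want to encrypt the following file.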

```bash

$ cat test.txt

Jabba the Hutto$

```

We want hexadecimal, so we dump the file with xxd.

od is a hassle, so I'll think about it later...

```bash

cat test.txt | \

iconv -f "$(locale charmap)" -t utf32be | \

xxd -p | \

tr -d '\n' | \

awk -f test1.awk | \

sed -r 's/^0+/0x/' | \

xargs printf 'U+%04X\n' > XDD.txt

```

```awk

#!/usr/bin/gawk -f

# test1.awk

# awk -f test1.awk

BEGIN{

FS="";

}

{

for(i = 1; i <= NF; i++){

Remainder = i % 8;

if(i == NF || Remainder == 0){

printf("%s\n", $i);

} else {

printf("%s", $i);

}

}

}

```

```bash

$ cat XDD.txt

U+004A

U+0061

U+0062

U+0062

U+0061

U+0020

U+0074

U+0068

U+0065

U+0020

U+0048

U+0075

U+0074

U+0074

$

```

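To keep the example simple, we take the two (normally very large) primes to be p = 7 and q = 19.

As for finding primes... I'll write something up for that another time...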

```bash

$ p=7

$ q=19

$

$ p_minus1=$((p - 1))

$ q_minus1=$((q - 1))

$ echo $p_minus1

6

$ echo $q_minus1

18

$

```

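* Use the yes command to generate the two numbers, space-separated, indefinitely

* Use awk to multiply each number by the current line number and print the products with a newline in between

* Use awk to print the first number that appears a second time, then exit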

```bash

$ r=`yes $p_minus1 $q_minus1 | \

awk '{print $1*NR RS $2*NR}' | \

awk 'a[$1]++{print;exit;}'`

$ echo $r

18

```

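* The public key must be greater than 1 and smaller than L (= 18).

* In addition, the greatest common divisor of the public key and L must be 1, that is, the two must be coprime.

* Here the public key is called public.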

```bash

# This runs in parallel, but note that it still takes a fair amount of time

$ public=`seq 2 $r | \

awk -f test3.awk -v Max=$r | \

xargs -P 0 -r -I{} sh -c '{}' | \

sort -k 2n,2 -k 1n,1 | \

head -n 1 | \

cut -f 1 -d ' '`

$ echo $public

5

$

```

```awk

#!/usr/bin/gawk -f

# test3.awk

# awk -f test3.awk -v Max=$r

BEGIN{

Max = Max + 0;

if(Max < 2){

exit 99;

}

}

{

$0 = $0 + 0;

print "yes "$0" "Max" | awk -f test4.awk | grep -Fv --line-buffered . | awk -f test5.awk | awk -f test6.awk -v Disp="$0;

}

```

```awk

#!/usr/bin/gawk -f

# test4.awk

# awk -f test4.awk

{

print $1/NR RS $2/NR;

}

```

```awk

#!/usr/bin/gawk -f

# test5.awk

# awk -f test5.awk

a[$1]++{print;exit;}

```

* Using the public key then amounts to "raising to the power $public, using the table of remainders modulo $p_q".

```bash

$ p_q=$((p * q))

$ echo $p_q

133

$

```

* The condition for the private key is:

* private key * public key = L * N + 1 for some integer N.

* Here the private key is called private.

```bash

$ private=`seq 2 $r | awk -f test7.awk -v Max=$r -v Public=$public`

$ echo $private

11

$

```

```awk

#!/usr/bin/gawk -f

# test7.awk

# awk -f test7.awk -v Max=$r -v Public=$public

BEGIN{

Max = Max + 0;

Public = Public + 0;

}

{

$0 = $0 + 0;

Public_ColZero = Public * $0;

Remainder = Public_ColZero % Max;

if(Remainder == 1){

print;

exit;

}

}

```

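* Encrypt the Jabba the Hutt text from the beginning with the public key (public=5).

Raise each original number to the 5th power and take the remainder modulo 133.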

```bash

$ /usr/bin/gawk -M -f test8.awk -v Max=$p_q -v Exponentiation=$public Conv_XDD.csv | \

tr -d '\n' | \

awk '{print substr($0,1,length($0) - 1);}' > Encrypt.csv

# stderr

i : 1, $i : 74

Exponentiation : 5

Exp : 2219006624

Max : 133

Remainder : 44

i : 2, $i : 97

Exponentiation : 5

Exp : 8587340257

Max : 133

Remainder : 13

i : 3, $i : 98

Exponentiation : 5

Exp : 9039207968

Max : 133

Remainder : 91

i : 4, $i : 98

Exponentiation : 5

Exp : 9039207968

Max : 133

Remainder : 91

i : 5, $i : 97

Exponentiation : 5

Exp : 8587340257

Max : 133

Remainder : 13

i : 6, $i : 32

Exponentiation : 5

Exp : 33554432

Max : 133

Remainder : 128

i : 7, $i : 116

Exponentiation : 5

Exp : 21003416576

Max : 133

Remainder : 51

i : 8, $i : 104

Exponentiation : 5

Exp : 12166529024

Max : 133

Remainder : 111

i : 9, $i : 101

Exponentiation : 5

Exp : 10510100501

Max : 133

Remainder : 5

i : 10, $i : 32

Exponentiation : 5

Exp : 33554432

Max : 133

Remainder : 128

i : 11, $i : 72

Exponentiation : 5

Exp : 1934917632

Max : 133

Remainder : 116

i : 12, $i : 117

Exponentiation : 5

Exp : 21924480357

Max : 133

Remainder : 129

i : 13, $i : 116

Exponentiation : 5

Exp : 21003416576

Max : 133

Remainder : 51

i : 14, $i : 116

Exponentiation : 5

Exp : 21003416576

Max : 133

Remainder : 51

$cat Encrypt.csv

44,13,91,91,13,128,51,111,5,128,116,129,51,51

$

```

```awk

#!/usr/bin/gawk -f

# test8.awk

# /usr/bin/gawk -M -f test8.awk -v Max=$p_q -v Exponentiation=$public Conv_XDD.csv > Encrypt.csv

# /usr/bin/gawk -M -f test8.awk -v Max=$p_q -v Exponentiation=$private Encrypt.csv > Decrypt.csv

BEGIN{

FS = ",";

Max = Max + 0;

Exponentiation = Exponentiation + 0;

# print Max;

# print Exponentiation;

}

{

delete Arrays;

for(i = 1; i <= NF; i++){

print "i : "i", $i : "$i > "/dev/stderr";

Exp = $i ** Exponentiation;

print "Exponentiation : "Exponentiation > "/dev/stderr";

print "Exp : "Exp > "/dev/stderr";

Remainder = Exp % Max;

print "Max : "Max > "/dev/stderr";

print "Remainder : "Remainder > "/dev/stderr";

print Remainder"," > "/dev/stdout";

}

}

```

* In the reverse direction, decrypt using the private key 11 and 133.

* Raise each encrypted number to the 11th power and take the remainder modulo 133.

```bash

$ /usr/bin/gawk -M -f test8.awk -v Max=$p_q -v Exponentiation=$private Encrypt.csv | \

tr -d '\n' | \

awk '{print substr($0,1,length($0) - 1);}' > Decrypt.csv

# stderr

i : 1, $i : 44

Exponentiation : 11

Exp : 1196683881290399744

Max : 133

Remainder : 74

i : 2, $i : 13

Exponentiation : 11

Exp : 1792160394037

Max : 133

Remainder : 97

i : 3, $i : 91

Exponentiation : 11

Exp : 3543686674874777831491

Max : 133

Remainder : 98

i : 4, $i : 91

Exponentiation : 11

Exp : 3543686674874777831491

Max : 133

Remainder : 98

i : 5, $i : 13

Exponentiation : 11

Exp : 1792160394037

Max : 133

Remainder : 97

i : 6, $i : 128

Exponentiation : 11

Exp : 151115727451828646838272

Max : 133

Remainder : 32

i : 7, $i : 51

Exponentiation : 11

Exp : 6071163615208263051

Max : 133

Remainder : 116

i : 8, $i : 111

Exponentiation : 11

Exp : 31517572945366073781711

Max : 133

Remainder : 104

i : 9, $i : 5

Exponentiation : 11

Exp : 48828125

Max : 133

Remainder : 101

i : 10, $i : 128

Exponentiation : 11

Exp : 151115727451828646838272

Max : 133

Remainder : 32

i : 11, $i : 116

Exponentiation : 11

Exp : 51172646912339021398016

Max : 133

Remainder : 72

i : 12, $i : 129

Exponentiation : 11

Exp : 164621598066108688876929

Max : 133

Remainder : 117

i : 13, $i : 51

Exponentiation : 11

Exp : 6071163615208263051

Max : 133

Remainder : 116

i : 14, $i : 51

Exponentiation : 11

Exp : 6071163615208263051

Max : 133

Remainder : 116

$ cat Decrypt.csv

74,97,98,98,97,32,116,104,101,32,72,117,116,116

$

```

* Compare the result against the Unicode code points recovered from the dump

```bash

$ diff -q Decrypt.csv Conv_XDD.csv

$ echo $?

0

$

```
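For comparison, the whole walk-through above (L from p and q, the public exponent, the private exponent, then encryption and decryption) can be condensed into a short Python sketch. This is a toy re-implementation under the same assumptions as the text (p = 7, q = 19, one code point per block), not a replacement for the awk scripts; the helper names `make_keys` and `crypt` are made up for this example, and keys this small offer no security at all.

```python
from math import gcd


def lcm(a, b):
    """Least common multiple, the value called L (or $r) in the text."""
    return a * b // gcd(a, b)


def make_keys(p, q):
    """Return (n, public, private) using the same search rules as the text."""
    n = p * q
    l = lcm(p - 1, q - 1)
    # Public key: smallest number greater than 1 that is coprime with L.
    public = next(k for k in range(2, l) if gcd(k, l) == 1)
    # Private key: smallest number with public * private = L * N + 1.
    private = next(k for k in range(2, l) if (public * k) % l == 1)
    return n, public, private


def crypt(values, exponent, n):
    """Raise every value to `exponent` and take the remainder modulo n."""
    return [pow(v, exponent, n) for v in values]


message = "Jabba the Hutt"
n, public, private = make_keys(7, 19)
plain = [ord(ch) for ch in message]       # code points, as in the dump
cipher = crypt(plain, public, n)          # encrypt with the public key
restored = crypt(cipher, private, n)      # decrypt with the private key

print(n, public, private)
print(cipher)
print("".join(chr(v) for v in restored))
```

The three-argument `pow` performs modular exponentiation directly, so the huge intermediate powers that made `gawk -M` necessary never appear.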

# Well, that's about it.

# Glad it could be done in Bourne Shell too.

| 15.788095 | 220 | 0.638667 | yue_Hant | 0.28132 |

ce2c588f1865c11ceb3fa0280b1280fdf480022e | 131 | md | Markdown | release.md | SecurityRAT/SecurityRAT | c0dcdb774308ef7b36f225be74b33b1aec7c98ac | [

"Apache-2.0"

] | 147 | 2016-05-30T16:28:31.000Z | 2022-03-30T15:34:20.000Z | release.md | SecurityRAT/SecurityRAT | c0dcdb774308ef7b36f225be74b33b1aec7c98ac | [

"Apache-2.0"

] | 160 | 2016-07-05T14:28:38.000Z | 2022-03-02T12:58:15.000Z | release.md | SecurityRAT/SecurityRAT | c0dcdb774308ef7b36f225be74b33b1aec7c98ac | [

"Apache-2.0"

] | 48 | 2016-05-06T10:50:03.000Z | 2022-03-30T13:05:57.000Z | ## Bug Fixes

- Fixed minor issue where displayed link to authenticate to JIRA (during import) is REST API link instead of origin.

| 32.75 | 116 | 0.770992 | eng_Latn | 0.999414 |

ce2e536076d1457af82c37bd8fd3e03cc4a2b4ea | 1,075 | md | Markdown | content/articles/content/Laser-bending.md | 4m-association/4m-association | 2a5d6a7c539d927dffefdb1a2f4c706e0efa7ba2 | [

"RSA-MD"

] | 1 | 2020-11-18T16:20:10.000Z | 2020-11-18T16:20:10.000Z | content/articles/content/Laser-bending.md | 4m-association/4m-association | 2a5d6a7c539d927dffefdb1a2f4c706e0efa7ba2 | [

"RSA-MD"

] | null | null | null | content/articles/content/Laser-bending.md | 4m-association/4m-association | 2a5d6a7c539d927dffefdb1a2f4c706e0efa7ba2 | [

"RSA-MD"

] | 2 | 2021-09-10T14:15:53.000Z | 2021-11-30T09:28:38.000Z | title: Laser bending

date: 2009-11-17

tags: metals-processing

Technology suitable for small quantity production

Using a laser in forming technology enables prototype production of freeform sheet metal parts without any solid tool. The laser beam is applied to the workpiece and heats the sheet locally. After heating, one of two mechanisms occurs, depending on the energy input. If only the surface layer of the sheet is heated, the tensile stress that develops in that layer during cooling produces a bending moment toward the laser (the temperature gradient mechanism). If the sheet is heated through its full thickness, the material contracts on cooling, which shortens the sheet. Both mechanisms work at the microscale, but the first is used more often in industry, for example to adjust optical sensors in compact disc drives. If the energy density is high enough and the pulse duration short, even metallic foils can be bent. | 153.571429 | 959 | 0.816744 | eng_Latn | 0.999822 |

ce2f059c27b73a5418022484344ec8ad297dd5a6 | 1,639 | md | Markdown | catalog/tobiiro-shadow/en-US_tobiiro-shadow.md | htron-dev/baka-db | cb6e907a5c53113275da271631698cd3b35c9589 | [

"MIT"

] | 3 | 2021-08-12T20:02:29.000Z | 2021-09-05T05:03:32.000Z | catalog/tobiiro-shadow/en-US_tobiiro-shadow.md | zzhenryquezz/baka-db | da8f54a87191a53a7fca54b0775b3c00f99d2531 | [

"MIT"

] | 8 | 2021-07-20T00:44:48.000Z | 2021-09-22T18:44:04.000Z | catalog/tobiiro-shadow/en-US_tobiiro-shadow.md | zzhenryquezz/baka-db | da8f54a87191a53a7fca54b0775b3c00f99d2531 | [

"MIT"

] | 2 | 2021-07-19T01:38:25.000Z | 2021-07-29T08:10:29.000Z | # Tobiiro Shadow

- **type**: manga

- **volumes**: 4

- **original-name**: 鳶色シャドウ

- **start-date**: 1992-01-19

## Tags

- mystery

- romance

- supernatural

- josei

## Authors

- Hara, Chieko (Story & Art)

## Synopsis

From Emily's Random Shoujo Manga Page:

It all begins long ago in the past. In a place near an ancient wall. The wall looks more like a cliff, really. Anyway, two cute children grew up together. Sumire is a gentle girl, and Yasuhira is a bossy boy, but he loves Sumire. When Yasuhira proposed marriage to Sumire, it was more like the confirmation of a long-held assumption that they would eventually wed. But they did not live happily ever after. We see a bloodied sword, and someone crying…

Flash forward to modern times. These days, a school has been built near the ancient wall. It is such a tall, imposing wall/cliff thing, that naturally the students have made up ghost stories about it. Some of the girls are determined to go to the wall, where they believe they can see some real ghosts. A classmate, Sumire, is unwillingly dragged along. Sumire tries to get out of going, but her friends insist. When they get to the wall, Sumire feels an unusual wind, and then is confronted with a startling sight — a boy crying. He is just standing there, tears pouring down his cheeks. Before she can say anything, her friends call her away. The boy looks startled to see her. Sumire realizes he looks familiar… is that her classmate Katsuragi-kun?

## Links

- [MyAnimeList](https://myanimelist.net/manga/16771/Tobiiro_Shadow)

| 51.21875 | 751 | 0.747407 | eng_Latn | 0.999371 |

ce2f11cf7e779975c66ae5548a5fcb59b58d93e0 | 1,114 | md | Markdown | tools/git/README.md | yudatun/documentation | bd37195aa8385b7b334a440c4efa86e84ab968af | [

"Apache-2.0"

] | null | null | null | tools/git/README.md | yudatun/documentation | bd37195aa8385b7b334a440c4efa86e84ab968af | [

"Apache-2.0"

] | null | null | null | tools/git/README.md | yudatun/documentation | bd37195aa8385b7b334a440c4efa86e84ab968af | [

"Apache-2.0"

] | null | null | null | Git

========================================

build with sources

----------------------------------------

The cause of the problem was the gnutls package: it behaves erratically behind a proxy, whereas openssl works fine even on a weak network. The workaround is to compile git against openssl. To do this, run the following commands:

```

$ sudo apt-get install build-essential fakeroot dpkg-dev

$ mkdir ~/git-openssl

$ cd ~/git-openssl

$ sudo apt-get source git

$ sudo apt-get build-dep git

$ sudo apt-get install libcurl4-openssl-dev

$ dpkg-source -x git_1.7.9.5-1.dsc

$ cd git-1.7.9.5

```

(Remember to replace 1.7.9.5 with the actual version of git in your system.)

Then, edit debian/control file (run the command: gksu gedit debian/control) and

replace all instances of libcurl4-gnutls-dev with libcurl4-openssl-dev.
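
The manual edit described above can also be scripted. This is only a minimal sketch, assuming GNU `sed` (for in-place `-i` without a suffix) and that it is run from inside the unpacked source directory; the function name `swap_curl_backend` is made up for this example and is not part of any package.

```shell
# Hypothetical helper: swap the gnutls flavour of libcurl for the openssl one.
# $1 is the path to a debian/control file; the file is edited in place.
swap_curl_backend() {
    sed -i 's/libcurl4-gnutls-dev/libcurl4-openssl-dev/g' "$1"
}

# Usage, from inside the unpacked git-<version> directory:
#   swap_curl_backend debian/control
#   grep -n 'libcurl4' debian/control   # inspect which libcurl package is listed now
```

Scripting the change avoids depending on a graphical editor, which may not be available on a headless build machine.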

Then build the package (if the build fails during the tests, you can remove the line TEST=test from the file debian/rules):

```

$ sudo dpkg-buildpackage -rfakeroot -b

```

Install the new package:

```

i386: sudo dpkg -i ../git_1.7.9.5-1_i386.deb

x86_64: sudo dpkg -i ../git_1.7.9.5-1_amd64.deb

```

| 27.85 | 111 | 0.682226 | eng_Latn | 0.866732 |

ce2f63ec33d97be7e2c2ad019a679e1a84acc475 | 436 | md | Markdown | _pages/projects.md | ahkhalwai/ahkhalwai.github.io | 3334b05c25d5673feebdaf0464118c790c1fcac1 | [

"MIT"

] | 3 | 2021-05-29T16:27:16.000Z | 2021-06-08T15:35:22.000Z | _pages/projects.md | ahkhalwai/ahkhalwai.github.io | 3334b05c25d5673feebdaf0464118c790c1fcac1 | [

"MIT"

] | null | null | null | _pages/projects.md | ahkhalwai/ahkhalwai.github.io | 3334b05c25d5673feebdaf0464118c790c1fcac1 | [

"MIT"

] | 2 | 2021-06-08T13:21:45.000Z | 2021-06-08T13:26:44.000Z | ---

layout: archive

title: ""

permalink: /projects/

author_profile: true

---

{% include base_path %}

{% for post in site.projects %}

{% include archive-single.html %}

{% endfor %}

<br>

Project Visitors

Total Visitor

<br>

| 16.148148 | 106 | 0.71789 | eng_Latn | 0.370239 |

ce31f3ca46f0d69d68b3e518909ae3d780a6e401 | 194 | md | Markdown | README.md | ritikjain626/Weather-app | 17ed0e0dc276ddf061fb88829705221783079ebd | [

"MIT"

] | null | null | null | README.md | ritikjain626/Weather-app | 17ed0e0dc276ddf061fb88829705221783079ebd | [

"MIT"

] | null | null | null | README.md | ritikjain626/Weather-app | 17ed0e0dc276ddf061fb88829705221783079ebd | [

"MIT"

] | null | null | null | # Weather-app

A weather app that describes the current conditions at a given location. You can also search for any city in the world.

You can check it out at https://ritikjain626.github.io/Weather-app/

| 32.333333 | 109 | 0.768041 | eng_Latn | 0.998125 |

ce3275576ecb713d9b1b429417e7846a10b0b8ed | 795 | md | Markdown | issues/add-multi-instance-support.md | sigsum/sigsum-log-go | 1594b0830d8cd18ab158dfffb64dd3c219da8f10 | [

"Apache-2.0"

] | null | null | null | issues/add-multi-instance-support.md | sigsum/sigsum-log-go | 1594b0830d8cd18ab158dfffb64dd3c219da8f10 | [

"Apache-2.0"

] | 7 | 2021-06-23T21:41:06.000Z | 2021-12-10T20:54:09.000Z | issues/add-multi-instance-support.md | sigsum/sigsum-log-go | 1594b0830d8cd18ab158dfffb64dd3c219da8f10 | [

"Apache-2.0"

] | null | null | null | **Title:** Add multi-instance support </br>

**Date:** 2021-12-09 </br>

# Summary

Add support for multiple active sigsum-log-go instances for the same log.

# Description

A sigsum log accepts add-cosignature requests to make the final cosigned tree

head available. Right now a single active sigsum-log-go instance is assumed per

log, so that there is no need to coordinate cosigned tree heads among instances.

Some log operators will likely want to run multiple instances of both the

Trillian components and sigsum-log-go, backed by a managed database setup.

Trillian supports this, but sigsum-log-go does not due to lack of coordination.

This issue requires both design considerations and an implementation of the

`StateManager` interface to support multi-instance setups of sigsum-log-go.

| 44.166667 | 80 | 0.792453 | eng_Latn | 0.997645 |

ce329dc4726ad8bbdd7084e43755aa2e2a567c23 | 291 | markdown | Markdown | src/pages/articles/2011-02-08-wolverine-or-2-batmen.markdown | broderboy/timbroder.com-sculpin | 7982298523ac56db8c45fa4d18f8a2801c7c0445 | [

"MIT"

] | 2 | 2015-12-21T00:49:21.000Z | 2019-03-03T10:20:24.000Z | src/pages/articles/2011-02-08-wolverine-or-2-batmen.markdown | timbroder/timbroder.com-sculpin | 7982298523ac56db8c45fa4d18f8a2801c7c0445 | [

"MIT"

] | 16 | 2021-03-01T20:47:45.000Z | 2022-03-08T23:00:02.000Z | src/pages/articles/2011-02-08-wolverine-or-2-batmen.markdown | broderboy/timbroder.com-sculpin | 7982298523ac56db8c45fa4d18f8a2801c7c0445 | [

"MIT"

] | null | null | null | ---

author: tim

comments: true

date: 2011-02-08 20:59:49+00:00

dsq_thread_id: '242777682'

layout: post

link: ''

slug: wolverine-or-2-batmen

title: Wolverine? Or 2 Batmen?

wordpress_id: 824

category: Comics

---

| 19.4 | 44 | 0.738832 | eng_Latn | 0.097886 |

ce32b2abac1b510097880ea288eebf9fad8bd227 | 859 | md | Markdown | technologies.md | krsiakdaniel/movies-old | 5214f9f92a8d1d507ebe3cc768bd1e5d652533e0 | [

"MIT"

] | null | null | null | technologies.md | krsiakdaniel/movies-old | 5214f9f92a8d1d507ebe3cc768bd1e5d652533e0 | [

"MIT"

] | null | null | null | technologies.md | krsiakdaniel/movies-old | 5214f9f92a8d1d507ebe3cc768bd1e5d652533e0 | [

"MIT"

] | null | null | null | # ⚙️ Technologies

[Dependencies](https://github.com/krsiakdaniel/movies/network/dependencies), technologies, tools and services used to build this app.

## Core

- [React](https://reactjs.org/)

- [JavaScript](https://developer.mozilla.org/en-US/docs/Web/JavaScript)

- [TypeScript](https://www.typescriptlang.org/)

## Design

- [Chakra UI](https://chakra-ui.com/getting-started)

- [Emotion](https://emotion.sh/docs/introduction)

## API

- [TMDb](https://developers.themoviedb.org/3/getting-started/introduction)

## Services

- [Netlify](https://app.netlify.com/sites/movies-krsiak/deploys)

- [Codacy](https://app.codacy.com/manual/krsiakdaniel/movies/dashboard?bid=17493411)

- [Smartlook](https://www.smartlook.com/)

- [Cypress Dashboard](https://dashboard.cypress.io/projects/tcj8uu/runs)

- [Uptime Robot status](https://stats.uptimerobot.com/7DxZ0imzV4)

| 31.814815 | 133 | 0.743888 | yue_Hant | 0.443045 |

ce33b9fb42d106d6ac53b8ea089f5d92030e8820 | 3,171 | md | Markdown | sdk-api-src/content/devicetopology/nf-devicetopology-iperchanneldblevel-getlevel.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | sdk-api-src/content/devicetopology/nf-devicetopology-iperchanneldblevel-getlevel.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | sdk-api-src/content/devicetopology/nf-devicetopology-iperchanneldblevel-getlevel.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

UID: NF:devicetopology.IPerChannelDbLevel.GetLevel

title: IPerChannelDbLevel::GetLevel (devicetopology.h)

description: The GetLevel method gets the volume level, in decibels, of the specified channel.

helpviewer_keywords: ["GetLevel","GetLevel method [Core Audio]","GetLevel method [Core Audio]","IPerChannelDbLevel interface","IPerChannelDbLevel interface [Core Audio]","GetLevel method","IPerChannelDbLevel.GetLevel","IPerChannelDbLevel::GetLevel","IPerChannelDbLevelGetLevel","coreaudio.iperchanneldblevel_getlevel","devicetopology/IPerChannelDbLevel::GetLevel"]

old-location: coreaudio\iperchanneldblevel_getlevel.htm

tech.root: CoreAudio

ms.assetid: afc76c80-1656-4f06-8024-c9b041f52e64

ms.date: 12/05/2018

ms.keywords: GetLevel, GetLevel method [Core Audio], GetLevel method [Core Audio],IPerChannelDbLevel interface, IPerChannelDbLevel interface [Core Audio],GetLevel method, IPerChannelDbLevel.GetLevel, IPerChannelDbLevel::GetLevel, IPerChannelDbLevelGetLevel, coreaudio.iperchanneldblevel_getlevel, devicetopology/IPerChannelDbLevel::GetLevel

req.header: devicetopology.h

req.include-header:

req.target-type: Windows

req.target-min-winverclnt: Windows Vista [desktop apps only]

req.target-min-winversvr: Windows Server 2008 [desktop apps only]

req.kmdf-ver:

req.umdf-ver:

req.ddi-compliance:

req.unicode-ansi:

req.idl:

req.max-support:

req.namespace:

req.assembly:

req.type-library:

req.lib:

req.dll:

req.irql:

targetos: Windows

req.typenames:

req.redist:

ms.custom: 19H1

f1_keywords:

- IPerChannelDbLevel::GetLevel

- devicetopology/IPerChannelDbLevel::GetLevel

dev_langs:

- c++

topic_type:

- APIRef

- kbSyntax

api_type:

- COM

api_location:

- Devicetopology.h

api_name:

- IPerChannelDbLevel.GetLevel

---

# IPerChannelDbLevel::GetLevel

## -description

The <b>GetLevel</b> method gets the volume level, in decibels, of the specified channel.

## -parameters

### -param nChannel [in]

The channel number. If the audio stream has <i>N</i> channels, the channels are numbered from 0 to <i>N</i> – 1. To get the number of channels in the stream, call the <a href="/windows/desktop/api/devicetopology/nf-devicetopology-iperchanneldblevel-getchannelcount">IPerChannelDbLevel::GetChannelCount</a> method.

### -param pfLevelDB [out]

Pointer to a <b>float</b> variable into which the method writes the volume level, in decibels, of the specified channel.

## -returns

If the method succeeds, it returns S_OK. If it fails, possible return codes include, but are not limited to, the values shown in the following table.

<table>

<tr>

<th>Return code</th>

<th>Description</th>

</tr>

<tr>

<td width="40%">

<dl>

<dt><b>E_INVALIDARG</b></dt>

</dl>

</td>

<td width="60%">

Parameter <i>nChannel</i> is out of range.

</td>

</tr>

<tr>

<td width="40%">

<dl>

<dt><b>E_POINTER</b></dt>

</dl>

</td>

<td width="60%">

Pointer <i>pfLevelDB</i> is <b>NULL</b>.

</td>

</tr>

</table>

## -see-also

<a href="/windows/desktop/api/devicetopology/nn-devicetopology-iperchanneldblevel">IPerChannelDbLevel Interface</a>

<a href="/windows/desktop/api/devicetopology/nf-devicetopology-iperchanneldblevel-getchannelcount">IPerChannelDbLevel::GetChannelCount</a> | 30.490385 | 364 | 0.768212 | eng_Latn | 0.339183 |

ce33db7ac2c3cba752ece227e22c5e0f70866441 | 3,262 | md | Markdown | README.md | DRSchlaubi/mikmusic | 738c5f9682cfefa2f496a94e0cb7d8f4604e865f | [

"MIT"

] | 13 | 2021-10-03T10:26:57.000Z | 2021-11-07T07:32:19.000Z | README.md | DRSchlaubi/mikmusic | 738c5f9682cfefa2f496a94e0cb7d8f4604e865f | [

"MIT"

] | 3 | 2021-10-30T15:17:15.000Z | 2021-11-03T16:24:18.000Z | README.md | DRSchlaubi/mikmusic | 738c5f9682cfefa2f496a94e0cb7d8f4604e865f | [

"MIT"

] | 2 | 2021-10-04T19:34:16.000Z | 2021-10-05T14:31:27.000Z | # Mik Bot

[CI status](https://github.com/DRSchlaubi/mikbot/actions/workflows/ci.yaml)

[Gradle plugin](https://plugins.gradle.org/plugin/dev.schlaubi.mikbot.gradle-plugin)

[mikbot-api artifacts](https://schlaubi.jfrog.io/ui/native/mikbot/dev/schlaubi/mikbot-api/)

[Kotlin](https://kotlinlang.org)

[Open in Gitpod](https://gitpod.io/#https://github.com/DRSchlaubi/mikbot)

A modular framework for building Discord bots in [Kotlin](https://kotlinlang.org)

using [Kordex](https://github.com/Kord-Extensions/kord-extensions/) and [Kord](https://github.com/kordlib).

**If you are here for mikmusic, click [here](music) and [there](mikmusic-bot).**

**If you are here for Votebot, click [here](votebot).**

# Help translating this project

<a href="https://hosted.weblate.org/engage/mikbot/">

<img src="https://hosted.weblate.org/widgets/mikbot/-/287x66-grey.png" alt="Übersetzungsstatus" />

</a>

## Deployment

For a full explanation of how to deploy the bot yourself, see [SETUP.md](./SETUP.md).

### Requirements

- [Sentry](https://sentry.io) (Optional)

- [Docker](https://docs.docker.com/get-docker/)

- [Docker Compose](https://docs.docker.com/compose/install/)

## Example Environment file

<details>

<summary>.env</summary>

```properties

ENVIRONMENT=PRODUCTION

SENTRY_TOKEN=<>

DISCORD_TOKEN=<>

MONGO_URL=mongodb://bot:bot@mongo

MONGO_DATABASE=bot_prod

LOG_LEVEL=DEBUG

BOT_OWNERS=416902379598774273

OWNER_GUILD=<>

UPDATE_PLUGINS=false #if you want to disable the auto updater

```

</details>

### Starting the bot

Docker image from: https://github.com/DRSchlaubi/mikmusic/pkgs/container/mikmusic%2Fbot

- Clone this repo

- Run `docker-compose up -d`

# Binary repositories

The bot has two repositories for binaries: the [binary-repo](https://storage.googleapis.com/mikbot-binaries), which contains

the bot's binaries, and the [plugin-repo](https://storage.googleapis.com/mikbot-plugins)

([index](https://storage.googleapis.com/mikbot-plugins/plugins.json)). Normally you should not need to interact with

these repositories directly.

# For bot developers

A JDK is required. It can be obtained [here](https://adoptium.net) (recommended for Windows, but works everywhere)

or [here](https://sdkman.io/) (recommended for Linux/Mac).

Please set the `ENVIRONMENT` env var to `DEVELOPMENT` while developing the bot.

Also set a `TEST_GUILD` environment variable for local commands.

If you are making any changes to the bot's official plugins (the plugins in this repo),

please run the `rebuild-plugin-dependency-list.sh` script first; otherwise your plugins won't be loaded properly.

# For plugin developers

You can find a detailed guide on how to write plugins [here](PLUGINS.md)

| 41.291139 | 285 | 0.767627 | eng_Latn | 0.298089 |

ce34392a71d82c7ca233b94eaeb6db13443977f9 | 215 | md | Markdown | README.md | rafaelsantos-dev/Calculadora-Icms | 23b1f0d746b8f63b36df82310738dae44082297a | [

"MIT"

] | null | null | null | README.md | rafaelsantos-dev/Calculadora-Icms | 23b1f0d746b8f63b36df82310738dae44082297a | [

"MIT"

] | null | null | null | README.md | rafaelsantos-dev/Calculadora-Icms | 23b1f0d746b8f63b36df82310738dae44082297a | [

"MIT"

] | null | null | null | # Calculadora Icms

Tax Substitution (Substituição Tributária) Calculator - BA <> SE

A software tool to help with tax calculations, initially between the states of Bahia and Sergipe in Brazil. | 53.75 | 144 | 0.809302 | por_Latn | 0.999915 |

ce34523c1a69a6e675777595133d2b00186ac122 | 8,251 | md | Markdown | docs/source/expressive_power.md | rix0rrr/gcl | 4e3bccc978a9c60aaaffd20f6f291c4d23775cdf | [

"MIT"

] | 55 | 2015-03-26T22:05:59.000Z | 2022-03-18T07:43:33.000Z | docs/source/expressive_power.md | rix0rrr/gcl | 4e3bccc978a9c60aaaffd20f6f291c4d23775cdf | [

"MIT"

] | 36 | 2015-04-16T09:30:46.000Z | 2020-11-19T20:22:32.000Z | docs/source/expressive_power.md | rix0rrr/gcl | 4e3bccc978a9c60aaaffd20f6f291c4d23775cdf | [

"MIT"

] | 9 | 2015-04-28T08:39:50.000Z | 2021-05-07T08:37:21.000Z | Expressive Power

================

It's the eternal problem of a DSL intended for a limited purpose: such a

language then gets more and more features, to gain more and more expressive

power, until finally the language is fully generic and any computable function

can be expressed in it.

> "In a heart beat, you're Turing complete!" -- Felienne Hermans

Not by design but by accident, GCL is actually one of those Turing complete

languages. It wasn't the intention, but because of the abstractive power of

tuples, lazy evaluation and recursion, GCL actually maps pretty closely onto the

Lambda Calculus, and is therefore also Turing complete.

Having said that, you should definitely not feel encouraged to (ab)use the

Turing completeness to do calculations inside your model. That is emphatically

_not_ what GCL was intended for. This section is more of an intellectual

curiosity, and should be treated as such.

Tuples are functions

--------------------

Tuples map very nicely onto functions; they can have any number of input and

output parameters. Of course, all of this is convention. But you can see how

this would work as I define the mother of all recursive functions, the Fibonacci

function:

fib = {

n;

n1 = n - 1;

n2 = n - 2;

value = if n == 0 then 0

else if n == 1 then 1

else (fib { n = n1 }).value + (fib { n = n2 }).value;

};

fib8 = (fib { n = 8 }).value;

And then:

$ gcl-print fib.gcl fib8

fib8

21

Hooray! Arbitrary computation through recursion!

A more elaborate example

------------------------

Any time you need a particular function, you can inject it from Python, _or_ you

could just write it directly in GCL. Need `string.join`? Got you covered:

string_join = {

list;

i = 0; # Hey, they're default arguments!

sep = ' ';

next_i = i + 1;

suffix = (string_join { inherit list sep; i = next_i }).value;

my_sep = if i > 0 then sep else '';

value = if has(list, i) then my_sep + list(i) + suffix else '';

};

praise = (string_join { list = ['Alonzo','would','be','proud']; }).value;

We make use of the lazy evaluation property here to achieve readability by

giving names to subparts of the computation: the key `suffix` actually only

makes sense if we're not at the end of the list yet, but we can give that

calculation a name anyway. The expression will only be evaluated when we pass

the `has(list, i)` test.

Multi-way relations

------------------

Because all keys are lazily evaluated and can be overridden, we can also encode

relationships between input and output parameters in both directions. The

_caller_ of our relation tuple can then determine which value they need. For

example:

Pythagoras = {

a = sqrt(c * c - b * b);

b = sqrt(c * c - a * a);

c = sqrt(a * a + b * b);

}

Right now we have a complete relationship between all values. Obviously, we

can't evaluate any field because that will yield an infinite recursion. But we

_can_ supply any two values to calculate the remaining one:

(Pythagoras { a = 3; b = 4}).c # 5

(Pythagoras { a = 5; c = 13}).b # 12

Inner tuples are closures

-------------------------

Just as tuples correspond to functions, nested tuples correspond to closures,

as they have a reference to the parent tuple at the moment it was evaluated.

For example, we can make a partially-applied tuple that represents the capability of returning elements

from a matrix:

Matrix = {

matrix;

getter = {

x; y;

value = matrix y x;

};

};

PrintSquare = {

getter;

range = [0, 1, 2];

value = [[ (getter { inherit x y }).value for x in range] for y in range];

};

my_matrix = Matrix {

matrix = [

[8, 6, 12, 11, -3],

[20, 6, 8, 7, 7],

[9, 83, 8, 8, 30],

[3, 1, 20, -1, 21]

];

};

top_left = (PrintSquare { getter = my_matrix.getter }).value;

Let's do something silly

------------------------

Let's do something very useless: let's implement the Game of Life in GCL using

the techniques we've seen so far!

Our GCL file is going to load the current state of a board from a file and compute the next state of

the board--after applying all the GoL rules--into some output variable. If we then use a simple bash

script to pipe that output back into the input file, we can repeatedly invoke GCL to get some

animation going!

We'll make use of the fact that we can `include` JSON files directly, and that we can use `gcl2json`

to write some key back to JSON again.

Let's represent the board as an array of strings. That'll print nicely, which is

convenient because we don't have to invest a lot of effort into rendering. For example:

[

"............x....",

"...x.............",

"....x.......xxx..",

"..xxx.......x....",

".............x...",

".................",

".................",

"...x.x..........."

]

First we'll make a function to make ranges to iterate over.

# (range { n = 5 }).value == [0, 1, 2, 3, 4]

range = {

n; i = 0;

next_i = i + 1;

value = if i < n then [i] + (range { i = next_i; inherit n }).value else [];

};

Then we need a function to determine liveness. We'll expect a list of chars, either 'x' or '.', and

output another char.

# (liveness { me = 'x'; neighbours = ['x', 'x', 'x', '.', '.', '.'] }).next == 'x'

liveness = {

me; neighbours;

alive_neighbours = sum([1 for n in neighbours if n == 'x']);

alive = (me == 'x' and 2 <= alive_neighbours and alive_neighbours <= 3)

or (me == '.' and alive_neighbours == 3);

next = if alive then 'x' else '.';

};

On to the real meat! Let's find the neighbours of a cell given some coordinates:

find_neighbours = {

board; i; j;

cells = [

cell { x = i - 1; y = j - 1 },

cell { x = i; y = j - 1 },

cell { x = i + 1; y = j - 1 },

cell { x = i - 1; y = j },

cell { x = i + 1; y = j },

cell { x = i - 1; y = j + 1 },

cell { x = i; y = j + 1 },

cell { x = i + 1; y = j + 1 }

];

chars = [c.char for c in cells];

# Helper function for accessing cells

cell = {

x; y;

H = len board;

my_y = ((H + y) % H);

W = len (board my_y);

char = board (my_y) ((W + x) % W);

}

};

Now we can simply calculate the next state of the board given an input board:

next_board = {

board;

rows = (range { n = len board }).value;

value = [(row { inherit j }).value for j in rows];

row = {

j;

cols = (range { n = len board(j) }).value;

chars = [(cell { inherit i }).value for i in cols];

value = join(chars, '');

cell = {

i;

neighbours = (find_neighbours { inherit board i j }).chars;

me = board j i;

value = (liveness { inherit me neighbours }).next;

};

};

};

We've got everything! Now it's just a matter of tying the input and output together:

input = {

board = include 'board.json';

};

output = {

board = (next_board { board = input.board }).value;

};

That's it! We've got everything we need! Test whether everything is working by running:

$ gcl2json -r output.board game_of_life.gcl output.board

That should show the following:

[

"....x............",

"............x....",

"..x.x.......xx...",

"...xx.......x.x..",

"...x.............",

".................",

".................",

"................."

]

Hooray, it works!

For kicks and giggles, we can turn this into an animation by using `watch`, which will

run the same command over and over again and show its output:

$ watch -n 0 'gcl2json -r output.board game_of_life.gcl output.board | tee board2.json; mv board2.json board.json'

Fun, eh? :)

| 30.113139 | 118 | 0.564416 | eng_Latn | 0.993919 |

ce351d171acc6babb013ed536e93bb597475eb35 | 1,059 | md | Markdown | README.md | t-ishida/Zaolik | 0a8824cc334fed28f9195f3fa5b9a7bcad6a8f0e | [

"MIT"

] | 1 | 2017-06-01T06:09:53.000Z | 2017-06-01T06:09:53.000Z | README.md | t-ishida/Zaolik | 0a8824cc334fed28f9195f3fa5b9a7bcad6a8f0e | [

"MIT"

] | null | null | null | README.md | t-ishida/Zaolik | 0a8824cc334fed28f9195f3fa5b9a7bcad6a8f0e | [

"MIT"

] | null | null | null | # Zaolik

yet another PHP DI Container

inspired by Phalcon

## How To Use

```php

$container = \Zaolik\DIContainer::getInstance();

$databaseConfig = array (

'host' => 'localhost',

'user' => 'user',

'pass' => 'pass',

'database' => 'test',

);

$memcacheConfig = array (

'hosts' => 'localhost',

'port' => 11211,

);

$container->setFlyweight('mysqli', function () use ($databaseConfig) {

$mysql = new \mysqli($databaseConfig['host'], $databaseConfig['user'], $databaseConfig['pass']);

$mysql->select_db($databaseConfig['database']);

return $mysql;

})->

setNew('DateTime', function ($time = null) {

return new \DateTime($time);

});

// new instance

$mysqli1 = $container->getFlyWieght('mysqli');

// flyweight

$mysqli2 = $container->getFlyWieght('mysqli');

var_dump($mysqli1 === $mysqli2);

// now

echo $container->getNewInstance('DateTime')->format('Y-m-d H:i:s') . "\n";

// yesterday

echo $container->getNewInstance('DateTime', '-1 day')->format('Y-m-d H:i:s') . "\n";

```

## License

This library is available under the MIT license. See the LICENSE file for more info.

| 22.0625 | 100 | 0.639282 | kor_Hang | 0.303454 |

ce35f9fe1dbe090afc5ef87c7c75e3fcfb2b8714 | 750 | md | Markdown | docs/server/mysql/utils.md | zhugy-cn/vue-press-blog | 5bf0cbafd9811d0bfe09725c3de11c3bda80d68e | [

"MIT"

] | 1 | 2019-08-24T02:49:05.000Z | 2019-08-24T02:49:05.000Z | docs/server/mysql/utils.md | zhugy-cn/vue-press-blog | 5bf0cbafd9811d0bfe09725c3de11c3bda80d68e | [

"MIT"

] | 17 | 2021-03-01T20:48:39.000Z | 2021-07-28T08:21:10.000Z | docs/server/mysql/utils.md | zhugy-cn/vue-press-blog | 5bf0cbafd9811d0bfe09725c3de11c3bda80d68e | [

"MIT"

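] | null | null | null | # Navicat Premium 12: installation, activation crack, and usage

## Installing the software

- [**Download Navicat Premium 12**](https://www.navicat.com.cn/download/navicat-premium)

- [**Download the Navicat Premium 12 activation tool**](https://pan.baidu.com/s/1KUG0hM9SzgCnBzy4NuOj_Q)

- Install the software (preferably on the default drive)

- Move the downloaded `activation tool` into the `Navicat` installation directory (C:\Program Files\PremiumSoft\Navicat Premium 12)

- Run the `activation tool` (Navicat must not be open at this point) and click `Path`

- Run `Navicat`; the registration dialog appears (if it does not, open it from the menu: Help -> Registration). Choose the edition and language, then click the keygen's `generate` button, and the registration key is filled into `Navicat` automatically

- Click the activate button in the `Navicat` registration dialog; it prompts for manual activation. Click manual activation, copy the resulting `request code` into the keygen, click the Generate button at the lower left of the keygen to produce the `ActivationCode`, and paste it into the activation code box in `Navicat` to complete activation

- [Reference](https://blog.csdn.net/y526089989/article/details/89404581)

- [Reference](https://blog.csdn.net/Edogawa_Konan/article/details/84928344)

- [Reference](https://blog.csdn.net/zdagf/article/details/83987576) | 44.117647 | 119 | 0.766667 | yue_Hant | 0.527295 |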

ce3636ce743013f871b9a47ab9ed54f277658a88 | 6,333 | md | Markdown | README.md | textcreationpartnership/A93833 | b7746af84ae25612d6f43900bddce9f396d41b18 | [

"CC0-1.0"

] | null | null | null | README.md | textcreationpartnership/A93833 | b7746af84ae25612d6f43900bddce9f396d41b18 | [

"CC0-1.0"

] | null | null | null | README.md | textcreationpartnership/A93833 | b7746af84ae25612d6f43900bddce9f396d41b18 | [

"CC0-1.0"

] | null | null | null | #Rupes Israelis: = The rock of Israel. A little part of its glory laid forth in a sermon preached at Margarets in Westminster before the honorable House of Commons, at their monthly fast, Apr. 24. 1644. By Edmund Staunton, D.D. minister at Kingston upon Thames, in the county of Surrey, a member of the Assembly of Divines.#

##Staunton, Edmund, 1600-1671.##

Rupes Israelis: = The rock of Israel. A little part of its glory laid forth in a sermon preached at Margarets in Westminster before the honorable House of Commons, at their monthly fast, Apr. 24. 1644. By Edmund Staunton, D.D. minister at Kingston upon Thames, in the county of Surrey, a member of the Assembly of Divines.

Staunton, Edmund, 1600-1671.

##General Summary##

**Links**

[TCP catalogue](http://www.ota.ox.ac.uk/tcp/) •

[HTML](http://tei.it.ox.ac.uk/tcp/Texts-HTML/free/A93/A93833.html) •

[EPUB](http://tei.it.ox.ac.uk/tcp/Texts-EPUB/free/A93/A93833.epub) •

[Page images (Historical Texts)](https://historicaltexts.jisc.ac.uk/eebo-99859067e)

**Availability**

To the extent possible under law, the Text Creation Partnership has waived all copyright and related or neighboring rights to this keyboarded and encoded edition of the work described above, according to the terms of the CC0 1.0 Public Domain Dedication (http://creativecommons.org/publicdomain/zero/1.0/). This waiver does not extend to any page images or other supplementary files associated with this work, which may be protected by copyright or other license restrictions. Please go to https://www.textcreationpartnership.org/ for more information about the project.

**Major revisions**

1. __2011-08__ __TCP__ *Assigned for keying and markup*

1. __2011-08__ __SPi Global__ *Keyed and coded from ProQuest page images*

1. __2011-10__ __Olivia Bottum__ *Sampled and proofread*

1. __2011-10__ __Olivia Bottum__ *Text and markup reviewed and edited*

1. __2012-05__ __pfs__ *Batch review (QC) and XML conversion*

##Content Summary##

#####Front#####

RƲPES ISRAELIS: THE ROCK OF ISRAEL.A Little part of its glory laid forth in a Sermon preached at Mar

1. To the Honourable Houſe of COMMONS now aſſembled in PARLIAMENT.

Die Mercurii 24. April. 1644.IT is this day Ordered by the Commons Aſſembled in Parliament, That SirI authoriſe Chriſtopher Meredith to Print my Sermon.EDMUND STAUNTON.

#####Body#####

1. A SERMON Preached at the LATE FAST, Before the Honorable Houſe of COMMONS.

**Types of content**

* Oh, Mr. Jourdain, there is **prose** in there!

There are 64 **omitted** fragments!

@__reason__ (64) : illegible (38), foreign (26) • @__resp__ (38) : #UOM (38) • @__extent__ (38) : 2 letters (5), 1 letter (29), 1 word (3), 1 span (1)

**Character listing**

|Text|string(s)|codepoint(s)|
|---|---|---|
|Latin-1 Supplement|òàèù|242 224 232 249|
|Latin Extended-A|ſ|383|
|Latin Extended-B|Ʋ|434|
|Combining Diacritical Marks|̄|772|
|General Punctuation|•—…|8226 8212 8230|
|Geometric Shapes|◊|9674|
|CJK Symbols and Punctuation|〈〉|12296 12297|

##Tag Usage Summary##

###Header Tag Usage###

|No|element name|occ|attributes|
|---|---|---|---|
|1.|__author__|2||
|2.|__availability__|1||
|3.|__biblFull__|1||
|4.|__change__|5||
|5.|__date__|8| @__when__ (1) : 2012-10 (1)|
|6.|__edition__|1||
|7.|__editionStmt__|1||
|8.|__editorialDecl__|1||
|9.|__encodingDesc__|1||
|10.|__extent__|2||
|11.|__fileDesc__|1||
|12.|__idno__|7| @__type__ (7) : DLPS (1), STC (3), EEBO-CITATION (1), PROQUEST (1), VID (1)|
|13.|__keywords__|1| @__scheme__ (1) : http://authorities.loc.gov/ (1)|
|14.|__label__|5||
|15.|__langUsage__|1||
|16.|__language__|1| @__ident__ (1) : eng (1)|
|17.|__listPrefixDef__|1||
|18.|__note__|4||
|19.|__notesStmt__|2||
|20.|__p__|11||
|21.|__prefixDef__|2| @__ident__ (2) : tcp (1), char (1) • @__matchPattern__ (2) : ([0-9\-]+):([0-9IVX]+) (1), (.+) (1) • @__replacementPattern__ (2) : http://eebo.chadwyck.com/downloadtiff?vid=$1&page=$2 (1), https://raw.githubusercontent.com/textcreationpartnership/Texts/master/tcpchars.xml#$1 (1)|
|22.|__profileDesc__|1||
|23.|__projectDesc__|1||
|24.|__pubPlace__|2||
|25.|__publicationStmt__|2||
|26.|__publisher__|2||
|27.|__ref__|1| @__target__ (1) : http://www.textcreationpartnership.org/docs/. (1)|
|28.|__revisionDesc__|1||
|29.|__seriesStmt__|1||
|30.|__sourceDesc__|1||
|31.|__term__|3||
|32.|__textClass__|1||
|33.|__title__|3||
|34.|__titleStmt__|2||

###Text Tag Usage###

|No|element name|occ|attributes|
|---|---|---|---|
|1.|__am__|2||
|2.|__bibl__|1||
|3.|__body__|1||
|4.|__closer__|3||
|5.|__date__|1||
|6.|__dateline__|1||
|7.|__desc__|64||
|8.|__div__|5| @__type__ (5) : title_page (1), dedication (1), order (1), authorization (1), sermon (1)|
|9.|__epigraph__|1||
|10.|__ex__|2||
|11.|__expan__|2||
|12.|__front__|1||
|13.|__g__|281| @__ref__ (281) : char:V (1), char:EOLhyphen (277), char:abque (2), char:cmbAbbrStroke (1)|
|14.|__gap__|64| @__reason__ (64) : illegible (38), foreign (26) • @__resp__ (38) : #UOM (38) • @__extent__ (38) : 2 letters (5), 1 letter (29), 1 word (3), 1 span (1)|
|15.|__head__|2||
|16.|__hi__|631||
|17.|__label__|9| @__type__ (9) : milestone (9)|
|18.|__milestone__|51| @__type__ (51) : tcpmilestone (51) • @__unit__ (51) : unspecified (51) • @__n__ (51) : 1 (12), 2 (13), 3 (11), 4 (6), 5 (5), 6 (3), 7 (1)|
|19.|__note__|142| @__place__ (142) : margin (142) • @__n__ (31) : * (7), a (5), b (4), c (3), d (3), e (2), f (2), g (1), h (1), i (1), k (1), l (1)|
|20.|__opener__|2||
|21.|__p__|99||
|22.|__pb__|40| @__facs__ (40) : tcp:111129:1 (2), tcp:111129:2 (2), tcp:111129:3 (2), tcp:111129:4 (2), tcp:111129:5 (2), tcp:111129:6 (2), tcp:111129:7 (2), tcp:111129:8 (2), tcp:111129:9 (2), tcp:111129:10 (2), tcp:111129:11 (2), tcp:111129:12 (2), tcp:111129:13 (2), tcp:111129:14 (2), tcp:111129:15 (2), tcp:111129:16 (2), tcp:111129:17 (2), tcp:111129:18 (2), tcp:111129:19 (2), tcp:111129:20 (2) • @__rendition__ (1) : simple:additions (1) • @__n__ (29) : 1 (1), 2 (1), 3 (1), 4 (1), 5 (1), 6 (1), 7 (1), 8 (1), 9 (1), 10 (1), 11 (1), 12 (1), 13 (1), 14 (1), 15 (1), 16 (1), 17 (1), 18 (1), 19 (1), 20 (1), 21 (1), 22 (1), 23 (1), 24 (1), 25 (1), 26 (1), 27 (1), 28 (1), 29 (1)|
|23.|__q__|1||
|24.|__salute__|1||
|25.|__seg__|11| @__rend__ (2) : decorInit (2) • @__type__ (9) : milestoneunit (9)|
|26.|__signed__|3||
|27.|__trailer__|1||

| 48.343511 | 689 | 0.668562 | eng_Latn | 0.426137 |

ce37267a7fa4f04be973b8fff6e4efc3540a260d | 1,347 | md | Markdown | docs/0405-mtl-plugin-bdlocation.md | JetXing/mtl-tools | 4c7cb2bbc77d59926ddd284b1c27fd999327e06c | [

"MIT"

] | 1 | 2022-03-07T03:45:02.000Z | 2022-03-07T03:45:02.000Z | docs/0405-mtl-plugin-bdlocation.md | JetXing/mtl-tools | 4c7cb2bbc77d59926ddd284b1c27fd999327e06c | [

"MIT"

] | null | null | null | docs/0405-mtl-plugin-bdlocation.md | JetXing/mtl-tools | 4c7cb2bbc77d59926ddd284b1c27fd999327e06c | [

"MIT"

] | null | null | null | # Baidu Location (Android only)

Plugin name: mtl-plugin-bdlocation

<a name="e05dce83"></a>

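### Introduction

> The Baidu Maps Android Location SDK is a simple, easy-to-use set of location service interfaces for Android apps. With it, developers can add intelligent, accurate, and efficient positioning to their applications.

> This plugin provides location services to the app and can hand off to apps such as Baidu Maps and Amap (Gaode Maps) for route planning.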

<a name="21f2fa80"></a>

### Parameters

| Parameter | Description | Required |
| --- | --- | --- |
| BDMAP_KEY_ANDROID | Baidu Location mapKey for the Android platform | Yes |

<a name="c8a8e7b0"></a>

### Features ([detailed API](http://mtlapidocs201908061404.test.app.yyuap.com/0205-location-api))

| Method | Function |
| --- | --- |
| getLocation | Get the current coordinates |
| openLocation | Open a map showing the specified coordinates |

<a name="2ca50cf2"></a>

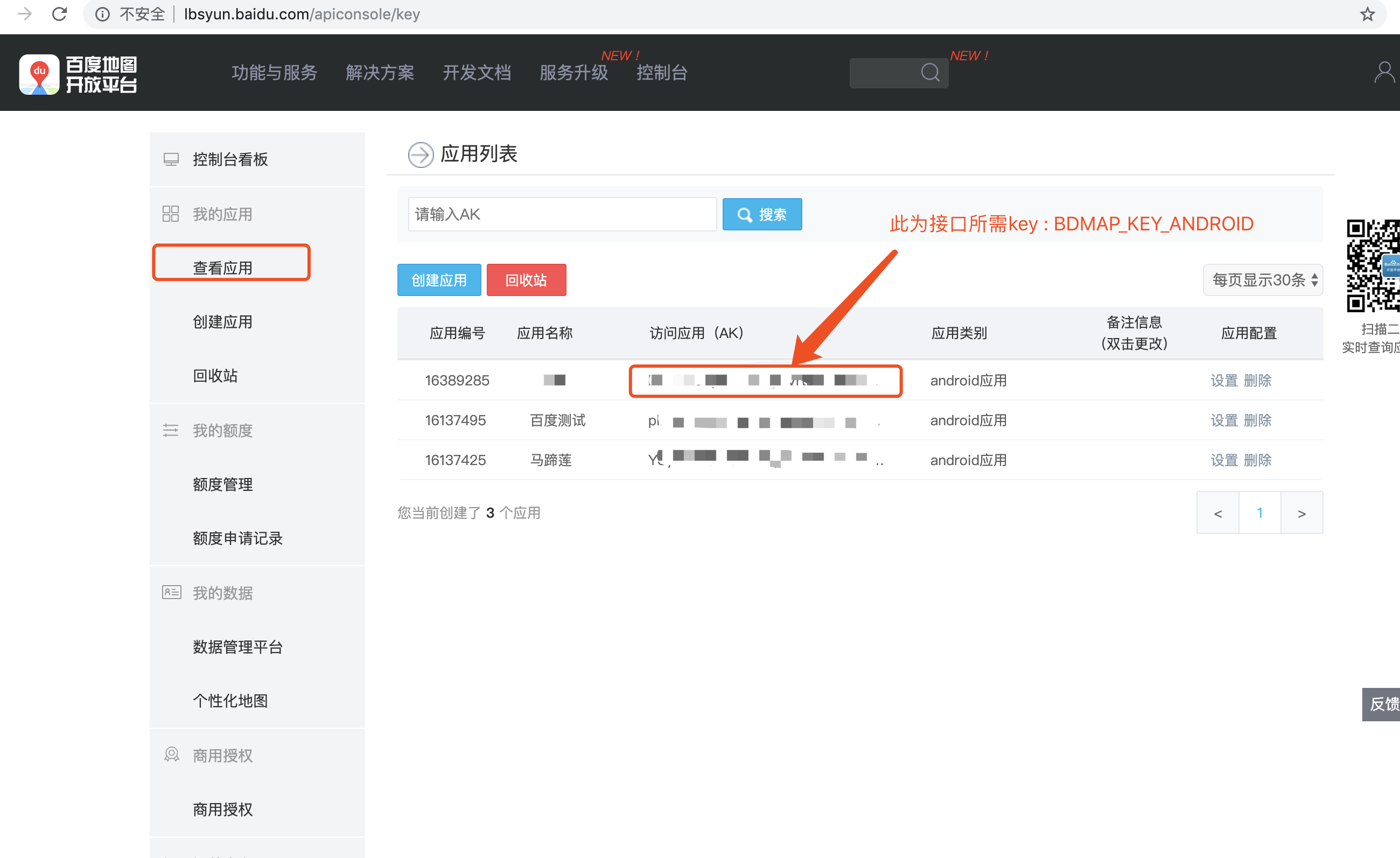

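#### How to obtain the parameter

- Log in to the Baidu Maps Open Platform: [http://lbsyun.baidu.com/](http://lbsyun.baidu.com/)
- Open the console and choose "Create Application", as shown in the figure.
- Fill in the application information: the SHA1 value (the Create Application page links to help for obtaining it; follow those steps) and the package name.
- Once the application is created, open "View Application" to get the interface parameter BDMAP_KEY_ANDROID.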
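
The SHA1 value requested in the steps above is, in the usual Android signing setup, the SHA-1 digest of the signing certificate written as colon-separated uppercase hex. The following is a minimal Python sketch of that formatting only, assuming the certificate has already been exported as DER bytes. The function name `sha1_fingerprint` and the sample bytes are hypothetical and are not part of the plugin or of the Baidu platform.

```python
import hashlib


def sha1_fingerprint(cert_der: bytes) -> str:
    """Return the SHA-1 digest of cert_der as colon-separated uppercase hex."""
    digest = hashlib.sha1(cert_der).hexdigest().upper()
    # Split the 40 hex characters into 20 two-character groups.
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


if __name__ == "__main__":
    # Placeholder bytes stand in for a real DER-encoded certificate (assumption).
    print(sha1_fingerprint(b"example certificate bytes"))
```

In practice the JDK's `keytool -list -v -keystore <your-keystore-file>` prints this fingerprint directly, so the sketch is mainly useful for checking that a value pasted into the console has the expected shape.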

<a name="x9iBG"></a>

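#### Before the parameter takes effect

Because the SHA1 value depends on the app's signing file, you must upload your own packaging keystore file to the build server. For now, our developers ([email protected]) perform the upload; you need to provide the keystore file, its password, the package name, and related details.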

| 29.282609 | 236 | 0.74239 | yue_Hant | 0.467235 |

ce37399f825af3c3b94d6a65aee8b9007f79e970 | 218 | md | Markdown | _watches/M20200507_083141_TLP_2.md | Meteoros-Floripa/meteoros.floripa.br | 7d296fb8d630a4e5fec9ab1a3fb6050420fc0dad | [

"MIT"

] | 5 | 2020-01-22T17:44:06.000Z | 2020-01-26T17:57:58.000Z | _watches/M20200507_083141_TLP_2.md | Meteoros-Floripa/site | 764cf471d85a6b498873610e4f3b30efd1fd9fae | [

"MIT"

] | null | null | null | _watches/M20200507_083141_TLP_2.md | Meteoros-Floripa/site | 764cf471d85a6b498873610e4f3b30efd1fd9fae | [

"MIT"

] | 2 | 2020-05-19T17:06:27.000Z | 2020-09-04T00:00:43.000Z | ---

layout: watch

title: TLP2 - 07/05/2020 - M20200507_083141_TLP_2T.jpg

date: 2020-05-07 08:31:41

permalink: /2020/05/07/watch/M20200507_083141_TLP_2

capture: TLP2/2020/202005/20200506/M20200507_083141_TLP_2T.jpg

---

| 27.25 | 62 | 0.784404 | eng_Latn | 0.041651 |

ce3848c1aad84d2abcaeaf755fe03780fbcab19f | 119 | md | Markdown | Solar Tracker Project/servo_control/README.md | jkuatdsc/IoT | 523db8c94e8e622b7b8e246b479eed4387cc644a | [

"MIT"

] | null | null | null | Solar Tracker Project/servo_control/README.md | jkuatdsc/IoT | 523db8c94e8e622b7b8e246b479eed4387cc644a | [

"MIT"

] | 1 | 2021-03-26T11:23:02.000Z | 2021-11-01T20:19:49.000Z | Solar Tracker Project/servo_control/README.md | jkuatdsc/IoT | 523db8c94e8e622b7b8e246b479eed4387cc644a | [

"MIT"

] | null | null | null | ### Optimize Solar Energy Collection

- Controls the orientation of the solar panel based on the location of the sun

| 39.666667 | 81 | 0.789916 | eng_Latn | 0.992201 |

ce387b12294672f07340eca3eb38acb1f6612cac | 317 | md | Markdown | admin/D-originality-u6251843.md | ShiqinHuo/IQ-Step_board_game | 30583f49dad63d116d85a4c6b3bebe141b36bc7b | [

"MIT"