changes in flenema

This view is limited to 50 files because it contains too many changes.

- .cardboardlint.yml +5 -0

- .circleci/config.yml +53 -0

- .compute +17 -0

- .dockerignore +1 -0

- .github/ISSUE_TEMPLATE.md +19 -0

- .github/PR_TEMPLATE.md +18 -0

- .github/stale.yml +19 -0

- .gitignore +132 -0

- .pylintrc +586 -0

- CODE_OF_CONDUCT.md +19 -0

- CODE_OWNERS.rst +75 -0

- CONTRIBUTING.md +51 -0

- LICENSE.txt +373 -0

- MANIFEST.in +11 -0

- README.md +281 -3

- TTS/.models.json +77 -0

- TTS/__init__.py +0 -0

- TTS/bin/__init__.py +0 -0

- TTS/bin/compute_attention_masks.py +166 -0

- TTS/bin/compute_embeddings.py +130 -0

- TTS/bin/compute_statistics.py +90 -0

- TTS/bin/convert_melgan_tflite.py +32 -0

- TTS/bin/convert_melgan_torch_to_tf.py +116 -0

- TTS/bin/convert_tacotron2_tflite.py +37 -0

- TTS/bin/convert_tacotron2_torch_to_tf.py +213 -0

- TTS/bin/distribute.py +69 -0

- TTS/bin/synthesize.py +218 -0

- TTS/bin/train_encoder.py +274 -0

- TTS/bin/train_glow_tts.py +657 -0

- TTS/bin/train_speedy_speech.py +618 -0

- TTS/bin/train_tacotron.py +731 -0

- TTS/bin/train_vocoder_gan.py +664 -0

- TTS/bin/train_vocoder_wavegrad.py +511 -0

- TTS/bin/train_vocoder_wavernn.py +539 -0

- TTS/bin/tune_wavegrad.py +91 -0

- TTS/server/README.md +65 -0

- TTS/server/__init__.py +0 -0

- TTS/server/conf.json +12 -0

- TTS/server/server.py +116 -0

- TTS/server/static/TTS_circle.png +0 -0

- TTS/server/templates/details.html +131 -0

- TTS/server/templates/index.html +114 -0

- TTS/speaker_encoder/README.md +18 -0

- TTS/speaker_encoder/__init__.py +0 -0

- TTS/speaker_encoder/config.json +103 -0

- TTS/speaker_encoder/dataset.py +169 -0

- TTS/speaker_encoder/losses.py +160 -0

- TTS/speaker_encoder/model.py +112 -0

- TTS/speaker_encoder/requirements.txt +2 -0

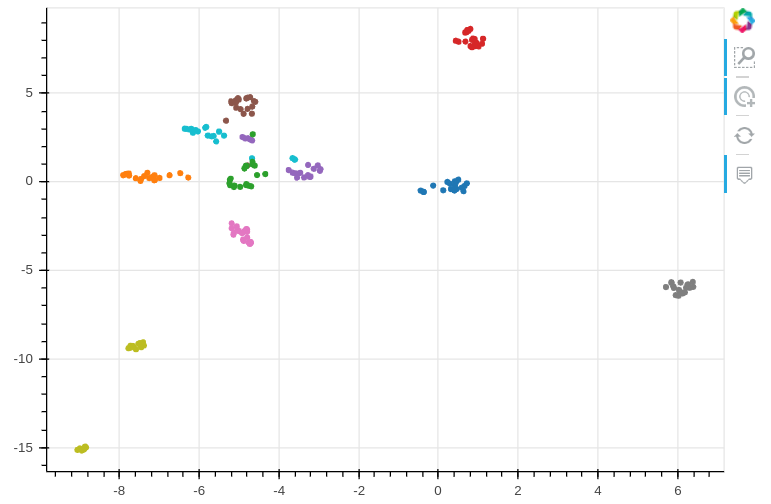

- TTS/speaker_encoder/umap.png +0 -0

.cardboardlint.yml

ADDED

@@ -0,0 +1,5 @@

```yaml
linters:
- pylint:
    # pylintrc: pylintrc
    filefilter: ['- test_*.py', '+ *.py', '- *.npy']
    # exclude:
```
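The `filefilter` entry above controls which files pylint is run on. Below is a minimal sketch of how ordered `+`/`-` rules like these can be evaluated. It rests on assumptions that are not taken from cardboardlint itself: rules are tried in order and the first match decides, patterns are matched against the file's base name with `fnmatch`, and a file that matches no rule is skipped. The function name `is_linted` is hypothetical.

```python
import fnmatch
import os

# Rules copied from .cardboardlint.yml; a leading '+' includes, '-' excludes.
FILEFILTER = ['- test_*.py', '+ *.py', '- *.npy']


def is_linted(path, rules=FILEFILTER):
    """Return True if `path` would be linted under the assumed rule semantics.

    Assumptions: first matching rule wins, matching is done on the base name,
    and files that match no rule are skipped.
    """
    name = os.path.basename(path)
    for rule in rules:
        sign, pattern = rule.split(maxsplit=1)
        if fnmatch.fnmatchcase(name, pattern):
            return sign == '+'
    return False


for p in ['TTS/bin/synthesize.py', 'tests/test_layers.py', 'data/stats.npy', 'README.md']:
    print(p, is_linted(p))
```

Because `test_*.py` is listed before `*.py`, test modules are excluded even though they are also Python files; under first-match semantics the order of the rules matters.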

.circleci/config.yml

ADDED

@@ -0,0 +1,53 @@

```yaml
version: 2

workflows:
  version: 2
  test:
    jobs:
      - test-3.6
      - test-3.7
      - test-3.8

executor: ubuntu-latest

on:
  push:
  pull_request:
    types: [opened, synchronize, reopened]

jobs:
  test-3.6: &test-template
    docker:
      - image: circleci/python:3.6
    resource_class: large
    working_directory: ~/repo
    steps:
      - checkout
      - run: |
          sudo apt update
          sudo apt install espeak git
      - run: sudo pip install --upgrade pip
      - run: sudo pip install -e .
      - run: |
          sudo pip install --quiet --upgrade cardboardlint pylint
          cardboardlinter --refspec ${CIRCLE_BRANCH} -n auto
      - run: nosetests tests --nocapture
      - run: |
          sudo ./tests/test_server_package.sh
          sudo ./tests/test_glow-tts_train.sh
          sudo ./tests/test_server_package.sh
          sudo ./tests/test_tacotron_train.sh
          sudo ./tests/test_vocoder_gan_train.sh
          sudo ./tests/test_vocoder_wavegrad_train.sh
          sudo ./tests/test_vocoder_wavernn_train.sh
          sudo ./tests/test_speedy_speech_train.sh

  test-3.7:
    <<: *test-template
    docker:
      - image: circleci/python:3.7

  test-3.8:
    <<: *test-template
    docker:
      - image: circleci/python:3.8
```
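The `test-3.7` and `test-3.8` jobs reuse the `test-3.6` job through a YAML anchor (`&test-template`) and merge key (`<<: *test-template`). Every key of the template is brought in, and keys written locally (here only `docker`) replace the template's value. The sketch below mimics that shallow merge with plain Python dictionaries instead of a YAML parser. It uses a trimmed-down `steps` list, and `merge_job` is a hypothetical helper.

```python
import copy

# Trimmed-down stand-in for the `test-3.6` job named by the &test-template anchor.
test_template = {
    "docker": [{"image": "circleci/python:3.6"}],
    "resource_class": "large",
    "working_directory": "~/repo",
    "steps": ["checkout", {"run": "nosetests tests --nocapture"}],
}


def merge_job(template, overrides):
    """Emulate `<<: *template` followed by locally written keys.

    The merge is shallow: a local key replaces the template's value as a whole.
    The template is deep-copied so later edits to one job cannot leak into
    another (a YAML loader may instead share the same objects).
    """
    job = copy.deepcopy(template)
    job.update(overrides)
    return job


jobs = {
    "test-3.6": test_template,
    "test-3.7": merge_job(test_template, {"docker": [{"image": "circleci/python:3.7"}]}),
    "test-3.8": merge_job(test_template, {"docker": [{"image": "circleci/python:3.8"}]}),
}

for name, job in jobs.items():
    print(name, job["docker"][0]["image"], job["resource_class"], len(job["steps"]))
```

Only the Docker image differs between the three jobs. The install, lint and test steps are defined once in the anchored job.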

.compute

ADDED

@@ -0,0 +1,17 @@

```bash
#!/bin/bash
yes | apt-get install sox
yes | apt-get install ffmpeg
yes | apt-get install espeak
yes | apt-get install tmux
yes | apt-get install zsh
sh -c "$(curl -fsSL https://raw.githubusercontent.com/robbyrussell/oh-my-zsh/master/tools/install.sh)"
pip3 install https://download.pytorch.org/whl/cu100/torch-1.3.0%2Bcu100-cp36-cp36m-linux_x86_64.whl
sudo sh install.sh
# pip install pytorch==1.7.0+cu100
# python3 setup.py develop
# python3 distribute.py --config_path config.json --data_path /data/ro/shared/data/keithito/LJSpeech-1.1/
# cp -R ${USER_DIR}/Mozilla_22050 ../tmp/
# python3 distribute.py --config_path config_tacotron_gst.json --data_path ../tmp/Mozilla_22050/
# python3 distribute.py --config_path config.json --data_path /data/rw/home/LibriTTS/train-clean-360
# python3 distribute.py --config_path config.json
while true; do sleep 1000000; done
```

.dockerignore

ADDED

@@ -0,0 +1 @@

```
.git/
```

.github/ISSUE_TEMPLATE.md

ADDED

@@ -0,0 +1,19 @@

```markdown
---
name: 'TTS Discourse '
about: Pls consider to use TTS Discourse page.
title: ''
labels: ''
assignees: ''

---
<b>Questions</b> will not be answered here!!

Help is much more valuable if it's shared publicly, so that more people can benefit from it.

Please consider posting on [TTS Discourse](https://discourse.mozilla.org/c/tts) page or matrix [chat room](https://matrix.to/#/!KTePhNahjgiVumkqca:matrix.org?via=matrix.org) if your issue is not directly related to TTS development (Bugs, code updates etc.).

You can also check https://github.com/mozilla/TTS/wiki/FAQ for common questions and answers.

Happy posting!

https://discourse.mozilla.org/c/tts
```

.github/PR_TEMPLATE.md

ADDED

@@ -0,0 +1,18 @@

````markdown
---
name: 'Contribution Guideline '
about: Refer to Contirbution Guideline
title: ''
labels: ''
assignees: ''

---
### Contribution Guideline

Please send your PRs to `dev` branch if it is not directly related to a specific branch.
Before making a Pull Request, check your changes for basic mistakes and style problems by using a linter.
We have cardboardlinter setup in this repository, so for example, if you've made some changes and would like to run the linter on just the changed code, you can use the follow command:

```bash
pip install pylint cardboardlint
cardboardlinter --refspec master
```
````
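Linting "just the changed code" starts from the set of files that differ from the reference branch. The sketch below collects the Python files reported by `git diff --name-only master`. It only illustrates the idea and is not cardboardlint's internal implementation. Both function names are hypothetical, and `changed_python_files` must be run inside a git checkout that contains the given ref.

```python
import subprocess


def python_files_in_diff(diff_output):
    """Keep the .py paths from `git diff --name-only <ref>` output."""
    return [p.strip() for p in diff_output.splitlines() if p.strip().endswith(".py")]


def changed_python_files(ref="master"):
    """List .py files that differ from `ref` (requires a git checkout)."""
    result = subprocess.run(
        ["git", "diff", "--name-only", ref],
        check=True,
        stdout=subprocess.PIPE,
        universal_newlines=True,  # text mode that also works on Python 3.6
    )
    return python_files_in_diff(result.stdout)


print(python_files_in_diff("TTS/bin/synthesize.py\nREADME.md\nTTS/server/server.py\n"))
```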

.github/stale.yml

ADDED

@@ -0,0 +1,19 @@

```yaml
# Number of days of inactivity before an issue becomes stale
daysUntilStale: 60
# Number of days of inactivity before a stale issue is closed
daysUntilClose: 7
# Issues with these labels will never be considered stale
exemptLabels:
  - pinned
  - security
# Label to use when marking an issue as stale
staleLabel: wontfix
# Comment to post when marking an issue as stale. Set to `false` to disable
markComment: >
  This issue has been automatically marked as stale because it has not had
  recent activity. It will be closed if no further activity occurs. Thank you
  for your contributions. You might also look our discourse page for further help.
  https://discourse.mozilla.org/c/tts
# Comment to post when closing a stale issue. Set to `false` to disable
closeComment: false
```
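With these settings, an issue that sees no activity for 60 days is labelled `wontfix` and receives the `markComment`. If it then stays quiet for another 7 days, it is closed without a comment (`closeComment: false`). Issues labelled `pinned` or `security` are never marked. Below is a minimal sketch of that timeline. It assumes one last-activity date per issue and inclusive day boundaries (`>=`), and it illustrates the configuration rather than the stale bot's actual code.

```python
from datetime import date, timedelta

# Values copied from .github/stale.yml.
DAYS_UNTIL_STALE = 60
DAYS_UNTIL_CLOSE = 7
EXEMPT_LABELS = {"pinned", "security"}


def stale_status(last_activity, today, labels=()):
    """Return 'exempt', 'active', 'stale' or 'closed' for one issue.

    Assumes a single last-activity date and inclusive day boundaries.
    """
    if EXEMPT_LABELS & set(labels):
        return "exempt"
    stale_on = last_activity + timedelta(days=DAYS_UNTIL_STALE)
    close_on = stale_on + timedelta(days=DAYS_UNTIL_CLOSE)
    if today >= close_on:
        return "closed"
    if today >= stale_on:
        return "stale"
    return "active"


last = date(2021, 1, 1)
for offset in (30, 60, 67):
    print(offset, stale_status(last, last + timedelta(days=offset)))
print(stale_status(last, last + timedelta(days=90), labels=["security"]))
```

In total, an unlabelled issue with no activity is closed 67 days after its last update.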

.gitignore

ADDED

@@ -0,0 +1,132 @@

```gitignore
WadaSNR/
.idea/
*.pyc
.DS_Store
./__init__.py
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
.hypothesis/

# Translations
*.mo
*.pot

# Django stuff:
*.log
.static_storage/
.media/
local_settings.py

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# pyenv
.python-version

# celery beat schedule file
celerybeat-schedule

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/

# vim
*.swp
*.swm
*.swn
*.swo

# pytorch models
*.pth.tar
result/

# setup.py
version.py

# jupyter dummy files
core

tests/outputs/*
TODO.txt
.vscode/*
data/*
notebooks/data/*
TTS/tts/layers/glow_tts/monotonic_align/core.c
```
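Most entries above are shell-style glob patterns. For instance, `*.py[cod]` uses a character class to match `.pyc`, `.pyo` and `.pyd` files with a single line. Python's `fnmatch` supports the same `*`, `?` and `[...]` wildcards, so it is handy for sanity-checking a pattern against bare file names. It does not implement gitignore-specific rules such as a trailing `/` (directories only), a leading `/` (anchored to the repository root) or `!` (negation). For an authoritative answer inside the repository, `git check-ignore -v <path>` reports the matching rule. The helper name `ignored_by` is hypothetical.

```python
import fnmatch

# A few file-name patterns taken from the .gitignore above.
PATTERNS = ["*.py[cod]", "*$py.class", "*.pth.tar", "*.swp", ".coverage.*"]


def ignored_by(filename, patterns=PATTERNS):
    """Return the patterns that match a bare file name (no directory rules)."""
    return [p for p in patterns if fnmatch.fnmatchcase(filename, p)]


for name in ["model.pyc", "module.pyd", "train.py", "best_model.pth.tar", ".coverage.host1"]:
    print(name, ignored_by(name))
```

`train.py` matches nothing, because `[cod]` requires exactly one more character after `.py`.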

.pylintrc

ADDED

@@ -0,0 +1,586 @@

```ini
[MASTER]

# A comma-separated list of package or module names from where C extensions may
# be loaded. Extensions are loading into the active Python interpreter and may
# run arbitrary code.
extension-pkg-whitelist=

# Add files or directories to the blacklist. They should be base names, not
# paths.
ignore=CVS

# Add files or directories matching the regex patterns to the blacklist. The
# regex matches against base names, not paths.
ignore-patterns=

# Python code to execute, usually for sys.path manipulation such as
# pygtk.require().
#init-hook=

# Use multiple processes to speed up Pylint. Specifying 0 will auto-detect the
# number of processors available to use.
jobs=1

# Control the amount of potential inferred values when inferring a single
# object. This can help the performance when dealing with large functions or
# complex, nested conditions.
limit-inference-results=100

# List of plugins (as comma separated values of python modules names) to load,
# usually to register additional checkers.
load-plugins=

# Pickle collected data for later comparisons.
persistent=yes

# Specify a configuration file.
#rcfile=

# When enabled, pylint would attempt to guess common misconfiguration and emit
# user-friendly hints instead of false-positive error messages.
suggestion-mode=yes

# Allow loading of arbitrary C extensions. Extensions are imported into the
# active Python interpreter and may run arbitrary code.
unsafe-load-any-extension=no


[MESSAGES CONTROL]

# Only show warnings with the listed confidence levels. Leave empty to show
# all. Valid levels: HIGH, INFERENCE, INFERENCE_FAILURE, UNDEFINED.
confidence=

# Disable the message, report, category or checker with the given id(s). You
# can either give multiple identifiers separated by comma (,) or put this
# option multiple times (only on the command line, not in the configuration
# file where it should appear only once). You can also use "--disable=all" to
# disable everything first and then reenable specific checks. For example, if
# you want to run only the similarities checker, you can use "--disable=all
# --enable=similarities". If you want to run only the classes checker, but have
# no Warning level messages displayed, use "--disable=all --enable=classes
# --disable=W".
disable=missing-docstring,
        line-too-long,
        fixme,
        wrong-import-order,
        ungrouped-imports,
        wrong-import-position,
        import-error,
        invalid-name,
        too-many-instance-attributes,
        arguments-differ,
        no-name-in-module,
        no-member,
        unsubscriptable-object,
        print-statement,
        parameter-unpacking,
        unpacking-in-except,
        old-raise-syntax,
        backtick,
        long-suffix,
        old-ne-operator,
        old-octal-literal,
        import-star-module-level,
        non-ascii-bytes-literal,
        raw-checker-failed,
        bad-inline-option,
        locally-disabled,
        file-ignored,
        suppressed-message,
        useless-suppression,
        deprecated-pragma,
        use-symbolic-message-instead,
        useless-object-inheritance,
        too-few-public-methods,
        too-many-branches,
        too-many-arguments,
        too-many-locals,
        too-many-statements,
        apply-builtin,
        basestring-builtin,
        buffer-builtin,
        cmp-builtin,
        coerce-builtin,
        execfile-builtin,
        file-builtin,
        long-builtin,
        raw_input-builtin,
        reduce-builtin,
        standarderror-builtin,
        unicode-builtin,
        xrange-builtin,
        coerce-method,
        delslice-method,
        getslice-method,
        setslice-method,
        no-absolute-import,
        old-division,
        dict-iter-method,
        dict-view-method,
        next-method-called,
        metaclass-assignment,
        indexing-exception,
        raising-string,
        reload-builtin,
        oct-method,
        hex-method,
        nonzero-method,
        cmp-method,
        input-builtin,
        round-builtin,
        intern-builtin,
        unichr-builtin,
        map-builtin-not-iterating,
        zip-builtin-not-iterating,
        range-builtin-not-iterating,
        filter-builtin-not-iterating,
        using-cmp-argument,
        eq-without-hash,
        div-method,
        idiv-method,
        rdiv-method,
        exception-message-attribute,
        invalid-str-codec,
        sys-max-int,
        bad-python3-import,
        deprecated-string-function,
        deprecated-str-translate-call,
        deprecated-itertools-function,
        deprecated-types-field,
        next-method-defined,
        dict-items-not-iterating,
        dict-keys-not-iterating,
        dict-values-not-iterating,
        deprecated-operator-function,
        deprecated-urllib-function,
        xreadlines-attribute,
        deprecated-sys-function,
        exception-escape,
        comprehension-escape,
        duplicate-code

# Enable the message, report, category or checker with the given id(s). You can
# either give multiple identifier separated by comma (,) or put this option
# multiple time (only on the command line, not in the configuration file where
# it should appear only once). See also the "--disable" option for examples.
enable=c-extension-no-member


[REPORTS]

# Python expression which should return a note less than 10 (10 is the highest
# note). You have access to the variables errors warning, statement which
# respectively contain the number of errors / warnings messages and the total
# number of statements analyzed. This is used by the global evaluation report
# (RP0004).
evaluation=10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)

# Template used to display messages. This is a python new-style format string
# used to format the message information. See doc for all details.
#msg-template=

# Set the output format. Available formats are text, parseable, colorized, json
# and msvs (visual studio). You can also give a reporter class, e.g.
# mypackage.mymodule.MyReporterClass.
output-format=text

# Tells whether to display a full report or only the messages.
reports=no

# Activate the evaluation score.
score=yes
```
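The `evaluation` expression in `[REPORTS]` is what produces the score pylint prints at the end of a run when `score=yes`. Each error counts five times as much as a warning, refactor or convention message, and the weighted total is normalized by the number of analyzed statements. A worked example with made-up counts, evaluating the same expression in Python (`pylint_score` is a hypothetical helper):

```python
def pylint_score(error, warning, refactor, convention, statement):
    """Evaluate the `evaluation=` expression from the [REPORTS] section."""
    return 10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)


# Hypothetical counts: 5*2 + 5 + 3 + 7 = 25 weighted messages over 100 statements,
# so 25 / 100 * 10 = 2.5 points are deducted.
print(pylint_score(error=2, warning=5, refactor=3, convention=7, statement=100))  # -> 7.5
```

Nothing in the expression itself keeps the result above 0, so a file with many errors relative to its size can score negative.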

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

[REFACTORING]

|

| 196 |

+

|

| 197 |

+

# Maximum number of nested blocks for function / method body

|

| 198 |

+

max-nested-blocks=5

|

| 199 |

+

|

| 200 |

+

# Complete name of functions that never returns. When checking for

|

| 201 |

+

# inconsistent-return-statements if a never returning function is called then

|

| 202 |

+

# it will be considered as an explicit return statement and no message will be

|

| 203 |

+

# printed.

|

| 204 |

+

never-returning-functions=sys.exit

|

| 205 |

+

|

| 206 |

+

|

| 207 |

+

[LOGGING]

|

| 208 |

+

|

| 209 |

+

# Format style used to check logging format string. `old` means using %

|

| 210 |

+

# formatting, while `new` is for `{}` formatting.

|

| 211 |

+

logging-format-style=old

|

| 212 |

+

|

| 213 |

+

# Logging modules to check that the string format arguments are in logging

|

| 214 |

+

# function parameter format.

|

| 215 |

+

logging-modules=logging

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

[SPELLING]

|

| 219 |

+

|

| 220 |

+

# Limits count of emitted suggestions for spelling mistakes.

|

| 221 |

+

max-spelling-suggestions=4

|

| 222 |

+

|

| 223 |

+

# Spelling dictionary name. Available dictionaries: none. To make it working

|

| 224 |

+

# install python-enchant package..

|

| 225 |

+

spelling-dict=

|

| 226 |

+

|

| 227 |

+

# List of comma separated words that should not be checked.

|

| 228 |

+

spelling-ignore-words=

|

| 229 |

+

|

| 230 |

+

# A path to a file that contains private dictionary; one word per line.

|

| 231 |

+

spelling-private-dict-file=

|

| 232 |

+

|

| 233 |

+

# Tells whether to store unknown words to indicated private dictionary in

|

| 234 |

+

# --spelling-private-dict-file option instead of raising a message.

|

| 235 |

+

spelling-store-unknown-words=no

|

| 236 |

+

|

| 237 |

+

|

| 238 |

+

[MISCELLANEOUS]

|

| 239 |

+

|

| 240 |

+

# List of note tags to take in consideration, separated by a comma.

|

| 241 |

+

notes=FIXME,

|

| 242 |

+

XXX,

|

| 243 |

+

TODO

|

| 244 |

+

|

| 245 |

+

|

| 246 |

+

[TYPECHECK]

|

| 247 |

+

|

| 248 |

+

# List of decorators that produce context managers, such as

# contextlib.contextmanager. Add to this list to register other decorators that
# produce valid context managers.
contextmanager-decorators=contextlib.contextmanager

# List of members which are set dynamically and missed by pylint inference
# system, and so shouldn't trigger E1101 when accessed. Python regular
# expressions are accepted.
generated-members=

# Tells whether missing members accessed in a mixin class should be ignored. A
# mixin class is detected if its name ends with "mixin" (case insensitive).
ignore-mixin-members=yes

# Tells whether to warn about missing members when the owner of the attribute
# is inferred to be None.
ignore-none=yes

# This flag controls whether pylint should warn about no-member and similar
# checks whenever an opaque object is returned when inferring. The inference
# can return multiple potential results while evaluating a Python object, but
# some branches might not be evaluated, which results in partial inference. In
# that case, it might be useful to still emit no-member and other checks for
# the rest of the inferred objects.
ignore-on-opaque-inference=yes

# List of class names for which member attributes should not be checked (useful
# for classes with dynamically set attributes). This supports the use of
# qualified names.
ignored-classes=optparse.Values,thread._local,_thread._local

# List of module names for which member attributes should not be checked
# (useful for modules/projects where namespaces are manipulated during runtime
# and thus existing member attributes cannot be deduced by static analysis). It
# supports qualified module names, as well as Unix pattern matching.
ignored-modules=

# Show a hint with possible names when a member name was not found. Finding the
# hint is based on edit distance.
missing-member-hint=yes

# The maximum edit distance a name may have in order to be considered a
# similar match for a missing member name.
missing-member-hint-distance=1

# The total number of similar names that should be taken into consideration when
# showing a hint for a missing member.
missing-member-max-choices=1

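The `missing-member-hint*` options above control the "did you mean" suggestions pylint attaches to `no-member` errors. As a rough illustration only (not pylint's own code), the sketch below uses plain Levenshtein distance and treats `missing-member-hint-distance` as the largest distance a candidate may have, keeping at most `missing-member-max-choices` suggestions. `edit_distance` and `member_hints` are hypothetical helper names.

```python
from typing import Iterable, List

# Values mirroring missing-member-hint-distance and missing-member-max-choices.
HINT_DISTANCE = 1
MAX_CHOICES = 1


def edit_distance(a: str, b: str) -> int:
    """Classic Levenshtein distance (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def member_hints(missing: str, members: Iterable[str],
                 max_distance: int = HINT_DISTANCE,
                 max_choices: int = MAX_CHOICES) -> List[str]:
    """Return up to max_choices member names within max_distance edits of missing."""
    scored = sorted(
        (edit_distance(missing, name), name)
        for name in members
        if name != missing
    )
    return [name for dist, name in scored if dist <= max_distance][:max_choices]


print(member_hints("apend", ["append", "extend", "insert"]))
```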
[VARIABLES]

# List of additional names supposed to be defined in builtins. Remember that
# you should avoid defining new builtins when possible.
additional-builtins=

# Tells whether unused global variables should be treated as a violation.
allow-global-unused-variables=yes

# List of strings which can identify a callback function by name. A callback
# name must start or end with one of those strings.
callbacks=cb_,
          _cb

# A regular expression matching the name of dummy variables (i.e. expected to
# not be used).
dummy-variables-rgx=_+$|(_[a-zA-Z0-9_]*[a-zA-Z0-9]+?$)|dummy|^ignored_|^unused_

# Argument names that match this expression will be ignored. Defaults to names
# with a leading underscore.
ignored-argument-names=_.*|^ignored_|^unused_

# Tells whether we should check for unused imports in __init__ files.
init-import=no

# List of qualified module names which can have objects that can redefine
# builtins.
redefining-builtins-modules=six.moves,past.builtins,future.builtins,builtins,io

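To see which names `dummy-variables-rgx` and `ignored-argument-names` exempt, the patterns can be tried directly with Python's `re` module. This sketch assumes pylint applies them with `re.match`, i.e. anchored at the start of the name but only anchored at the end where the pattern itself says `$`. Under that assumption the bare `dummy` alternative also accepts `dummy_total`, while `_tmp_` (ending in an underscore) is not treated as a dummy. The helper names are hypothetical.

```python
import re

# Patterns copied from dummy-variables-rgx and ignored-argument-names above.
DUMMY_VARIABLES_RGX = re.compile(
    r"_+$|(_[a-zA-Z0-9_]*[a-zA-Z0-9]+?$)|dummy|^ignored_|^unused_"
)
IGNORED_ARGUMENT_NAMES = re.compile(r"_.*|^ignored_|^unused_")


def is_dummy_variable(name: str) -> bool:
    # re.match anchors at the start of the string only.
    return DUMMY_VARIABLES_RGX.match(name) is not None


def is_ignored_argument(name: str) -> bool:
    return IGNORED_ARGUMENT_NAMES.match(name) is not None


for candidate in ["_", "__", "_tmp", "_tmp_", "unused_value", "dummy_total", "total"]:
    print(f"{candidate!r:16} dummy={is_dummy_variable(candidate)}")
```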
[FORMAT]

# Expected format of line ending, e.g. empty (any line ending), LF or CRLF.
expected-line-ending-format=

# Regexp for a line that is allowed to be longer than the limit.
ignore-long-lines=^\s*(# )?<?https?://\S+>?$

# Number of spaces of indent required inside a hanging or continued line.
indent-after-paren=4

# String used as indentation unit. This is usually "    " (4 spaces) or "\t" (1
# tab).
indent-string='    '

# Maximum number of characters on a single line.
max-line-length=100

# Maximum number of lines in a module.
max-module-lines=1000

# List of optional constructs for which whitespace checking is disabled.
# `dict-separator` is used to allow tabulation in dicts, etc.: {1 : 1,\n222: 2}.
# `trailing-comma` allows a space between comma and closing bracket: (a, ).
# `empty-line` allows space-only lines.
no-space-check=trailing-comma,
               dict-separator

# Allow the body of a class to be on the same line as the declaration if body
# contains a single statement.
single-line-class-stmt=no

# Allow the body of an if to be on the same line as the test if there is no
# else.
single-line-if-stmt=no

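`max-line-length` and `ignore-long-lines` work together: a line over 100 characters is reported unless it matches the URL pattern. The sketch below approximates that rule under the assumption that the limit is measured on the line without its newline and that the regex is tested against the whole line; pylint's exact handling may differ. `is_too_long` is a hypothetical helper.

```python
import re

# Values mirroring max-line-length and ignore-long-lines above.
MAX_LINE_LENGTH = 100
IGNORE_LONG_LINES = re.compile(r"^\s*(# )?<?https?://\S+>?$")


def is_too_long(line: str, limit: int = MAX_LINE_LENGTH) -> bool:
    """Flag a line longer than limit unless it is only a URL (optionally commented)."""
    text = line.rstrip("\r\n")
    if len(text) <= limit:
        return False
    return IGNORE_LONG_LINES.search(text) is None


print(is_too_long("# https://example.com/" + "p" * 150))
print(is_too_long("# see https://example.com/" + "p" * 150))
```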
[SIMILARITIES]

# Ignore comments when computing similarities.
ignore-comments=yes

# Ignore docstrings when computing similarities.
ignore-docstrings=yes

# Ignore imports when computing similarities.
ignore-imports=no

# Minimum number of lines in a similarity.
min-similarity-lines=4

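The `[SIMILARITIES]` settings mean that runs of at least four identical lines, compared after comments are dropped, count as duplicate code. The toy sketch below illustrates that idea by comparing sliding windows of normalized lines; it is not pylint's algorithm. Splitting on `#` also truncates string literals that contain `#`, and docstrings and imports are not handled. All names are hypothetical.

```python
from typing import List, Set, Tuple

MIN_SIMILARITY_LINES = 4  # mirrors min-similarity-lines above


def normalize(source: str) -> List[str]:
    """Drop '#' comments and blank lines (a naive take on ignore-comments=yes)."""
    lines = []
    for raw in source.splitlines():
        code = raw.split("#", 1)[0].strip()
        if code:
            lines.append(code)
    return lines


def windows(lines: List[str], size: int) -> Set[Tuple[str, ...]]:
    """All runs of `size` consecutive lines."""
    return {tuple(lines[i:i + size]) for i in range(len(lines) - size + 1)}


def shared_blocks(a: str, b: str, size: int = MIN_SIMILARITY_LINES) -> Set[Tuple[str, ...]]:
    """Blocks of `size` normalized lines that appear in both sources."""
    return windows(normalize(a), size) & windows(normalize(b), size)


first = "x = 1\ny = 2  # first\nz = x + y\nprint(z)\n"
second = "# header\nx = 1\ny = 2\nz = x + y\nprint(z)  # done\n"
print(shared_blocks(first, second))
```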
[BASIC]

# Naming style matching correct argument names.
argument-naming-style=snake_case

# Regular expression matching correct argument names. Overrides
# argument-naming-style.
argument-rgx=[a-z_][a-z0-9_]{0,30}$

# Naming style matching correct attribute names.
attr-naming-style=snake_case

# Regular expression matching correct attribute names. Overrides
# attr-naming-style.
#attr-rgx=

# Bad variable names which should always be refused, separated by a comma.
bad-names=

# Naming style matching correct class attribute names.
class-attribute-naming-style=any

# Regular expression matching correct class attribute names. Overrides
# class-attribute-naming-style.
#class-attribute-rgx=

# Naming style matching correct class names.
class-naming-style=PascalCase

# Regular expression matching correct class names. Overrides
# class-naming-style.
#class-rgx=

# Naming style matching correct constant names.
const-naming-style=UPPER_CASE

# Regular expression matching correct constant names. Overrides
# const-naming-style.
#const-rgx=

# Minimum line length for functions/classes that require docstrings; shorter
# ones are exempt.
docstring-min-length=-1

# Naming style matching correct function names.
function-naming-style=snake_case

# Regular expression matching correct function names. Overrides
# function-naming-style.
#function-rgx=

# Good variable names which should always be accepted, separated by a comma.
good-names=i,
           j,
           k,
           x,
           ex,
           Run,
           _

# Include a hint for the correct naming format with invalid-name.
include-naming-hint=no

# Naming style matching correct inline iteration names.
inlinevar-naming-style=any

# Regular expression matching correct inline iteration names. Overrides
# inlinevar-naming-style.
#inlinevar-rgx=

# Naming style matching correct method names.
method-naming-style=snake_case

# Regular expression matching correct method names. Overrides
# method-naming-style.
#method-rgx=

# Naming style matching correct module names.
module-naming-style=snake_case

# Regular expression matching correct module names. Overrides
# module-naming-style.
#module-rgx=

# Colon-delimited sets of names that determine each other's naming style when
# the name regexes allow several styles.
name-group=

# Regular expression which should only match function or class names that do
# not require a docstring.
no-docstring-rgx=^_

# List of decorators that produce properties, such as abc.abstractproperty. Add
# to this list to register other decorators that produce valid properties.
# These decorators are taken into consideration only for invalid-name.
property-classes=abc.abstractproperty

# Naming style matching correct variable names.
variable-naming-style=snake_case

# Regular expression matching correct variable names. Overrides
# variable-naming-style.
variable-rgx=[a-z_][a-z0-9_]{0,30}$

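With the overriding pattern `[a-z_][a-z0-9_]{0,30}$`, argument and variable names must start with a lowercase letter or underscore, may contain only lowercase letters, digits and underscores, and are capped at 31 characters; names in `good-names` are accepted regardless. The sketch assumes pylint checks `good-names` first and applies the regex with `re.match`. `is_accepted_name` is a hypothetical helper.

```python
import re

# argument-rgx and variable-rgx above share this pattern; good-names is an allow-list.
NAME_RGX = re.compile(r"[a-z_][a-z0-9_]{0,30}$")
GOOD_NAMES = {"i", "j", "k", "x", "ex", "Run", "_"}


def is_accepted_name(name: str) -> bool:
    """True if an argument/variable name is in good-names or matches the regex."""
    return name in GOOD_NAMES or NAME_RGX.match(name) is not None


for candidate in ["batch_size", "learningRate", "Run", "a" * 32]:
    print(candidate, is_accepted_name(candidate))
```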
[STRING]

# This flag controls whether the implicit-str-concat-in-sequence check should
# generate a warning on implicit string concatenation in sequences defined over
# several lines.
check-str-concat-over-line-jumps=no

[IMPORTS]

# Allow wildcard imports from modules that define __all__.
allow-wildcard-with-all=no

# Analyse import fallback blocks. This can be used to support both Python 2 and
# 3 compatible code, which means that the block might have code that exists
# only in one interpreter or the other, leading to false positives when analysed.
analyse-fallback-blocks=no

# Deprecated modules which should not be used, separated by a comma.
deprecated-modules=optparse,tkinter.tix

# Create a graph of external dependencies in the given file (report RP0402 must
# not be disabled).
ext-import-graph=

# Create a graph of all (i.e. internal and external) dependencies in the
# given file (report RP0402 must not be disabled).
import-graph=

# Create a graph of internal dependencies in the given file (report RP0402 must
# not be disabled).
int-import-graph=

# Force import order to recognize a module as part of the standard
# compatibility libraries.
known-standard-library=

# Force import order to recognize a module as part of a third party library.
known-third-party=enchant

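`deprecated-modules` lists modules whose import should be reported. The sketch below is a simplified, hypothetical approximation using the standard `ast` module, not pylint's checker: it flags `import optparse` and also treats `from tkinter import tix` as importing `tkinter.tix`. Relative imports are skipped, and the order of results follows `ast.walk`, not source position for nested imports.

```python
import ast
from typing import List

# Mirrors deprecated-modules above.
DEPRECATED_MODULES = {"optparse", "tkinter.tix"}


def deprecated_imports(source: str) -> List[str]:
    """Return deprecated module names imported anywhere in `source`."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.extend(alias.name for alias in node.names
                         if alias.name in DEPRECATED_MODULES)
        elif isinstance(node, ast.ImportFrom) and node.module:
            if node.module in DEPRECATED_MODULES:
                found.append(node.module)
            else:
                # `from tkinter import tix` imports the submodule tkinter.tix.
                found.extend(f"{node.module}.{alias.name}" for alias in node.names
                             if f"{node.module}.{alias.name}" in DEPRECATED_MODULES)
    return found


print(deprecated_imports("import optparse\nimport argparse\nfrom tkinter import tix\n"))
```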
[CLASSES]

# List of method names used to declare (i.e. assign) instance attributes.
defining-attr-methods=__init__,
                      __new__,
                      setUp

# List of member names, which should be excluded from the protected access
# warning.
exclude-protected=_asdict,
                  _fields,
                  _replace,
                  _source,
                  _make

# List of valid names for the first argument in a class method.
valid-classmethod-first-arg=cls

# List of valid names for the first argument in a metaclass class method.
valid-metaclass-classmethod-first-arg=cls

[DESIGN]

# Maximum number of arguments for function / method.
max-args=5

# Maximum number of attributes for a class (see R0902).
max-attributes=7

# Maximum number of boolean expressions in an if statement.
max-bool-expr=5

# Maximum number of branches for function / method body.
max-branches=12

# Maximum number of locals for function / method body.
max-locals=15

# Maximum number of parents for a class (see R0901).
max-parents=7

# Maximum number of public methods for a class (see R0904).
max-public-methods=20

# Maximum number of return / yield for function / method body.
max-returns=6

# Maximum number of statements in function / method body.
max-statements=50

# Minimum number of public methods for a class (see R0903).
min-public-methods=2

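A quick way to see what `max-args=5` means for a module is to count each function's parameters with the standard `ast` module. This is a simplified, hypothetical sketch: it counts positional-only, regular and keyword-only parameters (including `self`), skips names matching `ignored-argument-names`, and does not count `*args`/`**kwargs`; pylint's exact counting rules may differ between versions.

```python
import ast
import re
from typing import List

MAX_ARGS = 5  # mirrors max-args above
# Assumption: arguments matching ignored-argument-names do not count.
IGNORED_ARGUMENT_NAMES = re.compile(r"_.*|^ignored_|^unused_")


def too_many_arguments(source: str, max_args: int = MAX_ARGS) -> List[str]:
    """Names of functions whose counted parameters exceed max_args."""
    offenders = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = node.args
            names = [a.arg for a in args.posonlyargs + args.args + args.kwonlyargs]
            counted = [n for n in names if not IGNORED_ARGUMENT_NAMES.match(n)]
            if len(counted) > max_args:
                offenders.append(node.name)
    return offenders


SAMPLE = (
    "def six(a, b, c, d, e, f):\n    pass\n"
    "class C:\n    def method(self, a, b, c, d):\n        pass\n"
    "def padded(a, b, c, d, e, _unused):\n    pass\n"
)
print(too_many_arguments(SAMPLE))
```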
[EXCEPTIONS]

# Exceptions that will emit a warning when being caught. Defaults to
# "BaseException, Exception".
overgeneral-exceptions=BaseException,
                       Exception

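Multi-line values such as `good-names`, `exclude-protected` or `overgeneral-exceptions` rely on INI continuation lines, which must be indented relative to the option name. Assuming the file is read by a parser that follows the same rules as Python's `configparser` (an assumption; pylint's loader is not shown here), the sketch parses an excerpt of this file and shows that an unindented continuation line becomes a parse error. `EXCERPT` and `read_list` are illustrative names.

```python
import configparser
from typing import List

# An excerpt of this file; continuation lines are indented.
EXCERPT = """
[BASIC]
good-names=i,
           j,
           k,
           x,
           ex,
           Run,
           _

[EXCEPTIONS]
overgeneral-exceptions=BaseException,
                       Exception
"""


def read_list(text: str, section: str, option: str) -> List[str]:
    """Parse an INI excerpt and split a comma-separated, possibly multi-line option."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    raw = parser[section][option]
    return [item.strip() for item in raw.split(",") if item.strip()]


print(read_list(EXCERPT, "BASIC", "good-names"))

try:
    # Without indentation, "j," is read as a malformed option line.
    read_list("[BASIC]\ngood-names=i,\nj,\n", "BASIC", "good-names")
except configparser.ParsingError as error:
    print("parse error:", type(error).__name__)
```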

CODE_OF_CONDUCT.md

ADDED


@@ -0,0 +1,19 @@
