TokenButler: predict token importance for every attention head across the transformer from the first layer itself, enabling fine-grained token sparsity.

YASH AKHAURI

akhauriyash

Recent Activity

authored

a paper

about 9 hours ago

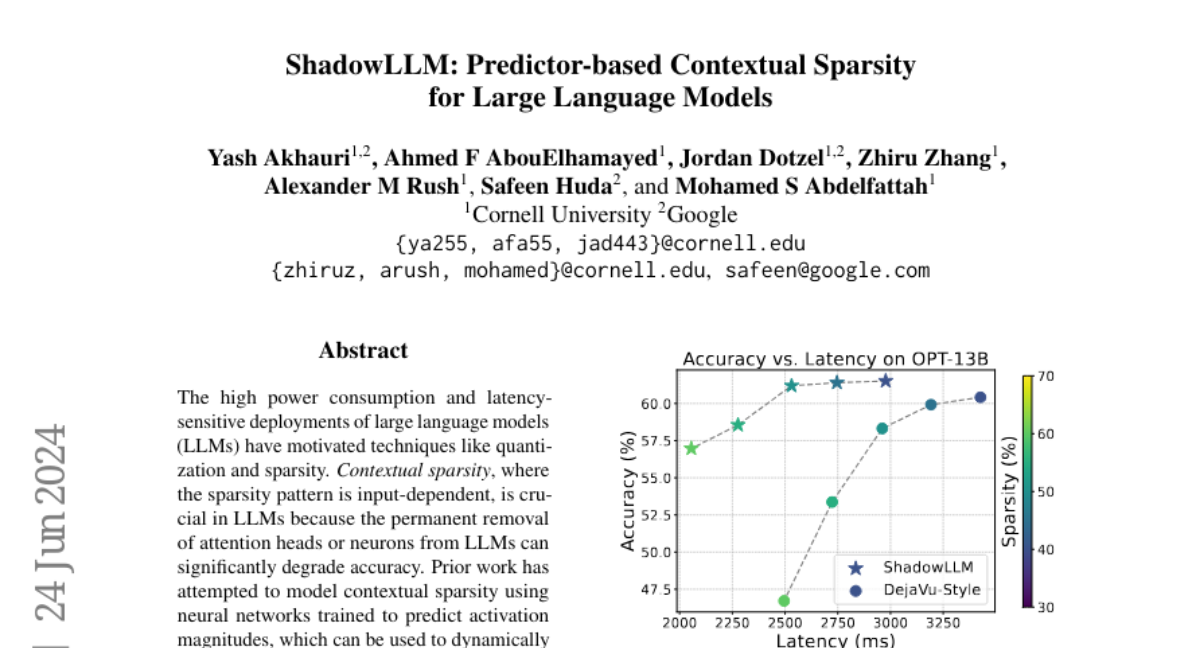

ShadowLLM: Predictor-based Contextual Sparsity for Large Language Models

authored

a paper

about 9 hours ago

Attamba: Attending To Multi-Token States

authored

a paper

about 9 hours ago

TokenButler: Token Importance is Predictable

Collections (1)

Models (5)

All five models are tagged for Text Generation:

- akhauriyash/DeepSeek-R1-Distill-Llama-8B-Butler
- akhauriyash/Llama-3.2-3B-Butler
- akhauriyash/Llama-3.2-1B-Butler
- akhauriyash/Llama-3.1-8B-Butler
- akhauriyash/Llama-2-7b-hf-Butler
