Upload 96 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +10 -0

- Attention/heatmap/0007_18.nii.gz +3 -0

- Attention/heatmap/0007_18.png +0 -0

- Attention/heatmap/0007_19.nii.gz +3 -0

- Attention/heatmap/0007_19.png +0 -0

- Attention/heatmap/0007_20.nii.gz +3 -0

- Attention/heatmap/0007_20.png +0 -0

- Attention/heatmap/0007_21.nii.gz +3 -0

- Attention/heatmap/0007_21.png +0 -0

- Attention/heatmap/0007_22.nii.gz +3 -0

- Attention/heatmap/0007_22.png +0 -0

- Attention/heatmap/0007_23.nii.gz +3 -0

- Attention/heatmap/0007_23.png +0 -0

- LICENSE +21 -0

- README.md +202 -3

- data/__init__.py +93 -0

- data/aligned_dataset.py +300 -0

- data/base_dataset.py +169 -0

- data/image_folder.py +65 -0

- data/mask_extract.py +346 -0

- data/vertebra_data.json +1468 -0

- datasets/raw/0007/0007.json +50 -0

- datasets/raw/0007/0007.nii.gz +3 -0

- datasets/raw/0007/0007_msk.nii.gz +3 -0

- datasets/straightened/CT/0007_18.nii.gz +3 -0

- datasets/straightened/CT/0007_19.nii.gz +3 -0

- datasets/straightened/CT/0007_20.nii.gz +3 -0

- datasets/straightened/CT/0007_21.nii.gz +3 -0

- datasets/straightened/CT/0007_22.nii.gz +3 -0

- datasets/straightened/CT/0007_23.nii.gz +3 -0

- datasets/straightened/label/0007_18.nii.gz +3 -0

- datasets/straightened/label/0007_19.nii.gz +3 -0

- datasets/straightened/label/0007_20.nii.gz +3 -0

- datasets/straightened/label/0007_21.nii.gz +3 -0

- datasets/straightened/label/0007_22.nii.gz +3 -0

- datasets/straightened/label/0007_23.nii.gz +3 -0

- eval_3d_sagittal_twostage.py +263 -0

- evaluation/RHLV_quantification.py +212 -0

- evaluation/RHLV_quantification_coronal.py +218 -0

- evaluation/SVM_grading.py +96 -0

- evaluation/SVM_grading_2.5d.py +100 -0

- evaluation/generation_eval_coronal.py +175 -0

- evaluation/generation_eval_sagittal.py +165 -0

- images/SHRM_and_HGAM.png +3 -0

- images/attention.png +3 -0

- images/comparison_with_others.png +3 -0

- images/distribution.png +3 -0

- images/mask.png +3 -0

- images/network.png +3 -0

- images/our_method.png +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,13 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

images/attention.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

images/comparison_with_others.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

images/distribution.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

images/mask.png filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

images/network.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

images/our_method.png filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

images/rhlv.png filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

images/SHRM_and_HGAM.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

images/traditional_image_inpaint.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

images/workflow.png filter=lfs diff=lfs merge=lfs -text

|

Attention/heatmap/0007_18.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

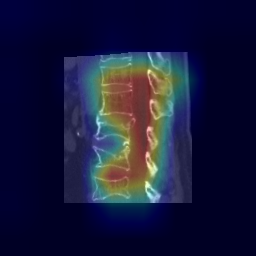

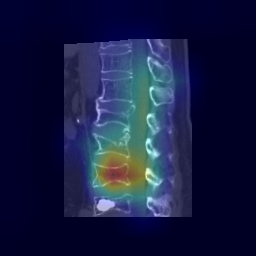

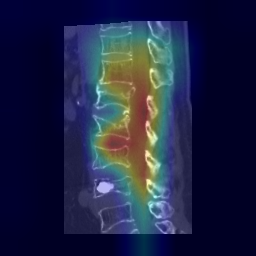

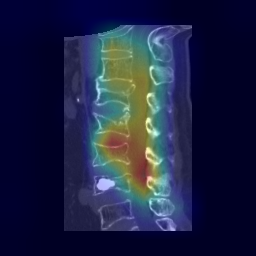

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5c70fe8e6b89d3e406feed30c961de206ebfc17d9f71348e25f6831fdcf228f4

|

| 3 |

+

size 5250286

|

Attention/heatmap/0007_18.png

ADDED

|

Attention/heatmap/0007_19.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e563ebb27cd6259cd98e9c3f99f4ea3c90cfe7a48226b86f69af5cae7215ce11

|

| 3 |

+

size 4993704

|

Attention/heatmap/0007_19.png

ADDED

|

Attention/heatmap/0007_20.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3693893d1a7ccfa83359291b8784f6c12dca2601c0d081df31c31ef062e758a1

|

| 3 |

+

size 5364097

|

Attention/heatmap/0007_20.png

ADDED

|

Attention/heatmap/0007_21.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:16dcc11eb548ebdbfcc84fccb826a5707b987379b32d10e05056beba6db3fb5a

|

| 3 |

+

size 5114208

|

Attention/heatmap/0007_21.png

ADDED

|

Attention/heatmap/0007_22.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e0a8da6028142d200104a8f747a8acaac39499d36c5a64f982d190e3f9fb194c

|

| 3 |

+

size 4997935

|

Attention/heatmap/0007_22.png

ADDED

|

Attention/heatmap/0007_23.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a4d9b6c372b30477a4875dec40409cde9f0112df8359521942c5f95a7990d0ee

|

| 3 |

+

size 4989910

|

Attention/heatmap/0007_23.png

ADDED

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2025 Qi Zhang

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,3 +1,202 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# HealthiVert-GAN: Pseudo-Healthy Vertebral Image Synthesis for Interpretable Compression Fracture Grading

|

| 2 |

+

|

| 3 |

+

[](LICENSE)

|

| 4 |

+

|

| 5 |

+

**HealthiVert-GAN** is a novel framework for synthesizing pseudo-healthy vertebral CT images from fractured vertebrae. By simulating pre-fracture states, it enables interpretable quantification of vertebral compression fractures (VCFs) through **Relative Height Loss of Vertebrae (RHLV)**. The model integrates a two-stage GAN architecture with anatomical consistency modules, achieving state-of-the-art performance on both public and private datasets.

|

| 6 |

+

|

| 7 |

+

---

|

| 8 |

+

|

| 9 |

+

## 🚀 Key Features

|

| 10 |

+

- **Two-Stage Synthesis**: Coarse-to-fine generation with 2.5D sagittal/coronal fusion.

|

| 11 |

+

- **Anatomic Modules**:

|

| 12 |

+

- **Edge-Enhancing Module (EEM)**: Captures precise vertebral morphology.

|

| 13 |

+

- **Self-adaptive Height Restoration Module (SHRM)**: Predicts healthy vertebral height adaptively.

|

| 14 |

+

- **HealthiVert-Guided Attention Module (HGAM)**: Focuses on non-fractured regions via Grad-CAM++.

|

| 15 |

+

- **Iterative Synthesis**: Generates adjacent vertebrae first to minimize fracture interference.

|

| 16 |

+

- **RHLV Quantification**: Measures height loss in anterior/middle/posterior regions for SVM-based Genant grading.

|

| 17 |

+

|

| 18 |

+

---

|

| 19 |

+

|

| 20 |

+

## 🛠️ Architecture

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

### Workflow

|

| 25 |

+

1. **Preprocessing**:

|

| 26 |

+

- **Spine Straightening**: Align vertebrae vertically using SCNet segmentation.

|

| 27 |

+

- **De-pedicle**: Remove vertebral arches for body-focused analysis.

|

| 28 |

+

- **Masking**: Replace target vertebra with a fixed-height mask (40mm).

|

| 29 |

+

|

| 30 |

+

2. **Two-Stage Generation**:

|

| 31 |

+

- **Coarse Generator**: Outputs initial CT and segments adjacent vertebrae.

|

| 32 |

+

- **Refinement Generator**: Enhances details with contextual attention and edge loss.

|

| 33 |

+

|

| 34 |

+

3. **Iterative Synthesis**:

|

| 35 |

+

- Step 1: Synthesize adjacent vertebrae.

|

| 36 |

+

- Step 2: Generate target vertebra using Step 1 results.

|

| 37 |

+

|

| 38 |

+

4. **RHLV Calculation**:

|

| 39 |

+

```math

|

| 40 |

+

RHLV = \frac{H_{syn} - H_{ori}}{H_{syn}}

|

| 41 |

+

```

|

| 42 |

+

Segments vertebra into anterior/middle/posterior regions for detailed analysis.

|

| 43 |

+

|

| 44 |

+

**SVM Classification**: Uses RHLV values to classify fractures into mild/moderate/severe.

|

| 45 |

+

|

| 46 |

+

### 🔑 Key Contributions

|

| 47 |

+

|

| 48 |

+

1. **Interpretable Quantification Beyond Black-Box Models**

|

| 49 |

+

Traditional end-to-end fracture classification models suffer from class imbalance and lack interpretability. HealthiVert-GAN addresses these by synthesizing pseudo-healthy vertebrae and quantifying height loss (RHLV) between generated and original vertebrae. This approach achieves superior performance (e.g., **72.3% Macro-F1** on Verse2019) while providing transparent metrics for clinical decisions.

|

| 50 |

+

|

| 51 |

+

2. **Height Loss Distribution Mapping for Surgical Planning**

|

| 52 |

+

HealthiVert-GAN generates cross-sectional height loss heatmaps that visualize compression patterns (wedge/biconcave/crush fractures). Clinicians can use these maps to assess fracture stability and plan interventions (e.g., vertebroplasty) with precision unmatched by single-slice methods.

|

| 53 |

+

|

| 54 |

+

3. **Anatomic Prior Integration**

|

| 55 |

+

Unlike conventional inpainting models, HealthiVert-GAN introduces adjacent vertebrae height variations as prior knowledge. The **Self-adaptive Height Restoration Module (SHRM)** dynamically adjusts generated vertebral heights based on neighboring healthy vertebrae, improving both interpretability and anatomic consistency.

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

---

|

| 59 |

+

|

| 60 |

+

## 🚀 Quick Start

|

| 61 |

+

|

| 62 |

+

### Installation

|

| 63 |

+

|

| 64 |

+

```bash

|

| 65 |

+

git clone https://github.com/zhibaishouheilab/HealthiVert-GAN.git

|

| 66 |

+

cd HealthiVert-GAN

|

| 67 |

+

pip install -r requirements.txt # PyTorch, NiBabel, SimpleITK, OpenCV

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

### Data Preparation

|

| 71 |

+

|

| 72 |

+

#### Dataset Structure

|

| 73 |

+

Organize data as:

|

| 74 |

+

|

| 75 |

+

```

|

| 76 |

+

/dataset/

|

| 77 |

+

├── raw

|

| 78 |

+

├── 0001/

|

| 79 |

+

│ ├── 0001.nii.gz # Original CT

|

| 80 |

+

│ └── 0001_msk.nii.gz # Vertebrae segmentation

|

| 81 |

+

└── 0002/

|

| 82 |

+

├── 0002.nii.gz

|

| 83 |

+

└── 0002_msk.nii.gz

|

| 84 |

+

```

|

| 85 |

+

**Note**: You have to segment the vertebra firstly. Refer to [CTSpine1K-nnUNet](https://github.com/MIRACLE-Center/CTSpine1K) to obtain how to segment using nnU-Net.

|

| 86 |

+

|

| 87 |

+

#### Preprocessing

|

| 88 |

+

|

| 89 |

+

**Spine Straightening**:

|

| 90 |

+

|

| 91 |

+

```bash

|

| 92 |

+

python straighten/location_json_local.py # Generate vertebral centroids

|

| 93 |

+

python straighten/straighten_mask_3d.py # Output: ./dataset/straightened/

|

| 94 |

+

```

|

| 95 |

+

|

| 96 |

+

**Attention Map Generation**:

|

| 97 |

+

|

| 98 |

+

```bash

|

| 99 |

+

python Attention/grad_CAM_3d_sagittal.py # Output: ./Attention/heatmap/

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

### Training

|

| 103 |

+

|

| 104 |

+

**Configure JSON**:

|

| 105 |

+

|

| 106 |

+

Update `vertebra_data.json` with patient IDs, labels, and paths.

|

| 107 |

+

|

| 108 |

+

**Train Model**:

|

| 109 |

+

|

| 110 |

+

```bash

|

| 111 |

+

python train.py \

|

| 112 |

+

--dataroot ./dataset/straightened \

|

| 113 |

+

--name HealthiVert_experiment \

|

| 114 |

+

--model pix2pix \

|

| 115 |

+

--direction BtoA \

|

| 116 |

+

--batch_size 16 \

|

| 117 |

+

--n_epochs 1000

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

Checkpoints saved in `./checkpoints/HealthiVert_experiment`.

|

| 121 |

+

The pretrained weights will be released later.

|

| 122 |

+

|

| 123 |

+

### Inference

|

| 124 |

+

|

| 125 |

+

**Generate Pseudo-Healthy Vertebrae**:

|

| 126 |

+

|

| 127 |

+

```bash

|

| 128 |

+

python eval_3d_sagittal_twostage.py # define the parameters in the code file

|

| 129 |

+

```

|

| 130 |

+

|

| 131 |

+

Outputs: `./output/CT_fake/` and `./output/label_fake/`.

|

| 132 |

+

|

| 133 |

+

**Fracture Grading**

|

| 134 |

+

|

| 135 |

+

**Calculate RHLV**:

|

| 136 |

+

|

| 137 |

+

```bash

|

| 138 |

+

python evaluation/RHLV_quantification.py

|

| 139 |

+

```

|

| 140 |

+

|

| 141 |

+

**Train SVM Classifier**:

|

| 142 |

+

|

| 143 |

+

```bash

|

| 144 |

+

python evaluation/SVM_grading.py

|

| 145 |

+

```

|

| 146 |

+

|

| 147 |

+

**Evaluate generation results**:

|

| 148 |

+

```bash

|

| 149 |

+

python evaluation/generation_eval_sagittal.py

|

| 150 |

+

```

|

| 151 |

+

---

|

| 152 |

+

|

| 153 |

+

## 📊 Results

|

| 154 |

+

|

| 155 |

+

### Qualitative Comparison

|

| 156 |

+

The generation visulization of different masking strategies.

|

| 157 |

+

|

| 158 |

+

|

| 159 |

+

The visulization heatmap of vertebral height loss distribution in axial view, and the curve of height loss.

|

| 160 |

+

|

| 161 |

+

|

| 162 |

+

### Quantitative Performance (Verse2019 Dataset)

|

| 163 |

+

|

| 164 |

+

| Metric | HealthiVert-GAN | AOT-GAN |3D SupCon-SENet|

|

| 165 |

+

|-------------|-----------------|--------------|------------------|

|

| 166 |

+

| Macro-P | 0.727 | 0.710 | 0.710 |

|

| 167 |

+

| Macro-R | 0.753 | 0.707 | 0.636 |

|

| 168 |

+

| Macro-F1 | 0.723 | 0.692 | 0.667 |

|

| 169 |

+

|

| 170 |

+

Comparison model codes:

|

| 171 |

+

[AOT-GAN](https://github.com/researchmm/AOT-GAN-for-Inpainting)

|

| 172 |

+

[3D SupCon-SENet](https://github.com/wxwxwwxxx/VertebralFractureGrading)

|

| 173 |

+

|

| 174 |

+

---

|

| 175 |

+

|

| 176 |

+

## 📜 Citation

|

| 177 |

+

|

| 178 |

+

```bibtex

|

| 179 |

+

@misc{zhang2025healthivertgannovelframeworkpseudohealthy,

|

| 180 |

+

title={HealthiVert-GAN: A Novel Framework of Pseudo-Healthy Vertebral Image Synthesis for Interpretable Compression Fracture Grading},

|

| 181 |

+

author={Qi Zhang and Shunan Zhang and Ziqi Zhao and Kun Wang and Jun Xu and Jianqi Sun},

|

| 182 |

+

year={2025},

|

| 183 |

+

eprint={2503.05990},

|

| 184 |

+

archivePrefix={arXiv},

|

| 185 |

+

primaryClass={eess.IV},

|

| 186 |

+

url={https://arxiv.org/abs/2503.05990},

|

| 187 |

+

}

|

| 188 |

+

```

|

| 189 |

+

|

| 190 |

+

## 📧 Contact

|

| 191 |

+

If you have any questions about the codes or paper, please let us know via [[email protected]]([email protected]).

|

| 192 |

+

|

| 193 |

+

---

|

| 194 |

+

|

| 195 |

+

## 🙇 Acknowledgment

|

| 196 |

+

- Thank Febian's [nnUnet](https://github.com/MIC-DKFZ/nnUNet).

|

| 197 |

+

- Thank Deng's shared dataset [CTSpine 1K](https://github.com/MIRACLE-Center/CTSpine1K?tab=readme-ov-file) and their pretrained nnUNet's weights.

|

| 198 |

+

- Thank [NeuroML](https://github.com/neuro-ml/straighten) that released the spine straightening algorithm.

|

| 199 |

+

|

| 200 |

+

## 📄 License

|

| 201 |

+

|

| 202 |

+

This project is licensed under the MIT License. See [LICENSE](LICENSE) for details.

|

data/__init__.py

ADDED

|

@@ -0,0 +1,93 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""This package includes all the modules related to data loading and preprocessing

|

| 2 |

+

|

| 3 |

+

To add a custom dataset class called 'dummy', you need to add a file called 'dummy_dataset.py' and define a subclass 'DummyDataset' inherited from BaseDataset.

|

| 4 |

+

You need to implement four functions:

|

| 5 |

+

-- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

|

| 6 |

+

-- <__len__>: return the size of dataset.

|

| 7 |

+

-- <__getitem__>: get a data point from data loader.

|

| 8 |

+

-- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

|

| 9 |

+

|

| 10 |

+

Now you can use the dataset class by specifying flag '--dataset_mode dummy'.

|

| 11 |

+

See our template dataset class 'template_dataset.py' for more details.

|

| 12 |

+

"""

|

| 13 |

+

import importlib

|

| 14 |

+

import torch.utils.data

|

| 15 |

+

from data.base_dataset import BaseDataset

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def find_dataset_using_name(dataset_name):

|

| 19 |

+

"""Import the module "data/[dataset_name]_dataset.py".

|

| 20 |

+

|

| 21 |

+

In the file, the class called DatasetNameDataset() will

|

| 22 |

+

be instantiated. It has to be a subclass of BaseDataset,

|

| 23 |

+

and it is case-insensitive.

|

| 24 |

+

"""

|

| 25 |

+

dataset_filename = "data." + dataset_name + "_dataset"

|

| 26 |

+

datasetlib = importlib.import_module(dataset_filename)

|

| 27 |

+

|

| 28 |

+

dataset = None

|

| 29 |

+

target_dataset_name = dataset_name.replace('_', '') + 'dataset'

|

| 30 |

+

for name, cls in datasetlib.__dict__.items():

|

| 31 |

+

if name.lower() == target_dataset_name.lower() \

|

| 32 |

+

and issubclass(cls, BaseDataset):

|

| 33 |

+

dataset = cls

|

| 34 |

+

|

| 35 |

+

if dataset is None:

|

| 36 |

+

raise NotImplementedError("In %s.py, there should be a subclass of BaseDataset with class name that matches %s in lowercase." % (dataset_filename, target_dataset_name))

|

| 37 |

+

|

| 38 |

+

return dataset

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

def get_option_setter(dataset_name):

|

| 42 |

+

"""Return the static method <modify_commandline_options> of the dataset class."""

|

| 43 |

+

dataset_class = find_dataset_using_name(dataset_name)

|

| 44 |

+

return dataset_class.modify_commandline_options

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

def create_dataset(opt):

|

| 48 |

+

"""Create a dataset given the option.

|

| 49 |

+

|

| 50 |

+

This function wraps the class CustomDatasetDataLoader.

|

| 51 |

+

This is the main interface between this package and 'train.py'/'test.py'

|

| 52 |

+

|

| 53 |

+

Example:

|

| 54 |

+

>>> from data import create_dataset

|

| 55 |

+

>>> dataset = create_dataset(opt)

|

| 56 |

+

"""

|

| 57 |

+

data_loader = CustomDatasetDataLoader(opt)

|

| 58 |

+

dataset = data_loader.load_data()

|

| 59 |

+

return dataset

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

class CustomDatasetDataLoader():

|

| 63 |

+

"""Wrapper class of Dataset class that performs multi-threaded data loading"""

|

| 64 |

+

|

| 65 |

+

def __init__(self, opt):

|

| 66 |

+

"""Initialize this class

|

| 67 |

+

|

| 68 |

+

Step 1: create a dataset instance given the name [dataset_mode]

|

| 69 |

+

Step 2: create a multi-threaded data loader.

|

| 70 |

+

"""

|

| 71 |

+

self.opt = opt

|

| 72 |

+

dataset_class = find_dataset_using_name(opt.dataset_mode)

|

| 73 |

+

self.dataset = dataset_class(opt)

|

| 74 |

+

print("dataset [%s] was created" % type(self.dataset).__name__)

|

| 75 |

+

self.dataloader = torch.utils.data.DataLoader(

|

| 76 |

+

self.dataset,

|

| 77 |

+

batch_size=opt.batch_size,

|

| 78 |

+

shuffle=not opt.serial_batches,

|

| 79 |

+

num_workers=int(opt.num_threads))

|

| 80 |

+

|

| 81 |

+

def load_data(self):

|

| 82 |

+

return self

|

| 83 |

+

|

| 84 |

+

def __len__(self):

|

| 85 |

+

"""Return the number of data in the dataset"""

|

| 86 |

+

return min(len(self.dataset), self.opt.max_dataset_size)

|

| 87 |

+

|

| 88 |

+

def __iter__(self):

|

| 89 |

+

"""Return a batch of data"""

|

| 90 |

+

for i, data in enumerate(self.dataloader):

|

| 91 |

+

if i * self.opt.batch_size >= self.opt.max_dataset_size:

|

| 92 |

+

break

|

| 93 |

+

yield data

|

data/aligned_dataset.py

ADDED

|

@@ -0,0 +1,300 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#假设读入的数据是nii格式的

|

| 2 |

+

# 用于coronal角度数据的读取

|

| 3 |

+

|

| 4 |

+

import os

|

| 5 |

+

from data.base_dataset import BaseDataset, get_params, get_transform

|

| 6 |

+

from data.image_folder import make_dataset

|

| 7 |

+

from PIL import Image

|

| 8 |

+

import numpy as np

|

| 9 |

+

import torch

|

| 10 |

+

from .mask_extract import process_spine_data, process_spine_data_aug

|

| 11 |

+

import json

|

| 12 |

+

import nibabel as nib

|

| 13 |

+

import random

|

| 14 |

+

import torchvision.transforms as transforms

|

| 15 |

+

from scipy.ndimage import label, find_objects

|

| 16 |

+

|

| 17 |

+

def remove_small_connected_components(input_array, min_size):

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

# 识别连通域

|

| 21 |

+

structure = np.ones((3, 3), dtype=np.int32) # 定义连通性结构

|

| 22 |

+

labeled, ncomponents = label(input_array, structure)

|

| 23 |

+

|

| 24 |

+

# 遍历所有连通域,如果连通域大小小于阈值,则去除

|

| 25 |

+

for i in range(1, ncomponents + 1):

|

| 26 |

+

if np.sum(labeled == i) < min_size:

|

| 27 |

+

input_array[labeled == i] = 0

|

| 28 |

+

|

| 29 |

+

# 如果输入是张量,则转换回张量

|

| 30 |

+

|

| 31 |

+

return input_array

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

class AlignedDataset(BaseDataset):

|

| 35 |

+

"""A dataset class for paired image dataset.

|

| 36 |

+

|

| 37 |

+

It assumes that the directory '/path/to/data/train' contains image pairs in the form of {A,B}.

|

| 38 |

+

During test time, you need to prepare a directory '/path/to/data/test'.

|

| 39 |

+

"""

|

| 40 |

+

|

| 41 |

+

def __init__(self, opt):

|

| 42 |

+

"""Initialize this dataset class.

|

| 43 |

+

|

| 44 |

+

Parameters:

|

| 45 |

+

opt (Option class) -- stores all the experiment flags; needs to be a subclass of BaseOptions

|

| 46 |

+

"""

|

| 47 |

+

BaseDataset.__init__(self, opt)

|

| 48 |

+

|

| 49 |

+

# 读取json文件来选择训练集、测试集和验证集

|

| 50 |

+

with open('/home/zhangqi/Project/pytorch-CycleGAN-and-pix2pix-master/data/vertebra_data.json', 'r') as file:

|

| 51 |

+

vertebra_set = json.load(file)

|

| 52 |

+

self.normal_vert_list = []

|

| 53 |

+

self.abnormal_vert_list = []

|

| 54 |

+

# 初始化存储normal和abnormal vertebrae的字典

|

| 55 |

+

self.normal_vert_dict = {}

|

| 56 |

+

self.abnormal_vert_dict = {}

|

| 57 |

+

|

| 58 |

+

for patient_vert_id in vertebra_set[opt.phase].keys():

|

| 59 |

+

# 分离patient id和vert id

|

| 60 |

+

patient_id, vert_id = patient_vert_id.rsplit('_',1)

|

| 61 |

+

|

| 62 |

+

# 判断该vertebra是normal还是abnormal

|

| 63 |

+

if int(vertebra_set[opt.phase][patient_vert_id]) <= 1:

|

| 64 |

+

self.normal_vert_list.append(patient_vert_id)

|

| 65 |

+

# 如果是normal,添加到normal_vert_dict

|

| 66 |

+

if patient_id not in self.normal_vert_dict:

|

| 67 |

+

self.normal_vert_dict[patient_id] = [vert_id]

|

| 68 |

+

else:

|

| 69 |

+

self.normal_vert_dict[patient_id].append(vert_id)

|

| 70 |

+

else:

|

| 71 |

+

self.abnormal_vert_list.append(patient_vert_id)

|

| 72 |

+

# 如果是abnormal,添加到abnormal_vert_dict

|

| 73 |

+

if patient_id not in self.abnormal_vert_dict:

|

| 74 |

+

self.abnormal_vert_dict[patient_id] = [vert_id]

|

| 75 |

+

else:

|

| 76 |

+

self.abnormal_vert_dict[patient_id].append(vert_id)

|

| 77 |

+

if opt.vert_class=="normal":

|

| 78 |

+

self.vertebra_id = np.array(self.normal_vert_list)

|

| 79 |

+

elif opt.vert_class=="abnormal":

|

| 80 |

+

self.vertebra_id = np.array(self.abnormal_vert_list)

|

| 81 |

+

else:

|

| 82 |

+

print("No vert class is set.")

|

| 83 |

+

self.vertebra_id = None

|

| 84 |

+

|

| 85 |

+

#self.dir_AB = os.path.join(opt.dataroot, opt.phase) # get the image directory

|

| 86 |

+

self.dir_AB = opt.dataroot

|

| 87 |

+

#self.dir_mask = os.path.join(opt.dataroot,'mask',opt.phase)

|

| 88 |

+

#self.AB_paths = sorted(make_dataset(self.dir_AB, opt.max_dataset_size)) # get image paths

|

| 89 |

+

#self.mask_paths = sorted(make_dataset(self.dir_mask, opt.max_dataset_size))

|

| 90 |

+

assert(self.opt.load_size >= self.opt.crop_size) # crop_size should be smaller than the size of loaded image

|

| 91 |

+

self.input_nc = self.opt.output_nc if self.opt.direction == 'BtoA' else self.opt.input_nc

|

| 92 |

+

self.output_nc = self.opt.input_nc if self.opt.direction == 'BtoA' else self.opt.output_nc

|

| 93 |

+

|

| 94 |

+

def numpy_to_pil(self,img_np):

|

| 95 |

+

# 假设 img_np 是一个灰度图像的 NumPy 数组,值域在0到255

|

| 96 |

+

if img_np.dtype != np.uint8:

|

| 97 |

+

raise ValueError("NumPy array should have uint8 data type.")

|

| 98 |

+

# 转换为灰度PIL图像

|

| 99 |

+

img_pil = Image.fromarray(img_np)

|

| 100 |

+

return img_pil

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

# 按照金字塔概率选择一个slice,毕竟中间的slice包含的信息是最多的,因此尽量选择中间的slice

|

| 105 |

+

# 按照金字塔概率选择一个slice,毕竟中间的slice包含的信息是最多的,因此尽量选择中间的slice

|

| 106 |

+

def get_weighted_random_slice(self,z0, z1):

|

| 107 |

+

# 计算新的范围,限制为原来范围的2/3

|

| 108 |

+

range_length = z1 - z0 + 1

|

| 109 |

+

new_range_length = int(range_length * 4 / 5)

|

| 110 |

+

|

| 111 |

+

# 计算新范围的起始和结束索引

|

| 112 |

+

new_z0 = z0 + (range_length - new_range_length) // 2

|

| 113 |

+

new_z1 = new_z0 + new_range_length - 1

|

| 114 |

+

|

| 115 |

+

# 计算中心索引

|

| 116 |

+

center_index = (new_z0 + new_z1) // 2

|

| 117 |

+

|

| 118 |

+

# 计算每个索引的权重

|

| 119 |

+

weights = [1 - abs(i - center_index) / (new_z1 - new_z0) for i in range(new_z0, new_z1 + 1)]

|

| 120 |

+

|

| 121 |

+

# 归一化权重使得总和为1

|

| 122 |

+

total_weight = sum(weights)

|

| 123 |

+

normalized_weights = [w / total_weight for w in weights]

|

| 124 |

+

|

| 125 |

+

# 根据权重随机选择一个层

|

| 126 |

+

random_index = np.random.choice(range(new_z0, new_z1 + 1), p=normalized_weights)

|

| 127 |

+

index_ratio = abs(random_index-center_index)/range_length*2

|

| 128 |

+

|

| 129 |

+

return random_index,index_ratio

|

| 130 |

+

|

| 131 |

+

def get_valid_slice(self,vert_label, z0, z1,maxheight):

|

| 132 |

+

"""

|

| 133 |

+

尝试随机选取一个非空的slice。

|

| 134 |

+

"""

|

| 135 |

+

max_attempts = 100 # 设定最大尝试次数以避免无限循环

|

| 136 |

+

attempts = 0

|

| 137 |

+

while attempts < max_attempts:

|

| 138 |

+

slice_index,index_ratio = self.get_weighted_random_slice(z0, z1)

|

| 139 |

+

vert_label[:, slice_index, :] = remove_small_connected_components(vert_label[:, slice_index, :],50)

|

| 140 |

+

|

| 141 |

+

if np.sum(vert_label[:, slice_index, :])>50: # 检查切片是否非空

|

| 142 |

+

coords = np.argwhere(vert_label[:, slice_index, :])

|

| 143 |

+

x1, x2 = min(coords[:, 0]), max(coords[:, 0])

|

| 144 |

+

if x2-x1<maxheight:

|

| 145 |

+

return slice_index,index_ratio

|

| 146 |

+

attempts += 1

|

| 147 |

+

raise ValueError("Failed to find a non-empty slice after {} attempts.".format(max_attempts))

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

def __getitem__(self, index):

|

| 151 |

+

"""Return a data point and its metadata information.

|

| 152 |

+

|

| 153 |

+

Parameters:

|

| 154 |

+

index - - a random integer for data indexing

|

| 155 |

+

|

| 156 |

+

Returns a dictionary that contains A, B, A_paths and B_paths

|

| 157 |

+

A (tensor) - - an image in the input domain

|

| 158 |

+

B (tensor) - - its corresponding image in the target domain

|

| 159 |

+

A_paths (str) - - image paths

|

| 160 |

+

B_paths (str) - - image paths (same as A_paths)

|

| 161 |

+

"""

|

| 162 |

+

# read a image given a random integer index

|

| 163 |

+

CAM_folder = '/home/zhangqi/Project/VertebralFractureGrading/heatmap/straighten_coronal/binaryclass_1'

|

| 164 |

+

CAM_path_0 = os.path.join(CAM_folder, self.vertebra_id[index]+'_0.nii.gz')

|

| 165 |

+

CAM_path_1 = os.path.join(CAM_folder, self.vertebra_id[index]+'_1.nii.gz')

|

| 166 |

+

if not os.path.exists(CAM_path_0):

|

| 167 |

+

CAM_path = CAM_path_1

|

| 168 |

+

else:

|

| 169 |

+

CAM_path = CAM_path_0

|

| 170 |

+

CAM_data = nib.load(CAM_path).get_fdata() * 255

|

| 171 |

+

|

| 172 |

+

|

| 173 |

+

patient_id, vert_id = self.vertebra_id[index].rsplit('_', 1)

|

| 174 |

+

vert_id = int(vert_id)

|

| 175 |

+

normal_vert_list = self.normal_vert_dict[patient_id]

|

| 176 |

+

|

| 177 |

+

|

| 178 |

+

ct_path = os.path.join(self.dir_AB,"CT",self.vertebra_id[index]+'.nii.gz')

|

| 179 |

+

|

| 180 |

+

label_path = os.path.join(self.dir_AB,"label",self.vertebra_id[index]+'.nii.gz')

|

| 181 |

+

|

| 182 |

+

ct_data = nib.load(ct_path).get_fdata()

|

| 183 |

+

label_data = nib.load(label_path).get_fdata()

|

| 184 |

+

vert_label = np.zeros_like(label_data)

|

| 185 |

+

vert_label[label_data==vert_id]=1

|

| 186 |

+

|

| 187 |

+

normal_vert_label = label_data.copy()

|

| 188 |

+

if normal_vert_list:

|

| 189 |

+

for normal_vert in normal_vert_list:

|

| 190 |

+

normal_vert_label[normal_vert_label==int(normal_vert)]=255

|

| 191 |

+

normal_vert_label[normal_vert_label!=255]=0

|

| 192 |

+

else:

|

| 193 |

+

normal_vert_label = np.zeros_like(label_data)

|

| 194 |

+

|

| 195 |

+

loc = np.where(vert_label)

|

| 196 |

+

|

| 197 |

+

# 冠状面选择

|

| 198 |

+

z0 = min(loc[1])

|

| 199 |

+

z1 = max(loc[1])

|

| 200 |

+

maxheight = 40

|

| 201 |

+

|

| 202 |

+

try:

|

| 203 |

+

slice,slice_ratio = self.get_valid_slice(vert_label, z0, z1, maxheight)

|

| 204 |

+

#vert_label[:, :, slice] = remove_small_connected_components(vert_label[:, :, slice],50)

|

| 205 |

+

coords = np.argwhere(vert_label[:, slice, :])

|

| 206 |

+

x1, x2 = min(coords[:, 0]), max(coords[:, 0])

|

| 207 |

+

except ValueError as e:

|

| 208 |

+

print(e)

|

| 209 |

+

width,length = vert_label[:,slice,:].shape

|

| 210 |

+

|

| 211 |

+

height = x2-x1

|

| 212 |

+

mask_x = (x1+x2)//2

|

| 213 |

+

h2 = maxheight

|

| 214 |

+

if height>h2:

|

| 215 |

+

print(slice,ct_path)

|

| 216 |

+

if mask_x<=h2//2:

|

| 217 |

+

min_x = 0

|

| 218 |

+

max_x = min_x + h2

|

| 219 |

+

elif width-mask_x<=h2/2:

|

| 220 |

+

max_x = width

|

| 221 |

+

min_x = max_x -h2

|

| 222 |

+

else:

|

| 223 |

+

min_x = mask_x-h2//2

|

| 224 |

+

max_x = min_x + h2

|

| 225 |

+

|

| 226 |

+

|

| 227 |

+

# 创建256x256的空白数组

|

| 228 |

+

target_A = np.zeros((256, 256))

|

| 229 |

+

target_B = np.zeros((256, 256))

|

| 230 |

+

target_A1 = np.zeros((256, 256))

|

| 231 |

+

target_normal_vert_label = np.zeros((256, 256))

|

| 232 |

+

target_mask = np.zeros((256, 256))

|

| 233 |

+

target_CAM = np.zeros((256, 256))

|

| 234 |

+

|

| 235 |

+

# 定位原切片放置的起始和结束列

|

| 236 |

+

start_col = (256 - 64) // 2

|

| 237 |

+

end_col = start_col + 64

|

| 238 |

+

|

| 239 |

+

# 对于A,直接从ct_data中取切片,然后放置到target_A中

|

| 240 |

+

|

| 241 |

+

target_B[:min_x, start_col:end_col] = ct_data[(x1-min_x):x1, slice, :]

|

| 242 |

+

target_B[max_x:, start_col:end_col] = ct_data[x2:x2+(width-max_x), slice, :]

|

| 243 |

+

|

| 244 |

+

target_A[:, start_col:end_col] = ct_data[:,slice,:]

|

| 245 |

+

|

| 246 |

+

# ���理A1,将label_data中特定ID的位置设为255,其他为0

|

| 247 |

+

A1 = np.zeros_like(label_data[:, slice, :])

|

| 248 |

+

A1[label_data[:, slice, :] == vert_id] = 255

|

| 249 |

+

target_A1[:, start_col:end_col] = A1

|

| 250 |

+

|

| 251 |

+

# 处理normal_vert_label

|

| 252 |

+

target_normal_vert_label[:min_x, start_col:end_col] = normal_vert_label[(x1-min_x):x1, slice, :]

|

| 253 |

+

target_normal_vert_label[max_x:, start_col:end_col] = normal_vert_label[x2:x2+(width-max_x), slice, :]

|

| 254 |

+

|

| 255 |

+

# 处理mask

|

| 256 |

+

target_mask[min_x:max_x, start_col:end_col] = 255

|

| 257 |

+

target_CAM[:min_x, start_col:end_col] = CAM_data[(x1-min_x):x1, slice, :]

|

| 258 |

+

target_CAM[max_x:, start_col:end_col] = CAM_data[x2:x2+(width-max_x), slice, :]

|

| 259 |

+

|

| 260 |

+

target_A = target_A.astype(np.uint8)

|

| 261 |

+

target_B = target_B.astype(np.uint8)

|

| 262 |

+

target_A1 = target_A1.astype(np.uint8)

|

| 263 |

+

target_normal_vert_label = target_normal_vert_label.astype(np.uint8)

|

| 264 |

+

target_mask = target_mask.astype(np.uint8)

|

| 265 |

+

target_CAM = target_CAM.astype(np.uint8)

|

| 266 |

+

|

| 267 |

+

|

| 268 |

+

target_A = self.numpy_to_pil(target_A)

|

| 269 |

+

target_B = self.numpy_to_pil(target_B)

|

| 270 |

+

target_A1 = self.numpy_to_pil(target_A1)

|

| 271 |

+

target_mask = self.numpy_to_pil(target_mask)

|

| 272 |

+

target_normal_vert_label = self.numpy_to_pil(target_normal_vert_label)

|

| 273 |

+

target_CAM = self.numpy_to_pil(target_CAM)

|

| 274 |

+

|

| 275 |

+

# apply the same transform to both A and B

|

| 276 |

+

A_transform =transforms.Compose([

|

| 277 |

+

transforms.Grayscale(1),

|

| 278 |

+

transforms.ToTensor(),

|

| 279 |

+

transforms.Normalize((0.5,), (0.5,))

|

| 280 |

+

])

|

| 281 |

+

|

| 282 |

+

mask_transform = transforms.Compose([

|

| 283 |

+

transforms.ToTensor()

|

| 284 |

+

])

|

| 285 |

+

|

| 286 |

+

target_A = A_transform(target_A)

|

| 287 |

+

target_B = A_transform(target_B)

|

| 288 |

+

target_A1 = mask_transform(target_A1)

|

| 289 |

+

target_mask = mask_transform(target_mask)

|

| 290 |

+

target_normal_vert_label = mask_transform(target_normal_vert_label)

|

| 291 |

+

target_CAM = mask_transform(target_CAM)

|

| 292 |

+

|

| 293 |

+

|

| 294 |

+

|

| 295 |

+

return {'A': target_A, 'A_mask': target_A1, 'mask':target_mask,'B':target_B,'height':height,'x1':x1,'x2':x2,

|

| 296 |

+

'h2':h2,'slice_ratio':slice_ratio,'normal_vert':target_normal_vert_label,'CAM':target_CAM,'A_paths': ct_path, 'B_paths': ct_path}

|

| 297 |

+

|

| 298 |

+

def __len__(self):

|

| 299 |

+

"""Return the total number of images in the dataset."""

|

| 300 |

+

return len(self.vertebra_id)

|

data/base_dataset.py

ADDED

|

@@ -0,0 +1,169 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""This module implements an abstract base class (ABC) 'BaseDataset' for datasets.

|

| 2 |

+

|

| 3 |

+

It also includes common transformation functions (e.g., get_transform, __scale_width), which can be later used in subclasses.

|

| 4 |

+

"""

|

| 5 |

+

import random

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch.utils.data as data

|

| 8 |

+

from PIL import Image

|

| 9 |

+

import torchvision.transforms as transforms

|

| 10 |

+

from abc import ABC, abstractmethod

|

| 11 |

+

import torch

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

class BaseDataset(data.Dataset, ABC):

|

| 15 |

+

"""This class is an abstract base class (ABC) for datasets.

|

| 16 |

+

|

| 17 |

+

To create a subclass, you need to implement the following four functions:

|

| 18 |

+

-- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

|

| 19 |

+

-- <__len__>: return the size of dataset.

|

| 20 |

+

-- <__getitem__>: get a data point.

|

| 21 |

+

-- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

|

| 22 |

+

"""

|

| 23 |

+

|

| 24 |

+

def __init__(self, opt):

|

| 25 |

+

"""Initialize the class; save the options in the class

|

| 26 |

+

|

| 27 |

+

Parameters:

|

| 28 |

+

opt (Option class)-- stores all the experiment flags; needs to be a subclass of BaseOptions

|

| 29 |

+

"""

|

| 30 |

+

self.opt = opt

|

| 31 |

+

self.root = opt.dataroot

|

| 32 |

+

|

| 33 |

+

@staticmethod

|

| 34 |

+

def modify_commandline_options(parser, is_train):

|

| 35 |

+

"""Add new dataset-specific options, and rewrite default values for existing options.

|

| 36 |

+

|

| 37 |

+

Parameters:

|

| 38 |

+

parser -- original option parser

|

| 39 |

+

is_train (bool) -- whether training phase or test phase. You can use this flag to add training-specific or test-specific options.

|

| 40 |

+

|

| 41 |

+

Returns:

|

| 42 |

+

the modified parser.

|

| 43 |

+

"""

|

| 44 |

+

return parser

|

| 45 |

+

|

| 46 |

+

@abstractmethod

|

| 47 |

+

def __len__(self):

|

| 48 |

+

"""Return the total number of images in the dataset."""

|

| 49 |

+

return 0

|

| 50 |

+

|

| 51 |

+

@abstractmethod

|

| 52 |

+

def __getitem__(self, index):

|

| 53 |

+

"""Return a data point and its metadata information.

|

| 54 |

+

|

| 55 |

+

Parameters:

|

| 56 |

+

index - - a random integer for data indexing

|

| 57 |

+

|

| 58 |

+

Returns:

|

| 59 |

+

a dictionary of data with their names. It ususally contains the data itself and its metadata information.

|

| 60 |

+

"""

|

| 61 |

+

pass

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

def get_params(opt, size):

|

| 65 |

+

w, h = size

|

| 66 |

+

new_h = h

|

| 67 |

+

new_w = w

|

| 68 |

+

if opt.preprocess == 'resize_and_crop':

|

| 69 |

+

new_h = new_w = opt.load_size

|

| 70 |

+

elif opt.preprocess == 'scale_width_and_crop':

|

| 71 |

+

new_w = opt.load_size

|

| 72 |

+

new_h = opt.load_size * h // w

|

| 73 |

+

|

| 74 |

+

x = random.randint(0, np.maximum(0, new_w - opt.crop_size))

|

| 75 |

+

y = random.randint(0, np.maximum(0, new_h - opt.crop_size))

|

| 76 |

+

|

| 77 |

+

flip = random.random() > 0.5

|

| 78 |

+

|

| 79 |

+

return {'crop_pos': (x, y), 'flip': flip}

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

def get_transform(opt, params=None, grayscale=False, method=transforms.InterpolationMode.BICUBIC, convert=True,normalize=True):

|

| 83 |

+

transform_list = []

|

| 84 |

+

if grayscale:

|

| 85 |

+

transform_list.append(transforms.Grayscale(1))

|

| 86 |

+

if 'resize' in opt.preprocess:

|

| 87 |

+

osize = [opt.load_size, opt.load_size]

|

| 88 |

+

transform_list.append(transforms.Resize(osize, method))

|

| 89 |

+

elif 'scale_width' in opt.preprocess:

|

| 90 |

+

transform_list.append(transforms.Lambda(lambda img: __scale_width(img, opt.load_size, opt.crop_size, method)))

|

| 91 |

+

|

| 92 |

+

if 'crop' in opt.preprocess:

|

| 93 |

+

if params is None:

|

| 94 |

+

transform_list.append(transforms.RandomCrop(opt.crop_size))

|

| 95 |

+

else:

|

| 96 |

+

transform_list.append(transforms.Lambda(lambda img: __crop(img, params['crop_pos'], opt.crop_size)))

|

| 97 |

+

|

| 98 |

+

if opt.preprocess == 'none':

|

| 99 |

+

transform_list.append(transforms.Lambda(lambda img: __make_power_2(img, base=4, method=method)))

|

| 100 |

+

|

| 101 |

+

if not opt.no_flip:

|

| 102 |

+

if params is None:

|

| 103 |

+

transform_list.append(transforms.RandomHorizontalFlip())

|

| 104 |

+

elif params['flip']:

|

| 105 |

+

transform_list.append(transforms.Lambda(lambda img: __flip(img, params['flip'])))

|

| 106 |

+

|

| 107 |

+

if convert:

|

| 108 |

+

transform_list += [transforms.ToTensor()]

|

| 109 |

+

if normalize:

|

| 110 |

+

if grayscale:

|

| 111 |

+

transform_list += [transforms.Normalize((0.5,), (0.5,))]

|

| 112 |

+

else:

|

| 113 |

+

transform_list += [transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]

|

| 114 |

+

return transforms.Compose(transform_list)

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

def __transforms2pil_resize(method):

|

| 118 |

+

mapper = {transforms.InterpolationMode.BILINEAR: Image.BILINEAR,

|

| 119 |

+

transforms.InterpolationMode.BICUBIC: Image.BICUBIC,

|

| 120 |

+

transforms.InterpolationMode.NEAREST: Image.NEAREST,

|

| 121 |

+

transforms.InterpolationMode.LANCZOS: Image.LANCZOS,}

|

| 122 |

+

return mapper[method]

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

def __make_power_2(img, base, method=transforms.InterpolationMode.BICUBIC):

|

| 126 |

+