Update README.md

README.md

|

@@ -6,16 +6,22 @@ tags:

|

|

| 6 |

---

|

| 7 |

|

| 8 |

|

|

|

|

|

|

|

| 9 |

Q. Why quantized in 8bit instead of 4bit?

|

| 10 |

A. For evaluation purpose. In theory, a 8bit quantized model should provide slightly better perplexity (maybe not noticeable - To Be Evaluated...) over a 4bit quatized version. If your available GPU VRAM is over 15GB you may want to try this out.

|

| 11 |

Note that quatization in 8bit does not mean loading the model in 8bit precision. Loading your model in 8bit precision (--load-in-8bit) comes with noticeable quality (perplexity) degradation.

|

|

|

|

| 12 |

|

| 13 |

Refs:

|

| 14 |

- https://github.com/ggerganov/llama.cpp/pull/951

|

| 15 |

- https://news.ycombinator.com/item?id=35148542

|

|

|

|

|

|

|

| 16 |

- https://arxiv.org/abs/2105.03536

|

| 17 |

-

- https://github.com/IST-DASLab/gptq

|

| 18 |

- https://arxiv.org/abs/2212.09720

|

|

|

|

|

|

|

| 19 |

|

| 20 |

<br>

|

| 21 |

|

|

@@ -25,7 +31,7 @@ Refs:

|

|

| 25 |

- wbits: 8

|

| 26 |

- true-sequential: yes

|

| 27 |

- act-order: yes

|

| 28 |

-

- 8-bit

|

| 29 |

- Conversion process: LLaMa 13B -> LLaMa 13B HF -> Vicuna13B-v1.1 HF -> Vicuna13B-v1.1-8bit-128g

|

| 30 |

|

| 31 |

<br>

|

|

@@ -84,7 +90,9 @@ pip install -r requirements.txt

|

|

| 84 |

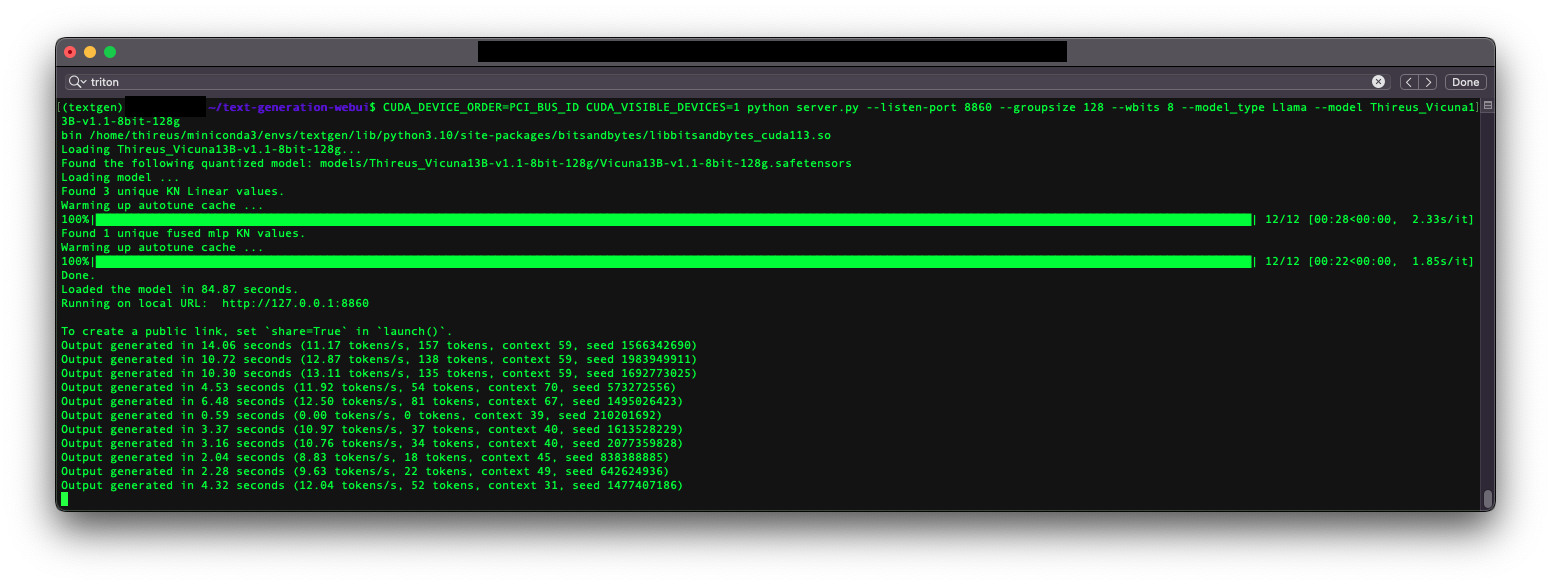

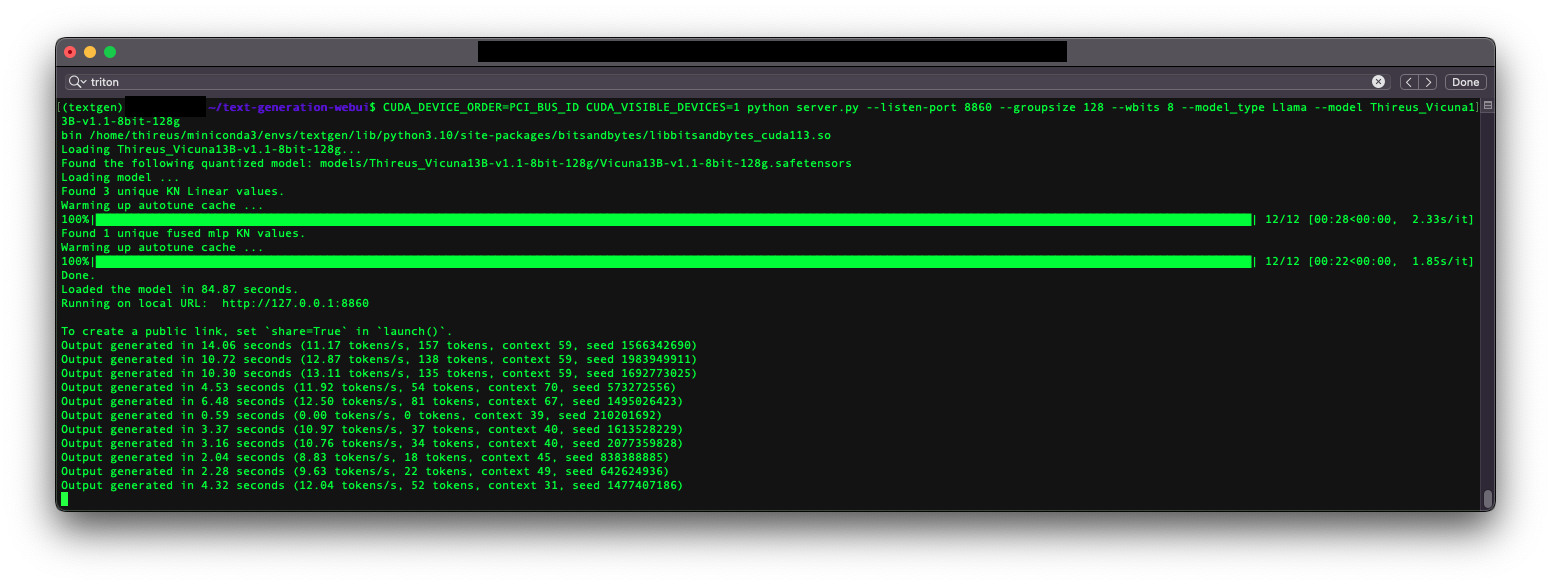

- i9-7980XE OC @4.6Ghz

|

| 85 |

|

| 86 |

- 11 tokens/s on average with Triton

|

| 87 |

-

-

|

|

|

|

|

|

|

| 88 |

- Tested and working in both chat mode and text generation mode

|

| 89 |

|

| 90 |

|

|

|

|

| 6 |

---

|

| 7 |

|

| 8 |

|

| 9 |

+

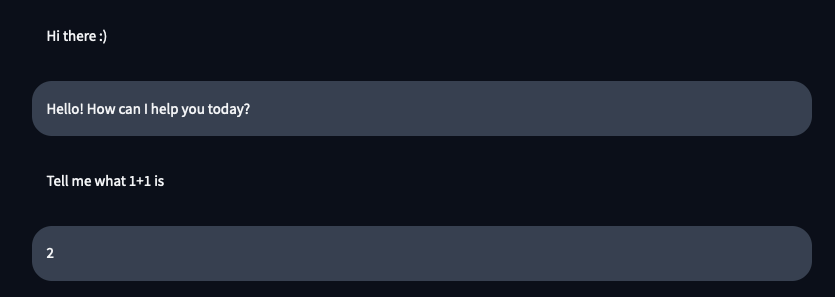

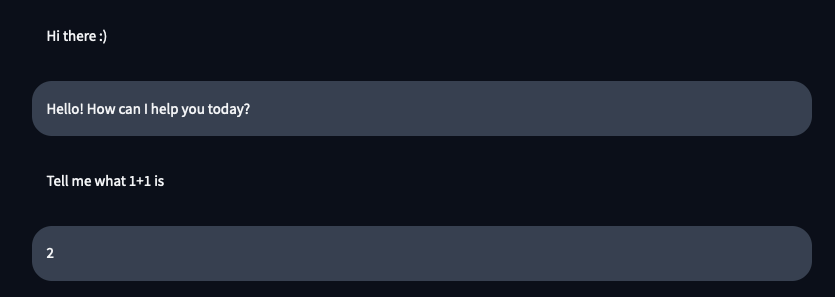

This is a 8bit GPTQ (not to be confused with 8bit RTN) version of Vicuna 13B v1.1 HF.

|

| 10 |

+

|

| 11 |

Q. Why quantized in 8bit instead of 4bit?

|

| 12 |

A. For evaluation purpose. In theory, a 8bit quantized model should provide slightly better perplexity (maybe not noticeable - To Be Evaluated...) over a 4bit quatized version. If your available GPU VRAM is over 15GB you may want to try this out.

|

| 13 |

Note that quatization in 8bit does not mean loading the model in 8bit precision. Loading your model in 8bit precision (--load-in-8bit) comes with noticeable quality (perplexity) degradation.

|

| 14 |

+

This model is also only useful until Vicuna30B or higher come to light, in which case a 8bit GPTQ version for these models would not fit consumer cards and might be less than a 4bit GPTQ (To Be Evaluated).

|

| 15 |

|

| 16 |

Refs:

|

| 17 |

- https://github.com/ggerganov/llama.cpp/pull/951

|

| 18 |

- https://news.ycombinator.com/item?id=35148542

|

| 19 |

+

- https://github.com/ggerganov/llama.cpp/issues/53

|

| 20 |

+

- https://arxiv.org/abs/2210.17323

|

| 21 |

- https://arxiv.org/abs/2105.03536

|

|

|

|

| 22 |

- https://arxiv.org/abs/2212.09720

|

| 23 |

+

- https://arxiv.org/abs/2301.00774

|

| 24 |

+

- https://github.com/IST-DASLab/gptq

|

| 25 |

|

| 26 |

<br>

|

| 27 |

|

|

|

|

| 31 |

- wbits: 8

|

| 32 |

- true-sequential: yes

|

| 33 |

- act-order: yes

|

| 34 |

+

- 8-bit GPTQ

|

| 35 |

- Conversion process: LLaMa 13B -> LLaMa 13B HF -> Vicuna13B-v1.1 HF -> Vicuna13B-v1.1-8bit-128g

|

| 36 |

|

| 37 |

<br>

|

|

|

|

| 90 |

- i9-7980XE OC @4.6Ghz

|

| 91 |

|

| 92 |

- 11 tokens/s on average with Triton

|

| 93 |

+

- Equivalent tokens/s observed over the 4bit version

|

| 94 |

+

- Pending preliminary observation: better quality results than 8bit RTN (--load-in-8bits) (To Be Confirmed)

|

| 95 |

+

- Pending preliminary observation: slightly better quality results than 4bit GPTQ (To Be Confirmed)

|

| 96 |

- Tested and working in both chat mode and text generation mode
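As a rough check on the 15GB VRAM figure in the Q&A, the weight footprint of a 13B model can be estimated from the bit width. This is a back-of-the-envelope sketch, not a measurement, and its storage layout is an assumption: every parameter is taken to be quantized, and each group of 128 weights (the `128g` in the model name) is assumed to also store one fp16 scale and one zero-point at the same bit width. Activations, the KV cache and framework overhead are ignored, and the helper name `weight_gb` is hypothetical.

```python
def weight_gb(n_params: float, wbits: int, groupsize: int = 128) -> float:
    """Approximate weight storage in GB (10**9 bytes).

    Assumption (hypothetical layout): every parameter is stored with `wbits` bits,
    and each group of `groupsize` weights adds one fp16 scale (16 bits) plus one
    `wbits`-bit zero-point. Activations, KV cache and runtime overhead are ignored.
    """
    bits_per_weight = wbits + (16 + wbits) / groupsize
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n = 13e9  # nominal parameter count of a 13B LLaMA/Vicuna model
    print(f"fp16:        {n * 16 / 8 / 1e9:.1f} GB")
    for wbits in (8, 4):
        print(f"{wbits}-bit g128: {weight_gb(n, wbits):.1f} GB")
```

Under these assumptions the 8-bit weights alone come to about 13.3 GB, versus 26.0 GB in fp16 and about 6.8 GB at 4-bit, which is why the 8-bit version leaves little headroom on cards with less than the 15GB mentioned in the Q&A.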

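To illustrate the "8-bit GPTQ, not 8-bit RTN" distinction the README draws, here is a minimal pure-Python sketch of both on one toy linear layer. RTN rounds every weight to its nearest grid point independently. GPTQ (arXiv 2210.17323 in the Refs) quantizes one input column at a time and uses the upper Cholesky factor of the inverse Hessian of the layer's squared output error, H = 2·X·X^T for calibration inputs X, to push each rounding error onto the columns not yet quantized. The sketch mimics the `act-order` setting (columns with the largest Hessian diagonal go first) and per-group scales (a group size of 16 here instead of 128, because the toy layer only has 32 columns). It does not model `true-sequential`, lazy batch updates or packed integer storage, the RTN function is plain min-max rounding rather than a model of what `--load-in-8bit` does internally, and all function names are hypothetical. Treat it as an illustration under these assumptions, not as the GPTQ-for-LLaMa implementation.

```python
import random

def quant_params(group, bits):
    """Asymmetric min-max scale and zero-point for one group (0 is kept in range)."""
    maxq = 2 ** bits - 1
    lo, hi = min(min(group), 0.0), max(max(group), 0.0)
    if hi == lo:  # degenerate group: avoid a zero scale
        lo, hi = -1.0, 1.0
    scale = (hi - lo) / maxq
    return scale, round(-lo / scale), maxq

def fake_quant(w, scale, zero, maxq):
    """Round w to the nearest grid point, clamp to [0, maxq], and map back to a float."""
    q = min(max(round(w / scale) + zero, 0), maxq)
    return scale * (q - zero)

def rtn(W, bits, groupsize):
    """Round-to-nearest: every weight is rounded on its own (per row, per group)."""
    Q = [[] for _ in W]
    for q_row, row in zip(Q, W):
        for g in range(0, len(row), groupsize):
            params = quant_params(row[g:g + groupsize], bits)
            q_row.extend(fake_quant(w, *params) for w in row[g:g + groupsize])
    return Q

def cholesky(A):
    """Lower-triangular L with A = L L^T (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = s ** 0.5 if i == j else s / L[j][j]
    return L

def inverse(A):
    """Gauss-Jordan matrix inverse with partial pivoting."""
    n = len(A)
    M = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            f = M[r][c]
            if r != c and f != 0.0:
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]

def gptq(W, X, bits, groupsize, damp=0.01, act_order=True):
    """GPTQ-style quantization of W (rows x cols) with calibration inputs X (cols x samples)."""
    rows, cols = len(W), len(W[0])
    # Hessian of the layer-wise objective ||WX - QX||^2 is H = 2 X X^T.
    H = [[2 * sum(a * b for a, b in zip(X[i], X[j])) for j in range(cols)] for i in range(cols)]
    lam = damp * sum(H[i][i] for i in range(cols)) / cols
    for i in range(cols):
        H[i][i] += lam  # dampening keeps H well conditioned
    # act-order: quantize the columns with the largest Hessian diagonal first.
    perm = sorted(range(cols), key=lambda i: -H[i][i]) if act_order else list(range(cols))
    H = [[H[i][j] for j in perm] for i in perm]
    Wp = [[row[j] for j in perm] for row in W]
    L = cholesky(inverse(H))
    U = [[L[j][i] for j in range(cols)] for i in range(cols)]  # upper factor: H^-1 = U^T U
    Qp = [[0.0] * cols for _ in range(rows)]
    params = [None] * rows
    for i in range(cols):
        for r in range(rows):
            if i % groupsize == 0:  # new group: fit its grid to the already-updated weights
                params[r] = quant_params(Wp[r][i:i + groupsize], bits)
            Qp[r][i] = fake_quant(Wp[r][i], *params[r])
            err = (Wp[r][i] - Qp[r][i]) / U[i][i]
            for j in range(i + 1, cols):  # spread the error over not-yet-quantized columns
                Wp[r][j] -= err * U[i][j]
    pos = {col: k for k, col in enumerate(perm)}  # undo the act-order permutation
    return [[row[pos[c]] for c in range(cols)] for row in Qp]

def output_error(W, Q, X):
    """Squared Frobenius norm of (W - Q) X, the per-layer error GPTQ tries to minimize."""
    total = 0.0
    for wr, qr in zip(W, Q):
        d = [a - b for a, b in zip(wr, qr)]
        for s in range(len(X[0])):
            total += sum(d[c] * X[c][s] for c in range(len(d))) ** 2
    return total

if __name__ == "__main__":
    rng = random.Random(0)
    rows, cols, samples, groupsize = 8, 32, 64, 16
    W = [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]
    latent = [[rng.gauss(0, 1) for _ in range(samples)] for _ in range(4)]  # correlated inputs
    mix = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(cols)]
    X = [[sum(mix[c][k] * latent[k][s] for k in range(4)) + 0.3 * rng.gauss(0, 1)
          for s in range(samples)] for c in range(cols)]
    for bits in (4, 8):
        e_rtn = output_error(W, rtn(W, bits, groupsize), X)
        e_gptq = output_error(W, gptq(W, X, bits, groupsize), X)
        print(f"{bits}-bit  RTN error: {e_rtn:.6f}  GPTQ error: {e_gptq:.6f}")
```

On this toy layer the GPTQ output error should come out lower than RTN's at both bit widths, because the error compensation exploits correlations between input features. The exact numbers depend on the random seed and say nothing about perplexity on the real Vicuna model.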