---
library_name: transformers
base_model:
- meta-llama/Llama-3.3-70B-Instruct
tags:
- generated_from_trainer
model-index:
- name: 70B-L3.3-mhnnn-x1
  results: []
license: llama3.3
---

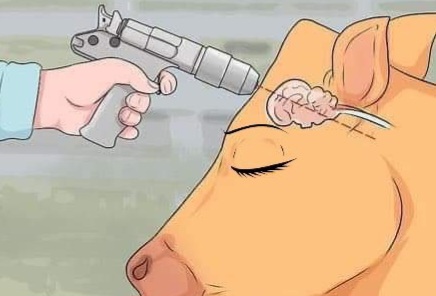

*my mental when things do not go well*

# 70B-L3.3-mhnnn-x1

I quite liked it after messing around with it. Same data composition as Freya, applied differently.

It has occasional brainfarts that a regen fixes; that's the price of more creative outputs.

Recommended Model Settings | *Look, I just use these; they work well enough. I don't even know how DRY or other meme samplers work. Your system prompt matters more anyway.*

```
Prompt Format: Llama-3-Instruct
Temperature: 1.1
min_p: 0.05
```
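For anyone unsure what these two settings do together, here is a minimal pure-Python sketch of temperature plus min_p sampling over a toy logit vector. It assumes temperature is applied before the min_p cutoff (the order Hugging Face `transformers` uses); other backends may order samplers differently. The function names and the 4-token vocabulary are hypothetical.

```python
import math
import random
from typing import List, Optional


def min_p_probs(logits: List[float], temperature: float = 1.1, min_p: float = 0.05) -> List[float]:
    """Temperature-scale logits, softmax them, then zero out tokens below min_p * top probability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    threshold = min_p * max(probs)  # the cutoff is relative to the most likely token
    kept = [p if p >= threshold else 0.0 for p in probs]
    norm = sum(kept)
    return [p / norm for p in kept]  # renormalize the surviving tokens


def sample_token(logits: List[float], temperature: float = 1.1, min_p: float = 0.05,
                 rng: Optional[random.Random] = None) -> int:
    """Draw one token index from the min_p-filtered distribution."""
    rng = rng or random.Random()
    probs = min_p_probs(logits, temperature, min_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    toy_logits = [5.0, 4.0, 0.0, -3.0]  # hypothetical 4-token vocabulary
    print(min_p_probs(toy_logits))
    print(sample_token(toy_logits, rng=random.Random(0)))
```

A temperature above 1 flattens the distribution, and min_p then trims the long tail; because the cutoff scales with the top token's probability, it prunes hard when the model is confident and loosens when it is not.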

Types of Data included within Sets

```
Completion - Novels / eBooks
Text Adventure - Include details like 'Text Adventure Narrator' in the System Prompt and give it a one-shot example, and it'll fly.
Amoral Assistant - Include the terms 'Amoral' and 'Neutral' along with the regular assistant prompt for better results.
Instruct / Assistant - The usual assistant tasks.
Roleplay - As usual, the regular sets.
```
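As a rough illustration of the Text Adventure tip, the sketch below renders a system prompt naming the 'Text Adventure Narrator' role, plus a one-shot exchange, in the minimal Llama-3-Instruct layout. The helper names and all message text are hypothetical. In practice `tokenizer.apply_chat_template` is the safer route, since the official Llama 3.1+ chat template may add extra lines (such as dates) to the system message.

```python
from typing import Dict, List

BOS = "<|begin_of_text|>"


def header(role: str) -> str:
    """Role header followed by the blank line Llama-3-Instruct expects."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def format_llama3(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render chat messages in the minimal Llama-3-Instruct layout."""
    parts = [BOS]
    for msg in messages:
        # Each turn: role header, trimmed content, end-of-turn token.
        parts.append(header(msg["role"]) + msg["content"].strip() + "<|eot_id|>")
    if add_generation_prompt:
        parts.append(header("assistant"))  # leave the assistant turn open for the model
    return "".join(parts)


# Hypothetical setup: the system prompt names the narrator role, and the first
# user/assistant exchange serves as the one-shot example.
messages = [
    {"role": "system", "content": "You are a Text Adventure Narrator. Describe the scene "
                                  "in second person, then wait for the player's next command."},
    {"role": "user", "content": "> look around"},
    {"role": "assistant", "content": "You stand in a damp stone cellar. A rusted lantern "
                                     "hangs beside a locked oak door to the north."},
    {"role": "user", "content": "> take the lantern"},
]

if __name__ == "__main__":
    print(format_llama3(messages))
```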

Total training time was ~14 hours on an 8xH100 node. Shout out to SCDF for not sponsoring this run; my funds have dried up from doing random things.

https://sao10k.carrd.co/ for contact.

---

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.6.0`

```yaml
adapter: lora # 16-bit
lora_r: 64
lora_alpha: 64
lora_dropout: 0.2
peft_use_rslora: true
lora_target_linear: true

# Data
dataset_prepared_path: dataset_run_freya
datasets:
  # S1 - Writing / Completion
  - path: datasets/eBooks-cleaned-75K
    type: completion
  - path: datasets/novels-clean-dedupe-10K
    type: completion
  # S2 - Instruct
  - path: datasets/10k-amoral-full-fixed-sys.json
    type: chat_template
    chat_template: llama3
    roles_to_train: ["gpt"]
    field_messages: conversations
    message_field_role: from
    message_field_content: value
    train_on_eos: turn
  - path: datasets/44k-hespera-smartshuffle.json
    type: chat_template
    chat_template: llama3
    roles_to_train: ["gpt"]
    field_messages: conversations
    message_field_role: from
    message_field_content: value
    train_on_eos: turn
  - path: datasets/5k_rpg_adventure_instruct-sys.json
    type: chat_template
    chat_template: llama3
    roles_to_train: ["gpt"]
    field_messages: conversations
    message_field_role: from
    message_field_content: value
    train_on_eos: turn
shuffle_merged_datasets: true
warmup_ratio: 0.1

plugins:
  - axolotl.integrations.liger.LigerPlugin
liger_rope: true
liger_rms_norm: true
liger_layer_norm: true
liger_glu_activation: true
liger_fused_linear_cross_entropy: true

# Iterations
num_epochs: 1

# Sampling
sample_packing: true
pad_to_sequence_len: true
train_on_inputs: false
group_by_length: false

# Batching
gradient_accumulation_steps: 4
micro_batch_size: 2
gradient_checkpointing: unsloth

# Evaluation
val_set_size: 0.025
evals_per_epoch: 5
eval_table_size:
eval_max_new_tokens: 256
eval_sample_packing: false
eval_batch_size: 1

# Optimizer
optimizer: paged_ademamix_8bit
lr_scheduler: cosine
learning_rate: 0.00000242
weight_decay: 0.2
max_grad_norm: 10.0

# Garbage Collection
gc_steps: 10

# Misc
deepspeed: ./deepspeed_configs/zero3_bf16.json
```
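
To make the LoRA block concrete: with `peft_use_rslora: true`, the adapter update is scaled by alpha / sqrt(r) rather than alpha / r, so `lora_r: 64` with `lora_alpha: 64` gives a factor of 64 / 8 = 8 instead of 1. The sketch below (hypothetical helper names) computes that factor and the trainable parameters one adapter adds to a single linear layer, using a square 8192 x 8192 projection as an assumed example size.

```python
import math


def lora_scaling(alpha: float, rank: int, use_rslora: bool) -> float:
    """Factor that multiplies the low-rank update (B @ A) before it is added to the frozen weight."""
    if use_rslora:
        return alpha / math.sqrt(rank)  # rank-stabilized LoRA: alpha / sqrt(r)
    return alpha / rank  # standard LoRA: alpha / r


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters one adapter adds: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # lora_r: 64, lora_alpha: 64, peft_use_rslora: true (from the config above)
    print("rsLoRA scaling:", lora_scaling(64, 64, use_rslora=True))
    print("standard LoRA scaling:", lora_scaling(64, 64, use_rslora=False))
    # Assumed example: a square 8192 x 8192 projection
    print("params per adapter:", lora_params(8192, 8192, 64))
```

Since `lora_target_linear: true` puts an adapter on every linear layer, the total trainable count is this per-layer figure summed over all targeted projections.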

</details><br>
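A quick way to read the batching and scheduler values in the config: assuming one data-parallel rank per GPU on the 8xH100 node (DeepSpeed ZeRO-3 shards parameters but stays data-parallel), each optimizer step sees 2 x 4 x 8 = 64 packed sequences. The config does not show `sequence_len`, so tokens per step can't be derived from it. The learning-rate function below is a sketch of a linear-warmup, half-cosine decay, which is the usual shape behind `lr_scheduler: cosine` with `warmup_ratio: 0.1`; it is not axolotl's code, and the 1000-step total is hypothetical.

```python
import math

# Values copied from the axolotl config above.
MICRO_BATCH_SIZE = 2
GRADIENT_ACCUMULATION_STEPS = 4
NUM_GPUS = 8  # assumption: one data-parallel rank per H100
PEAK_LR = 0.00000242
WARMUP_RATIO = 0.1


def effective_batch_size(micro: int, accum: int, world_size: int) -> int:
    """Packed sequences consumed per optimizer step under data parallelism."""
    return micro * accum * world_size


def lr_at(step: int, total_steps: int, peak_lr: float = PEAK_LR,
          warmup_ratio: float = WARMUP_RATIO) -> float:
    """Linear warmup to peak_lr, then a single half-cosine decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


if __name__ == "__main__":
    print(effective_batch_size(MICRO_BATCH_SIZE, GRADIENT_ACCUMULATION_STEPS, NUM_GPUS))
    total = 1000  # hypothetical; the real count depends on dataset size and sequence_len
    for s in (0, 50, 100, 550, 1000):
        print(s, f"{lr_at(s, total):.3e}")
```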