
---
library_name: transformers
base_model:
- meta-llama/Llama-3.3-70B-Instruct
tags:
- generated_from_trainer
model-index:
- name: 70B-L3.3-Cirrus-x1
  results: []
license: llama3.3
---

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

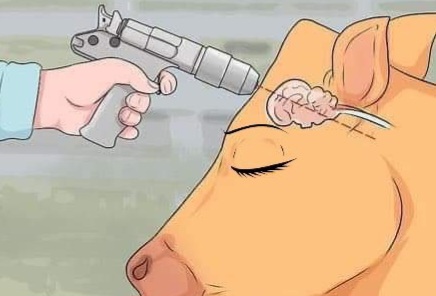

*my mental when things do not go well*

# 70B-L3.3-Cirrus-x1

I quite liked it after messing around with it. It uses the same data composition as Freya, applied differently.

It has occasional brainfarts, which are fixed with a regen; that's the price for more creative outputs.

Recommended Model Settings | *Look, I just use these, they work fine enough. I don't even know how DRY or other meme samplers work. Your system prompt matters more anyway.*

```
Prompt Format: Llama-3-Instruct
Temperature: 1.1
min_p: 0.05
```
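
If you're wiring these settings into your own code rather than a frontend preset, here is a minimal Python sketch of what they mean. `llama3_prompt`, `min_p_probs` and `sample` are hypothetical helpers, not part of transformers or any inference backend. Assumptions: a single-turn chat; the plain Llama-3 header/`<|eot_id|>` layout (with transformers you would normally let `tokenizer.apply_chat_template` build the prompt, and the bundled template for newer Llama releases may add extra lines to the system turn); and temperature applied before the min_p cutoff, which is not the order every backend uses.

```python
import math
import random


def llama3_prompt(system: str, user: str) -> str:
    """Single-turn prompt in the plain Llama-3-Instruct layout (illustrative only)."""
    return (
        "<|begin_of_text|>"
        f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
        f"<|start_header_id|>user<|end_header_id|>\n\n{user}<|eot_id|>"
        # Leave the assistant header open so the model writes the reply next
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


def min_p_probs(logits, temperature=1.1, min_p=0.05):
    """Temperature-scale logits, softmax them, then apply a min_p cutoff.

    Assumption: temperature runs before min_p; some backends let you flip this.
    """
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    # Subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # min_p drops any token less likely than min_p times the top token's probability
    cutoff = min_p * max(probs)
    kept = [p if p >= cutoff else 0.0 for p in probs]
    # The top token always survives the cutoff, so this sum is never zero
    kept_total = sum(kept)
    return [p / kept_total for p in kept]


def sample(probs, rng=random):
    """Draw one token index according to probs."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    prompt = llama3_prompt("You are a helpful assistant.", "Hi!")
    print(prompt)
    # Toy logits for a 4-token vocabulary, using the recommended settings
    probs = min_p_probs([5.0, 4.0, 1.0, -2.0])
    print([round(p, 3) for p in probs])
    print(sample(probs, random.Random(0)))
```

With min_p at 0.05, a token survives only if it is at least 5% as likely as the top token, so the cutoff tightens when the model is confident and loosens when it isn't. That is the usual argument for pairing min_p with a temperature above 1.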

```
Training time in total was ~22 hours on an 8xH100 node.
Then ~3 hours were spent on merging checkpoints and model experimentation on a 2xH200 node.
```
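
For a rough sense of compute, the two stages convert to GPU-hours as below (approximate, assuming every GPU on each node was in use for the full duration):

$$
\begin{aligned}
22\ \text{h} \times 8\ \text{GPUs} &\approx 176\ \text{H100 GPU-hours} \\
3\ \text{h} \times 2\ \text{GPUs} &\approx 6\ \text{H200 GPU-hours}
\end{aligned}
$$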

https://sao10k.carrd.co/ for contact.

---