Upload 5 files

- README.md +50 -6

- media/xlam-bfcl.png +0 -0

- media/xlam-toolbench.png +0 -0

- media/xlam-unified_toolquery.png +0 -0

- media/xlam-webshop_toolquery.png +0 -0

README.md

CHANGED

|

@@ -26,9 +26,10 @@ tags:

|

|

| 26 |

<img width="500px" alt="xLAM" src="https://huggingface.co/datasets/jianguozhang/logos/resolve/main/xlam-no-background.png">

|

| 27 |

</p>

|

| 28 |

<p align="center">

|

| 29 |

-

<a href="">[Homepage]</a> |

|

| 30 |

-

<a href="">[Paper]</a> |

|

| 31 |

-

<a href="https://github.com/SalesforceAIResearch/xLAM">[Github]</a>

|

|

|

|

| 32 |

<a href="https://huggingface.co/spaces/Tonic/Salesforce-Xlam-7b-r">[Community Demo]</a>

|

| 33 |

</p>

|

| 34 |

<hr>

|

|

@@ -419,12 +420,55 @@ Output:

|

|

| 419 |

{"thought": "", "tool_calls": [{"name": "get_earthquake_info", "arguments": {"location": "California"}}]}

|

| 420 |

````

|

| 421 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 422 |

|

| 423 |

## License

|

| 424 |

The model is distributed under the CC-BY-NC-4.0 license.

|

| 425 |

|

| 426 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 427 |

|

| 428 |

-

If you find this repo helpful, please cite our paper:

|

| 429 |

```bibtex

|

| 430 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

<img width="500px" alt="xLAM" src="https://huggingface.co/datasets/jianguozhang/logos/resolve/main/xlam-no-background.png">

|

| 27 |

</p>

|

| 28 |

<p align="center">

|

| 29 |

+

<a href="https://www.salesforceairesearch.com/projects/xlam-large-action-models">[Homepage]</a> |

|

| 30 |

+

<a href="https://arxiv.org/abs/2409.03215">[Paper]</a> |

|

| 31 |

+

<a href="https://github.com/SalesforceAIResearch/xLAM">[Github]</a> |

|

| 32 |

+

<a href="https://blog.salesforceairesearch.com/large-action-model-ai-agent/">[Blog]</a> |

|

| 33 |

<a href="https://huggingface.co/spaces/Tonic/Salesforce-Xlam-7b-r">[Community Demo]</a>

|

| 34 |

</p>

|

| 35 |

<hr>

|

|

|

|

| 420 |

{"thought": "", "tool_calls": [{"name": "get_earthquake_info", "arguments": {"location": "California"}}]}

|

| 421 |

````

|

| 422 |

|

| 423 |

+

## Benchmark Results

|

| 424 |

+

Note: **Bold** and <u>Underline</u> results denote the best result and the second best result for Success Rate, respectively.

|

| 425 |

+

|

| 426 |

+

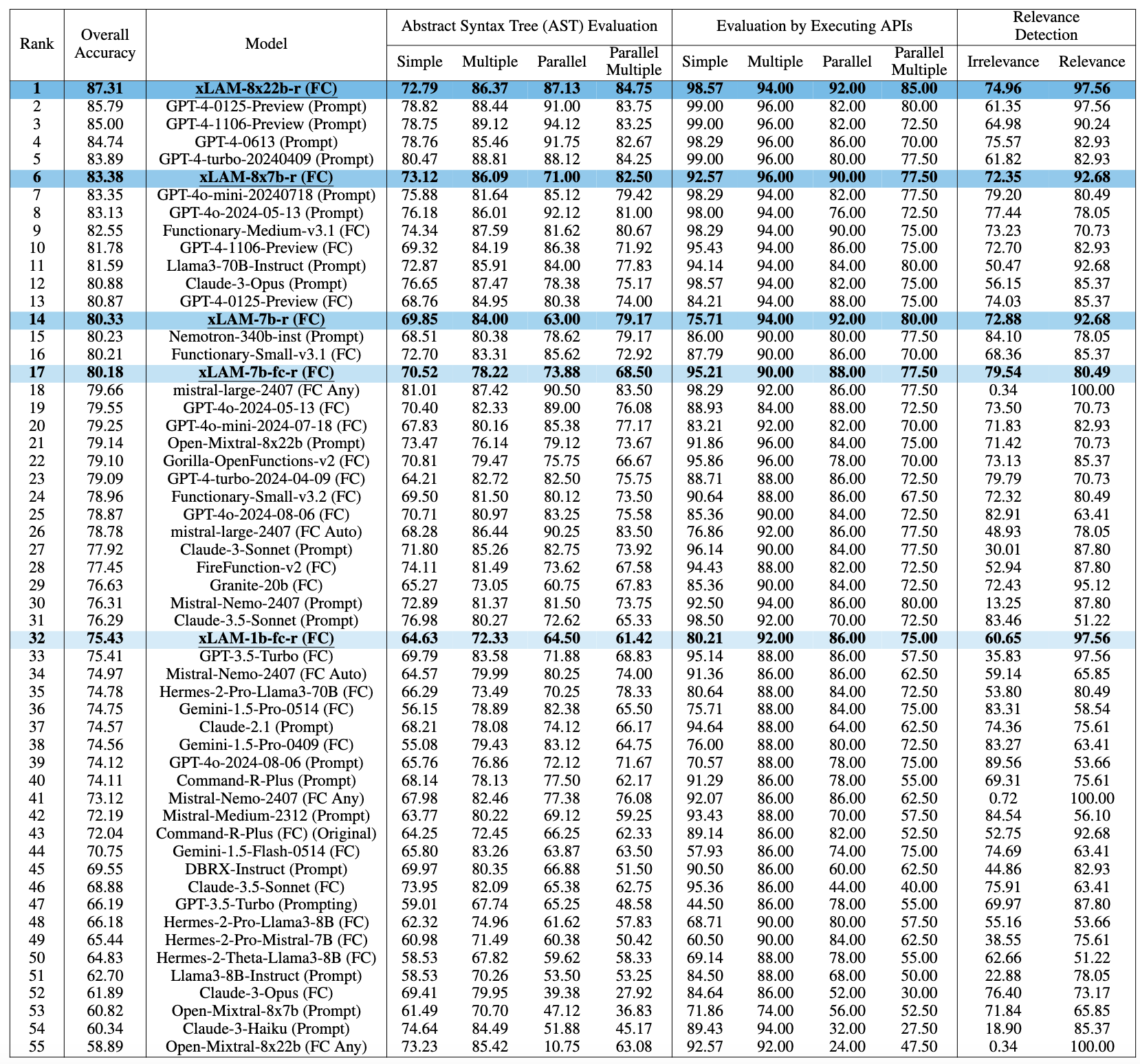

### Berkeley Function-Calling Leaderboard (BFCL)

|

| 427 |

+

|

| 428 |

+

*Table 1: Performance comparison on BFCL-v2 leaderboard (cutoff date 09/03/2024). The rank is based on the overall accuracy, which is a weighted average of different evaluation categories. "FC" stands for function-calling mode in contrast to using a customized "prompt" to extract the function calls.*

|

| 429 |

+

|

| 430 |

+

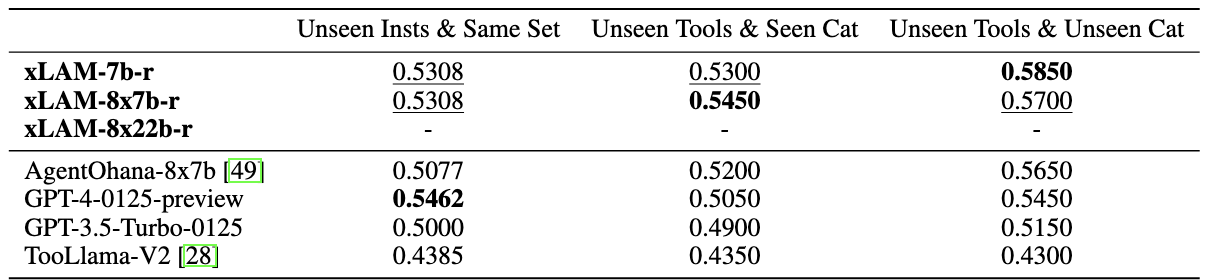

### Webshop and ToolQuery

|

| 431 |

+

|

| 432 |

+

*Table 2: Testing results on Webshop and ToolQuery. Bold and Underline results denote the best result and the second best result for Success Rate, respectively.*

|

| 433 |

+

|

| 434 |

+

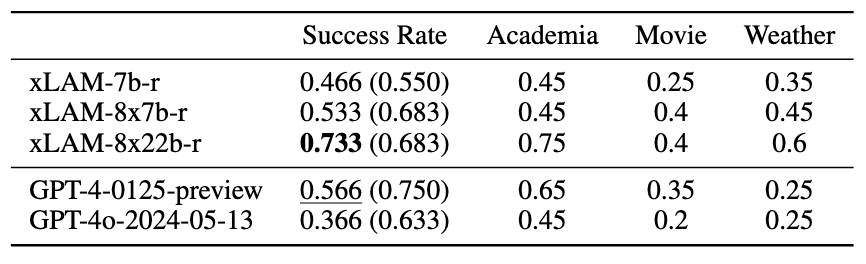

### Unified ToolQuery

|

| 435 |

+

|

| 436 |

+

*Table 3: Testing results on ToolQuery-Unified. Bold and Underline results denote the best result and the second best result for Success Rate, respectively. Values in brackets indicate corresponding performance on ToolQuery*

|

| 437 |

+

|

| 438 |

+

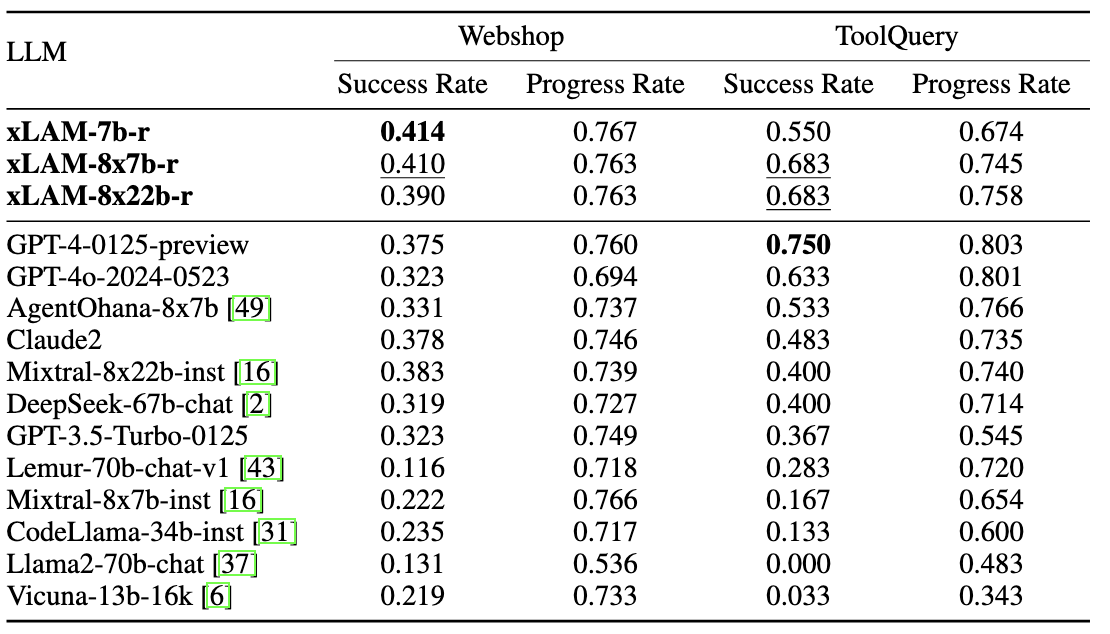

### ToolBench

|

| 439 |

+

|

| 440 |

+

*Table 4: Pass Rate on ToolBench on three distinct scenarios. Bold and Underline results denote the best result and the second best result for each setting, respectively. The results for xLAM-8x22b-r are unavailable due to the ToolBench server being down between 07/28/2024 and our evaluation cutoff date 09/03/2024.*

|

| 441 |

|

| 442 |

## License

|

| 443 |

The model is distributed under the CC-BY-NC-4.0 license.

|

| 444 |

|

| 445 |

+

## Citation

|

| 446 |

+

|

| 447 |

+

If you find this repo helpful, please consider to cite our papers:

|

| 448 |

+

|

| 449 |

+

```bibtex

|

| 450 |

+

@article{zhang2024xlam,

|

| 451 |

+

title={xLAM: A Family of Large Action Models to Empower AI Agent Systems},

|

| 452 |

+

author={Zhang, Jianguo and Lan, Tian and Zhu, Ming and Liu, Zuxin and Hoang, Thai and Kokane, Shirley and Yao, Weiran and Tan, Juntao and Prabhakar, Akshara and Chen, Haolin and others},

|

| 453 |

+

journal={arXiv preprint arXiv:2409.03215},

|

| 454 |

+

year={2024}

|

| 455 |

+

}

|

| 456 |

+

```

|

| 457 |

+

|

| 458 |

+

```bibtex

|

| 459 |

+

@article{liu2024apigen,

|

| 460 |

+

title={Apigen: Automated pipeline for generating verifiable and diverse function-calling datasets},

|

| 461 |

+

author={Liu, Zuxin and Hoang, Thai and Zhang, Jianguo and Zhu, Ming and Lan, Tian and Kokane, Shirley and Tan, Juntao and Yao, Weiran and Liu, Zhiwei and Feng, Yihao and others},

|

| 462 |

+

journal={arXiv preprint arXiv:2406.18518},

|

| 463 |

+

year={2024}

|

| 464 |

+

}

|

| 465 |

+

```

|

| 466 |

|

|

|

|

| 467 |

```bibtex

|

| 468 |

+

@article{zhang2024agentohana,

|

| 469 |

+

title={AgentOhana: Design Unified Data and Training Pipeline for Effective Agent Learning},

|

| 470 |

+

author={Zhang, Jianguo and Lan, Tian and Murthy, Rithesh and Liu, Zhiwei and Yao, Weiran and Tan, Juntao and Hoang, Thai and Yang, Liangwei and Feng, Yihao and Liu, Zuxin and others},

|

| 471 |

+

journal={arXiv preprint arXiv:2402.15506},

|

| 472 |

+

year={2024}

|

| 473 |

+

}

|

| 474 |

+

```

|

media/xlam-bfcl.png

ADDED

media/xlam-toolbench.png

ADDED

media/xlam-unified_toolquery.png

ADDED

media/xlam-webshop_toolquery.png

ADDED