cca14b0c63baf918c6151d0a13b34ea0775182297a000f6fa6c77b1362141c1f

- base_results.json +19 -0
- plots.png +0 -0
- smashed_results.json +19 -0

base_results.json

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

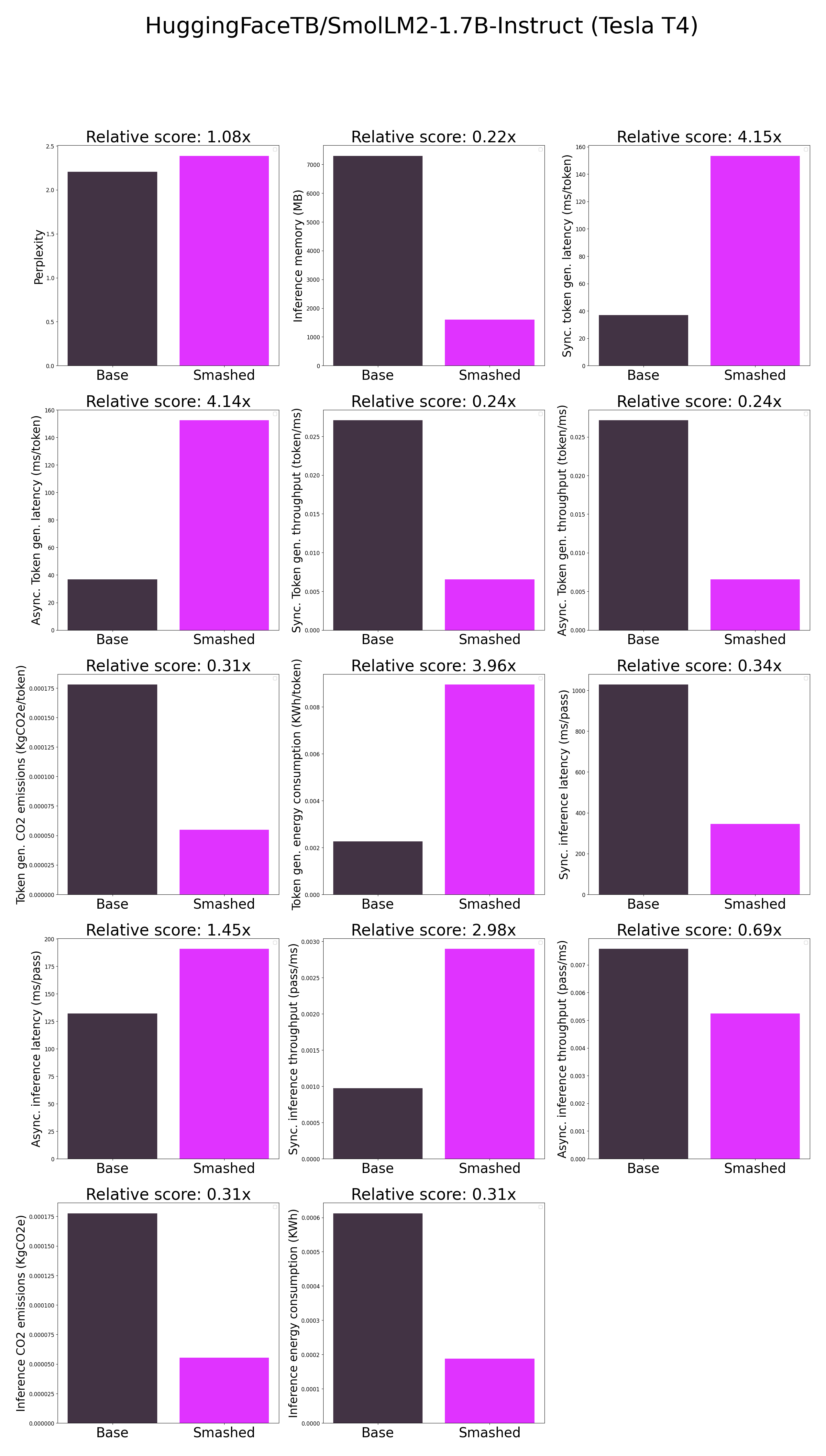

"current_gpu_type": "Tesla T4",

|

| 3 |

+

"current_gpu_total_memory": 15095.0625,

|

| 4 |

+

"perplexity": 2.2062017917633057,

|

| 5 |

+

"memory_inference_first": 7296.0,

|

| 6 |

+

"memory_inference": 7296.0,

|

| 7 |

+

"token_generation_latency_sync": 36.912679481506345,

|

| 8 |

+

"token_generation_latency_async": 36.8165610358119,

|

| 9 |

+

"token_generation_throughput_sync": 0.02709096207716405,

|

| 10 |

+

"token_generation_throughput_async": 0.027161689518673086,

|

| 11 |

+

"token_generation_CO2_emissions": 0.00017793332995351462,

|

| 12 |

+

"token_generation_energy_consumption": 0.0022603336588687913,

|

| 13 |

+

"inference_latency_sync": 1028.5993484497071,

|

| 14 |

+

"inference_latency_async": 132.02483654022217,

|

| 15 |

+

"inference_throughput_sync": 0.0009721958326215045,

|

| 16 |

+

"inference_throughput_async": 0.007574332422637341,

|

| 17 |

+

"inference_CO2_emissions": 0.00017765837549938682,

|

| 18 |

+

"inference_energy_consumption": 0.0006121215726133666

|

| 19 |

+

}

|

plots.png

ADDED


smashed_results.json

ADDED

@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 2.3863821029663086,
+    "memory_inference_first": 1598.0,
+    "memory_inference": 1598.0,
+    "token_generation_latency_sync": 153.33177795410157,
+    "token_generation_latency_async": 152.51875221729279,
+    "token_generation_throughput_sync": 0.0065218052861771465,
+    "token_generation_throughput_async": 0.006556570818093925,
+    "token_generation_CO2_emissions": 5.481785809408624e-05,
+    "token_generation_energy_consumption": 0.008956993263524471,
+    "inference_latency_sync": 345.3065460205078,
+    "inference_latency_async": 190.85931777954102,
+    "inference_throughput_sync": 0.002895977535104735,
+    "inference_throughput_async": 0.005239461251533375,
+    "inference_CO2_emissions": 5.5271057652387143e-05,
+    "inference_energy_consumption": 0.00018832182125658322
+}
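
The two result files share the same keys, so they can be compared field by field. The sketch below is one possible way to do that, not part of the commit: it copies a few values from the files above and computes the smashed-to-base ratio for each. The helper name `ratios` is hypothetical, and since the files do not state units, only unitless ratios are reported.

```python
# Values copied from base_results.json and smashed_results.json in this commit.
# Only a subset of keys is shown; units are not stated in the files.
base = {
    "perplexity": 2.2062017917633057,
    "memory_inference": 7296.0,
    "token_generation_latency_sync": 36.912679481506345,
    "inference_latency_sync": 1028.5993484497071,
}
smashed = {
    "perplexity": 2.3863821029663086,
    "memory_inference": 1598.0,
    "token_generation_latency_sync": 153.33177795410157,
    "inference_latency_sync": 345.3065460205078,
}


def ratios(base, smashed):
    """Return smashed/base for every key present in both dicts (hypothetical helper)."""
    return {key: smashed[key] / base[key] for key in base if key in smashed}


if __name__ == "__main__":
    for key, ratio in ratios(base, smashed).items():
        print(f"{key}: {ratio:.2f}x of base")
```

With these numbers, the smashed run reports roughly 0.22x the inference memory of the base run, while its synchronous token generation latency is roughly 4.15x higher and its perplexity is slightly higher. To compare the full files instead of the copied subset, the dictionaries could be loaded with `json.load` from `base_results.json` and `smashed_results.json`, skipping the non-numeric `current_gpu_type` field.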