Alok Pandey

committed on

Update README.md

README.md

CHANGED

|

@@ -1,3 +1,102 @@

|

|

| 1 |

-

---

|

| 2 |

-

|

| 3 |

-

|

|

| 1 |

+

---

|

| 2 |

+

language: en

|

| 3 |

+

license: mit

|

| 4 |

+

tags:

|

| 5 |

+

- image-classification

|

| 6 |

+

- densenet

|

| 7 |

+

- ai-generated-content

|

| 8 |

+

- human-created-content

|

| 9 |

+

- model-card

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

# **Model Card: Fine-tuned DenseNet for Image Classification**

|

| 13 |

+

|

| 14 |

+

## **Model Overview**

|

| 15 |

+

This fine-tuned **DenseNet121** model is designed to classify images into the following categories:

|

| 16 |

+

1. **DALL-E Generated Images**

|

| 17 |

+

2. **Human-Created Images**

|

| 18 |

+

3. **Other AI-Generated Images**

|

| 19 |

+

|

| 20 |

+

The model is intended for detecting AI-generated content and is particularly useful in creative fields such as art and design.

|

| 21 |

+

|

| 22 |

+

---

|

| 23 |

+

|

| 24 |

+

## **Use Cases**

|

| 25 |

+

- **AI Art Detection**: Identifies whether an image was generated by AI or created by a human.

|

| 26 |

+

- **Content Moderation**: Useful in media, art, and design industries where distinguishing AI-generated content is essential.

|

| 27 |

+

- **Educational Purposes**: Useful for exploring the differences between AI-generated and human-created content.

|

| 28 |

+

|

| 29 |

+

---

|

| 30 |

+

|

| 31 |

+

## **Model Performance**

|

| 32 |

+

- **Accuracy**: **95%** on the validation dataset.

|

| 33 |

+

- **Loss**: **0.0552** after 15 epochs of training.

|

| 34 |

+

|

| 35 |

+

---

|

| 36 |

+

|

| 37 |

+

## **Training Details**

|

| 38 |

+

- **Base Model**: DenseNet121, pretrained on ImageNet.

|

| 39 |

+

- **Optimizer**: Adam with a learning rate of 0.0001.

|

| 40 |

+

- **Loss Function**: Cross-Entropy Loss.

|

| 41 |

+

- **Batch Size**: 32

|

| 42 |

+

- **Epochs**: 15

|

| 43 |

+

|

| 44 |

+

The model was fine-tuned using data augmentation techniques like random flips, rotations, and color jittering to improve robustness.

|

| 45 |

+

|

| 46 |

+

---

|

| 47 |

+


|

| 48 |

+

|

| 49 |

+


|

| 50 |

+

|

| 51 |

+

---

|

| 52 |

+

|

| 53 |

+

## **Training Metrics**

|

| 54 |

+

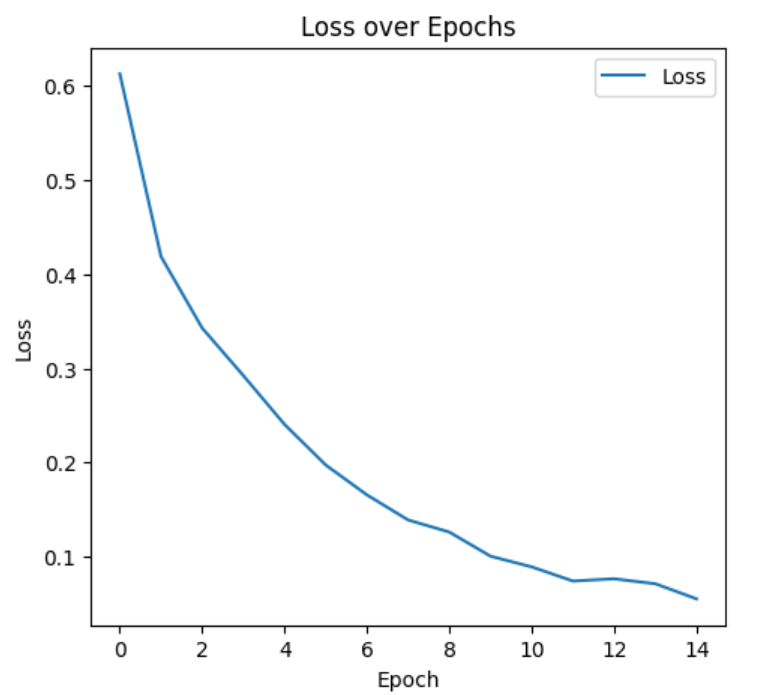

### **1. Loss Over Epochs**

|

| 55 |

+

|

| 56 |

+

This graph shows the decrease in loss over 15 epochs, indicating the model's improved ability to fit the data.

|

| 57 |

+

|

| 58 |

+

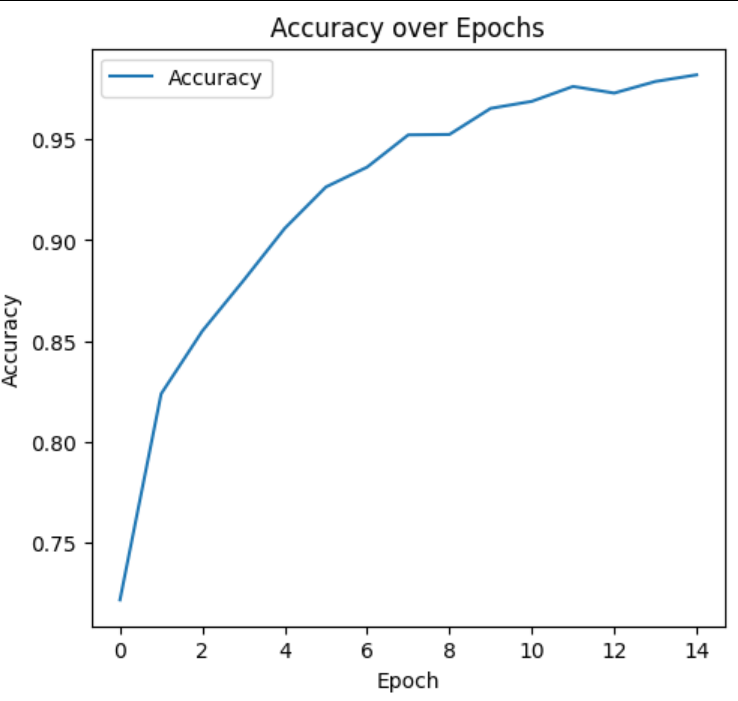

### **2. Accuracy Over Epochs**

|

| 59 |

+

|

| 60 |

+

This graph shows the increase in accuracy, reflecting the model's growing ability to correctly classify images.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

## **Model Output Samples**

|

| 64 |

+

Here are some examples of the model's predictions on various images:

|

| 65 |

+

|

| 66 |

+

#### Sample 1: Human-Created Image

|

| 67 |

+

|

| 68 |

+

*Predicted: Human-Created*

|

| 69 |

+

|

| 70 |

+

#### Sample 2: DALL-E Generated Image

|

| 71 |

+

|

| 72 |

+

*Predicted: DALL-E Generated*

|

| 73 |

+

|

| 74 |

+

#### Sample 3: Other AI-Generated Image

|

| 75 |

+

|

| 76 |

+

*Predicted: Other AI-Generated*
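
A prediction label like the ones above is typically obtained by taking the class with the highest softmax probability. The sketch below uses only the Python standard library and made-up logits; the class index order is an assumption (it follows the order of the list in the Model Overview), and `predict_label` is a hypothetical helper.

```python
import math

# Assumption: class indices follow the order listed in the Model Overview.
CLASS_NAMES = ["DALL-E Generated", "Human-Created", "Other AI-Generated"]


def softmax(logits):
    """Convert raw scores to probabilities (numerically stable form)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs[best]


# Made-up logits for illustration only; real values come from the model.
label, confidence = predict_label([0.3, 2.1, -1.0])
print(f"Predicted: {label} ({confidence:.2f})")
```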

|

| 77 |

+

|

| 78 |

+

---

|

| 79 |

+

|

| 80 |

+

## **Model Architecture**

|

| 81 |

+

- **Feature Extractor**: DenseNet121 with frozen layers to retain general features from ImageNet.

|

| 82 |

+

- **Classifier**: A fully connected layer with 3 output nodes, one for each class (DALL-E, Human-Created, Other AI).

|

| 83 |

+

|

| 84 |

+

---

|

| 85 |

+

|

| 86 |

+

## **Limitations**

|

| 87 |

+

- **Data Bias**: The model's performance depends on the balance and diversity of the training dataset.

|

| 88 |

+

- **Generalization**: Further testing on more diverse datasets is recommended to validate the model’s performance across different domains and types of images.

|

| 89 |

+

|

| 90 |

+

---

|

| 91 |

+

|

| 92 |

+

## **Model Download**

|

| 93 |

+

You can download the fine-tuned DenseNet121 model using the following link:

|

| 94 |

+

[**Download the Model**](https://huggingface.co/alokpandey/DenseNet-DH3Classifier/resolve/main/densenet_finetuned_dense.pth)

|

| 95 |

+

|

| 96 |

+

---

|

| 97 |

+

|

| 98 |

+

## **References**

|

| 99 |

+

For more information on DenseNet, refer to the original research paper:

|

| 100 |

+

[**Densely Connected Convolutional Networks (DenseNet)**](https://arxiv.org/abs/1608.06993)

|

| 101 |

+

|

| 102 |

+

---

|