Upload folder using huggingface_hub

This view is limited to 50 files because the commit contains too many changes.

- .gitattributes +1 -0
- 8.JPG +3 -0
- HackMercedIXRunThrough.glb +0 -0
- README.md +2 -8
- __pycache__/themebuilder.cpython-312.pyc +0 -0
- awanai.py +234 -0
- awantest.py +23 -0
- ckpt_022-vloss_0.1756_vf1_0.7919.ckpt +3 -0
- requirements.txt +5 -0
- samples/samples_10.png +0 -0
- samples/samples_10267.png +0 -0
- samples/samples_10423.png +0 -0
- samples/samples_116.png +0 -0
- samples/samples_11603.png +0 -0
- samples/samples_13698.png +0 -0
- samples/samples_14311.png +0 -0
- samples/samples_14546.png +0 -0
- samples/samples_15528.png +0 -0
- samples/samples_15561.png +0 -0
- samples/samples_16150.png +0 -0
- samples/samples_16312.png +0 -0
- samples/samples_16411.png +0 -0
- samples/samples_16621.png +0 -0
- samples/samples_17289.png +0 -0
- samples/samples_19682.png +0 -0
- samples/samples_19884.png +0 -0
- samples/samples_203.png +0 -0
- samples/samples_21602.png +0 -0
- samples/samples_21920.png +0 -0
- samples/samples_22594.png +0 -0
- samples/samples_23625.png +0 -0
- samples/samples_24.png +0 -0
- samples/samples_24136.png +0 -0
- samples/samples_24715.png +0 -0
- samples/samples_24817.png +0 -0
- samples/samples_25140.png +0 -0
- samples/samples_2563.png +0 -0
- samples/samples_25826.png +0 -0
- samples/samples_26591.png +0 -0
- samples/samples_2694.png +0 -0
- samples/samples_27926.png +0 -0
- samples/samples_28.png +0 -0
- samples/samples_28661.png +0 -0
- samples/samples_28983.png +0 -0
- samples/samples_30258.png +0 -0
- samples/samples_30809.png +0 -0
- samples/samples_3282.png +0 -0
- samples/samples_3665.png +0 -0
- samples/samples_381.png +0 -0
- samples/samples_4595.png +0 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+8.JPG filter=lfs diff=lfs merge=lfs -text
```
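Git LFS uses these attribute patterns to decide, for each path, whether the file is stored as a pointer. The sketch below approximates that check for the four patterns visible in this hunk. It is an illustration, not Git's actual matcher. It assumes that running `fnmatch.fnmatchcase` against the basename is close enough for slash-free patterns like these. It also ignores the 32 earlier lines of `.gitattributes`, which this diff does not show and which presumably cover other extensions such as the `.ckpt` checkpoint.

```python
from fnmatch import fnmatchcase

# Patterns taken from lines 33-36 of .gitattributes as shown in the hunk above.
# Lines 1-32 are not part of this diff and are deliberately left out.
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "8.JPG"]


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate Git LFS tracking for slash-free attribute patterns.

    A gitattributes pattern without a slash matches the file's basename at
    any directory depth. fnmatchcase keeps the comparison case-sensitive
    (assumption: core.ignorecase is off). Negation, '**' and
    directory-anchored patterns are not handled by this sketch.
    """
    basename = path.rsplit("/", 1)[-1]
    return any(fnmatchcase(basename, pattern) for pattern in patterns)


for name in ["8.JPG", "8.jpg", "awanai.py", "logs/events.out.tfevents.1"]:
    print(f"{name}: {is_lfs_tracked(name)}")
```

The new `8.JPG` rule is an exact filename, so a differently cased copy such as `8.jpg` would not be routed through LFS by this line.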

8.JPG

ADDED

Binary image, stored with Git LFS.

HackMercedIXRunThrough.glb

ADDED

Binary file (40.6 kB).

README.md

CHANGED

|

@@ -1,12 +1,6 @@

|

|

| 1 |

---

|

| 2 |

title: Awan.AI

|

| 3 |

-

|

| 4 |

-

colorFrom: indigo

|

| 5 |

-

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 4.

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

| 11 |

-

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

title: Awan.AI

|

| 3 |

+

app_file: awanai.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 4.24.0

|

|

|

|

|

|

|

| 6 |

---

|

|

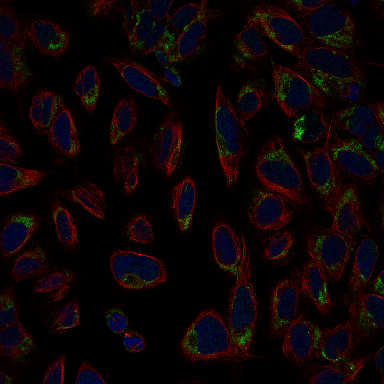

|

|

|

|
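After this change, the Space's front matter selects `awanai.py` as the entry point instead of `app.py` and pins Gradio 4.24.0. The hypothetical helper below is a quick sanity check that reads the flat `key: value` pairs between the `---` markers. It is deliberately minimal and assumes no nested YAML, lists, or quoted values. Use a real YAML parser for anything beyond that.

```python
README_HEADER = """---
title: Awan.AI
app_file: awanai.py
sdk: gradio
sdk_version: 4.24.0
---
"""


def parse_front_matter(text: str) -> dict:
    """Read flat 'key: value' pairs between the opening and closing '---' lines."""
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    config = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        # Split on the first colon only, so values may contain colons.
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()
    return config


config = parse_front_matter(README_HEADER)
print(config["app_file"], config["sdk"], config["sdk_version"])
```

All values come back as strings. A real YAML loader may type some of them differently, so an unquoted version-like value could be parsed as something other than a string.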

__pycache__/themebuilder.cpython-312.pyc

ADDED

Binary file (266 Bytes).

awanai.py

ADDED

@@ -0,0 +1,234 @@

```python
import os
import numpy as np
import gradio as gr
from glob import glob
from functools import partial
from dataclasses import dataclass

import torch
import torchvision
import torch.nn as nn
import lightning.pytorch as pl
import torchvision.transforms as TF

from torchmetrics import MeanMetric
from torchmetrics.classification import MultilabelF1Score


@dataclass
class DatasetConfig:
    IMAGE_SIZE: tuple = (384, 384) # (W, H)
    CHANNELS: int = 3
    NUM_CLASSES: int = 10
    MEAN: tuple = (0.485, 0.456, 0.406)
    STD: tuple = (0.229, 0.224, 0.225)


@dataclass
class TrainingConfig:
    METRIC_THRESH: float = 0.4
    MODEL_NAME: str = "efficientnet_v2_s"
    FREEZE_BACKBONE: bool = False


def get_model(model_name: str, num_classes: int, freeze_backbone: bool = True):
    """A helper function to load and prepare any classification model
    available in Torchvision for transfer learning or fine-tuning."""

    model = getattr(torchvision.models, model_name)(weights="DEFAULT")

    if freeze_backbone:
        # Set all layer to be non-trainable
        for param in model.parameters():
            param.requires_grad = False

    model_childrens = [name for name, _ in model.named_children()]

    try:
        final_layer_in_features = getattr(model, f"{model_childrens[-1]}")[-1].in_features
    except Exception as e:
        final_layer_in_features = getattr(model, f"{model_childrens[-1]}").in_features

    new_output_layer = nn.Linear(in_features=final_layer_in_features, out_features=num_classes)

    try:
        getattr(model, f"{model_childrens[-1]}")[-1] = new_output_layer
    except:
        setattr(model, model_childrens[-1], new_output_layer)

    return model


class ProteinModel(pl.LightningModule):
    def __init__(
        self,
        model_name: str,
        num_classes: int = 10,
        freeze_backbone: bool = False,
        init_lr: float = 0.001,
        optimizer_name: str = "Adam",
        weight_decay: float = 1e-4,
        use_scheduler: bool = False,
        f1_metric_threshold: float = 0.4,
    ):
        super().__init__()

        # Save the arguments as hyperparameters.
        self.save_hyperparameters()

        # Loading model using the function defined above.
        self.model = get_model(
            model_name=self.hparams.model_name,
            num_classes=self.hparams.num_classes,
            freeze_backbone=self.hparams.freeze_backbone,
        )

        # Intialize loss class.
        self.loss_fn = nn.BCEWithLogitsLoss()

        # Initializing the required metric objects.
        self.mean_train_loss = MeanMetric()
        self.mean_train_f1 = MultilabelF1Score(num_labels=self.hparams.num_classes, average="macro", threshold=self.hparams.f1_metric_threshold)
        self.mean_valid_loss = MeanMetric()
        self.mean_valid_f1 = MultilabelF1Score(num_labels=self.hparams.num_classes, average="macro", threshold=self.hparams.f1_metric_threshold)

    def forward(self, x):
        return self.model(x)

    def training_step(self, batch, *args, **kwargs):
        data, target = batch
        logits = self(data)
        loss = self.loss_fn(logits, target)

        self.mean_train_loss(loss, weight=data.shape[0])
        self.mean_train_f1(logits, target)

        self.log("train/batch_loss", self.mean_train_loss, prog_bar=True)
        self.log("train/batch_f1", self.mean_train_f1, prog_bar=True)
        return loss

    def on_train_epoch_end(self):
        # Computing and logging the training mean loss & mean f1.
        self.log("train/loss", self.mean_train_loss, prog_bar=True)
        self.log("train/f1", self.mean_train_f1, prog_bar=True)
        self.log("step", self.current_epoch)

    def validation_step(self, batch, *args, **kwargs):
        data, target = batch # Unpacking validation dataloader tuple
        logits = self(data)
        loss = self.loss_fn(logits, target)

        self.mean_valid_loss.update(loss, weight=data.shape[0])
        self.mean_valid_f1.update(logits, target)

    def on_validation_epoch_end(self):
        # Computing and logging the validation mean loss & mean f1.
        self.log("valid/loss", self.mean_valid_loss, prog_bar=True)
        self.log("valid/f1", self.mean_valid_f1, prog_bar=True)
        self.log("step", self.current_epoch)

    def configure_optimizers(self):
        optimizer = getattr(torch.optim, self.hparams.optimizer_name)(
            filter(lambda p: p.requires_grad, self.model.parameters()),
            lr=self.hparams.init_lr,
            weight_decay=self.hparams.weight_decay,
        )

        if self.hparams.use_scheduler:
            lr_scheduler = torch.optim.lr_scheduler.MultiStepLR(
                optimizer,
                milestones=[
                    self.trainer.max_epochs // 2,
                ],
                gamma=0.1,
            )

            # The lr_scheduler_config is a dictionary that contains the scheduler
            # and its associated configuration.
            lr_scheduler_config = {
                "scheduler": lr_scheduler,
                "interval": "epoch",
                "name": "multi_step_lr",
            }
            return {"optimizer": optimizer, "lr_scheduler": lr_scheduler_config}

        else:
            return optimizer


@torch.inference_mode()
def predict(input_image, threshold=0.4, model=None, preprocess_fn=None, device="cpu", idx2labels=None):
    input_tensor = preprocess_fn(input_image)
    input_tensor = input_tensor.unsqueeze(0).to(device)

    # Generate predictions
    output = model(input_tensor).cpu()

    probabilities = torch.sigmoid(output)[0].numpy().tolist()

    output_probs = dict()
    predicted_classes = []

    for idx, prob in enumerate(probabilities):
        output_probs[idx2labels[idx]] = prob
        if prob >= threshold:
            predicted_classes.append(idx2labels[idx])

    predicted_classes = "\n".join(predicted_classes)
    return predicted_classes, output_probs


if __name__ == "__main__":
    labels = {
        0: "Mitochondria",
        1: "Nuclear bodies",
        2: "Nucleoli",
        3: "Golgi apparatus",
        4: "Nucleoplasm",
        5: "Nucleoli fibrillar center",
        6: "Cytosol",
        7: "Plasma membrane",
        8: "Centrosome",
        9: "Nuclear speckles",
    }

    DEVICE = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")
    CKPT_PATH = os.path.join(os.getcwd(), r"ckpt_022-vloss_0.1756_vf1_0.7919.ckpt")
    model = ProteinModel.load_from_checkpoint(CKPT_PATH)
    model.to(DEVICE)
    model.eval()
    _ = model(torch.randn(1, DatasetConfig.CHANNELS, *DatasetConfig.IMAGE_SIZE[::-1], device=DEVICE))

    preprocess = TF.Compose(
        [
            TF.Resize(size=DatasetConfig.IMAGE_SIZE[::-1]),
            TF.ToTensor(),
            TF.Normalize(DatasetConfig.MEAN, DatasetConfig.STD, inplace=True),
        ]
    )

    images_dir = glob(os.path.join(os.getcwd(), "samples") + os.sep + "*.png")
    examples = [[i, TrainingConfig.METRIC_THRESH] for i in np.random.choice(images_dir, size=10, replace=False)]
    # print(examples)


    with gr.Interface(
        fn=partial(predict, model=model, preprocess_fn=preprocess, device=DEVICE, idx2labels=labels),
        inputs=[
            gr.Image(type="pil", label="Image"),
            gr.Slider(0.0, 1.0, value=0.4, label="Threshold", info="Select the cut-off threshold for a node to be considered as a valid output."),
        ],
        outputs=[
            gr.Textbox(label="Labels Present"),
            gr.Label(label="Probabilities", show_label=False),
        ],

        examples=examples,
        cache_examples=False,
        allow_flagging="never",
        title="Awan AI Medical Image Classification",
        theme=gr.themes.Soft(primary_hue="sky", secondary_hue="pink"),
    ) as iface:
        additional_inputs=[gr.Model3D(label="3D Model", value="./HackMercedIXRunThrough.glb", clear_color=[0.4, 0.2, 0.7, 1.0])]
    iface.launch(share=True)
```
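`predict` treats the task as multi-label. Each of the ten logits goes through its own sigmoid, which matches the `BCEWithLogitsLoss` used in training, so several compartments can clear the threshold at once. A softmax, by contrast, would force the classes to compete for a single winner. The plain-Python sketch below mirrors only that post-processing step, so it can be tried without PyTorch. The logits and the three-label mapping are made-up inputs, not model output.

```python
import math


def threshold_predictions(logits, idx2labels, threshold=0.4):
    """Plain-Python mirror of the post-processing in `predict`.

    Each logit gets its own sigmoid, and every label whose probability
    reaches `threshold` is kept. Returns the kept labels joined by newlines
    plus the full label -> probability mapping, the same pair `predict` returns.
    """
    probabilities = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    output_probs = {}
    predicted = []
    for idx, prob in enumerate(probabilities):
        output_probs[idx2labels[idx]] = prob
        if prob >= threshold:
            predicted.append(idx2labels[idx])
    return "\n".join(predicted), output_probs


# Hypothetical logits for a three-label subset of the app's label map.
labels = {0: "Mitochondria", 1: "Nuclear bodies", 2: "Nucleoli"}
text, probs = threshold_predictions([2.0, -3.0, 0.0], labels, threshold=0.4)
print(text)
print({name: round(p, 3) for name, p in probs.items()})
```

Lowering the slider in the UI only changes `threshold`. The probabilities shown in the `gr.Label` output are unaffected.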
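When `use_scheduler=True`, `configure_optimizers` sets a single `MultiStepLR` milestone at `max_epochs // 2` with `gamma=0.1`, and Lightning steps it once per epoch. Assuming that standard behaviour, the learning rate for a given 0-indexed epoch has a simple closed form, sketched below. `max_epochs = 20` is a hypothetical value, because the trainer settings are not part of this commit.

```python
from bisect import bisect_right


def multistep_lr(epoch: int, init_lr: float, milestones, gamma: float = 0.1) -> float:
    """Learning rate used during 0-indexed `epoch` under a MultiStepLR schedule.

    The base rate is multiplied by `gamma` once for every milestone already reached.
    """
    return init_lr * gamma ** bisect_right(sorted(milestones), epoch)


max_epochs = 20  # hypothetical; not part of this commit
milestones = [max_epochs // 2]  # same rule as configure_optimizers
schedule = [multistep_lr(e, 0.001, milestones) for e in range(max_epochs)]
print(schedule[9], schedule[10])
```

With the default `init_lr=0.001`, the first half of training runs at 1e-3 and the second half at 1e-4.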
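`training_step` and `validation_step` both pass the per-batch mean loss to `MeanMetric` with `weight=data.shape[0]`. As a result, the epoch loss is averaged over samples rather than over batches, which matters when the last batch is smaller than the others. The stdlib sketch below computes that weighted mean. The batch sizes and losses are made up.

```python
def weighted_epoch_mean(batch_means, batch_sizes):
    """Sample-weighted mean of per-batch mean losses.

    Equivalent to averaging the loss over every individual sample, assuming
    each batch value is itself a mean over `batch_size` samples.
    """
    total = sum(mean * size for mean, size in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Made-up epoch: two full batches of 32 and a final partial batch of 8.
losses = [0.2, 0.4, 1.0]
sizes = [32, 32, 8]
print(round(weighted_epoch_mean(losses, sizes), 4))  # sample-weighted
print(round(sum(losses) / len(losses), 4))  # naive batch average, for contrast
```

In this example, the small, high-loss final batch pulls the naive average noticeably higher than the sample-weighted value.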

awantest.py

ADDED

@@ -0,0 +1,23 @@

```python
import gradio as gr
import numpy as np

def awan(img):
    sepia_filter = np.array([
        [.001, .001, .001],
        [.001, .0, .001],
        [.001, .001, .001]])

    sepia_img = img.dot(sepia_filter.T)
    sepia_img /= sepia_img.max()

    output_txt = "You might be sick"
    return (sepia_img, output_txt)

awan = gr.Interface(
    fn = awan,
    inputs = gr.Image(label="Upload image or take photo here"),
    outputs = ["image", "text"], title="output image and analysis result",
    examples = ["8.JPG"],
    live = True,
    description = "Input image to get analysis"
).launch(share=True,debug=True, auth=("u", "p"), auth_message="Username is \"u\" and Password is \"p\"")
```
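Despite its name, the `sepia_filter` matrix in `awantest.py` is not a sepia transform. Each output channel is 0.001 times a sum of input channels (the green row skips the green input). The `/= max()` step then cancels the 0.001 scale entirely. The pure-Python sketch below reproduces `img.dot(sepia_filter.T)` followed by max-normalization on a hypothetical 1 x 2 image. With this matrix, a pure green pixel comes out magenta.

```python
# Same 3x3 matrix as `sepia_filter` in awantest.py.
FILTER = [
    [0.001, 0.001, 0.001],
    [0.001, 0.0, 0.001],
    [0.001, 0.001, 0.001],
]


def apply_color_matrix(image, matrix):
    """Pure-Python equivalent of `img.dot(matrix.T)` for an H x W x 3 nested list.

    Output channel i of each pixel is sum_j matrix[i][j] * pixel[j].
    """
    return [
        [[sum(m * c for m, c in zip(row, pixel)) for row in matrix] for pixel in line]
        for line in image
    ]


def normalize_by_max(image):
    """Divide every value by the global maximum, like `sepia_img /= sepia_img.max()`.

    An all-black input has a zero maximum, which this sketch does not guard
    against (NumPy would produce NaNs there instead of raising).
    """
    peak = max(v for line in image for pixel in line for v in pixel)
    return [[[v / peak for v in pixel] for pixel in line] for line in image]


img = [[[255, 0, 0], [0, 255, 0]]]  # one red pixel, one green pixel
out = normalize_by_max(apply_color_matrix(img, FILTER))
print(out)
```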

ckpt_022-vloss_0.1756_vf1_0.7919.ckpt

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:8f0bb009e4d3c07380ed58b5078df7ec08f8adccd742b44aff99b4b35531300e
size 243578302
```
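The checkpoint is committed as a Git LFS pointer rather than as the weights themselves. The pointer has three `key value` lines: the spec version, the SHA-256 object id, and the size in bytes (243578302 bytes, roughly 244 MB). The minimal parser below handles exactly this layout. It assumes well-formed input and does not validate the spec's key-ordering rules.

```python
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:8f0bb009e4d3c07380ed58b5078df7ec08f8adccd742b44aff99b4b35531300e
size 243578302
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line of an LFS pointer and decode oid and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algorithm": algorithm,
        "oid": digest,
        "size_bytes": int(fields["size"]),
    }


info = parse_lfs_pointer(POINTER)
print(info["oid_algorithm"], info["size_bytes"], f"{info['size_bytes'] / 1e6:.1f} MB")
```

If `awanai.py` fails in `load_from_checkpoint`, one likely cause is that the file on disk is still this small pointer because the LFS object was never fetched.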

requirements.txt

ADDED

@@ -0,0 +1,5 @@

```text
--find-links https://download.pytorch.org/whl/torch_stable.html
# torch==2.0.0+cpu
torchvision==0.15.0
lightning==2.0.1
torchmetrics==1.0.0
```
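Because `torch` is commented out, this file does not pin it directly. It is expected to arrive as a dependency of `torchvision==0.15.0`, since torchvision releases normally require a matching torch release. `gradio` is also absent; on a Space it is provided by the `sdk`/`sdk_version` front matter in `README.md`. The hypothetical helper below lists what is actually pinned. It understands only the simple `name==version` form used here.

```python
REQUIREMENTS = """--find-links https://download.pytorch.org/whl/torch_stable.html
# torch==2.0.0+cpu
torchvision==0.15.0
lightning==2.0.1
torchmetrics==1.0.0
"""


def parse_pins(text: str) -> dict:
    """Map package name -> pinned version (None if unpinned).

    Comments and pip option lines such as --find-links are skipped.
    """
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        name, sep, version = line.partition("==")
        pins[name.strip()] = version.strip() if sep else None
    return pins


pins = parse_pins(REQUIREMENTS)
print(pins)
print("torch pinned directly:", "torch" in pins)
```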

- samples/samples_10.png ADDED
- samples/samples_10267.png ADDED
- samples/samples_10423.png ADDED
- samples/samples_116.png ADDED
- samples/samples_11603.png ADDED
- samples/samples_13698.png ADDED
- samples/samples_14311.png ADDED
- samples/samples_14546.png ADDED
- samples/samples_15528.png ADDED
- samples/samples_15561.png ADDED
- samples/samples_16150.png ADDED
- samples/samples_16312.png ADDED
- samples/samples_16411.png ADDED
- samples/samples_16621.png ADDED
- samples/samples_17289.png ADDED
- samples/samples_19682.png ADDED
- samples/samples_19884.png ADDED
- samples/samples_203.png ADDED
- samples/samples_21602.png ADDED
- samples/samples_21920.png ADDED
- samples/samples_22594.png ADDED
- samples/samples_23625.png ADDED
- samples/samples_24.png ADDED
- samples/samples_24136.png ADDED
- samples/samples_24715.png ADDED
- samples/samples_24817.png ADDED
- samples/samples_25140.png ADDED
- samples/samples_2563.png ADDED
- samples/samples_25826.png ADDED
- samples/samples_26591.png ADDED
- samples/samples_2694.png ADDED
- samples/samples_27926.png ADDED
- samples/samples_28.png ADDED
- samples/samples_28661.png ADDED
- samples/samples_28983.png ADDED
- samples/samples_30258.png ADDED
- samples/samples_30809.png ADDED
- samples/samples_3282.png ADDED
- samples/samples_3665.png ADDED
- samples/samples_381.png ADDED
- samples/samples_4595.png ADDED