Commit 760cec5 · 0 parent(s)

New release

- .dockerignore +6 -0

- .gitattributes +3 -0

- .gitignore +49 -0

- .streamlit/config.toml +2 -0

- Dockerfile +24 -0

- README.md +50 -0

- demo.gif +3 -0

- demo.png +3 -0

- logo.png +3 -0

- requirements.txt +5 -0

- streamlit_app.py +232 -0

.dockerignore

ADDED

@@ -0,0 +1,6 @@
+.git
+__pycache__/
+*.py[cod]
+*.stl
+*.3mf
+demo.png

.gitattributes

ADDED

@@ -0,0 +1,3 @@
+demo.png filter=lfs diff=lfs merge=lfs -text
+logo.png filter=lfs diff=lfs merge=lfs -text
+*.gif filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,49 @@
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+download/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+*.egg-info/
+.installed.cfg
+*.egg
+
+# Virtual environments
+.venv/
+env/
+venv/
+
+# IDEs and editors
+.vscode/
+.idea/
+*.sublime-project
+*.sublime-workspace
+
+# OS generated files
+.DS_Store
+Thumbs.db
+
+# Logs and databases
+*.log
+*.sqlite3
+
+# Environment files
+.env
+
+# Mac system files
+._*

.streamlit/config.toml

ADDED

@@ -0,0 +1,2 @@
+[browser]
+gatherUsageStats = false

Dockerfile

ADDED

@@ -0,0 +1,24 @@
+FROM python:3.10-slim
+
+# Install OpenSCAD CLI and required system libraries for headless OpenSCAD
+RUN apt-get update && \
+    apt-get install -y --no-install-recommends \
+    openscad libgl1 libglu1-mesa xvfb \
+    && rm -rf /var/lib/apt/lists/*
+
+WORKDIR /app
+# Copy and install Python dependencies (will use prebuilt wheels, no compilers needed)
+COPY requirements.txt .
+RUN pip install --no-cache-dir -r requirements.txt
+
+# Copy application code
+COPY . .
+
+# Ensure OpenSCAD runs headlessly
+ENV OPENSCAD_NO_GUI=1
+
+# Expose HF Spaces default port
+EXPOSE 7860
+
+# Launch the Streamlit app
+CMD ["streamlit","run","streamlit_app.py","--server.port","7860","--server.address","0.0.0.0"]
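Review note: the app shells out to the `openscad` binary that this Dockerfile installs, so a missing binary would otherwise only surface when the first model is rendered. A minimal sketch of a startup check is shown below; the helper name `missing_tools` is hypothetical and not part of the commit.

```python
import shutil


def missing_tools(names: list[str]) -> list[str]:
    """Return the subset of `names` that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    # Assumption: the app only needs the `openscad` executable at runtime.
    absent = missing_tools(["openscad"])
    if absent:
        print("Missing required tools:", ", ".join(absent))
```

Such a check could run once at app start and show a clear error in the UI instead of a failed subprocess call.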

README.md

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: 3D Designer Agent

|

| 3 |

+

emoji: 🤖

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: docker

|

| 7 |

+

app_port: 7860

|

| 8 |

+

---

|

| 9 |

+

# 3D Designer Agent

|

| 10 |

+

|

| 11 |

+

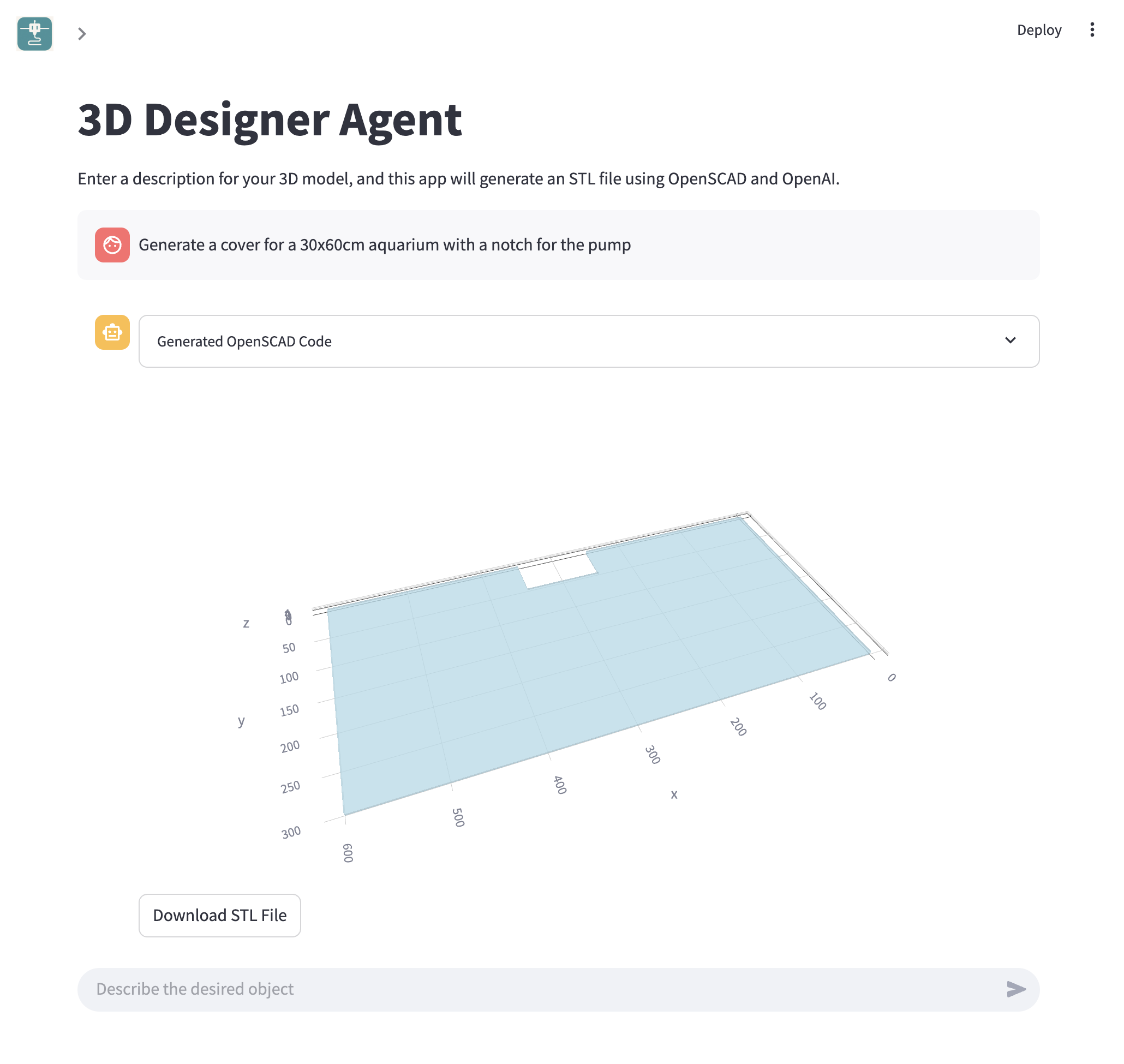

A Dockerized Streamlit app that converts text prompts into printable STL models via OpenAI + OpenSCAD.

|

| 12 |

+

|

| 13 |

+

## Local Usage with Docker

|

| 14 |

+

|

| 15 |

+

1. Build the Docker image:

|

| 16 |

+

```bash

|

| 17 |

+

docker build -t 3d-designer-agent .

|

| 18 |

+

```

|

| 19 |

+

2. Run the container (passing your OpenAI key):

|

| 20 |

+

```bash

|

| 21 |

+

docker run --rm -p 7860:7860 -e OPENAI_API_KEY=$OPENAI_API_KEY 3d-designer-agent

|

| 22 |

+

```

|

| 23 |

+

3. Visit http://localhost:7860 in your browser.

|

| 24 |

+

|

| 25 |

+

## Deploying to Hugging Face

|

| 26 |

+

|

| 27 |

+

Note: This project requires a Hugging Face Space of type "Docker".

|

| 28 |

+

|

| 29 |

+

First, ensure you have a remote named `hf` pointing to your Hugging Face Space repository. You can add it with:

|

| 30 |

+

`git remote add hf https://huggingface.co/spaces/YOUR-USERNAME/YOUR-SPACE-NAME`

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

Then execute the commands below to create a temporary branch, commit all files, force-pushes to the `hf` remote's `main` branch, and then cleans up:

|

| 34 |

+

|

| 35 |

+

```bash

|

| 36 |

+

git checkout --orphan hf-main && \

|

| 37 |

+

git add . && \

|

| 38 |

+

git commit -m "New release" && \

|

| 39 |

+

git push --force hf hf-main:main && \

|

| 40 |

+

git checkout main && \

|

| 41 |

+

git branch -D hf-main

|

| 42 |

+

```

|

| 43 |

+

|

| 44 |

+

## Demo

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

For more example models and usage patterns, see our Medium post: [Vibe Modeling: Turning Prompts into Parametric 3D Prints](https://medium.com/@nchourrout/vibe-modeling-turning-prompts-into-parametric-3d-prints-a63405d36824).

|

| 49 |

+

|

| 50 |

+

Made by [Flowful.ai](https://flowful.ai)

|

demo.gif

ADDED (stored with Git LFS)

demo.png

ADDED (stored with Git LFS)

logo.png

ADDED (stored with Git LFS)

requirements.txt

ADDED

@@ -0,0 +1,5 @@
+streamlit
+openai
+python-dotenv
+trimesh
+plotly

streamlit_app.py

ADDED

@@ -0,0 +1,232 @@
+import streamlit as st
+from openai import OpenAI
+import subprocess
+import tempfile
+import os
+from dotenv import load_dotenv
+import trimesh
+import plotly.graph_objects as go
+import re
+
+# Load environment variables from .env
+load_dotenv(override=True)
+title = "3D Designer Agent"
+
+# Set the Streamlit layout to wide
+st.set_page_config(page_title=title, layout="wide")
+
+SYSTEM_PROMPT = (
+    "You are an expert in OpenSCAD. Given a user prompt describing a 3D printable model, "
+    "generate a parametric OpenSCAD script that fulfills the description. "
+    "Only return the raw .scad code without any explanations or markdown formatting."
+)
+
+
+# Cache SCAD generation to avoid repeated API calls for same prompt+history
+@st.cache_data
+def generate_scad(prompt: str, history: tuple[tuple[str, str]], api_key: str) -> str:
+    """
+    Uses OpenAI API to generate OpenSCAD code from a user prompt.
+    """
+    client = OpenAI(api_key=api_key)
+    # Build conversation messages including history
+    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
+    for role, content in history:
+        messages.append({"role": role, "content": content})
+    messages.append({"role": "user", "content": prompt})
+    response = client.chat.completions.create(
+        model="o4-mini",
+        messages=messages,
+        max_completion_tokens=4500,
+    )
+    code = response.choices[0].message.content.strip()
+    return code
+
+
+@st.cache_data
+def generate_3d_files(scad_path: str, formats: list[str] = ["stl", "3mf"]) -> dict[str, str]:
+    """
+    Generate 3D files from a SCAD file using OpenSCAD CLI for specified formats.
+    Returns a mapping from format extension to output file path.
+    Throws CalledProcessError on failure.
+    """
+    paths: dict[str, str] = {}
+    for fmt in formats:
+        output_path = scad_path.replace(".scad", f".{fmt}")
+        subprocess.run(["openscad", "-o", output_path, scad_path], check=True, capture_output=True, text=True)
+        paths[fmt] = output_path
+    return paths
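Review note on `generate_3d_files`: `st.cache_data` keys its cache on the argument values, so a later call with the same `scad_path` and `formats` may return the cached paths without re-running OpenSCAD, even if the `.scad` file was overwritten in between (as the parameter-adjustment flow in `main` does). One possible way around this, sketched here under assumptions, is to name files after a hash of the SCAD source so that changed code always yields a new path. The helper `content_addressed_paths` and its `out_dir` argument are hypothetical and not part of the commit.

```python
import hashlib
from pathlib import Path


def content_addressed_paths(
    scad_code: str,
    out_dir: str,
    formats: tuple[str, ...] = ("stl", "3mf"),  # immutable default
) -> dict[str, Path]:
    """Map 'scad' and each output format to a path named after a hash of the code."""
    digest = hashlib.sha256(scad_code.encode("utf-8")).hexdigest()[:16]
    scad_path = Path(out_dir) / f"model-{digest}.scad"
    paths: dict[str, Path] = {"scad": scad_path}
    for fmt in formats:
        # with_suffix swaps only the final extension, unlike str.replace,
        # which would rewrite every ".scad" occurrence in the path.
        paths[fmt] = scad_path.with_suffix(f".{fmt}")
    return paths
```

With paths derived this way, identical code maps to identical files (so caching stays valid), while edited parameters produce a fresh path and therefore a fresh render.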

+
+
+def parse_scad_parameters(code: str) -> dict[str, float]:
+    params: dict[str, float] = {}
+    for line in code.splitlines():
+        m = re.match(r"(\w+)\s*=\s*([0-9\.]+)\s*;", line)
+        if m:
+            params[m.group(1)] = float(m.group(2))
+    return params
+
+
+def apply_scad_parameters(code: str, params: dict[str, float]) -> str:
+    def repl(match):
+        name = match.group(1)
+        if name in params:
+            return f"{name} = {params[name]};"
+        return match.group(0)
+    return re.sub(r"(\w+)\s*=\s*[0-9\.]+\s*;", repl, code)
+
+
+# Dialog for downloading model in chosen format
+@st.dialog("Download Model")
+def download_model_dialog(stl_path: str, threemf_path: str):
+    choice = st.radio("Choose file format", ["STL", "3MF"] )
+    if choice == "STL":
+        with open(stl_path, "rb") as f:
+            st.download_button(
+                label="Download STL File", data=f, file_name="model.stl",
+                mime="application/sla", on_click="ignore"
+            )
+    else:
+        with open(threemf_path, "rb") as f:
+            st.download_button(
+                label="Download 3MF File", data=f, file_name="model.3mf",
+                mime="application/octet-stream", on_click="ignore"
+            )
+    if st.button("Close"):
+        st.rerun()
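Review note on `parse_scad_parameters` and `apply_scad_parameters`: the pattern `[0-9\.]+` does not accept negative values and would accept a malformed literal such as `1.2.3`, which `float()` then rejects with a `ValueError`. The two functions also differ in scope: `re.match` only sees assignments at the start of a line, while `re.sub` rewrites matches anywhere. A minimal sketch of a stricter, shared pattern follows; the names `parse_numeric_params` and `apply_numeric_params` are hypothetical, and restricting both functions to top-level assignments is an assumption about the intended behavior.

```python
import re

# Top-level assignment of a plain decimal literal, e.g. `width = 10;` or `z = -2.5;`
_PARAM = re.compile(r"^(\w+)\s*=\s*(-?\d+(?:\.\d+)?)\s*;", re.MULTILINE)


def parse_numeric_params(code: str) -> dict[str, float]:
    """Collect name -> value for every top-level numeric assignment."""
    return {m.group(1): float(m.group(2)) for m in _PARAM.finditer(code)}


def apply_numeric_params(code: str, params: dict[str, float]) -> str:
    """Rewrite only the assignments whose names appear in `params`."""
    def repl(m: re.Match) -> str:
        name = m.group(1)
        return f"{name} = {params[name]};" if name in params else m.group(0)

    return _PARAM.sub(repl, code)
```

Because both helpers share one compiled pattern, every parameter shown in the UI is one that the rewrite step can actually update.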

+
+
+def main():
+    # Sidebar for custom OpenAI API key
+    api_key = st.sidebar.text_input("OpenAI API Key", type="password", value=os.getenv("OPENAI_API_KEY", ""))
+
+    # Display large app logo and updated title
+    st.logo("https://media.githubusercontent.com/media/nchourrout/Chat-To-STL/main/logo.png", size="large")
+    st.title(title)
+    st.write("Enter a description for your 3D model, and this app will generate an STL file using OpenSCAD and OpenAI.")
+
+    if not api_key:
+        st.warning("👈 Please enter an OpenAI API key in the sidebar to generate a model.")
+        st.image("demo.gif")
+
+    # Initialize chat history
+    if "history" not in st.session_state:
+        st.session_state.history = []
+
+    # Replay full conversation history
+    for idx, msg in enumerate(st.session_state.history):
+        with st.chat_message(msg["role"]):
+            if msg["role"] == "user":
+                st.write(msg["content"])
+            else:
+                with st.expander("Generated OpenSCAD Code", expanded=False):
+                    st.code(msg["scad_code"], language="c")
+                mesh = trimesh.load(msg["stl_path"])
+                fig = go.Figure(data=[go.Mesh3d(
+                    x=mesh.vertices[:,0], y=mesh.vertices[:,1], z=mesh.vertices[:,2],
+                    i=mesh.faces[:,0], j=mesh.faces[:,1], k=mesh.faces[:,2],
+                    color='lightblue', opacity=0.50
+                )])
+                fig.update_layout(scene=dict(aspectmode='data'), margin=dict(l=0, r=0, b=0, t=0))
+                st.plotly_chart(fig, use_container_width=True, height=600)
+                # Single button to open download dialog
+                if st.button("Download Model", key=f"download-model-{idx}"):
+                    download_model_dialog(msg["stl_path"], msg["3mf_path"])
+        # Add parameter adjustment UI tied to this history message
+        if msg["role"] == "assistant":
+            params = parse_scad_parameters(msg["scad_code"])
+            with st.expander("Adjust parameters", expanded=False):
+                if not params:
+                    st.write("No numeric parameters detected in the SCAD code.")
+                else:
+                    # Use a form so inputs don't trigger reruns until submitted
+                    with st.form(key=f"param-form-{idx}"):
+                        updated: dict[str, float] = {}
+                        for name, default in params.items():
+                            updated[name] = st.number_input(name, value=default, key=f"{idx}-{name}")
+                        regenerate = st.form_submit_button("Regenerate Preview")
+                    if regenerate:
+                        # Apply new parameter values
+                        new_code = apply_scad_parameters(msg["scad_code"], updated)
+                        # Overwrite SCAD file
+                        with open(msg["scad_path"], "w") as f:
+                            f.write(new_code)
+                        # Regenerate only STL preview for speed
+                        try:
+                            stl_only_path = generate_3d_files(msg["scad_path"], formats=["stl"])["stl"]
+                        except subprocess.CalledProcessError as e:
+                            st.error(f"OpenSCAD failed with exit code {e.returncode}")
+                            return
+                        # Update history message in place
+                        msg["scad_code"] = new_code
+                        msg["content"] = new_code
+                        msg["stl_path"] = stl_only_path
+                        # Rerun to refresh UI
+                        st.rerun()
+
+    # Accept new user input and handle conversation state
+    if user_input := st.chat_input("Describe the desired object"):
+        if not api_key:
+            st.error("👈 Please enter an OpenAI API key in the sidebar to generate a model.")
+            return
+
+        # Add user message to history
+        st.session_state.history.append({"role": "user", "content": user_input})
+        # Generate SCAD and 3D files
+        with st.spinner("Generating and rendering your model..."):
+            history_for_api = tuple(
+                (m["role"], m["content"])
+                for m in st.session_state.history
+                if "content" in m and "role" in m
+            )
+            scad_code = generate_scad(user_input, history_for_api, api_key)
+            with tempfile.NamedTemporaryFile(suffix=".scad", delete=False) as scad_file:
+                scad_file.write(scad_code.encode("utf-8"))
+                scad_path = scad_file.name
+            try:
+                file_paths = generate_3d_files(scad_path)
+            except subprocess.CalledProcessError as e:
+                st.error(f"OpenSCAD failed with exit code {e.returncode}")
+                st.subheader("OpenSCAD stdout")
+                st.code(e.stdout or "<no stdout>")
+                st.subheader("OpenSCAD stderr")
+                st.code(e.stderr or "<no stderr>")
+                return
+        # Add assistant message to history and rerun to display via history loop
+        st.session_state.history.append({
+            "role": "assistant",
+            "content": scad_code,
+            "scad_code": scad_code,
+            "scad_path": scad_path,
+            "stl_path": file_paths["stl"],
+            "3mf_path": file_paths["3mf"]
+        })
+        # Rerun to update chat history display
+        st.rerun()
+
+    # Fixed footer always visible
+    st.markdown(
+        """
+        <style>
+        footer {
+            position: fixed;
+            bottom: 0;
+            left: 0;
+            width: 100%;
+            text-align: center;
+            padding: 10px 0;
+            background-color: var(--secondary-background-color);
+            color: var(--text-color);
+            z-index: 1000;
+        }
+        footer a {
+            color: var(--primary-color);
+        }
+        </style>
+        <footer>Made by <a href="https://flowful.ai" target="_blank">flowful.ai</a> | <a href="https://medium.com/@nchourrout/vibe-modeling-turning-prompts-into-parametric-3d-prints-a63405d36824" target="_blank">Examples</a></footer>
+        """, unsafe_allow_html=True
+    )
+
+
+if __name__ == "__main__":
+    main()
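Review note on `main`: the user message is appended to `st.session_state.history` before `history_for_api` is built, and `generate_scad` then appends `prompt` once more, so the latest prompt appears to be sent to the API twice. A minimal sketch of a message builder that avoids the duplicate is shown below; `build_messages` is a hypothetical helper, not part of the commit, and it assumes history entries are `(role, content)` tuples as in `history_for_api`.

```python
def build_messages(
    system_prompt: str,
    history: tuple[tuple[str, str], ...],
    prompt: str,
) -> list[dict[str, str]]:
    """Assemble chat messages, adding `prompt` only if history does not already end with it."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend({"role": role, "content": content} for role, content in history)
    # Skip the extra append when the caller already recorded the prompt in history.
    if not history or history[-1] != ("user", prompt):
        messages.append({"role": "user", "content": prompt})
    return messages
```

An alternative with the same effect would be to build `history_for_api` before appending the new user message to the session history.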