Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitignore +142 -0

- LICENSE +201 -0

- README.md +149 -12

- README_DEV.md +61 -0

- fast_sam/__init__.py +9 -0

- fast_sam/fast_sam_wrapper.py +90 -0

- ia_check_versions.py +74 -0

- ia_config.py +115 -0

- ia_devices.py +10 -0

- ia_file_manager.py +71 -0

- ia_get_dataset_colormap.py +416 -0

- ia_logging.py +14 -0

- ia_sam_manager.py +182 -0

- ia_threading.py +55 -0

- ia_ui_gradio.py +30 -0

- ia_ui_items.py +110 -0

- iasam_app.py +809 -0

- images/inpaint_anything_explanation_image_1.png +0 -0

- images/inpaint_anything_ui_image_1.png +0 -0

- images/sample_input_image.png +0 -0

- images/sample_mask_image.png +0 -0

- images/sample_seg_color_image.png +0 -0

- inpalib/__init__.py +18 -0

- inpalib/masklib.py +106 -0

- inpalib/samlib.py +256 -0

- javascript/inpaint-anything.js +458 -0

- lama_cleaner/__init__.py +19 -0

- lama_cleaner/benchmark.py +109 -0

- lama_cleaner/const.py +173 -0

- lama_cleaner/file_manager/__init__.py +1 -0

- lama_cleaner/file_manager/file_manager.py +265 -0

- lama_cleaner/file_manager/storage_backends.py +46 -0

- lama_cleaner/file_manager/utils.py +67 -0

- lama_cleaner/helper.py +292 -0

- lama_cleaner/installer.py +12 -0

- lama_cleaner/model/__init__.py +0 -0

- lama_cleaner/model/base.py +298 -0

- lama_cleaner/model/controlnet.py +289 -0

- lama_cleaner/model/ddim_sampler.py +193 -0

- lama_cleaner/model/fcf.py +1733 -0

- lama_cleaner/model/instruct_pix2pix.py +83 -0

- lama_cleaner/model/lama.py +51 -0

- lama_cleaner/model/ldm.py +333 -0

- lama_cleaner/model/manga.py +91 -0

- lama_cleaner/model/mat.py +1935 -0

- lama_cleaner/model/opencv2.py +28 -0

- lama_cleaner/model/paint_by_example.py +79 -0

- lama_cleaner/model/pipeline/__init__.py +3 -0

- lama_cleaner/model/pipeline/pipeline_stable_diffusion_controlnet_inpaint.py +585 -0

- lama_cleaner/model/plms_sampler.py +225 -0

.gitignore

ADDED

|

@@ -0,0 +1,142 @@

|

| 1 |

+

*.pth

|

| 2 |

+

*.pt

|

| 3 |

+

*.pyc

|

| 4 |

+

src/

|

| 5 |

+

outputs/

|

| 6 |

+

models/

|

| 7 |

+

models

|

| 8 |

+

.DS_Store

|

| 9 |

+

ia_config.ini

|

| 10 |

+

.eslintrc

|

| 11 |

+

.eslintrc.json

|

| 12 |

+

pyproject.toml

|

| 13 |

+

|

| 14 |

+

# Byte-compiled / optimized / DLL files

|

| 15 |

+

__pycache__/

|

| 16 |

+

*.py[cod]

|

| 17 |

+

*$py.class

|

| 18 |

+

|

| 19 |

+

# C extensions

|

| 20 |

+

*.so

|

| 21 |

+

|

| 22 |

+

# Distribution / packaging

|

| 23 |

+

.Python

|

| 24 |

+

build/

|

| 25 |

+

develop-eggs/

|

| 26 |

+

dist/

|

| 27 |

+

downloads/

|

| 28 |

+

eggs/

|

| 29 |

+

.eggs/

|

| 30 |

+

lib/

|

| 31 |

+

lib64/

|

| 32 |

+

parts/

|

| 33 |

+

sdist/

|

| 34 |

+

var/

|

| 35 |

+

wheels/

|

| 36 |

+

pip-wheel-metadata/

|

| 37 |

+

share/python-wheels/

|

| 38 |

+

*.egg-info/

|

| 39 |

+

.installed.cfg

|

| 40 |

+

*.egg

|

| 41 |

+

MANIFEST

|

| 42 |

+

|

| 43 |

+

# PyInstaller

|

| 44 |

+

# Usually these files are written by a python script from a template

|

| 45 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 46 |

+

*.manifest

|

| 47 |

+

*.spec

|

| 48 |

+

|

| 49 |

+

# Installer logs

|

| 50 |

+

pip-log.txt

|

| 51 |

+

pip-delete-this-directory.txt

|

| 52 |

+

|

| 53 |

+

# Unit test / coverage reports

|

| 54 |

+

htmlcov/

|

| 55 |

+

.tox/

|

| 56 |

+

.nox/

|

| 57 |

+

.coverage

|

| 58 |

+

.coverage.*

|

| 59 |

+

.cache

|

| 60 |

+

nosetests.xml

|

| 61 |

+

coverage.xml

|

| 62 |

+

*.cover

|

| 63 |

+

*.py,cover

|

| 64 |

+

.hypothesis/

|

| 65 |

+

.pytest_cache/

|

| 66 |

+

|

| 67 |

+

# Translations

|

| 68 |

+

*.mo

|

| 69 |

+

*.pot

|

| 70 |

+

|

| 71 |

+

# Django stuff:

|

| 72 |

+

*.log

|

| 73 |

+

local_settings.py

|

| 74 |

+

db.sqlite3

|

| 75 |

+

db.sqlite3-journal

|

| 76 |

+

|

| 77 |

+

# Flask stuff:

|

| 78 |

+

instance/

|

| 79 |

+

.webassets-cache

|

| 80 |

+

|

| 81 |

+

# Scrapy stuff:

|

| 82 |

+

.scrapy

|

| 83 |

+

|

| 84 |

+

# Sphinx documentation

|

| 85 |

+

docs/_build/

|

| 86 |

+

|

| 87 |

+

# PyBuilder

|

| 88 |

+

target/

|

| 89 |

+

|

| 90 |

+

# Jupyter Notebook

|

| 91 |

+

.ipynb_checkpoints

|

| 92 |

+

|

| 93 |

+

# IPython

|

| 94 |

+

profile_default/

|

| 95 |

+

ipython_config.py

|

| 96 |

+

|

| 97 |

+

# pyenv

|

| 98 |

+

.python-version

|

| 99 |

+

|

| 100 |

+

# pipenv

|

| 101 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 102 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 103 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 104 |

+

# install all needed dependencies.

|

| 105 |

+

#Pipfile.lock

|

| 106 |

+

|

| 107 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 108 |

+

__pypackages__/

|

| 109 |

+

|

| 110 |

+

# Celery stuff

|

| 111 |

+

celerybeat-schedule

|

| 112 |

+

celerybeat.pid

|

| 113 |

+

|

| 114 |

+

# SageMath parsed files

|

| 115 |

+

*.sage.py

|

| 116 |

+

|

| 117 |

+

# Environments

|

| 118 |

+

.env

|

| 119 |

+

.venv

|

| 120 |

+

env/

|

| 121 |

+

venv/

|

| 122 |

+

ENV/

|

| 123 |

+

env.bak/

|

| 124 |

+

venv.bak/

|

| 125 |

+

|

| 126 |

+

# Spyder project settings

|

| 127 |

+

.spyderproject

|

| 128 |

+

.spyproject

|

| 129 |

+

|

| 130 |

+

# Rope project settings

|

| 131 |

+

.ropeproject

|

| 132 |

+

|

| 133 |

+

# mkdocs documentation

|

| 134 |

+

/site

|

| 135 |

+

|

| 136 |

+

# mypy

|

| 137 |

+

.mypy_cache/

|

| 138 |

+

.dmypy.json

|

| 139 |

+

dmypy.json

|

| 140 |

+

|

| 141 |

+

# Pyre type checker

|

| 142 |

+

.pyre/

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,12 +1,149 @@

|

|

| 1 |

-

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 1 |

+

---

|

| 2 |

+

title: _

|

| 3 |

+

app_file: iasam_app.py

|

| 4 |

+

sdk: gradio

|

| 5 |

+

sdk_version: 3.50.2

|

| 6 |

+

---

|

| 7 |

+

# Inpaint Anything (Inpainting with Segment Anything)

|

| 8 |

+

|

| 9 |

+

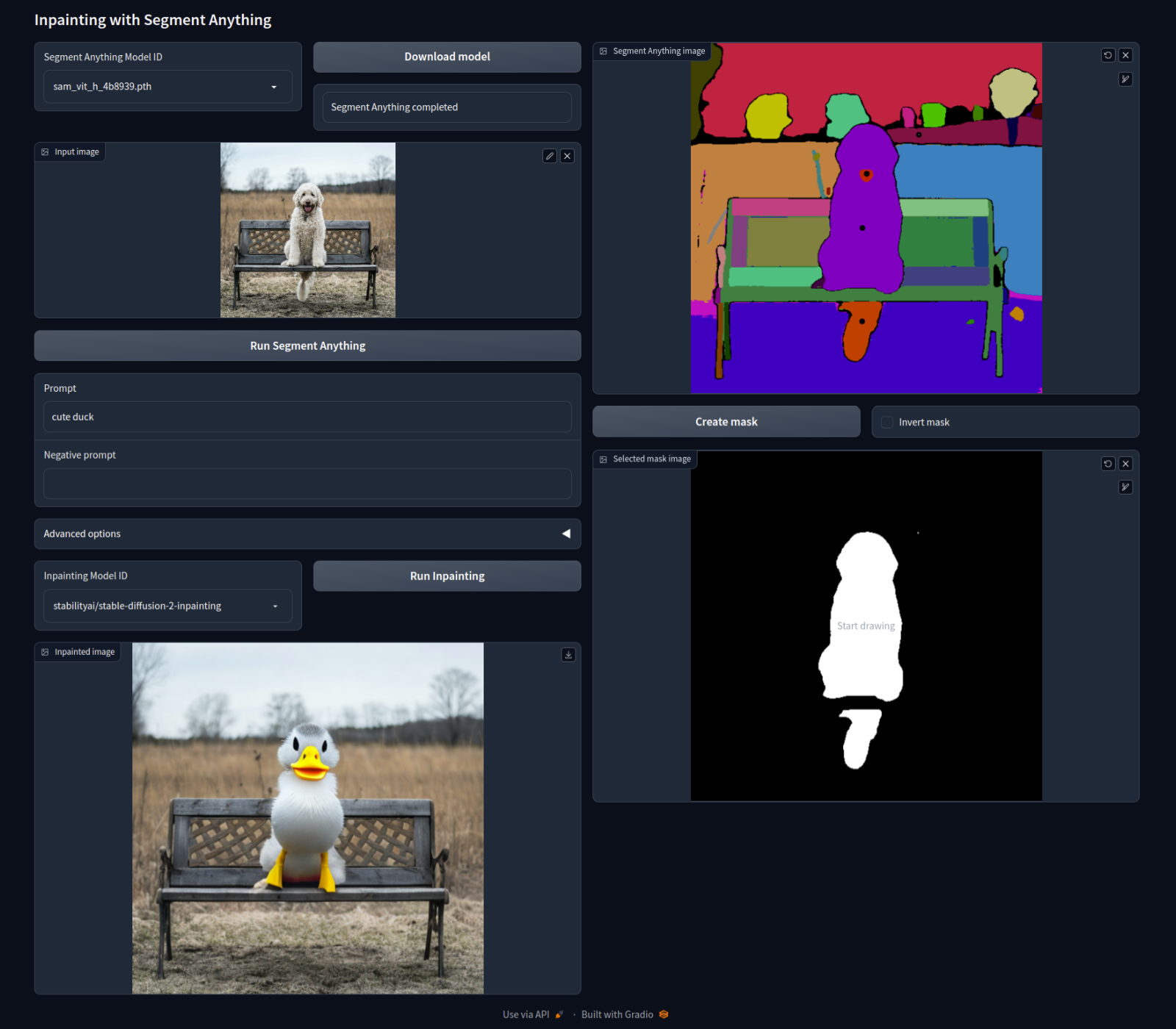

Inpaint Anything performs stable diffusion inpainting on a browser UI using any mask selected from the output of [Segment Anything](https://github.com/facebookresearch/segment-anything).

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

Using Segment Anything enables users to specify masks by simply pointing to the desired areas, instead of manually filling them in. This can increase the efficiency and accuracy of the mask creation process, leading to potentially higher-quality inpainting results while saving time and effort.

|

| 13 |

+

|

| 14 |

+

[Extension version for AUTOMATIC1111's Web UI](https://github.com/Uminosachi/sd-webui-inpaint-anything)

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

## Installation

|

| 19 |

+

|

| 20 |

+

Please follow these steps to install the software:

|

| 21 |

+

|

| 22 |

+

* Create a new conda environment:

|

| 23 |

+

|

| 24 |

+

```bash

|

| 25 |

+

conda create -n inpaint python=3.10

|

| 26 |

+

conda activate inpaint

|

| 27 |

+

```

|

| 28 |

+

|

| 29 |

+

* Clone the software repository:

|

| 30 |

+

|

| 31 |

+

```bash

|

| 32 |

+

git clone https://github.com/Uminosachi/inpaint-anything.git

|

| 33 |

+

cd inpaint-anything

|

| 34 |

+

```

|

| 35 |

+

|

| 36 |

+

* For the CUDA environment, install the following packages:

|

| 37 |

+

|

| 38 |

+

```bash

|

| 39 |

+

pip install -r requirements.txt

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

* If you are using macOS, please install the package from the following file instead:

|

| 43 |

+

|

| 44 |

+

```bash

|

| 45 |

+

pip install -r requirements_mac.txt

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

## Running the application

|

| 49 |

+

|

| 50 |

+

```bash

|

| 51 |

+

python iasam_app.py

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

* Open http://127.0.0.1:7860/ in your browser.

|

| 55 |

+

* Note: If you have a privacy protection extension enabled in your web browser, such as DuckDuckGo, you may not be able to retrieve the mask from your sketch.

|

| 56 |

+

|

| 57 |

+

### Options

|

| 58 |

+

|

| 59 |

+

* `--save-seg`: Save the segmentation image generated by SAM.

|

| 60 |

+

* `--offline`: Run inpainting offline (without network access), using locally cached models.

|

| 61 |

+

* `--sam-cpu`: Perform the Segment Anything operation on CPU.
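The three flags above are plain on/off switches. As a rough illustration of how such switches are usually parsed, here is a minimal sketch using Python's standard `argparse` module. The `build_parser` function is hypothetical and is not taken from `iasam_app.py`; only the flag names and their descriptions come from the list above.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the three documented flags (not the app's own code)."""
    parser = argparse.ArgumentParser(description="Inpaint Anything options (illustrative)")
    # Each flag is a boolean switch that defaults to False when omitted.
    parser.add_argument("--save-seg", action="store_true",
                        help="Save the segmentation image generated by SAM.")
    parser.add_argument("--offline", action="store_true",
                        help="Run inpainting offline.")
    parser.add_argument("--sam-cpu", action="store_true",
                        help="Perform the Segment Anything operation on CPU.")
    return parser


# Equivalent to launching with: python iasam_app.py --save-seg --sam-cpu
args = build_parser().parse_args(["--save-seg", "--sam-cpu"])
print(args.save_seg, args.offline, args.sam_cpu)
```

Note that `argparse` converts the dashes in flag names to underscores, so `--sam-cpu` is read back as `args.sam_cpu`.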

|

| 62 |

+

|

| 63 |

+

## Downloading the Model

|

| 64 |

+

|

| 65 |

+

* Launch this application.

|

| 66 |

+

* Click on the `Download model` button, located next to the [Segment Anything Model ID](https://github.com/facebookresearch/segment-anything#model-checkpoints). The available model IDs include [SAM 2](https://github.com/facebookresearch/segment-anything-2), [Segment Anything in High Quality](https://github.com/SysCV/sam-hq), [Fast Segment Anything](https://github.com/CASIA-IVA-Lab/FastSAM), and [Faster Segment Anything (MobileSAM)](https://github.com/ChaoningZhang/MobileSAM).

|

| 67 |

+

* SAM is available in three sizes: Base, Large, and Huge. Larger sizes consume more VRAM.

|

| 68 |

+

* Wait for the download to complete.

|

| 69 |

+

* The downloaded model file will be stored in the `models` directory of this application's repository.

|

| 70 |

+

|

| 71 |

+

## Usage

|

| 72 |

+

|

| 73 |

+

* Drag and drop your image onto the input image area.

|

| 74 |

+

* To outpaint, open the `Padding options`, configure the scale and balance, and then click on the `Run Padding` button.

|

| 75 |

+

* The `Anime Style` checkbox enhances segmentation mask detection, particularly in anime style images, at the expense of a slight reduction in mask quality.

|

| 76 |

+

* Click on the `Run Segment Anything` button.

|

| 77 |

+

* Sketch over the area you want to inpaint to select it. You can undo strokes and adjust the pen size.

|

| 78 |

+

* Hover over either the SAM image or the mask image and press the `S` key for Fullscreen mode, or the `R` key to Reset zoom.

|

| 79 |

+

* Click on the `Create mask` button. The mask will appear in the selected mask image area.

|

| 80 |

+

|

| 81 |

+

### Mask Adjustment

|

| 82 |

+

|

| 83 |

+

* `Expand mask region` button: Use this to slightly expand the area of the mask for broader coverage.

|

| 84 |

+

* `Trim mask by sketch` button: Clicking this will exclude the sketched area from the mask.

|

| 85 |

+

* `Add mask by sketch` button: Clicking this will add the sketched area to the mask.
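The three adjustments above can be read as simple operations on a binary mask. The following is a minimal sketch under that assumption, using nested lists of booleans. The function names, the square dilation window, and the `radius` parameter are illustrative choices and do not come from the application's source.

```python
from typing import List

Mask = List[List[bool]]  # True marks a masked pixel; assumed non-empty and rectangular


def expand_mask(mask: Mask, radius: int = 1) -> Mask:
    """Dilate the mask: every set pixel also sets its neighbours within `radius`."""
    height, width = len(mask), len(mask[0])
    out = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                        out[ny][nx] = True
    return out


def trim_mask(mask: Mask, sketch: Mask) -> Mask:
    """Exclude the sketched area from the mask (mask AND NOT sketch)."""
    return [[m and not s for m, s in zip(mrow, srow)] for mrow, srow in zip(mask, sketch)]


def add_mask(mask: Mask, sketch: Mask) -> Mask:
    """Add the sketched area to the mask (mask OR sketch)."""
    return [[m or s for m, s in zip(mrow, srow)] for mrow, srow in zip(mask, sketch)]
```

A real implementation would more likely operate on image arrays with a morphological dilation routine. The nested-list version only makes the set logic explicit.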

|

| 86 |

+

|

| 87 |

+

### Inpainting Tab

|

| 88 |

+

|

| 89 |

+

* Enter your desired Prompt and Negative Prompt, then choose the Inpainting Model ID.

|

| 90 |

+

* Click on the `Run Inpainting` button (**Please note that it may take some time to download the model for the first time**).

|

| 91 |

+

* In the Advanced options, you can adjust the Sampler, Sampling Steps, Guidance Scale, and Seed.

|

| 92 |

+

* If you enable the `Mask area Only` option, modifications will be confined to the designated mask area only.

|

| 93 |

+

* Adjust the iteration slider to perform inpainting multiple times with different seeds.

|

| 94 |

+

* The inpainting process is powered by [diffusers](https://github.com/huggingface/diffusers).

|

| 95 |

+

|

| 96 |

+

#### Tips

|

| 97 |

+

|

| 98 |

+

* You can directly drag and drop the inpainted image into the input image field on the Web UI. (useful with Chrome and Edge browsers)

|

| 99 |

+

|

| 100 |

+

#### Model Cache

|

| 101 |

+

* Any inpainting model saved in the Hugging Face cache whose repo_id includes `inpaint` (case-insensitive) is also added to the Inpainting Model ID dropdown list.

|

| 102 |

+

* If there's a specific model you'd like to use, you can cache it in advance using the following Python commands:

|

| 103 |

+

```bash

|

| 104 |

+

python

|

| 105 |

+

```

|

| 106 |

+

```python

|

| 107 |

+

from diffusers import StableDiffusionInpaintPipeline

|

| 108 |

+

pipe = StableDiffusionInpaintPipeline.from_pretrained("Uminosachi/dreamshaper_5-inpainting")

|

| 109 |

+

exit()

|

| 110 |

+

```

|

| 111 |

+

* Models downloaded by diffusers are typically stored in your home directory: `~/.cache/huggingface/hub` on Linux and macOS (for example, `/home/username/.cache/huggingface/hub` on Linux), or `C:\Users\username\.cache\huggingface\hub` on Windows.

|

| 112 |

+

* When executing inpainting, if the following error is output to the console, try deleting the corresponding model from the cache folder mentioned above:

|

| 113 |

+

```

|

| 114 |

+

An error occurred while trying to fetch model name...

|

| 115 |

+

```
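The cache rules in this section can be sketched in a few lines of Python. This is only an illustration of the case-insensitive `inpaint` filter and the default cache location described above. The helper names `default_hub_cache` and `filter_inpaint_repo_ids` are hypothetical, and the way the application actually enumerates the cache is not shown in this README.

```python
from pathlib import Path
from typing import Iterable, List


def default_hub_cache() -> Path:
    """Default Hugging Face hub cache location under the user's home directory."""
    return Path.home() / ".cache" / "huggingface" / "hub"


def filter_inpaint_repo_ids(repo_ids: Iterable[str]) -> List[str]:
    """Keep only repo IDs that contain 'inpaint', ignoring case."""
    return [repo_id for repo_id in repo_ids if "inpaint" in repo_id.lower()]


# Example repo IDs; only the first comes from this README, the others are made up.
candidates = [
    "Uminosachi/dreamshaper_5-inpainting",
    "example-org/some-text-model",
    "example-org/My-INPAINT-model",
]
print(default_hub_cache())
print(filter_inpaint_repo_ids(candidates))
```

In practice the list of cached repo IDs could be collected with a cache-scanning helper such as `huggingface_hub.scan_cache_dir()`. That step is left out here so the sketch runs with the standard library alone.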

|

| 116 |

+

|

| 117 |

+

### Cleaner Tab

|

| 118 |

+

|

| 119 |

+

* Choose the Cleaner Model ID.

|

| 120 |

+

* Click on the `Run Cleaner` button (**Please note that it may take some time to download the model for the first time**).

|

| 121 |

+

* The cleaning process is performed using [Lama Cleaner](https://github.com/Sanster/lama-cleaner).

|

| 122 |

+

|

| 123 |

+

### Mask only Tab

|

| 124 |

+

|

| 125 |

+

* This tab lets you save the mask without any other processing, so that you can use it in other graphics applications.

|

| 126 |

+

* `Get mask as alpha of image` button: Save the mask as an RGBA image, with the mask placed in the alpha channel of the input image.

|

| 127 |

+

* `Get mask` button: Save the mask as an RGB image.
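To make the difference between the two buttons concrete, here is a minimal pure-Python sketch. It assumes the image is a grid of `(R, G, B)` tuples and the mask is a grid of 0 to 255 intensity values of the same size. The function names are hypothetical, and the application itself presumably works on image arrays rather than nested lists.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def mask_as_alpha(pixels: List[List[RGB]], mask: List[List[int]]) -> List[List[RGBA]]:
    """Attach the mask to the input image as its alpha channel (RGBA output)."""
    if len(pixels) != len(mask) or any(len(p) != len(m) for p, m in zip(pixels, mask)):
        raise ValueError("image and mask must have the same dimensions")
    return [[(r, g, b, a) for (r, g, b), a in zip(prow, mrow)]
            for prow, mrow in zip(pixels, mask)]


def mask_as_rgb(mask: List[List[int]]) -> List[List[RGB]]:
    """Render the mask alone as a grayscale RGB image."""
    return [[(a, a, a) for a in row] for row in mask]


image = [[(10, 20, 30), (40, 50, 60)]]
mask = [[255, 0]]
print(mask_as_alpha(image, mask))
print(mask_as_rgb(mask))
```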

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

## Auto-saving images

|

| 132 |

+

|

| 133 |

+

* The inpainted image will be automatically saved in the folder that matches the current date within the `outputs` directory.
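As an illustration of the date-based folder layout, the sketch below builds the target directory path. The `YYYY-MM-DD` folder name format and the `dated_output_dir` helper are assumptions made for this example. The README only states that the folder matches the current date.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def dated_output_dir(base: Path = Path("outputs"), day: Optional[date] = None) -> Path:
    """Return the per-day folder inside `base`, e.g. outputs/2024-01-02 (assumed format)."""
    day = day or date.today()
    return base / day.isoformat()


# Path for a fixed date, then for today.
print(dated_output_dir(day=date(2024, 1, 2)))
print(dated_output_dir())
```

The function only computes the path. Calling `mkdir(parents=True, exist_ok=True)` on the result would create the folder before saving.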

|

| 134 |

+

|

| 135 |

+

## Development

With the [Inpaint Anything library](README_DEV.md), you can perform segmentation and create masks using sketches from other applications.

## License

The source code is licensed under the [Apache 2.0 license](LICENSE).

## References

* Ravi, N., Gabeur, V., Hu, Y.-T., Hu, R., Ryali, C., Ma, T., Khedr, H., Rädel, R., Rolland, C., Gustafson, L., Mintun, E., Pan, J., Alwala, K. V., Carion, N., Wu, C.-Y., Girshick, R., Dollár, P., & Feichtenhofer, C. (2024). [SAM 2: Segment Anything in Images and Videos](https://ai.meta.com/research/publications/sam-2-segment-anything-in-images-and-videos/). arXiv preprint.
* Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., Xiao, T., Whitehead, S., Berg, A. C., Lo, W-Y., Dollár, P., & Girshick, R. (2023). [Segment Anything](https://arxiv.org/abs/2304.02643). arXiv:2304.02643.
* Ke, L., Ye, M., Danelljan, M., Liu, Y., Tai, Y-W., Tang, C-K., & Yu, F. (2023). [Segment Anything in High Quality](https://arxiv.org/abs/2306.01567). arXiv:2306.01567.
* Zhao, X., Ding, W., An, Y., Du, Y., Yu, T., Li, M., Tang, M., & Wang, J. (2023). [Fast Segment Anything](https://arxiv.org/abs/2306.12156). arXiv:2306.12156.
* Zhang, C., Han, D., Qiao, Y., Kim, J. U., Bae, S-H., Lee, S., & Hong, C. S. (2023). [Faster Segment Anything: Towards Lightweight SAM for Mobile Applications](https://arxiv.org/abs/2306.14289). arXiv:2306.14289.

README_DEV.md

ADDED


@@ -0,0 +1,61 @@


# Usage of Inpaint Anything Library

## Introduction

The `inpalib` module from the `inpaint-anything` package lets you segment images and create masks using sketches from other applications.

## Code Breakdown

### Imports and Module Initialization

```python
import importlib

import numpy as np
from PIL import Image, ImageDraw

# The package name contains a hyphen, so a plain `import` statement cannot be used.
inpalib = importlib.import_module("inpaint-anything.inpalib")
```

### Fetch Model IDs

```python
available_sam_ids = inpalib.get_available_sam_ids()

use_sam_id = "sam_hq_vit_l.pth"
# assert use_sam_id in available_sam_ids, f"Invalid SAM ID: {use_sam_id}"
```

Note: Only models downloaded via Inpaint Anything are available.

### Generate Segments Image

|

| 32 |

+

|

| 33 |

+

```python

|

| 34 |

+

input_image = np.array(Image.open("/path/to/image.png"))

|

| 35 |

+

|

| 36 |

+

sam_masks = inpalib.generate_sam_masks(input_image, use_sam_id, anime_style_chk=False)

|

| 37 |

+

sam_masks = inpalib.sort_masks_by_area(sam_masks)

|

| 38 |

+

|

| 39 |

+

seg_color_image = inpalib.create_seg_color_image(input_image, sam_masks)

|

| 40 |

+

|

| 41 |

+

Image.fromarray(seg_color_image).save("/path/to/seg_color_image.png")

|

| 42 |

+

```

|

| 43 |

+

|

| 44 |

+

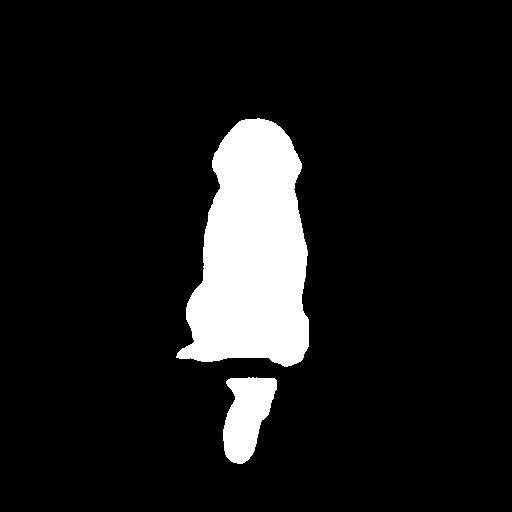

<img src="images/sample_input_image.png" alt="drawing" width="256"/> <img src="images/sample_seg_color_image.png" alt="drawing" width="256"/>

|

| 45 |

+

|

| 46 |

+
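For readers who want to see what sorting masks by area involves, here is a rough sketch. It is not `inpalib`'s implementation: `sort_by_area` is a hypothetical helper, each mask is assumed to be a dict with a boolean `segmentation` array, and the largest-first ordering is an assumption.

```python
import numpy as np


def sort_by_area(masks):
    # Area = number of True pixels in the boolean "segmentation" array.
    return sorted(masks, key=lambda m: int(np.count_nonzero(m["segmentation"])), reverse=True)


small = np.zeros((4, 4), dtype=bool)
small[0, 0] = True
large = np.zeros((4, 4), dtype=bool)
large[:2, :] = True

ordered = sort_by_area([dict(segmentation=small), dict(segmentation=large)])
print([int(m["segmentation"].sum()) for m in ordered])  # [8, 1]
```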

### Create Mask from Sketch

```python
# Start from an all-black sketch and mark the image center with a single white pixel.
sketch_image = Image.fromarray(np.zeros_like(input_image))

draw = ImageDraw.Draw(sketch_image)
draw.point((input_image.shape[1] // 2, input_image.shape[0] // 2), fill=(255, 255, 255))

mask_image = inpalib.create_mask_image(np.array(sketch_image), sam_masks, ignore_black_chk=True)

Image.fromarray(mask_image).save("/path/to/mask_image.png")
```

<img src="images/sample_mask_image.png" alt="drawing" width="256"/>

Note: Adjust the file paths before running the code.
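One plausible way to turn a sketch into a mask is to keep every segment that the sketch touches. The sketch below illustrates that idea only; it makes no claim about how `inpalib.create_mask_image` works internally or what `ignore_black_chk` does. `union_of_touched_masks` is a hypothetical helper, and each mask is assumed to be a dict with a boolean `segmentation` array.

```python
import numpy as np


def union_of_touched_masks(sketch: np.ndarray, masks) -> np.ndarray:
    # Pixels where the sketch is non-black in any channel.
    drawn = sketch.any(axis=2)
    out = np.zeros(drawn.shape, dtype=bool)
    for m in masks:
        seg = m["segmentation"]
        if (seg & drawn).any():  # the sketch touches this segment
            out |= seg
    # Return a black/white uint8 image with three channels.
    return np.repeat((out * 255).astype(np.uint8)[:, :, np.newaxis], 3, axis=2)


left = np.zeros((4, 4), dtype=bool)
left[:, :2] = True
right = ~left

sketch = np.zeros((4, 4, 3), dtype=np.uint8)
sketch[2, 3] = (255, 255, 255)  # one white pixel inside the right segment

mask_image = union_of_touched_masks(sketch, [dict(segmentation=left), dict(segmentation=right)])
print(mask_image[:, :, 0])
```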

fast_sam/__init__.py

ADDED


@@ -0,0 +1,9 @@


from .fast_sam_wrapper import FastSAM
from .fast_sam_wrapper import FastSamAutomaticMaskGenerator

fast_sam_model_registry = {
    "FastSAM-x": FastSAM,
    "FastSAM-s": FastSAM,
}

__all__ = ["FastSAM", "FastSamAutomaticMaskGenerator", "fast_sam_model_registry"]

fast_sam/fast_sam_wrapper.py

ADDED


@@ -0,0 +1,90 @@


import inspect
import math
from typing import Any, Dict, List, Optional

import cv2
import numpy as np
import torch
import ultralytics

# Use the dedicated FastSAM class when this ultralytics version provides one;
# otherwise fall back to the generic YOLO class.
if hasattr(ultralytics, "FastSAM"):
    from ultralytics import FastSAM as YOLO
else:
    from ultralytics import YOLO


class FastSAM:
    def __init__(
        self,
        checkpoint: str,
    ) -> None:
        self.model_path = checkpoint
        self.model = YOLO(self.model_path)

        if not hasattr(torch.nn.Upsample, "recompute_scale_factor"):
            torch.nn.Upsample.recompute_scale_factor = None

    def to(self, device) -> None:
        self.model.to(device)

    @property
    def device(self) -> Any:
        return self.model.device

    def __call__(self, source=None, stream=False, **kwargs) -> Any:
        return self.model(source=source, stream=stream, **kwargs)


class FastSamAutomaticMaskGenerator:
    def __init__(
        self,
        model: FastSAM,
        points_per_batch: Optional[int] = None,
        pred_iou_thresh: Optional[float] = None,
        stability_score_thresh: Optional[float] = None,
    ) -> None:
        self.model = model
        self.points_per_batch = points_per_batch
        self.pred_iou_thresh = pred_iou_thresh
        self.stability_score_thresh = stability_score_thresh
        # Guard against the default None, which cannot be compared with a float.
        high_stability = stability_score_thresh is not None and stability_score_thresh >= 0.95
        self.conf = 0.25 if high_stability else 0.15

    def generate(self, image: np.ndarray) -> List[Dict[str, Any]]:
        height, width = image.shape[:2]
        # Round both dimensions up to the next multiple of 32 before inference.
        new_height = math.ceil(height / 32) * 32
        new_width = math.ceil(width / 32) * 32
        resize_image = cv2.resize(image, (new_width, new_height), interpolation=cv2.INTER_CUBIC)

        # Temporarily remove non-class "Norm" entries from torch.nn while the model runs.
        backup_nn_dict = {}
        for key, _ in torch.nn.__dict__.copy().items():
            if not inspect.isclass(torch.nn.__dict__.get(key)) and "Norm" in key:
                backup_nn_dict[key] = torch.nn.__dict__.pop(key)

        try:
            results = self.model(
                source=resize_image,
                stream=False,
                imgsz=max(new_height, new_width),
                device=self.model.device,
                retina_masks=True,
                iou=0.7,
                conf=self.conf,
                max_det=256)
        finally:
            # Restore the removed entries even if inference raises.
            for key, value in backup_nn_dict.items():
                setattr(torch.nn, key, value)
                # assert backup_nn_dict[key] == torch.nn.__dict__[key]

        annotations = results[0].masks.data

        if isinstance(annotations[0], torch.Tensor):
            annotations = np.array(annotations.cpu())

        annotations_list = []
        for mask in annotations:
            # Close small holes, then open to remove small specks, then resize back to the input size.
            mask = cv2.morphologyEx(mask.astype(np.uint8), cv2.MORPH_CLOSE, np.ones((3, 3), np.uint8))
            mask = cv2.morphologyEx(mask.astype(np.uint8), cv2.MORPH_OPEN, np.ones((7, 7), np.uint8))
            mask = cv2.resize(mask, (width, height), interpolation=cv2.INTER_AREA)

            annotations_list.append(dict(segmentation=mask.astype(bool)))

        return annotations_list
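The size handling in `generate` can be tried in isolation. The sketch below reproduces only the rounding step with the standard library; `round_up_to_multiple` is a hypothetical helper name and is not part of the wrapper.

```python
import math


def round_up_to_multiple(value: int, multiple: int = 32) -> int:
    # Same arithmetic as in generate(): ceil(value / multiple) * multiple.
    return math.ceil(value / multiple) * multiple


for size in (480, 500, 512):
    print(size, "->", round_up_to_multiple(size))
```

Sizes that are already multiples of 32 are left unchanged, so only images with other dimensions are actually resized before inference.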

ia_check_versions.py

ADDED


@@ -0,0 +1,74 @@


from functools import cached_property
from importlib.metadata import version
from importlib.util import find_spec

import torch
from packaging.version import parse


def get_module_version(module_name):
    """Return the installed version string of a package, or None if it cannot be determined."""
    try:
        module_version = version(module_name)
    except Exception:
        module_version = None
    return module_version


def compare_version(version1, version2):
    """Return 1, -1, or 0 if version1 is newer than, older than, or equal to version2 (None for non-string input)."""
    if not isinstance(version1, str) or not isinstance(version2, str):
        return None

    if parse(version1) > parse(version2):
        return 1
    elif parse(version1) < parse(version2):
        return -1
    else:
        return 0


def compare_module_version(module_name, version_string):
    """Compare an installed package against version_string; -2 means the comparison was not possible."""
    module_version = get_module_version(module_name)

    result = compare_version(module_version, version_string)
    return result if result is not None else -2


class IACheckVersions:
    @cached_property
    def diffusers_enable_cpu_offload(self):
        if (find_spec("diffusers") is not None and compare_module_version("diffusers", "0.15.0") >= 0 and
                find_spec("accelerate") is not None and compare_module_version("accelerate", "0.17.0") >= 0 and
                torch.cuda.is_available()):
            return True
        else:
            return False

    @cached_property
    def torch_mps_is_available(self):
        if compare_module_version("torch", "2.0.1") < 0:
            if not getattr(torch, "has_mps", False):
                return False
            try:
                torch.zeros(1).to(torch.device("mps"))
                return True
            except Exception:
                return False
        else:
            return torch.backends.mps.is_available() and torch.backends.mps.is_built()

    @cached_property
    def torch_on_amd_rocm(self):
        if find_spec("torch") is not None and "rocm" in version("torch"):
            return True
        else:
            return False

    @cached_property
    def gradio_version_is_old(self):
        if find_spec("gradio") is not None and compare_module_version("gradio", "3.34.0") <= 0:
            return True
        else:
            return False


ia_check_versions = IACheckVersions()
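`IACheckVersions` relies on `functools.cached_property`, so each check is evaluated once per instance and the stored result is reused afterwards. A small standard-library sketch of that behaviour, using a hypothetical `Probe` class that is not part of this repository:

```python
from functools import cached_property


class Probe:
    def __init__(self) -> None:
        self.calls = 0

    @cached_property
    def expensive_check(self) -> bool:
        # Runs only on first access; the result is then stored on the instance.
        self.calls += 1
        return True


probe = Probe()
first = probe.expensive_check
second = probe.expensive_check
print(first, second, probe.calls)  # True True 1
```

This matters for checks such as the MPS probe above, which allocates a tensor and would be wasteful to repeat on every access.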

ia_config.py

ADDED


@@ -0,0 +1,115 @@


import configparser
# import json
import os
from types import SimpleNamespace

from ia_ui_items import get_inp_model_ids, get_sam_model_ids


class IAConfig:
    SECTIONS = SimpleNamespace(
        DEFAULT=configparser.DEFAULTSECT,
        USER="USER",
    )

    KEYS = SimpleNamespace(
        SAM_MODEL_ID="sam_model_id",
        INP_MODEL_ID="inp_model_id",
    )

    PATHS = SimpleNamespace(
        INI=os.path.join(os.path.dirname(os.path.realpath(__file__)), "ia_config.ini"),
    )

    global_args = {}

    def __init__(self):
        self.ids_dict = {}
        self.ids_dict[IAConfig.KEYS.SAM_MODEL_ID] = {
            "list": get_sam_model_ids(),
            "index": 1,
        }
        self.ids_dict[IAConfig.KEYS.INP_MODEL_ID] = {
            "list": get_inp_model_ids(),
            "index": 0,
        }


ia_config = IAConfig()


def setup_ia_config_ini():

|

| 42 |

+