j

committed on

Commit · 2e37cc0

Parent(s): 0fe6d3b

first commit

- README.md +1 -1

- assets/framework.png +0 -0

- assets/logo.png +0 -0

- assets/radar_compare_qwen_audio.png +0 -0

- demo/demo.sh +14 -0

- demo/requirements_web_demo.txt +2 -0

- demo/web_demo_audio.py +164 -0

- eval_audio/EVALUATION.md +176 -0

- eval_audio/cn_tn.py +1204 -0

- eval_audio/evaluate_asr.py +269 -0

- eval_audio/evaluate_chat.py +182 -0

- eval_audio/evaluate_emotion.py +195 -0

- eval_audio/evaluate_st.py +200 -0

- eval_audio/evaluate_tokenizer.py +61 -0

- eval_audio/evaluate_vocal_sound.py +193 -0

- eval_audio/whisper_normalizer/basic.py +76 -0

- eval_audio/whisper_normalizer/english.json +1741 -0

- eval_audio/whisper_normalizer/english.py +550 -0

- requirements.txt +2 -0

README.md

CHANGED

@@ -5,7 +5,7 @@ colorFrom: indigo
 colorTo: gray
 sdk: gradio
 sdk_version: 5.5.0
-app_file: app.py
+app_file: demo/app.py
 pinned: false
 ---
 

assets/framework.png

ADDED

|

assets/logo.png

ADDED

|

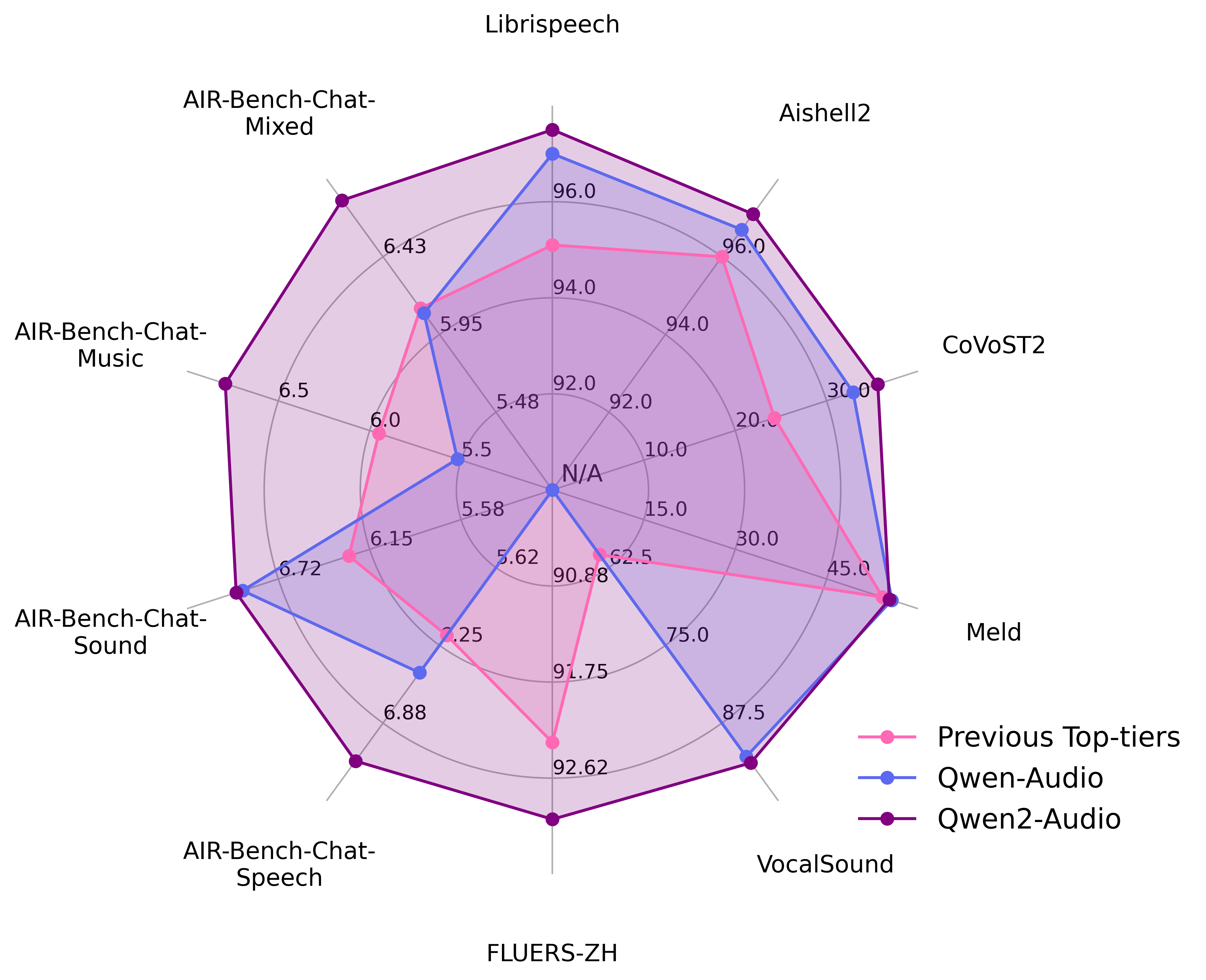

assets/radar_compare_qwen_audio.png

ADDED

|

demo/demo.sh

ADDED

@@ -0,0 +1,14 @@
+echo $CUDA_VISIBLE_DEVICES
+SERVER_PORT=9001
+MASTER_ADDR=localhost
+MASTER_PORT="3${SERVER_PORT}"
+NNODES=${WORLD_SIZE:-1}
+NODE_RANK=${RANK:-0}
+GPUS_PER_NODE=1
+python -m torch.distributed.launch --use_env \
+    --nproc_per_node $GPUS_PER_NODE --nnodes $NNODES \
+    --node_rank $NODE_RANK \
+    --master_addr=${MASTER_ADDR:-127.0.0.1} \
+    --master_port=$MASTER_PORT \
+    web_demo_audio.py \
+    --server-port ${SERVER_PORT}
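To make the variable handling in demo/demo.sh concrete, the sketch below mirrors it in Python. It relies only on standard shell semantics: `${VAR:-default}` falls back when the variable is unset or empty, and `"3${SERVER_PORT}"` is plain string concatenation. The function name `resolve_launch_settings` is hypothetical and not part of the commit.

```python
import os


def resolve_launch_settings(env=None):
    """Mirror the variable defaults in demo/demo.sh (illustrative only)."""
    env = os.environ if env is None else env
    server_port = "9001"  # SERVER_PORT=9001
    # MASTER_ADDR is assigned unconditionally in the script, so the
    # ${MASTER_ADDR:-127.0.0.1} fallback on the command line never triggers.
    master_addr = "localhost"
    return {
        "server_port": server_port,
        "master_addr": master_addr,
        # "3${SERVER_PORT}" concatenates strings: "3" + "9001" gives "39001".
        "master_port": "3" + server_port,
        # ${WORLD_SIZE:-1}: the default applies when unset or empty.
        "nnodes": env.get("WORLD_SIZE") or "1",
        # ${RANK:-0}: same rule.
        "node_rank": env.get("RANK") or "0",
        "gpus_per_node": "1",  # GPUS_PER_NODE=1
    }


if __name__ == "__main__":
    print(resolve_launch_settings({}))
```

With an empty environment this resolves to a single node, rank 0, and a rendezvous port of 39001, separate from the Gradio server port 9001.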

demo/requirements_web_demo.txt

ADDED

@@ -0,0 +1,2 @@
+gradio==4.31.3
+modelscope-studio

demo/web_demo_audio.py

ADDED

@@ -0,0 +1,164 @@
+import gradio as gr
+import modelscope_studio as mgr
+import librosa
+from transformers import AutoProcessor, Qwen2AudioForConditionalGeneration
+from argparse import ArgumentParser
+
+DEFAULT_CKPT_PATH = 'Qwen/Qwen2-Audio-7B-Instruct'
+
+
+def _get_args():
+    parser = ArgumentParser()
+    parser.add_argument("-c", "--checkpoint-path", type=str, default=DEFAULT_CKPT_PATH,
+                        help="Checkpoint name or path, default to %(default)r")
+    parser.add_argument("--cpu-only", action="store_true", help="Run demo with CPU only")
+    parser.add_argument("--inbrowser", action="store_true", default=False,
+                        help="Automatically launch the interface in a new tab on the default browser.")
+    parser.add_argument("--server-port", type=int, default=8000,
+                        help="Demo server port.")
+    parser.add_argument("--server-name", type=str, default="127.0.0.1",
+                        help="Demo server name.")
+
+    args = parser.parse_args()
+    return args
+
+
+def add_text(chatbot, task_history, input):
+    text_content = input.text
+    content = []
+    if len(input.files) > 0:
+        for i in input.files:
+            content.append({'type': 'audio', 'audio_url': i.path})
+    if text_content:
+        content.append({'type': 'text', 'text': text_content})
+    task_history.append({"role": "user", "content": content})
+
+    chatbot.append([{
+        "text": input.text,
+        "files": input.files,
+    }, None])
+    return chatbot, task_history, None
+
+
+def add_file(chatbot, task_history, audio_file):
+    """Add audio file to the chat history."""
+    task_history.append({"role": "user", "content": [{"audio": audio_file.name}]})
+    chatbot.append((f"[Audio file: {audio_file.name}]", None))
+    return chatbot, task_history
+
+
+def reset_user_input():
+    """Reset the user input field."""
+    return gr.Textbox.update(value='')
+
+
+def reset_state(task_history):
+    """Reset the chat history."""
+    return [], []
+
+
+def regenerate(chatbot, task_history):
+    """Regenerate the last bot response."""
+    if task_history and task_history[-1]['role'] == 'assistant':
+        task_history.pop()
+        chatbot.pop()
+    if task_history:
+        chatbot, task_history = predict(chatbot, task_history)
+    return chatbot, task_history
+
+
+def predict(chatbot, task_history):
+    """Generate a response from the model."""
+    print(f"{task_history=}")
+    print(f"{chatbot=}")
+    text = processor.apply_chat_template(task_history, add_generation_prompt=True, tokenize=False)
+    audios = []
+    for message in task_history:
+        if isinstance(message["content"], list):
+            for ele in message["content"]:
+                if ele["type"] == "audio":
+                    audios.append(
+                        librosa.load(ele['audio_url'], sr=processor.feature_extractor.sampling_rate)[0]
+                    )
+
+    if len(audios)==0:
+        audios=None
+    print(f"{text=}")
+    print(f"{audios=}")
+    inputs = processor(text=text, audios=audios, return_tensors="pt", padding=True)
+    if not _get_args().cpu_only:
+        inputs["input_ids"] = inputs.input_ids.to("cuda")
+
+    generate_ids = model.generate(**inputs, max_length=256)
+    generate_ids = generate_ids[:, inputs.input_ids.size(1):]
+
+    response = processor.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]
+    print(f"{response=}")
+    task_history.append({'role': 'assistant',
+                         'content': response})
+    chatbot.append((None, response)) # Add the response to chatbot
+    return chatbot, task_history
+
+
+def _launch_demo(args):
+    with gr.Blocks() as demo:
+        gr.Markdown(
+            """<p align="center"><img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/blog/qwenaudio/qwen2audio_logo.png" style="height: 80px"/><p>""")
+        gr.Markdown("""<center><font size=8>Qwen2-Audio-Instruct Bot</center>""")
+        gr.Markdown(
+            """\
+<center><font size=3>This WebUI is based on Qwen2-Audio-Instruct, developed by Alibaba Cloud. \
+(本WebUI基于Qwen2-Audio-Instruct打造,实现聊天机器人功能。)</center>""")
+        gr.Markdown("""\
+<center><font size=4>Qwen2-Audio <a href="https://modelscope.cn/models/qwen/Qwen2-Audio-7B">🤖 </a>
+| <a href="https://huggingface.co/Qwen/Qwen2-Audio-7B">🤗</a>  |
+Qwen2-Audio-Instruct <a href="https://modelscope.cn/models/qwen/Qwen2-Audio-7B-Instruct">🤖 </a> |
+<a href="https://huggingface.co/Qwen/Qwen2-Audio-7B-Instruct">🤗</a>  |
+ <a href="https://github.com/QwenLM/Qwen2-Audio">Github</a></center>""")
+        chatbot = mgr.Chatbot(label='Qwen2-Audio-7B-Instruct', elem_classes="control-height", height=750)
+
+        user_input = mgr.MultimodalInput(
+            interactive=True,
+            sources=['microphone', 'upload'],
+            submit_button_props=dict(value="🚀 Submit (发送)"),
+            upload_button_props=dict(value="📁 Upload (上传文件)", show_progress=True),
+        )
+        task_history = gr.State([])
+
+        with gr.Row():
+            empty_bin = gr.Button("🧹 Clear History (清除历史)")
+            regen_btn = gr.Button("🤔️ Regenerate (重试)")
+
+        user_input.submit(fn=add_text,
+                          inputs=[chatbot, task_history, user_input],
+                          outputs=[chatbot, task_history, user_input]).then(
+            predict, [chatbot, task_history], [chatbot, task_history], show_progress=True
+        )
+        empty_bin.click(reset_state, outputs=[chatbot, task_history], show_progress=True)
+        regen_btn.click(regenerate, [chatbot, task_history], [chatbot, task_history], show_progress=True)
+
+    demo.queue().launch(
+        share=False,
+        inbrowser=args.inbrowser,
+        server_port=args.server_port,
+        server_name=args.server_name,
+    )
+
+
+if __name__ == "__main__":
+    args = _get_args()
+    if args.cpu_only:
+        device_map = "cpu"
+    else:
+        device_map = "auto"
+
+    model = Qwen2AudioForConditionalGeneration.from_pretrained(
+        args.checkpoint_path,
+        torch_dtype="auto",
+        device_map=device_map,
+        resume_download=True,
+    ).eval()
+    model.generation_config.max_new_tokens = 2048 # For chat.
+    print("generation_config", model.generation_config)
+    processor = AutoProcessor.from_pretrained(args.checkpoint_path, resume_download=True)
+    _launch_demo(args)
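In demo/web_demo_audio.py, `predict` walks the whole chat history and gathers every audio clip before calling the processor. The sketch below isolates that traversal so it can be run without loading a model. `collect_audio_urls` is a hypothetical helper, not part of the commit. Its use of `.get("type")` is a deliberate deviation from the committed code, so that elements without a `"type"` key (such as those appended by `add_file`) are skipped instead of raising `KeyError`.

```python
def collect_audio_urls(task_history):
    """Return audio paths from chat messages, in conversation order."""
    urls = []
    for message in task_history:
        content = message["content"]
        # Assistant turns store a plain string; only user turns hold a list.
        if not isinstance(content, list):
            continue
        for element in content:
            # .get() tolerates elements that lack a "type" key.
            if element.get("type") == "audio":
                urls.append(element["audio_url"])
    return urls


if __name__ == "__main__":
    history = [
        {"role": "user", "content": [
            {"type": "audio", "audio_url": "a.wav"},
            {"type": "text", "text": "What is this sound?"},
        ]},
        {"role": "assistant", "content": "It sounds like rain."},
        {"role": "user", "content": [{"audio": "b.wav"}]},
    ]
    print(collect_audio_urls(history))  # ['a.wav']
```

In the demo, each collected path is then loaded with `librosa.load` at the processor's sampling rate, as `predict` does.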

eval_audio/EVALUATION.md

ADDED

@@ -0,0 +1,176 @@
+## Evaluation
+
+### Dependencies
+
+```bash
+apt-get update
+apt-get install openjdk-8-jdk
+pip install evaluate
+pip install sacrebleu==1.5.1
+pip install edit_distance
+pip install editdistance
+pip install jiwer
+pip install scikit-image
+pip install textdistance
+pip install sed_eval
+pip install more_itertools
+pip install zhconv
+```
+### ASR
+
+- Data
+
+> LibriSpeech: https://www.openslr.org/12
+
+> Aishell2: https://www.aishelltech.com/aishell_2
+
+> common voice 15: https://commonvoice.mozilla.org/en/datasets
+
+> Fluers: https://huggingface.co/datasets/google/fleurs
+
+```bash
+mkdir -p data/asr && cd data/asr
+
+# download audios from above links
+
+# download converted files
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/librispeech_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/aishell2_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/cv15_asr_en_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/cv15_asr_zh_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/cv15_asr_yue_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/cv15_asr_fr_eval.jsonl
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/fleurs_asr_zh_eval.jsonl
+cd ../..
+```
+
+```bash
+for ds in "librispeech" "aishell2" "cv15_en" "cv15_zh" "cv15_yue" "cv15_fr" "fluers_zh"
+do
+    python -m torch.distributed.launch --use_env \
+        --nproc_per_node ${NPROC_PER_NODE:-8} --nnodes 1 \
+        evaluate_asr.py \
+        --checkpoint $checkpoint \
+        --dataset $ds \
+        --batch-size 20 \
+        --num-workers 2
+done
+```
+### S2TT
+
+- Data
+
+> CoVoST 2: https://github.com/facebookresearch/covost
+
+```bash
+mkdir -p data/st && cd data/st
+
+# download audios from https://commonvoice.mozilla.org/en/datasets
+
+# download converted files
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/covost2_eval.jsonl
+
+cd ../..
+```
+- Evaluate
+```bash
+ds="covost2"
+python -m torch.distributed.launch --use-env \
+    --nproc_per_node ${NPROC_PER_NODE:-8} --nnodes 1 \
+    evaluate_st.py \
+    --checkpoint $checkpoint \
+    --dataset $ds \
+    --batch-size 8 \
+    --num-workers 2
+```
+
+### SER
+- Data
+> MELD: https://affective-meld.github.io/
+
+
+
+```bash
+mkdir -p data/ser && cd data/ser
+
+# download MELD datasets from above link
+
+# download converted files
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/meld_eval.jsonl
+
+
+cd ../..
+```
+
+- Evaluate
+
+```bash
+ds="meld"
+python -m torch.distributed.launch --use-env \
+    --nproc_per_node ${NPROC_PER_NODE:-8} --nnodes 1 \
+    evaluate_emotion.py \
+    --checkpoint $checkpoint \
+    --dataset $ds \
+    --batch-size 8 \
+    --num-workers 2
+```
+
+
+### VSC
+- Data
+> VocalSound: https://github.com/YuanGongND/vocalsound
+
+
+```bash
+mkdir -p data/vsc && cd data/vsc
+
+# download dataset from the above link
+# download converted files
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/vocalsound_eval.jsonl
+
+
+cd ../..
+```
+
+- Evaluate
+
+```bash
+ds="vocalsound"
+python -m torch.distributed.launch --use-env \
+    --nproc_per_node ${NPROC_PER_NODE:-8} --nnodes 1 \
+    evaluate_aqa.py \
+    --checkpoint $checkpoint \
+    --dataset $ds \
+    --batch-size 8 \
+    --num-workers 2
+```
+
+### AIR-BENCH
+- Data
+> AIR-BENCH: https://huggingface.co/datasets/qyang1021/AIR-Bench-Dataset
+
+```bash
+mkdir -p data/airbench && cd data/airbench
+
+# download dataset from the above link
+# download converted files
+wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-Audio/evaluation/airbench_level_3_eval.jsonl
+
+
+cd ../..
+```
+
+```bash
+ds="airbench_level3"
+python -m torch.distributed.launch --use-env \
+    --nproc_per_node ${NPROC_PER_NODE:-8} --nnodes 1 \
+    evaluate_chat.py \
+    --checkpoint $checkpoint \
+    --dataset $ds \
+    --batch-size 8 \
+    --num-workers 2
+```
+
+### Acknowledgement
+
+Part of these codes are borrowed from [Whisper](https://github.com/openai/whisper) , [speechio](https://github.com/speechio/chinese_text_normalization), thanks for their wonderful work.
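The dependency list in eval_audio/EVALUATION.md includes `jiwer` and `editdistance`, which suggests that the ASR scripts score transcripts by edit distance. The body of `evaluate_asr.py` is not shown here, so the following is only a minimal sketch of word error rate under its standard definition (word-level Levenshtein distance divided by the number of reference words), not the scoring code from this commit. The name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the distance between the processed reference prefix
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("a b c d", "a b"))  # 0.5
```

Real evaluations normally normalize text first (casing, punctuation, number formats), which is presumably the role of the `whisper_normalizer` and `cn_tn.py` files added in this commit.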

eval_audio/cn_tn.py

ADDED

|

@@ -0,0 +1,1204 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

# coding=utf-8

|

| 3 |

+

# copied from https://github.com/speechio/chinese_text_normalization/blob/master/python/cn_tn.py

|

| 4 |

+

# Authors:

|

| 5 |

+

# 2019.5 Zhiyang Zhou (https://github.com/Joee1995/chn_text_norm.git)

|

| 6 |

+

# 2019.9 - 2022 Jiayu DU

|

| 7 |

+

#

|

| 8 |

+

# requirements:

|

| 9 |

+

# - python 3.X

|

| 10 |

+

# notes: python 2.X WILL fail or produce misleading results

|

| 11 |

+

|

| 12 |

+

import sys, os, argparse

|

| 13 |

+

import string, re

|

| 14 |

+

import csv

|

| 15 |

+

|

| 16 |

+

# ================================================================================ #

|

| 17 |

+

# basic constant

|

| 18 |

+

# ================================================================================ #

|

| 19 |

+

CHINESE_DIGIS = u'零一二三四五六七八九'

|

| 20 |

+

BIG_CHINESE_DIGIS_SIMPLIFIED = u'零壹贰叁肆伍陆柒捌玖'

|

| 21 |

+

BIG_CHINESE_DIGIS_TRADITIONAL = u'零壹貳參肆伍陸柒捌玖'

|

| 22 |

+

SMALLER_BIG_CHINESE_UNITS_SIMPLIFIED = u'十百千万'

|

| 23 |

+

SMALLER_BIG_CHINESE_UNITS_TRADITIONAL = u'拾佰仟萬'

|

| 24 |

+

LARGER_CHINESE_NUMERING_UNITS_SIMPLIFIED = u'亿兆京垓秭穰沟涧正载'

|

| 25 |

+

LARGER_CHINESE_NUMERING_UNITS_TRADITIONAL = u'億兆京垓秭穰溝澗正載'

|

| 26 |

+

SMALLER_CHINESE_NUMERING_UNITS_SIMPLIFIED = u'十百千万'

|

| 27 |

+

SMALLER_CHINESE_NUMERING_UNITS_TRADITIONAL = u'拾佰仟萬'

|

| 28 |

+

|

| 29 |

+

ZERO_ALT = u'〇'

|

| 30 |

+

ONE_ALT = u'幺'

|

| 31 |

+

TWO_ALTS = [u'两', u'兩']

|

| 32 |

+

|

| 33 |

+

POSITIVE = [u'正', u'正']

|

| 34 |

+

NEGATIVE = [u'负', u'負']

|

| 35 |

+

POINT = [u'点', u'點']

|

| 36 |

+

# PLUS = [u'加', u'加']

|

| 37 |

+

# SIL = [u'杠', u'槓']

|

| 38 |

+

|

| 39 |

+

FILLER_CHARS = ['呃', '啊']

|

| 40 |

+

|

| 41 |

+

ER_WHITELIST = '(儿女|儿子|儿孙|女儿|儿媳|妻儿|' \

|

| 42 |

+

'胎儿|婴儿|新生儿|婴幼儿|幼儿|少儿|小儿|儿歌|儿童|儿科|托儿所|孤儿|' \

|

| 43 |

+

'儿戏|儿化|台儿庄|鹿儿岛|正儿八经|吊儿郎当|生儿育女|托儿带女|养儿防老|痴儿呆女|' \

|

| 44 |

+

'佳儿佳妇|儿怜兽扰|儿无常父|儿不嫌母丑|儿行千里母担忧|儿大不由爷|苏乞儿)'

|

| 45 |

+

ER_WHITELIST_PATTERN = re.compile(ER_WHITELIST)

|

| 46 |

+

|

| 47 |

+

# Chinese numbering system types

|

| 48 |

+

NUMBERING_TYPES = ['low', 'mid', 'high']

|

| 49 |

+

|

| 50 |

+

CURRENCY_NAMES = '(人民币|美元|日元|英镑|欧元|马克|法郎|加拿大元|澳元|港币|先令|芬兰马克|爱尔兰镑|' \

|

| 51 |

+

'里拉|荷兰盾|埃斯库多|比塞塔|印尼盾|林吉特|新西兰元|比索|卢布|新加坡元|韩元|泰铢)'

|

| 52 |

+

CURRENCY_UNITS = '((亿|千万|百万|万|千|百)|(亿|千万|百万|万|千|百|)元|(亿|千万|百万|万|千|百|)块|角|毛|分)'

|

| 53 |

+

COM_QUANTIFIERS = '(匹|张|座|回|场|尾|条|个|首|阙|阵|网|炮|顶|丘|棵|只|支|袭|辆|挑|担|颗|壳|窠|曲|墙|群|腔|' \

|

| 54 |

+

'砣|座|客|贯|扎|捆|刀|令|打|手|罗|坡|山|岭|江|溪|钟|队|单|双|对|出|口|头|脚|板|跳|枝|件|贴|' \

|

| 55 |

+

'针|线|管|名|位|身|堂|课|本|页|家|户|层|丝|毫|厘|分|钱|两|斤|担|铢|石|钧|锱|忽|(千|毫|微)克|' \

|

| 56 |

+

'毫|厘|分|寸|尺|丈|里|寻|常|铺|程|(千|分|厘|毫|微)米|撮|勺|合|升|斗|石|盘|碗|碟|叠|桶|笼|盆|' \

|

| 57 |

+

'盒|杯|钟|斛|锅|簋|篮|盘|桶|罐|瓶|壶|卮|盏|箩|箱|煲|啖|袋|钵|年|月|日|季|刻|时|周|天|秒|分|旬|' \

|

| 58 |

+

'纪|岁|世|更|夜|春|夏|秋|冬|代|伏|辈|丸|泡|粒|颗|幢|堆|条|根|支|道|面|片|张|颗|块)'

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

# Punctuation information is based on the Zhon project (https://github.com/tsroten/zhon.git)

|

| 62 |

+

CN_PUNCS_STOP = '！？｡。'

|

| 63 |

+

CN_PUNCS_NONSTOP = '＂＃＄％＆＇（）＊＋，－／：；＜＝＞＠［＼］＾＿｀｛｜｝～｟｠｢｣､、〃《》「」『』【】〔〕〖〗〘〙〚〛〜〝〞〟〰〾〿–—‘’‛“”„‟…‧﹏·〈〉-'

|

| 64 |

+

CN_PUNCS = CN_PUNCS_STOP + CN_PUNCS_NONSTOP

|

| 65 |

+

|

| 66 |

+

PUNCS = CN_PUNCS + string.punctuation

|

| 67 |

+

PUNCS_TRANSFORM = str.maketrans(PUNCS, ' ' * len(PUNCS), '') # replace puncs with space
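The translation table above maps every punctuation character to a space. The third argument to `str.maketrans` lists characters to delete, and it is empty here. A minimal sketch of how such a table behaves, assuming a small illustrative punctuation set (`DEMO_PUNCS` and `strip_puncs` are hypothetical names, not part of `cn_tn.py`):

```python
import string

# Illustrative subset only: ideographic full stop, full-width comma,
# ideographic comma, full-width '!' and '?', plus ASCII punctuation.
DEMO_PUNCS = '\u3002\uff0c\u3001\uff01\uff1f' + string.punctuation

# Map each punctuation character to a single space (same idea as PUNCS_TRANSFORM).
DEMO_TRANSFORM = str.maketrans(DEMO_PUNCS, ' ' * len(DEMO_PUNCS))


def strip_puncs(text: str) -> str:
    """Replace punctuation with spaces, then collapse runs of whitespace."""
    return ' '.join(text.translate(DEMO_TRANSFORM).split())
```

Collapsing the whitespace afterwards is a choice made for this sketch; whether the normalizer does the same is not shown in this part of the file.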

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

# https://zh.wikipedia.org/wiki/全行和半行

|

| 71 |

+

QJ2BJ = {
    '　': ' ',
    '！': '!',
    '＂': '"',
    '＃': '#',
    '＄': '$',
    '％': '%',
    '＆': '&',
    '＇': "'",
    '（': '(',
    '）': ')',
    '＊': '*',
    '＋': '+',
    '，': ',',
    '－': '-',
    '．': '.',
    '／': '/',
    '０': '0',
    '１': '1',
    '２': '2',
    '３': '3',
    '４': '4',
    '５': '5',
    '６': '6',
    '７': '7',
    '８': '8',
    '９': '9',
    '：': ':',
    '；': ';',
    '＜': '<',
    '＝': '=',
    '＞': '>',
    '？': '?',
    '＠': '@',
    'Ａ': 'A',
    'Ｂ': 'B',
    'Ｃ': 'C',
    'Ｄ': 'D',
    'Ｅ': 'E',
    'Ｆ': 'F',
    'Ｇ': 'G',
    'Ｈ': 'H',
    'Ｉ': 'I',
    'Ｊ': 'J',
    'Ｋ': 'K',
    'Ｌ': 'L',
    'Ｍ': 'M',
    'Ｎ': 'N',
    'Ｏ': 'O',
    'Ｐ': 'P',
    'Ｑ': 'Q',
    'Ｒ': 'R',
    'Ｓ': 'S',
    'Ｔ': 'T',
    'Ｕ': 'U',
    'Ｖ': 'V',
    'Ｗ': 'W',
    'Ｘ': 'X',
    'Ｙ': 'Y',
    'Ｚ': 'Z',
    '［': '[',
    '＼': '\\',
    '］': ']',
    '＾': '^',
    '＿': '_',
    '｀': '`',
    'ａ': 'a',
    'ｂ': 'b',
    'ｃ': 'c',
    'ｄ': 'd',
    'ｅ': 'e',
    'ｆ': 'f',
    'ｇ': 'g',
    'ｈ': 'h',
    'ｉ': 'i',
    'ｊ': 'j',
    'ｋ': 'k',
    'ｌ': 'l',
    'ｍ': 'm',
    'ｎ': 'n',
    'ｏ': 'o',
    'ｐ': 'p',
    'ｑ': 'q',
    'ｒ': 'r',
    'ｓ': 's',
    'ｔ': 't',
    'ｕ': 'u',
    'ｖ': 'v',
    'ｗ': 'w',
    'ｘ': 'x',
    'ｙ': 'y',
    'ｚ': 'z',
    '｛': '{',
    '｜': '|',
    '｝': '}',
    '～': '~',
}

|

| 168 |

+

QJ2BJ_TRANSFORM = str.maketrans(''.join(QJ2BJ.keys()), ''.join(QJ2BJ.values()), '')
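The table above converts full-width (quanjiao) characters to their half-width (banjiao) ASCII equivalents. As a rough sketch of the same idea, the mapping can also be derived from code points: the full-width forms U+FF01 to U+FF5E sit exactly 0xFEE0 above ASCII `!` to `~`, and the ideographic space U+3000 maps to an ordinary space. The names `FULLWIDTH_TABLE` and `to_halfwidth` are hypothetical and do not appear in `cn_tn.py`:

```python
# Build an ordinal-to-ordinal table: full-width '!'..'~' -> ASCII '!'..'~'.
FULLWIDTH_TABLE = {cp: cp - 0xFEE0 for cp in range(0xFF01, 0xFF5F)}
FULLWIDTH_TABLE[0x3000] = 0x20  # ideographic space -> ASCII space


def to_halfwidth(text: str) -> str:
    """Convert full-width ASCII variants to half-width; leave other text alone."""
    return text.translate(FULLWIDTH_TABLE)


# Usage: full-width "ABC123!" written with escapes to keep the source unambiguous.
sample = '\uff21\uff22\uff23\uff11\uff12\uff13\uff01'
converted = to_halfwidth(sample)
```

Spelling the dictionary out entry by entry, as the file does, is easier to audit; the offset form is only a compact way to see what the table contains.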

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

# 2013 China National Standard: https://zh.wikipedia.org/wiki/通用规范汉字表, raw resources:

|

| 172 |

+

# https://github.com/mozillazg/pinyin-data/blob/master/kMandarin_8105.txt with 8105 Chinese characters in total

|

| 173 |

+

CN_CHARS_COMMON = (

|

| 174 |

+

'一丁七万丈三上下不与丏丐丑专且丕世丘丙业丛东丝丞丢两严丧个丫中丰串临丸丹为主丽举'

|

| 175 |

+

'乂乃久么义之乌乍乎乏乐乒乓乔乖乘乙乜九乞也习乡书乩买乱乳乸乾了予争事二亍于亏云互'

|

| 176 |

+

'亓五井亘亚些亟亡亢交亥亦产亨亩享京亭亮亲亳亵亶亸亹人亿什仁仂仃仄仅仆仇仉今介仍从'

|

| 177 |

+

'仑仓仔仕他仗付仙仝仞仟仡代令以仨仪仫们仰仲仳仵件价任份仿企伈伉伊伋伍伎伏伐休众优'

|

| 178 |

+

'伙会伛伞伟传伢伣伤伥伦伧伪伫伭伯估伲伴伶伸伺似伽伾佁佃但位低住佐佑体何佖佗佘余佚'

|

| 179 |

+

'佛作佝佞佟你佣佤佥佩佬佯佰佳佴佶佸佺佻佼佽佾使侁侂侃侄侈侉例侍侏侑侔侗侘供依侠侣'

|

| 180 |

+

'侥侦侧侨侩侪侬侮侯侴侵侹便促俄俅俊俍俎俏俐俑俗俘俙俚俜保俞俟信俣俦俨俩俪俫俭修俯'

|

| 181 |

+

'俱俳俵俶俸俺俾倌倍倏倒倓倔倕倘候倚倜倞借倡倥倦倧倨倩倪倬倭倮倴债倻值倾偁偃假偈偌'

|

| 182 |

+

'偎偏偓偕做停偡健偬偭偰偲偶偷偻偾偿傀傃傅傈傉傍傒傕傣傥傧储傩催傲傺傻僇僎像僔僖僚'

|

| 183 |

+

'僦僧僬僭僮僰僳僵僻儆儇儋儒儡儦儳儴儿兀允元兄充兆先光克免兑兔兕兖党兜兢入全八公六'

|

| 184 |

+

'兮兰共关兴兵其具典兹养兼兽冀冁内冈冉册再冏冒冔冕冗写军农冠冢冤冥冬冮冯冰冱冲决况'

|

| 185 |

+

'冶冷冻冼冽净凄准凇凉凋凌减凑凓凘凛凝几凡凤凫凭凯凰凳凶凸凹出击凼函凿刀刁刃分切刈'

|

| 186 |

+

'刊刍刎刑划刖列刘则刚创初删判刨利别刬刭刮到刳制刷券刹刺刻刽刿剀剁剂剃剅削剋剌前剐'

|

| 187 |

+

'剑剔剕剖剜剞剟剡剥剧剩剪副割剽剿劁劂劄劈劐劓力劝办功加务劢劣动助努劫劬劭励劲劳劼'

|

| 188 |

+

'劾势勃勇勉勋勍勐勒勔勖勘勚募勠勤勰勺勾勿匀包匆匈匍匏匐匕化北匙匜匝匠匡匣匦匪匮匹'

|

| 189 |

+

'区医匼匾匿十千卅升午卉半华协卑卒卓单卖南博卜卞卟占卡卢卣卤卦卧卫卬卮卯印危即却卵'

|

| 190 |

+

'卷卸卺卿厂厄厅历厉压厌厍厕厖厘厚厝原厢厣厥厦厨厩厮去厾县叁参叆叇又叉及友双反发叔'

|

| 191 |

+

'叕取受变叙叚叛叟叠口古句另叨叩只叫召叭叮可台叱史右叵叶号司叹叻叼叽吁吃各吆合吉吊'

|

| 192 |

+

'同名后吏吐向吒吓吕吖吗君吝吞吟吠吡吣否吧吨吩含听吭吮启吱吲吴吵吸吹吻吼吽吾呀呃呆'

|

| 193 |

+

'呇呈告呋呐呒呓呔呕呖呗员呙呛呜呢呣呤呦周呱呲味呵呶呷呸呻呼命咀咂咄咆咇咉咋和咍咎'

|

| 194 |

+

'咏咐咒咔咕咖咙咚咛咝咡咣咤咥咦咧咨咩咪咫咬咯咱咳咴咸咺咻咽咿哀品哂哃哄哆哇哈哉哌'

|

| 195 |

+

'响哎哏哐哑哒哓哔哕哗哙哚哝哞哟哢哥哦哧哨哩哪哭哮哱哲哳哺哼哽哿唁唆唇唉唏唐唑唔唛'

|

| 196 |

+

'唝唠唢唣唤唧唪唬售唯唰唱唳唵唷唼唾唿啁啃啄商啉啊啐啕啖啜啡啤啥啦啧啪啫啬啭啮啰啴'

|

| 197 |

+

'啵啶啷啸啻啼啾喀喁喂喃善喆喇喈喉喊喋喏喑喔喘喙喜喝喟喤喧喱喳喵喷喹喻喽喾嗄嗅嗉嗌'

|

| 198 |

+

'嗍嗐嗑嗒嗓嗔嗖嗜嗝嗞嗟嗡嗣嗤嗥嗦嗨嗪嗫嗬嗯嗲嗳嗵嗷嗽嗾嘀嘁嘈嘉嘌嘎嘏嘘嘚嘛嘞嘟嘡'

|

| 199 |

+

'嘣嘤嘧嘬嘭嘱嘲嘴嘶嘹嘻嘿噀噂噇噌噍噎噔噗噘噙噜噢噤器噩噪噫噬噱噶噻噼嚄嚅嚆嚎嚏嚓'

|

| 200 |

+

'嚚嚣嚭嚯嚷嚼囊囔囚四回囟因囡团囤囫园困囱围囵囷囹固国图囿圃圄圆圈圉圊圌圐圙圜土圢'

|

| 201 |

+

'圣在圩圪圫圬圭圮圯地圲圳圹场圻圾址坂均坉坊坋坌坍坎坏坐坑坒块坚坛坜坝坞坟坠坡坤坥'

|

| 202 |

+

'坦坨坩坪坫坬坭坯坰坳坷坻坼坽垂垃垄垆垈型垌垍垎垏垒垓垕垙垚垛垞垟垠垡垢垣垤垦垧垩'

|

| 203 |

+

'垫垭垮垯垱垲垴垵垸垺垾垿埂埃埆埇埋埌城埏埒埔埕埗埘埙埚埝域埠埤埪埫埭埯埴埵埸培基'

|

| 204 |

+

'埼埽堂堃堆堇堉堋堌堍堎堐堑堕堙堞堠堡堤堧堨堪堰堲堵堼堽堾塄塅塆塌塍塑塔塘塝塞塥填'

|

| 205 |

+

'塬塱塾墀墁境墅墈墉墐墒墓墕墘墙墚增墟墡墣墦墨墩墼壁壅壑壕壤士壬壮声壳壶壸壹处备复'

|

| 206 |

+

'夏夐夔夕外夙多夜够夤夥大天太夫夬夭央夯失头夷夸夹夺夼奁奂奄奇奈奉奋奎奏契奓奔奕奖'

|

| 207 |

+