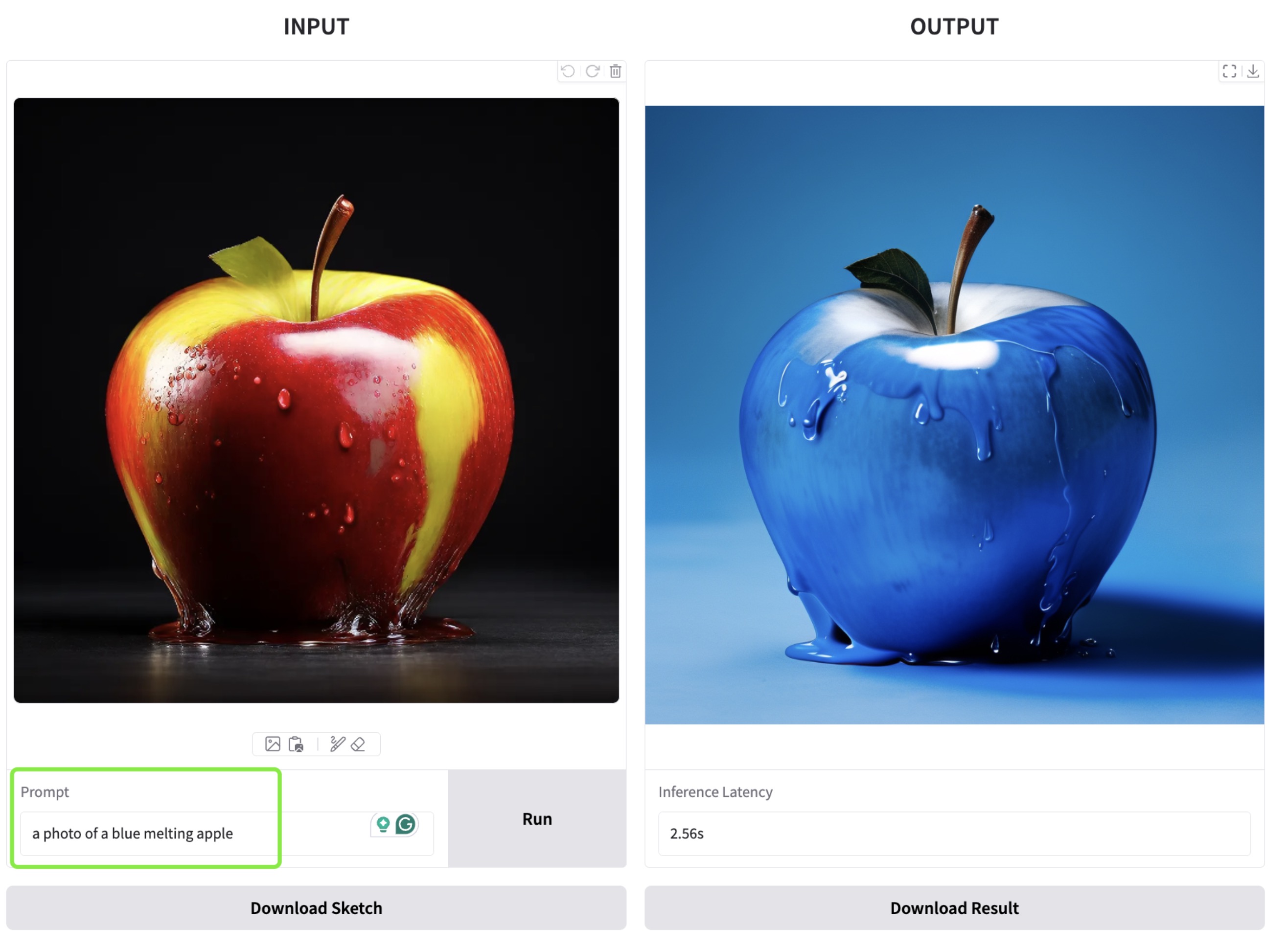

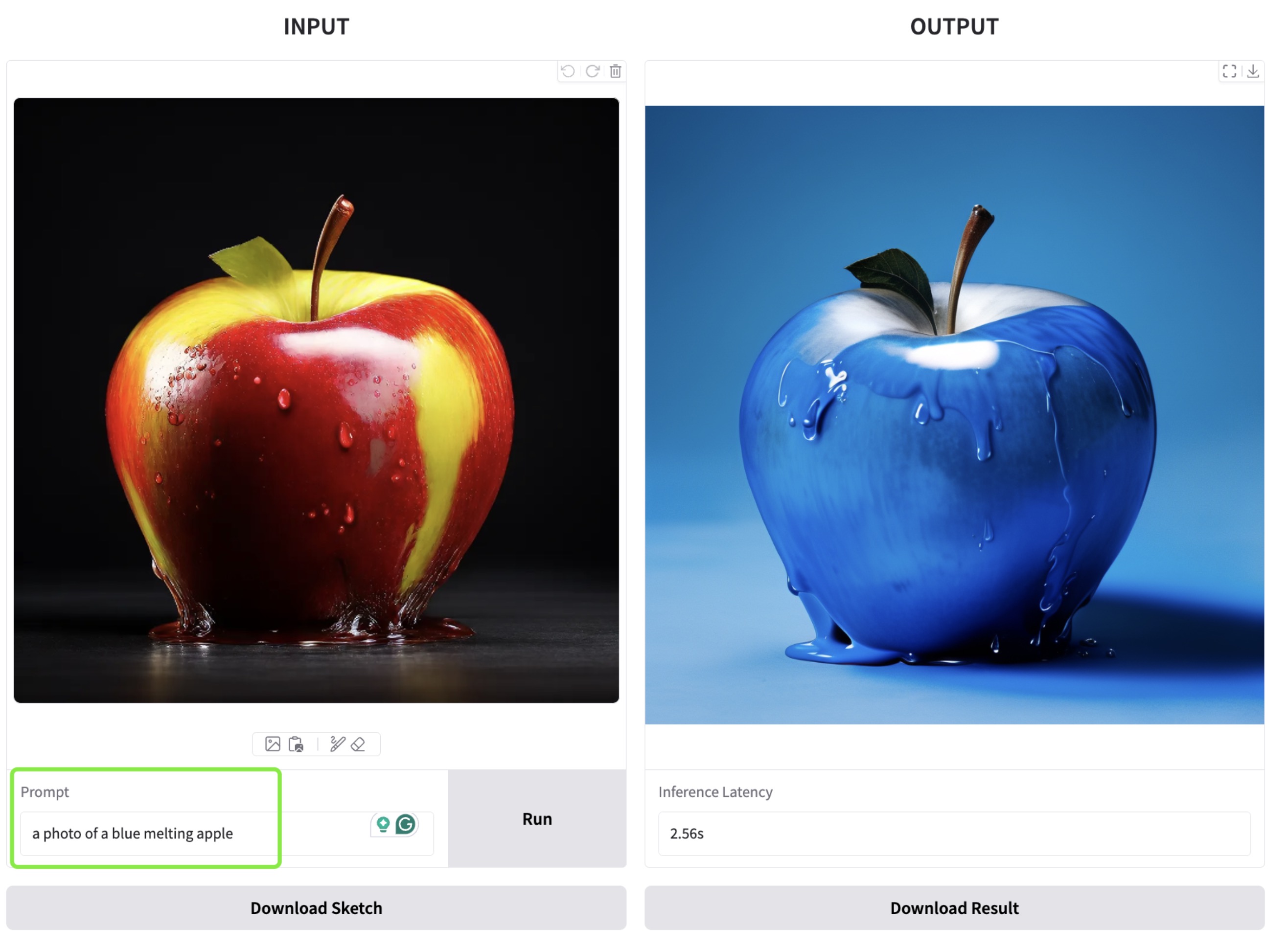

## 🔥 ControlNet

We incorporate a [ControlNet](https://github.com/lllyasviel/ControlNet)-like module that enables fine-grained control over text-to-image diffusion models. Specifically, we implement a ControlNet-Transformer architecture tailored to Transformer backbones, which provides explicit controllability together with high-quality image generation.
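The core idea of a ControlNet-style branch on a Transformer can be illustrated with a toy NumPy sketch. It assumes the recipe of the original ControlNet: a trainable copy of the first few base blocks processes the latent tokens plus the condition, and each copy's output is added back to the frozen main branch through a zero-initialized linear projection. This is not Sana's implementation. All names (`make_block`, `run_block`, `forward`, `N_CTRL`, `zero_proj`, `cond_in`) are hypothetical, and the residual `tanh` update only stands in for real attention and MLP layers.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 8         # hidden size (illustrative)
N_BLOCKS = 4  # depth of the frozen base model (illustrative)
N_CTRL = 2    # leading blocks copied into the control branch (illustrative)


def make_block(rng, d):
    """Toy stand-in for a transformer block: one weight matrix."""
    return {"w": rng.standard_normal((d, d)) / np.sqrt(d)}


def run_block(block, h):
    # Residual update standing in for attention + MLP.
    return h + np.tanh(h @ block["w"])


# Frozen base model.
base_blocks = [make_block(rng, D) for _ in range(N_BLOCKS)]

# Control branch: trainable copies of the first N_CTRL base blocks...
ctrl_blocks = [{"w": b["w"].copy()} for b in base_blocks[:N_CTRL]]
# ...with zero-initialized output projections ("zero linear" layers).
zero_proj = [np.zeros((D, D)) for _ in range(N_CTRL)]
# Hypothetical projection of condition tokens (e.g. a patchified edge map).
cond_in = rng.standard_normal((D, D)) / np.sqrt(D)


def forward(x, cond, use_control=True):
    h = x             # main (frozen) branch
    c = x + cond @ cond_in  # control branch input: tokens plus condition
    for i, block in enumerate(base_blocks):
        h = run_block(block, h)
        if use_control and i < N_CTRL:
            c = run_block(ctrl_blocks[i], c)
            h = h + c @ zero_proj[i]  # inject the control residual
    return h


x = rng.standard_normal((16, D))     # 16 latent tokens
cond = rng.standard_normal((16, D))  # 16 condition tokens
same = np.allclose(forward(x, cond), forward(x, cond, use_control=False))
print(same)  # True: the zero projections make the branch a no-op at init
```

Because the projections start at zero, adding the control branch leaves the pretrained model's outputs unchanged at initialization. Training then grows the control signal gradually instead of disrupting the base generator.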

## Inference of `Sana + ControlNet`

### 1). Gradio Interface

```bash
python app/app_sana_controlnet_hed.py \
    --config configs/sana_controlnet_config/Sana_1600M_1024px_controlnet_bf16.yaml \
    --model_path hf://Efficient-Large-Model/Sana_1600M_1024px_BF16_ControlNet_HED/checkpoints/Sana_1600M_1024px_BF16_ControlNet_HED.pth
```

### 2). Inference with JSON file

```bash
python tools/controlnet/inference_controlnet.py \
    --config configs/sana_controlnet_config/Sana_1600M_1024px_controlnet_bf16.yaml \
    --model_path hf://Efficient-Large-Model/Sana_1600M_1024px_BF16_ControlNet_HED/checkpoints/Sana_1600M_1024px_BF16_ControlNet_HED.pth \
    --json_file asset/controlnet/samples_controlnet.json
```

### 3). Inference code snippet

```python
import torch
from PIL import Image

from app.sana_controlnet_pipeline import SanaControlNetPipeline

device = "cuda" if torch.cuda.is_available() else "cpu"

# Build the pipeline from the ControlNet config, then load the HED ControlNet checkpoint.
pipe = SanaControlNetPipeline("configs/sana_controlnet_config/Sana_1600M_1024px_controlnet_bf16.yaml")
pipe.from_pretrained("hf://Efficient-Large-Model/Sana_1600M_1024px_BF16_ControlNet_HED/checkpoints/Sana_1600M_1024px_BF16_ControlNet_HED.pth")

# Reference image that supplies the structural (HED edge) condition.
ref_image = Image.open("asset/controlnet/ref_images/A transparent sculpture of a duck made out of glass. The sculpture is in front of a painting of a la.jpg")
prompt = "A transparent sculpture of a duck made out of glass. The sculpture is in front of a painting of a landscape."

images = pipe(
    prompt=prompt,
    ref_image=ref_image,
    guidance_scale=4.5,
    num_inference_steps=10,
    sketch_thickness=2,
    generator=torch.Generator(device=device).manual_seed(0),  # fixed seed for reproducible results
)
```

## Training of `Sana + ControlNet`

### Coming soon