
Commit 7af19e8 by Victoria Oberascher (parent: 9b22cca)

update readme

Files changed:
- README.md +165 -35
- assets/example_confidence_curves.png +0 -0

README.md CHANGED


```
pip install evaluate git+https://github.com/SEA-AI/seametrics@develop
```

## Basic Usage

Here's how to quickly evaluate your object detection models using SEA-AI/det-metrics:

```python
...
print(results)
```

This outputs the following dictionary containing metrics for the detection model. Its key is the model name, or "custom" if no model names are available, as in this case:

```json
{
  "custom": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  }
}
```

- `metrics`: A dictionary containing performance metrics for each area range.
- `eval`: The evaluation results accumulated by `COCOeval.accumulate()`.
- `params`: The COCOeval parameters object.

See [Output Values](#output-values) for more detailed information about the returned results structure, which includes the `metrics`, `eval`, and `params` fields for each model passed as input.

## FiftyOne Integration

```python
import evaluate
import logging
from seametrics.payload.processor import PayloadProcessor

logging.basicConfig(level=logging.WARNING)

# Configure your dataset and model details
processor = PayloadProcessor(
    dataset_name="SAILING_DATASET_QA",
    gt_field="ground_truth_det",
    models=["yolov5n6_RGB_D2304-v1_9C", "tf1zoo_ssd-mobilenet-v2_agnostic_D2207"],
    sequence_list=["Trip_14_Seq_1"],
    data_type="rgb",
)

# Evaluate using SEA-AI/det-metrics
module = evaluate.load("SEA-AI/det-metrics", payload=processor.payload)
results = module.compute()

print(results)
```

This outputs a dictionary with one entry per model, keyed by model name:

```json
{
  "yolov5n6_RGB_D2304-v1_9C": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  },
  "tf1zoo_ssd-mobilenet-v2_agnostic_D2207": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  }
}
```

- `metrics`: A dictionary containing performance metrics for each area range.
- `eval`: The evaluation results accumulated by `COCOeval.accumulate()`.
- `params`: The COCOeval parameters object.

See [Output Values](#output-values) for more detailed information about the returned results structure, which includes the `metrics`, `eval`, and `params` fields for each model passed as input.

## Metric Settings

Customize your evaluation by specifying various parameters when loading SEA-AI/det-metrics:

```python
...
```

## Output Values

For every model passed as input, the results contain the `metrics`, `eval`, and `params` fields. If no specific model was passed (usage without a payload), the default model name "custom" is used.

```json
{
  "model_1": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  },
  "model_2": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  },
  "model_3": {
    "metrics": ...,
    "eval": ...,
    "params": ...
  },
  ...
}
```
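Because the results are keyed by model name, comparing models comes down to iterating over the top-level dictionary. A minimal sketch follows. The `summarize` helper is hypothetical, the `results` dict is a hand-built stand-in for `module.compute()` output, and the assumption that `metrics` is keyed by area-range name (here `"all"`) with `"recall"` and `"f1"` entries should be checked against real output.

```python
# Hand-built stand-in for module.compute() output, following the layout
# {model_name: {"metrics": ..., "eval": ..., "params": ...}}.
# Assumption: "metrics" maps area-range names to dicts with "recall"/"f1".
results = {
    "model_1": {"metrics": {"all": {"recall": 0.8, "f1": 0.75}}, "eval": None, "params": None},
    "model_2": {"metrics": {"all": {"recall": 0.6, "f1": 0.7}}, "eval": None, "params": None},
}


def summarize(results, area="all"):
    """Hypothetical helper: return {model_name: (recall, f1)} for one area range."""
    summary = {}
    for model_name, model_results in results.items():
        area_metrics = model_results["metrics"].get(area, {})
        summary[model_name] = (area_metrics.get("recall"), area_metrics.get("f1"))
    return summary


summary = summarize(results)
for name, (recall, f1) in summary.items():
    print(f"{name}: recall={recall}, f1={f1}")

# Pick the model with the highest F1 for this area range.
best_model = max(summary, key=lambda name: summary[name][1])
print("best:", best_model)
```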

### Metrics

The SEA-AI/det-metrics `metrics` dictionary provides a detailed breakdown of performance metrics for each specified area range:

- **range**: The area range considered.
- **iouThr**: The IoU threshold applied.
- ...
- **fpi**: Number of images with predictions but no ground truths.
- **nImgs**: Total number of images evaluated.
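The two image-level counts can be expressed directly from their definitions. The sketch below assumes per-image `(num_ground_truths, num_predictions)` pairs as input; the helper name is hypothetical, and this is an illustration of the definitions rather than how det-metrics computes them internally.

```python
def image_level_counts(per_image):
    """Hypothetical helper.

    per_image: list of (num_ground_truths, num_predictions) tuples, one per image.
    """
    # fpi: images that contain predictions but no ground-truth objects.
    fpi = sum(1 for n_gt, n_pred in per_image if n_gt == 0 and n_pred > 0)
    # nImgs: every image that was evaluated.
    return {"fpi": fpi, "nImgs": len(per_image)}


counts = image_level_counts([(2, 2), (0, 1), (0, 0), (1, 0)])
print(counts)  # {'fpi': 1, 'nImgs': 4}
```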

### Eval

The SEA-AI/det-metrics evaluation dictionary provides details about evaluation metrics and results. Below is a description of each field:

- **params**: Parameters used for evaluation, defining settings and conditions.
- **counts**: Dimensions of the evaluation arrays, given as a list [T, R, K, A, M]:
  - T: IoU threshold (default: [1e-10])
  - R: Recall threshold (not used)
  - K: Class index (class-agnostic, so only 0)
  - A: Area range (0=all, 1=valid_n, 2=valid_w, 3=tiny, 4=small, 5=medium, 6=large)
  - M: Max detections (default: [100])
- **date**: The date when the evaluation was performed.
- **precision**: A multi-dimensional array [TxRxKxAxM] storing precision values for each evaluation setting.
- **recall**: A multi-dimensional array [TxKxAxM] storing maximum recall values for each evaluation setting.
- **scores**: Scores for each detection.
- **TP**: True positives, i.e. correct detections that match a ground-truth object.
- **FP**: False positives, i.e. incorrect detections that match no ground-truth object.
- **FN**: False negatives, i.e. ground-truth objects that were not detected.
- **duplicates**: Duplicate detections of the same object.
- **support**: Number of ground truth objects for each category.
- **FPI**: False Positives per Image.
- **TPC**: True Positives per Category.
- **FPC**: False Positives per Category.
- **sorted_conf**: Confidence scores of detections sorted in descending order.

> Note:
> **precision** and **recall** are set to -1 for settings with no ground truth objects.

### Params

The `params` value holds the COCO evaluation parameters used by pycocotools, a set of evaluation settings that can be customized. Each parameter means:

- **imgIds**: List of image IDs to use for evaluation. By default, all images are evaluated.
- **catIds**: List of category IDs to use for evaluation. By default, all categories are evaluated.
- **iouThrs**: List of IoU (Intersection over Union) thresholds for evaluation. By default, 10 thresholds from 0.5 to 0.95 in steps of 0.05 are used (i.e., [0.5, 0.55, …, 0.95]).
- **recThrs**: List of recall thresholds for evaluation. By default, 101 thresholds from 0 to 1 in steps of 0.01 are used (i.e., [0, 0.01, …, 1]).
- **areaRng**: Object area ranges for evaluation, defining which object sizes are evaluated. It is a list of [min, max] pairs, each giving a range of areas in square pixels.
- **maxDets**: List of thresholds on the maximum number of detections per image. By default, evaluation uses 1, 10, and 100 detections per image.
- **iouType**: Type of IoU calculation used for evaluation: 'segm' (segmentation), 'bbox' (bounding box), or 'keypoints'.
- **useCats**: Boolean flag indicating whether category labels are used for evaluation (default is 1, meaning true).

> Note:
> If useCats=0, category labels are ignored, as in proposal scoring.
> Multiple areaRngs [Ax2] and maxDets [Mx1] can be specified.
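For reference, the pycocotools defaults listed above can be reproduced in plain Python, mirroring `Params.setDetParams` in pycocotools. This is only a sketch of the stock COCO defaults: as the Eval section shows, det-metrics overrides several of them (for example a single IoU threshold of 1e-10 and its own area ranges), so check `params` in your own results. The `area_labels` helper is hypothetical; it uses the inclusive bounds pycocotools applies when deciding whether an object falls inside an area range.

```python
# Stock COCO detection defaults, mirroring pycocotools' Params.setDetParams.
n_iou = int(round((0.95 - 0.5) / 0.05)) + 1  # 10 thresholds
iou_thrs = [round(0.5 + 0.05 * i, 2) for i in range(n_iou)]
n_rec = int(round((1.0 - 0.0) / 0.01)) + 1  # 101 thresholds
rec_thrs = [round(0.01 * i, 2) for i in range(n_rec)]
max_dets = [1, 10, 100]
area_rng = [[0 ** 2, 1e5 ** 2], [0 ** 2, 32 ** 2], [32 ** 2, 96 ** 2], [96 ** 2, 1e5 ** 2]]
area_rng_lbl = ["all", "small", "medium", "large"]


def area_labels(area, ranges=area_rng, labels=area_rng_lbl):
    """Hypothetical helper: labels of all area ranges containing `area` (px^2)."""
    return [label for (lo, hi), label in zip(ranges, labels) if lo <= area <= hi]


print(iou_thrs)
print(len(rec_thrs))  # 101
print(area_labels(40 * 40))  # a 40x40 box falls in "all" and "medium"
```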

| 251 |

+

## Confidence Curves

|

| 252 |

+

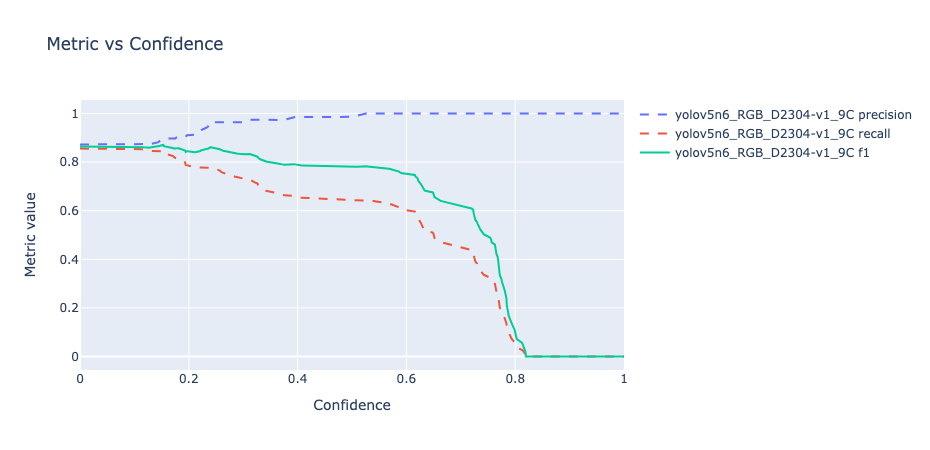

When you use **module.generate_confidence_curves()**, it creates a graph that shows how metrics like **precision, recall, and f1 score** change as you adjust confidence thresholds. This helps you see the trade-offs between precision (how accurate positive predictions are) and recall (how well the model finds all positive instances) at different confidence levels. As confidence scores go up, models usually have higher precision but may find fewer positive instances, reflecting their certainty in making correct predictions.
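Conceptually, each point on such a curve keeps only the detections at or above a confidence threshold and recomputes the metrics. The sketch below illustrates that idea from per-detection `(confidence, is_true_positive)` pairs and a ground-truth count. It is a simplified stand-in for illustration, not the implementation behind `generate_confidence_curves()`, and the helper name is hypothetical.

```python
def confidence_curves(detections, num_gt):
    """Hypothetical helper.

    detections: list of (confidence, is_true_positive) pairs.
    num_gt: number of ground-truth objects.
    Returns (thresholds, precision, recall, f1); entry i describes keeping the
    i+1 most confident detections (ties are not merged in this sketch).
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    thresholds, precision, recall, f1 = [], [], [], []
    tp = fp = 0
    for confidence, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        p = tp / (tp + fp)
        r = tp / num_gt if num_gt > 0 else 0.0
        thresholds.append(confidence)
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r > 0 else 0.0)
    return thresholds, precision, recall, f1


dets = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.3, False)]
thr, prec, rec, f1 = confidence_curves(dets, num_gt=4)
for t, p, r in zip(thr, prec, rec):
    print(f"conf >= {t:.1f}: precision={p:.2f}, recall={r:.2f}")
```

Lowering the threshold from 0.9 to 0.3 in this toy example raises recall from 0.25 to 0.75 while precision drops from 1.00 to 0.60, which is the trade-off the plot visualizes.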

### Confidence Config

The `confidence_config` dictionary is set to `{"T": 0, "R": 0, "K": 0, "A": 0, "M": 0}`. Each value is an index into the matching dimension of the [T, R, K, A, M] evaluation arrays:

- `T = 0`: selects the first IoU (Intersection over Union) threshold.
- `R = 0`: selects the recall threshold, although it is currently not used.
- `K = 0`: selects the class; for class-agnostic mean Average Precision (mAP) there is only one class, at index 0.
- `A = 0`: considers all object sizes for evaluation (0=all, 1=small, 2=medium, 3=large, ..., depending on the configured area ranges).
- `M = 0`: selects the first maximum-detections setting (`maxDets`, 100 by default) for the `precision_recall_f1_support` calculations.

```python
import evaluate
import logging
from seametrics.payload.processor import PayloadProcessor

logging.basicConfig(level=logging.WARNING)

# Configure your dataset and model details
processor = PayloadProcessor(
    dataset_name="SAILING_DATASET_QA",
    gt_field="ground_truth_det",
    models=["yolov5n6_RGB_D2304-v1_9C"],
    sequence_list=["Trip_14_Seq_1"],
    data_type="rgb",
)

# Evaluate using SEA-AI/det-metrics
module = evaluate.load("SEA-AI/det-metrics", payload=processor.payload)
results = module.compute()

# Plot confidence curves
confidence_config = {"T": 0, "R": 0, "K": 0, "A": 0, "M": 0}
fig = module.generate_confidence_curves(results, confidence_config)
fig.show()
```
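Since the values in `confidence_config` are indices, a small helper can build the dictionary from an area-range name. This is a hypothetical convenience function, not part of det-metrics. It assumes the default area-range order listed for the A dimension in the Eval section (0=all, 1=valid_n, 2=valid_w, 3=tiny, 4=small, 5=medium, 6=large); if you configure different area ranges, adjust `AREA_RANGES` to match.

```python
# Assumed order of the A dimension, taken from the Eval section's defaults.
AREA_RANGES = ["all", "valid_n", "valid_w", "tiny", "small", "medium", "large"]


def make_confidence_config(area="all", iou_index=0, max_dets_index=0):
    """Hypothetical helper: indices into the [T, R, K, A, M] evaluation arrays."""
    if area not in AREA_RANGES:
        raise ValueError(f"unknown area range {area!r}; expected one of {AREA_RANGES}")
    return {
        "T": iou_index,                # IoU threshold index
        "R": 0,                        # recall threshold (currently unused)
        "K": 0,                        # class-agnostic: single class at index 0
        "A": AREA_RANGES.index(area),  # area range index
        "M": max_dets_index,           # maxDets index
    }


print(make_confidence_config("small"))
```

Under that assumption, the result can be passed in place of the literal dictionary, e.g. `module.generate_confidence_curves(results, make_confidence_config("small"))`.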

## Further References

- **seametrics Library**: Explore the [seametrics GitHub repository](https://github.com/SEA-AI/seametrics/tree/main) for more details on the underlying library.

assets/example_confidence_curves.png ADDED