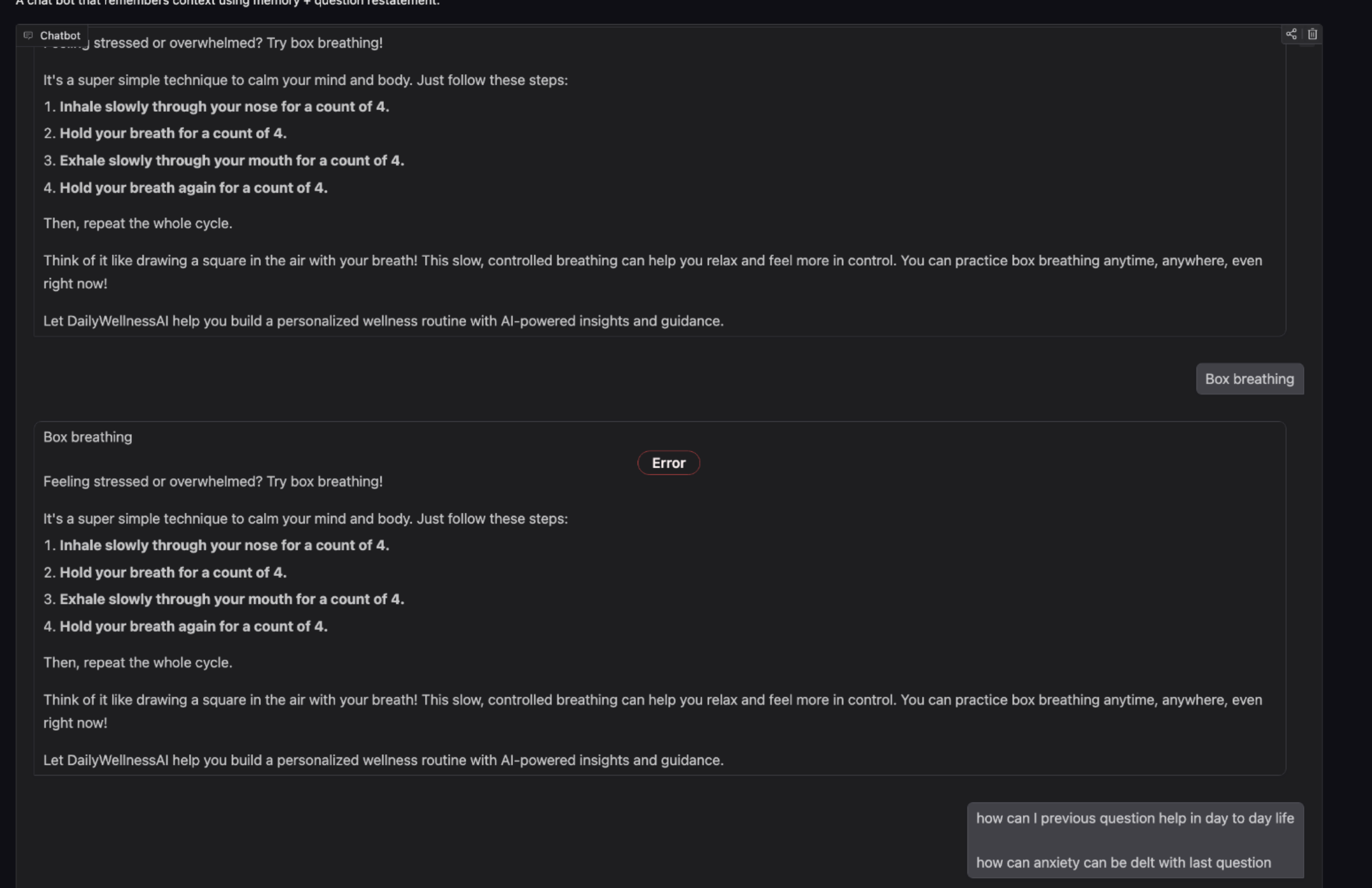

Update app.py

corrected this error

app.py

CHANGED

    @@ -3,34 +3,43 @@ from pipeline import run_with_chain
     from my_memory_logic import memory, restatement_chain
     
     def chat_history_fn(user_input, history):
    -
    +    """
    +    Gradio calls this function each time the user submits a message.
    +    'history' is a list of (user_msg, ai_msg) pairs from previous turns.
    +    We'll add them to memory, restate the user_input if needed,
    +    run the pipeline, store the new turn, then return a list of
    +    message dicts that Gradio's ChatInterface accepts.
    +    """
    +    # 1) Convert existing history into memory
         for user_msg, ai_msg in history:
             memory.chat_memory.add_user_message(user_msg)
             memory.chat_memory.add_ai_message(ai_msg)
     
    -    #
    +    # 2) Restate the new user question with chat history
         reformulated_q = restatement_chain.run({
             "chat_history": memory.chat_memory.messages,
             "input": user_input
         })
     
    -    #
    +    # 3) Pass the reformulated question to your pipeline
         answer = run_with_chain(reformulated_q)
     
    -    #
    +    # 4) Update memory with the new turn
         memory.chat_memory.add_user_message(user_input)
         memory.chat_memory.add_ai_message(answer)
     
    -    #
    -    # This is what Gradio's ChatInterface expects (instead of returning a tuple).
    +    # 5) Update the 'history' list with (user, ai)
         history.append((user_input, answer))
    +
    +    # 6) Gradio's ChatInterface expects a list of message dicts:
    +    # [{"role": "user"|"assistant", "content": "..."} ...]
    +    # We'll build that from our (user_msg, ai_msg) pairs in 'history'.
         message_dicts = []
    -    for
    -
    -        message_dicts.append({"role": "user", "content": user_msg})
    -        # AI turn
    +    for usr_msg, ai_msg in history:
    +        message_dicts.append({"role": "user", "content": usr_msg})
             message_dicts.append({"role": "assistant", "content": ai_msg})
     
    +    # 7) Return the message dictionary list
         return message_dicts
     
     demo = gr.ChatInterface(
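Step 6 of the new `chat_history_fn` (flattening `(user_msg, ai_msg)` pairs into role/content dicts) can be tried on its own, without Gradio or the memory objects. The following is a minimal sketch of that conversion only. The helper name `pairs_to_messages` and the sample data are hypothetical and do not appear in the commit, which performs the loop inline.

```python
from typing import Dict, List, Tuple


# Hypothetical helper name; the commit does this inline in chat_history_fn.
def pairs_to_messages(history: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Flatten (user_msg, ai_msg) pairs into role/content message dicts."""
    messages: List[Dict[str, str]] = []
    for usr_msg, ai_msg in history:
        # Each turn contributes two entries, user first, then assistant.
        messages.append({"role": "user", "content": usr_msg})
        messages.append({"role": "assistant", "content": ai_msg})
    return messages


if __name__ == "__main__":
    # Made-up sample turns, for illustration only.
    sample = [("Hi", "Hello!"), ("What is 2 + 2?", "4")]
    for message in pairs_to_messages(sample):
        print(message)
```

Keeping the conversion in a separate function makes it easy to unit-test. One thing to watch in the surrounding function: the full `history` arrives on every call and step 1 adds it to `memory` again, so earlier turns may accumulate more than once, depending on how `memory` is set up in `my_memory_logic` (not shown in this diff).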