file upload

- README.md +9 -6

- app.py +123 -0

- celle/__init__.py +4 -0

- celle/attention.py +253 -0

- celle/celle.py +1061 -0

- celle/reversible.py +36 -0

- celle/transformer.py +213 -0

- celle/utils.py +228 -0

- celle/vae.py +112 -0

- celle_main.py +619 -0

- celle_taming_main.py +695 -0

- dataloader.py +308 -0

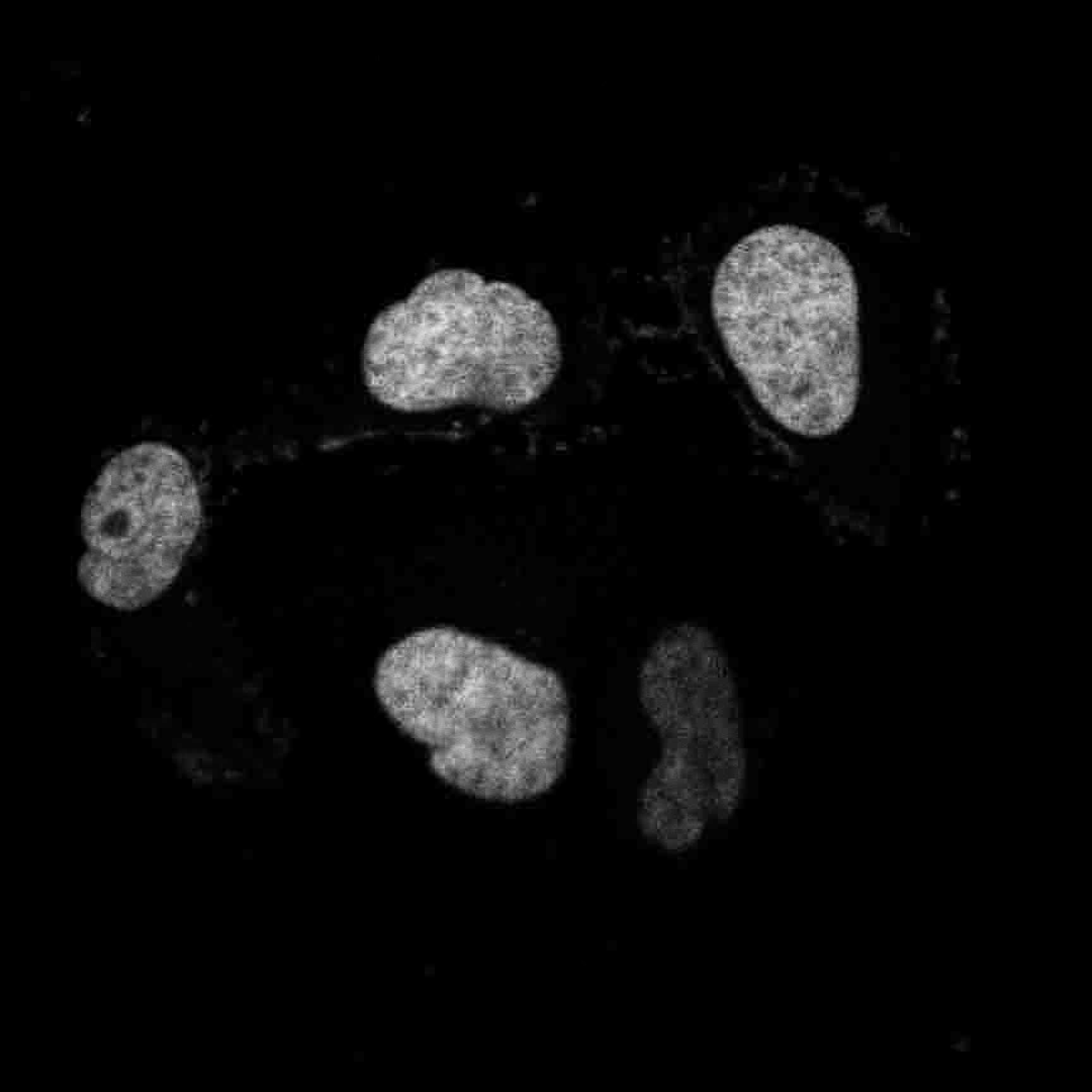

- images/Armadillo repeat-containing X-linked protein 5 nucleus.jpg +0 -0

- images/Armadillo repeat-containing X-linked protein 5 protein.jpg +0 -0

- prediction.py +82 -0

- taming/lr_scheduler.py +34 -0

- taming/models/cond_transformer.py +349 -0

- taming/models/dummy_cond_stage.py +22 -0

- taming/models/vqgan.py +649 -0

- taming/modules/autoencoder/lpips/vgg.pth +3 -0

- taming/modules/diffusionmodules/model.py +776 -0

- taming/modules/discriminator/model.py +67 -0

- taming/modules/losses/__init__.py +2 -0

- taming/modules/losses/lpips.py +123 -0

- taming/modules/losses/segmentation.py +22 -0

- taming/modules/losses/vqperceptual.py +182 -0

- taming/modules/misc/coord.py +31 -0

- taming/modules/transformer/mingpt.py +415 -0

- taming/modules/transformer/permuter.py +248 -0

- taming/modules/util.py +130 -0

- taming/modules/vqvae/quantize.py +445 -0

- taming/util.py +157 -0

README.md

CHANGED

@@ -1,12 +1,15 @@
 ---
-title: CELL-E 2-Sequence Prediction
-emoji:
-colorFrom:
-colorTo:
+title: CELL-E 2 - Sequence Prediction
+emoji: 🔬
+colorFrom: red
+colorTo: purple
 sdk: gradio
-
+python_version: 3.11
+sdk_version: 3.30.0
 app_file: app.py
-
+tags: [proteins, image-to-text]
+fullWidth: true
+pinned: true
 license: mit
 ---

app.py

ADDED

@@ -0,0 +1,123 @@
+import gradio as gr
+from huggingface_hub import hf_hub_download
+from prediction import run_sequence_prediction
+import torch
+import torchvision.transforms as T
+from celle.utils import process_image
+from PIL import Image
+from matplotlib import pyplot as plt
+
+
+def gradio_demo(model_name, sequence_input, image):
+    model = hf_hub_download(repo_id=f"HuangLab/{model_name}", filename="model.ckpt")
+    config = hf_hub_download(repo_id=f"HuangLab/{model_name}", filename="config.yaml")
+    hf_hub_download(repo_id=f"HuangLab/{model_name}", filename="nucleus_vqgan.yaml")
+    hf_hub_download(repo_id=f"HuangLab/{model_name}", filename="threshold_vqgan.yaml")
+    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+
+    if "Finetuned" in model_name:
+        dataset = "OpenCell"
+
+    else:
+        dataset = "HPA"
+
+
+    nucleus_image = image['image']
+    protein_image = image['mask']
+
+    nucleus_image = process_image(nucleus_image, dataset, "nucleus")
+    protein_image = process_image(protein_image, dataset, "nucleus")
+    protein_image = 1.0*(protein_image > .5)
+    print(f'{nucleus_image=}')
+    print(f'{protein_image.shape=}')
+
+    threshold, heatmap = run_sequence_prediction(
+        sequence_input=sequence_input,
+        nucleus_image=nucleus_image,
+        protein_image=protein_image,
+        model_ckpt_path=model,
+        model_config_path=config,
+        device=device,
+    )
+
+    protein_image = protein_image[0, 0]
+    protein_image = protein_image * 1.0
+
+
+    # Plot the heatmap
+    plt.imshow(heatmap.cpu(), cmap="rainbow", interpolation="bicubic")
+    plt.axis("off")
+
+    # Save the plot to a temporary file
+    plt.savefig("temp.png", bbox_inches="tight", dpi=256)
+
+    # Open the temporary file as a PIL image
+    heatmap = Image.open("temp.png")
+
+    return (
+        T.ToPILImage()(nucleus_image[0, 0]),
+        T.ToPILImage()(protein_image),
+        T.ToPILImage()(threshold),
+        heatmap,
+    )
+
+
+with gr.Blocks() as demo:
+    gr.Markdown("Select the prediction model.")
+    gr.Markdown(
+        "CELL-E_2_HPA_2560 is a good general-purpose model for various cell types using ICC-IF."
+    )
+    gr.Markdown(
+        "CELL-E_2_OpenCell_2560 is trained on OpenCell and is better suited for live-cell predictions on HEK cells."
+    )
+    with gr.Row():
+        model_name = gr.Dropdown(
+            ["CELL-E_2_HPA_2560", "CELL-E_2_OpenCell_2560"],
+            value="CELL-E_2_HPA_2560",
+            label="Model Name",
+        )
+    with gr.Row():
+        gr.Markdown(
+            "Input the desired amino acid sequence. GFP is shown below by default."
+        )
+
+    with gr.Row():
+        sequence_input = gr.Textbox(
+            value="MSKGEELFTGVVPILVELDGDVNGHKFSVSGEGEGDATYGKLTLKFICTTGKLPVPWPTLVTTFSYGVQCFSRYPDHMKQHDFFKSAMPEGYVQERTIFFKDDGNYKTRAEVKFEGDTLVNRIELKGIDFKEDGNILGHKLEYNYNSHNVYIMADKQKNGIKVNFKIRHNIEDGSVQLADHYQQNTPIGDGPVLLPDNHYLSTQSALSKDPNEKRDHMVLLEFVTAAGITHGMDELYK",
+            label="Sequence",
+        )
+    with gr.Row():
+        gr.Markdown(
+            "Uploading a nucleus image is necessary. A random crop of 256 x 256 will be applied if larger. We provide default images in [images](https://huggingface.co/spaces/HuangLab/CELL-E_2/tree/main/images)."
+        )
+        gr.Markdown("The protein image is optional and is just used for display.")
+
+    with gr.Row().style(equal_height=True):
+        nucleus_image = gr.Image(
+            source="upload",
+            tool="sketch",
+            label="Nucleus Image",
+            line_color="white",
+            interactive=True,
+            image_mode="L",
+            type="pil"
+        )
+
+    with gr.Row():
+        gr.Markdown("Image predictions are shown below.")
+
+    with gr.Row().style(equal_height=True):
+        predicted_sequence = gr.Textbox(
+            label="Predicted Sequence",
+        )
+
+    with gr.Row():
+        button = gr.Button("Run Model")
+
+    inputs = [model_name, sequence_input, nucleus_image]
+
+    outputs = [predicted_sequence]
+
+    button.click(gradio_demo, inputs, outputs)
+
+demo.launch(share=True)
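In `gradio_demo`, the preprocessing dataset is chosen by checking whether `"Finetuned"` appears in `model_name`, but neither dropdown option (`CELL-E_2_HPA_2560`, `CELL-E_2_OpenCell_2560`) contains that substring, so as written both checkpoints take the `"HPA"` branch of `process_image`. The sketch below isolates that branch as a plain function and contrasts it with a hypothetical variant that keys on `"OpenCell"` in the model id. The helper names are illustrative, and whether the OpenCell checkpoint actually expects `"OpenCell"` preprocessing is an assumption this diff does not confirm.

```python
# Hypothetical helpers that mirror the dataset branch in gradio_demo (app.py).
MODEL_CHOICES = ["CELL-E_2_HPA_2560", "CELL-E_2_OpenCell_2560"]


def select_dataset_original(model_name: str) -> str:
    """Same rule as app.py: only names containing "Finetuned" map to OpenCell."""
    return "OpenCell" if "Finetuned" in model_name else "HPA"


def select_dataset_by_name(model_name: str) -> str:
    """Assumed alternative: route on the dataset name embedded in the model id."""
    return "OpenCell" if "OpenCell" in model_name else "HPA"


if __name__ == "__main__":
    for name in MODEL_CHOICES:
        print(name, select_dataset_original(name), select_dataset_by_name(name))
```

Running this for both dropdown values shows the original rule returning `"HPA"` for each, which is worth checking against the intended behaviour of the OpenCell model.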

celle/__init__.py

ADDED

@@ -0,0 +1,4 @@
+from celle.celle import CELLE
+from celle.vae import VQGanVAE
+
+__version__ = "2.0.0"

celle/attention.py

ADDED

@@ -0,0 +1,253 @@
+import torch
+from torch import nn, einsum
+import torch.nn.functional as F
+from einops import rearrange, repeat
+
+from rotary_embedding_torch import apply_rotary_emb
+from celle.utils import exists, default, max_neg_value
+
+
+# helpers
+def stable_softmax(t, dim=-1, alpha=32**2):
+    t = t / alpha
+    t = t - torch.amax(t, dim=dim, keepdim=True).detach()
+    return (t * alpha).softmax(dim=dim)
+
+
+def apply_pos_emb(pos_emb, qkv):
+    n = qkv[0].shape[-2]
+    pos_emb = pos_emb[..., :n, :]
+    return tuple(map(lambda t: apply_rotary_emb(pos_emb, t), qkv))
+
+
+# classes
+class Attention(nn.Module):
+    def __init__(
+        self,
+        dim,
+        seq_len,
+        causal=False,
+        heads=8,
+        dim_head=64,
+        dropout=0.0,
+        stable=False,
+        static_mask=None,
+    ):
+        super().__init__()
+        inner_dim = dim_head * heads
+        self.heads = heads
+        self.seq_len = seq_len
+        self.scale = dim_head**-0.5
+        self.stable = stable
+        self.causal = causal
+        self.register_buffer("static_mask", static_mask, persistent=False)
+        self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)
+        self.to_out = nn.Sequential(nn.Linear(inner_dim, dim), nn.Dropout(dropout))
+        self.save_attn = nn.Identity()
+
+    def forward(self, x, context_mask=None, rotary_pos_emb=None):
+        # x: [batch_size, seq_len, dim]
+        b, n, _, h = *x.shape, self.heads
+        device = x.device
+
+        softmax = torch.softmax if not self.stable else stable_softmax
+
+        # qkv: 3 tensors of shape [batch_size, seq_len, inner_dim]
+        qkv = self.to_qkv(x).chunk(3, dim=-1)
+
+        # q,k,v: [batch_size, heads, seq_len, dim_head]
+        q, k, v = map(lambda t: rearrange(t, "b n (h d) -> b h n d", h=h), qkv)
+
+        if exists(rotary_pos_emb):
+            q, k, v = apply_pos_emb(rotary_pos_emb[..., :, :], (q, k, v))
+
+        q *= self.scale
+
+        # dots: [batch_size, heads, seq_len_i ,seq_len_j]
+        dots = torch.einsum("b h i d, b h j d -> b h i j", q, k)
+        mask_value = max_neg_value(dots)
+
+        if exists(context_mask):
+            # context_mask: [batch_size ,1 ,1 ,seq_len_j]
+            context_mask = rearrange(context_mask, "b j -> b 1 1 j")
+            context_mask = F.pad(context_mask, (1, 0), value=True)
+
+            mask_value = -torch.finfo(dots.dtype).max
+            dots = dots.masked_fill(~context_mask, mask_value)
+
+        if self.causal:
+            i, j = dots.shape[-2:]
+            context_mask = torch.ones(i, j, device=device).triu_(j - i + 1).bool()
+            dots.masked_fill_(context_mask, mask_value)
+
+        if exists(self.static_mask):
+            dots.masked_fill_(~self.static_mask[:n, :n], mask_value)
+
+        # attn: [batch_size ,heads ,seq_len_i ,seq_len_j]
+        attn = softmax(dots, dim=-1)
+        attn = self.save_attn(attn)
+
+        # out: [batch_size ,heads ,seq_len_i ,dim_head]
+        out = torch.einsum("b h n j, b h j d -> b h n d", attn, v)
+
+        # out: [batch_size ,seq_len_i ,(heads*dim_head)]
+        out = rearrange(out, "b h n d -> b n (h d)")
+
+        # out: [batch_size ,seq_len_i ,dim]
+        out = self.to_out(out)
+
+        return out
+
+
+# sparse attention with convolutional pattern, as mentioned in the blog post. customizable kernel size and dilation
+
+
+class SparseConvCausalAttention(nn.Module):
+    def __init__(
+        self,
+        dim,
+        seq_len,
+        image_size=32,
+        kernel_size=5,
+        dilation=1,
+        heads=8,
+        dim_head=64,
+        dropout=0.0,
+        stable=False,
+        **kwargs,
+    ):
+        super().__init__()
+        assert kernel_size % 2 == 1, "kernel size must be odd"
+
+        inner_dim = dim_head * heads
+        self.seq_len = seq_len
+        self.heads = heads
+        self.scale = dim_head**-0.5
+        self.image_size = image_size
+        self.kernel_size = kernel_size
+        self.dilation = dilation
+
+        self.stable = stable
+
+        self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)
+
+        self.to_out = nn.Sequential(nn.Linear(inner_dim, dim), nn.Dropout(dropout))
+
+    def forward(self, x, mask=None, rotary_pos_emb=None):
+        b, n, _, h, img_size, kernel_size, dilation, seq_len, device = (
+            *x.shape,
+            self.heads,
+            self.image_size,
+            self.kernel_size,
+            self.dilation,
+            self.seq_len,
+            x.device,
+        )
+        softmax = torch.softmax if not self.stable else stable_softmax
+
+        img_seq_len = img_size**2
+        text_len = seq_len + 1 - img_seq_len
+
+        # padding
+
+        padding = seq_len - n + 1
+        mask = default(mask, lambda: torch.ones(b, text_len, device=device).bool())
+
+        x = F.pad(x, (0, 0, 0, padding), value=0)
+        mask = mask[:, :text_len]
+
+        # derive query / keys / values
+
+        qkv = self.to_qkv(x).chunk(3, dim=-1)
+        q, k, v = map(lambda t: rearrange(t, "b n (h d) -> (b h) n d", h=h), qkv)
+
+        if exists(rotary_pos_emb):
+            q, k, v = apply_pos_emb(rotary_pos_emb, (q, k, v))
+
+        q *= self.scale
+
+        ((q_text, q_img), (k_text, k_img), (v_text, v_img)) = map(
+            lambda t: (t[:, :-img_seq_len], t[:, -img_seq_len:]), (q, k, v)
+        )
+
+        # text attention
+
+        dots_text = einsum("b i d, b j d -> b i j", q_text, k_text)
+        mask_value = max_neg_value(dots_text)
+
+        i, j = dots_text.shape[-2:]
+        text_causal_mask = torch.ones(i, j, device=device).triu_(j - i + 1).bool()
+        dots_text.masked_fill_(text_causal_mask, mask_value)
+
+        attn_text = softmax(dots_text, dim=-1)
+        out_text = einsum("b i j, b j d -> b i d", attn_text, v_text)
+
+        # image attention
+
+        effective_kernel_size = (kernel_size - 1) * dilation + 1
+        padding = effective_kernel_size // 2
+
+        k_img, v_img = map(
+            lambda t: rearrange(t, "b (h w) c -> b c h w", h=img_size), (k_img, v_img)
+        )
+        k_img, v_img = map(
+            lambda t: F.unfold(t, kernel_size, padding=padding, dilation=dilation),
+            (k_img, v_img),
+        )
+        k_img, v_img = map(
+            lambda t: rearrange(t, "b (d j) i -> b i j d", j=kernel_size**2),
+            (k_img, v_img),
+        )
+
+        # let image attend to all of text
+
+        dots_image = einsum("b i d, b i j d -> b i j", q_img, k_img)
+        dots_image_to_text = einsum("b i d, b j d -> b i j", q_img, k_text)
+
+        # calculate causal attention for local convolution
+
+        i, j = dots_image.shape[-2:]
+        img_seq = torch.arange(img_seq_len, device=device)
+        k_img_indices = rearrange(img_seq.float(), "(h w) -> () () h w", h=img_size)
+        k_img_indices = F.pad(
+            k_img_indices, (padding,) * 4, value=img_seq_len
+        )  # padding set to be max, so it is never attended to
+        k_img_indices = F.unfold(k_img_indices, kernel_size, dilation=dilation)
+        k_img_indices = rearrange(k_img_indices, "b j i -> b i j")
+
+        # mask image attention
+
+        q_img_indices = rearrange(img_seq, "i -> () i ()")
+        causal_mask = q_img_indices < k_img_indices
+
+        # concat text mask with image causal mask
+
+        causal_mask = repeat(causal_mask, "() i j -> b i j", b=b * h)
+        mask = repeat(mask, "b j -> (b h) i j", i=i, h=h)
+        mask = torch.cat((~mask, causal_mask), dim=-1)
+
+        # image can attend to all of text
+
+        dots = torch.cat((dots_image_to_text, dots_image), dim=-1)
+        dots.masked_fill_(mask, mask_value)
+
+        attn = softmax(dots, dim=-1)
+
+        # aggregate
+
+        attn_image_to_text, attn_image = attn[..., :text_len], attn[..., text_len:]
+
+        out_image_to_image = einsum("b i j, b i j d -> b i d", attn_image, v_img)
+        out_image_to_text = einsum("b i j, b j d -> b i d", attn_image_to_text, v_text)
+
+        out_image = out_image_to_image + out_image_to_text
+
+        # combine attended values for both text and image
+
+        out = torch.cat((out_text, out_image), dim=1)
+
+        out = rearrange(out, "(b h) n d -> b n (h d)", h=h)
+
+        out = self.to_out(out)
+
+        return out[:, :n]
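`Attention.forward` builds its causal mask with `torch.ones(i, j).triu_(j - i + 1).bool()`, which hides key column `c` from query row `r` whenever `c - r >= j - i + 1`; for a square score matrix that is the usual strictly upper-triangular mask. When `stable=True`, `stable_softmax` divides the logits by `alpha`, subtracts the row maximum, and multiplies back by `alpha`, which is mathematically the same as subtracting the row maximum before exponentiating, so large logits cannot overflow. The pure-Python sketch below reproduces both rules on plain lists so they can be checked without PyTorch; `causal_mask` and `stable_softmax_list` are illustrative names, not functions from the repository.

```python
import math


def causal_mask(i: int, j: int) -> list[list[bool]]:
    """True where torch.ones(i, j).triu_(j - i + 1).bool() is True, i.e. masked."""
    offset = j - i + 1
    return [[(c - r) >= offset for c in range(j)] for r in range(i)]


def stable_softmax_list(row: list[float], alpha: float = 32**2) -> list[float]:
    """One-row mirror of stable_softmax: scale down, subtract the max, scale back up."""
    scaled = [x / alpha for x in row]
    peak = max(scaled)
    shifted = [(x - peak) * alpha for x in scaled]  # every entry is <= 0
    exps = [math.exp(x) for x in shifted]  # cannot overflow; may underflow to 0.0
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    for row in causal_mask(3, 3):
        print(row)
    print(stable_softmax_list([1000.0, 0.0]))
```

A naive `math.exp(1000.0)` raises `OverflowError`, while the shifted version returns `[1.0, 0.0]` for the same logits.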

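In `SparseConvCausalAttention`, each image query attends to all text tokens plus only the `kernel_size x kernel_size` (optionally dilated) window around its own grid position. Out-of-bounds slots are padded with index `img_seq_len`, and any neighbor whose raster index exceeds the query's index is masked by `q_img_indices < k_img_indices`. The pure-Python sketch below recomputes which image positions a query can still see after that masking, which may help when picking `kernel_size` and `dilation`. `visible_neighbors` is a hypothetical helper; it models only the image-to-image part of the mask, not the text mask or `F.unfold` itself.

```python
def visible_neighbors(pos: int, img_size: int, kernel_size: int = 5, dilation: int = 1) -> list[int]:
    """Raster indices that image query `pos` may attend to under the conv-causal mask."""
    assert kernel_size % 2 == 1, "kernel size must be odd"
    img_seq_len = img_size**2
    effective_kernel_size = (kernel_size - 1) * dilation + 1
    padding = effective_kernel_size // 2
    row, col = divmod(pos, img_size)  # row-major layout, matching "(h w)"
    visible = []
    for ky in range(kernel_size):
        for kx in range(kernel_size):
            r = row - padding + ky * dilation
            c = col - padding + kx * dilation
            inside = 0 <= r < img_size and 0 <= c < img_size
            # Padded slots carry index img_seq_len, which is always > pos.
            k_index = r * img_size + c if inside else img_seq_len
            if not (pos < k_index):  # same comparison the module uses to mask
                visible.append(k_index)
    return visible


if __name__ == "__main__":
    for p in range(9):
        print(p, visible_neighbors(p, img_size=3, kernel_size=3))
```

On a 3 x 3 grid with a 3 x 3 kernel, the first position sees only itself and the centre sees the five positions at or before it in raster order, which is the "local window, causal in raster order" pattern the comment above the class describes.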
celle/celle.py

ADDED

|

@@ -0,0 +1,1061 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Import necessary packages and modules

|

| 2 |

+

from math import floor, ceil

|

| 3 |

+

import torch

|

| 4 |

+

from torch import nn

|

| 5 |

+

import torch.nn.functional as F

|

| 6 |

+

from axial_positional_embedding import AxialPositionalEmbedding

|

| 7 |

+

from einops import rearrange

|

| 8 |

+

from celle.utils import (

|

| 9 |

+

exists,

|

| 10 |

+

always,

|

| 11 |

+

eval_decorator,

|

| 12 |

+

gumbel_sample,

|

| 13 |

+

top_k,

|

| 14 |

+

gamma_func,

|

| 15 |

+

DivideMax,

|

| 16 |

+

)

|

| 17 |

+

from tqdm import tqdm

|

| 18 |

+

|

| 19 |

+

# Import additional modules from within the codebase

|

| 20 |

+

from celle.transformer import Transformer

|

| 21 |

+

|

| 22 |

+

|

def generate_mask(gamma_func, batch_size, length, device):
    # Sample one masking ratio per call; every batch element gets the same number of `True` values
    num_true_values = floor(gamma_func(torch.rand(1)) * length)

    # Generate a random sample of indices to set to `True` in the mask
    # The number of indices in the sample is determined by `num_true_values`
    indices = (
        torch.rand((batch_size, length), device=device)
        .topk(num_true_values, dim=1)
        .indices
    )

    # Create a binary mask tensor with `True` values at the sampled indices
    mask = torch.zeros((batch_size, length), dtype=torch.bool, device=device)
    mask.scatter_(dim=1, index=indices, value=True)

    return mask

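A minimal, self-contained sketch of the mask generation above. `gamma_func` lives in `celle.utils` and is not shown in this file, so `cosine_gamma` below is a hypothetical MaskGIT-style cosine schedule used only for illustration. The sketch converts the sampled ratio with `float()` before flooring. Because a single ratio is drawn per call, every row of the batch receives the same number of masked positions.

```python
import math

import torch


def cosine_gamma(r):
    # Hypothetical schedule (assumption): maps r in [0, 1) to a mask ratio in (0, 1]
    return torch.cos(r * math.pi / 2)


def generate_mask(gamma_func, batch_size, length, device):
    # One masking ratio for the whole batch
    num_true_values = math.floor(float(gamma_func(torch.rand(1))) * length)
    # Random score per position; the top-k positions become masked
    indices = (
        torch.rand((batch_size, length), device=device)
        .topk(num_true_values, dim=1)
        .indices
    )
    mask = torch.zeros((batch_size, length), dtype=torch.bool, device=device)
    mask.scatter_(dim=1, index=indices, value=True)
    return mask


torch.manual_seed(0)
mask = generate_mask(cosine_gamma, batch_size=4, length=10, device="cpu")
print(mask.sum(dim=1))  # identical count in every row
```

Drawing per-row ratios instead would need a different `k` per row, which `topk` cannot do in one call; sharing one count keeps the operation fully batched.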

def match_batch_size(text, condition, image, batch_size):
    """
    This function ensures all inputs to the sample function have the same batch size.
    """
    if text.shape[0] != batch_size:
        text = text.repeat(batch_size, 1)

    if condition.shape[0] != batch_size:
        condition = condition.repeat(batch_size, 1)

    if image.shape[0] != batch_size:
        image = image.repeat(batch_size, 1)

    return text, condition, image


def calc_unmask_probs(timestep, timesteps, gamma_func):
    if timestep == 1 or timesteps == 1:
        unmask_prob = 1
    else:
        unmask_prob = 1 - gamma_func(timestep)
    return unmask_prob

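To see how the number of revealed tokens grows during decoding, the sketch below pairs `calc_unmask_probs` with the `max(1, ceil(unmask_prob * num_masked_tokens))` rule used in `unmask_tokens`. It assumes, hypothetically, that `timestep` is a progress ratio in (0, 1] and that the schedule is a cosine; the real `gamma_func` comes from `celle.utils`, and the caller's timestep convention is defined elsewhere in the codebase.

```python
import math


def cosine_gamma(r):
    # Hypothetical schedule (assumption): fraction of tokens still masked at progress r
    return math.cos(r * math.pi / 2)


def calc_unmask_probs(timestep, timesteps, gamma_func):
    # Same logic as above: reveal everything on the final (or only) step
    if timestep == 1 or timesteps == 1:
        return 1
    return 1 - gamma_func(timestep)


num_masked_tokens = 100
counts = []
for t in [0.25, 0.5, 0.75, 1.0]:
    p = calc_unmask_probs(t, timesteps=4, gamma_func=cosine_gamma)
    counts.append(max(1, math.ceil(p * num_masked_tokens)))

print(counts)  # [8, 30, 62, 100]
```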

def calculate_logits(
    input_tokens, input_mask, logits_function, filter_thres, temperature
):
    logits, _, _ = logits_function(input_tokens, input_mask, return_encoding=False)
    filtered_logits = top_k(logits, thres=filter_thres)
    sample = gumbel_sample(filtered_logits, temperature=temperature, dim=-1)

    return logits, sample

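`top_k` and `gumbel_sample` come from `celle.utils`, which is not part of this section. The sketch below shows one common way to implement the two steps, written as hypothetical stand-ins: keep only the highest-scoring `(1 - thres)` fraction of the vocabulary, then add Gumbel noise so that the argmax of the perturbed scores is a sample from the filtered softmax. The stand-in returns perturbed scores rather than indices, because `unmask_tokens` later takes `torch.max(sample, dim=-1)` over a vocabulary dimension; whether the real `gumbel_sample` behaves the same way is an assumption.

```python
import torch


def top_k_filter(logits, thres=0.5):
    # Hypothetical stand-in for celle.utils.top_k: keep the best (1 - thres) fraction
    k = max(int((1 - thres) * logits.shape[-1]), 1)
    val, ind = torch.topk(logits, k, dim=-1)
    filtered = torch.full_like(logits, float("-inf"))
    filtered.scatter_(-1, ind, val)
    return filtered


def gumbel_perturb(logits, temperature=1.0):
    # Hypothetical stand-in for celle.utils.gumbel_sample: Gumbel(0, 1) noise on scaled logits
    u = torch.rand_like(logits).clamp(min=1e-10)
    gumbel = -torch.log(-torch.log(u))
    return logits / max(temperature, 1e-10) + gumbel


torch.manual_seed(0)
logits = torch.randn(2, 5, 33)  # (batch, sequence length, vocabulary)
filtered = top_k_filter(logits, thres=0.9)  # 3 of 33 entries stay finite
perturbed = gumbel_perturb(filtered, temperature=1.0)
tokens = perturbed.argmax(dim=-1)  # sampled token id per position
```

Filtered entries stay at `-inf` after the noise is added, so the sampled token is always one of the top-k candidates.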

def unmask_tokens(
    input_tokens,
    input_mask,
    num_masked_tokens,
    logits,
    sample,
    timestep,
    timesteps,
    gamma,
    filter_func=None,
    pad_token=None,
    mask_token=None,
    force_aas=True,
):
    # Only positions where `input_mask` is True are eligible to be filled in
    sample = sample.masked_fill(~input_mask.unsqueeze(-1), -torch.inf)
    if filter_func:
        sample = filter_func(
            input_tokens, sample, force_aas, pad_token=pad_token, mask_token=mask_token
        )
    # Best score (confidence) and the corresponding token at every position
    selected_token_probs, selected_tokens = torch.max(sample, dim=-1)

    # Number of tokens to reveal at this step (always at least one)
    unmask_prob = calc_unmask_probs(timestep, timesteps, gamma)
    num_tokens_to_unmask = max(1, ceil(unmask_prob * num_masked_tokens))

    # Reveal the most confident positions
    _, top_k_indices = torch.topk(selected_token_probs, num_tokens_to_unmask, dim=-1)

    sample_mask = torch.zeros(
        input_tokens.shape, dtype=torch.bool, device=input_tokens.device
    )
    sample_mask.scatter_(dim=1, index=top_k_indices, value=True)

    # Write the selected tokens and keep logits only at the newly revealed positions
    unmasked_tokens = torch.where(sample_mask, selected_tokens, input_tokens)
    full_logits = torch.where(
        sample_mask.unsqueeze(-1), logits, torch.zeros_like(logits)
    )
    return unmasked_tokens, full_logits

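`unmask_tokens` performs confidence-based parallel decoding: among the positions still masked, it fills in those whose best candidate scores highest and leaves the rest for later steps. The stripped-down sketch below (no `filter_func`, a fixed reveal count, a hypothetical mask id of 32, and a 32-entry toy vocabulary so the mask id can never be predicted) shows that only masked positions change.

```python
import torch


def unmask_most_confident(input_tokens, input_mask, scores, num_to_unmask):
    # Positions that are not masked can never be chosen
    scores = scores.masked_fill(~input_mask.unsqueeze(-1), float("-inf"))
    # Best candidate token and its score at every position
    confidence, candidates = scores.max(dim=-1)
    # Reveal the num_to_unmask most confident positions per sequence
    _, idx = confidence.topk(num_to_unmask, dim=-1)
    reveal = torch.zeros_like(input_mask)
    reveal.scatter_(dim=1, index=idx, value=True)
    return torch.where(reveal, candidates, input_tokens), reveal


MASK_ID = 32  # hypothetical mask token id
torch.manual_seed(0)
input_tokens = torch.tensor([[5, MASK_ID, MASK_ID, 7, MASK_ID]])
input_mask = input_tokens == MASK_ID
scores = torch.randn(1, 5, 32)  # toy vocabulary without the mask id
new_tokens, reveal = unmask_most_confident(input_tokens, input_mask, scores, 2)
print(new_tokens)  # two of the three masked slots are now filled
```

In the function above, the reveal count comes from `calc_unmask_probs` instead of being fixed, and because it never exceeds `num_masked_tokens`, `topk` does not reach into already-unmasked positions.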

def suppress_invalid_text_tokens(
    text,
    logits,
    start_token=None,
    end_token=None,
    pad_token=None,
    mask_token=None,
    force_aas=False,
):
    # Find the indices of start_token and end_token in tensor text along axis=1
    idx_start = (text == start_token).nonzero(as_tuple=True)[1]
    idx_end = (text == end_token).nonzero(as_tuple=True)[1]

    # At every position other than the start index, set the start_token logit (dimension=2) to -torch.inf
    if idx_start.nelement() != 0:
        try:
            mask = idx_start.unsqueeze(1) != torch.arange(
                logits.size(1), device=text.device
            )
            indices = torch.where(mask)
            logits[indices[0], indices[1], start_token] = -torch.inf
        except Exception:
            pass

    # else:
    #     idx_start = torch.zeros(text.size(0), dtype=torch.long)

    # Similarly, at every position other than the end index, set the end_token logit to -torch.inf
    if idx_end.nelement() != 0:
        try:
            mask = idx_end.unsqueeze(1) != torch.arange(
                logits.size(1), device=text.device
            )
            indices = torch.where(mask)
            logits[indices[0], indices[1], end_token] = -torch.inf
        except Exception:
            pass

    # else:
    #     idx_end = torch.full((text.size(0),), text.size(1) - 1, dtype=torch.long)

    if pad_token is not None:
        if idx_start.nelement() != 0 and idx_end.nelement() != 0:
            try:
                # At every position from start_token to end_token (inclusive), set the
                # pad_token logit to -torch.inf; outside that range, set it to torch.inf.
                mask = (
                    torch.arange(logits.size(1), device=text.device)
                    >= idx_start.unsqueeze(1)
                ) & (
                    torch.arange(logits.size(1), device=text.device)
                    <= idx_end.unsqueeze(1)
                )

                indices = torch.where(mask)
                logits[indices[0], indices[1], pad_token] = -torch.inf

                indices = torch.where(~mask)
                logits[indices[0], indices[1], pad_token] = torch.inf

            except Exception:
                pass

        elif idx_start.nelement() != 0:
            try:
                # Only a start token was found: force padding before it
                mask = torch.arange(
                    logits.size(1), device=text.device
                ) < idx_start.unsqueeze(1)
                indices = torch.where(mask)
                logits[indices[0], indices[1], pad_token] = torch.inf
            except Exception:
                pass

        elif idx_end.nelement() != 0:
            try:
                # Only an end token was found: force padding after it
                mask = torch.arange(
                    logits.size(1), device=text.device
                ) > idx_end.unsqueeze(1)
                indices = torch.where(mask)
                logits[indices[0], indices[1], pad_token] = torch.inf
            except Exception:
                pass

    if force_aas:
        if pad_token is not None:
            logits[:, :, pad_token] = -torch.inf
        # Assuming the ESM-1b alphabet: index 3 is <unk>, and indices 29 and up are
        # ".", "-", "<null_1>" and "<mask>", none of which is an amino acid
        logits[:, :, 3] = -torch.inf
        logits[:, :, 29:] = -torch.inf

    if mask_token is not None:
        logits[:, :, mask_token] = -torch.inf

    return logits

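The pad-token rule in the branch where both a start and an end token are present can be checked in isolation. The sketch below is a hypothetical helper, not part of the codebase, that reproduces that rule: inside the inclusive `[start, end]` span the pad logit is `-inf`, outside it `+inf`, so greedy decoding must emit padding after the end token. The token ids (0 for `<cls>`, 1 for `<pad>`, 2 for `<eos>`) follow the ESM-1b alphabet and are an assumption about how the function is called.

```python
import torch


def constrain_pad(logits, idx_start, idx_end, pad_token):
    # Hypothetical helper mirroring the start/end branch of suppress_invalid_text_tokens
    positions = torch.arange(logits.size(1), device=logits.device)
    inside = (positions >= idx_start.unsqueeze(1)) & (positions <= idx_end.unsqueeze(1))
    pad_logits = torch.full(inside.shape, float("inf"), device=logits.device)
    pad_logits[inside] = float("-inf")  # no padding inside the sequence
    logits = logits.clone()
    logits[:, :, pad_token] = pad_logits
    return logits


CLS, PAD, EOS = 0, 1, 2  # assumed ESM-1b special-token ids
text = torch.tensor([[CLS, 5, 6, 7, EOS, PAD, PAD, PAD]])
idx_start = (text == CLS).nonzero(as_tuple=True)[1]
idx_end = (text == EOS).nonzero(as_tuple=True)[1]
out = constrain_pad(torch.zeros(1, 8, 33), idx_start, idx_end, PAD)
print(out.argmax(dim=-1))  # padding is forced after <eos>
```

Like the original, this uses only the column indices returned by `nonzero`, so it assumes exactly one start and one end token per sequence.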

def detokenize_text(text_embedding, sequence):
    if text_embedding == "esm1b" or text_embedding == "esm2":
        from esm import Alphabet

        alphabet = (
            Alphabet.from_architecture("ESM-1b").get_batch_converter().alphabet.all_toks
        )
    else:
        # `assert NameError(...)` always passed; raise so unsupported embeddings fail loudly
        raise ValueError("Detokenization only available for ESM models")

    output_seqs = []

    for batch in sequence:
        converted_seq = [alphabet[idx] for idx in batch]
        converted_seq = "".join(converted_seq)
        output_seqs.append(converted_seq)

    return output_seqs

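`detokenize_text` maps each token id back to its string through the ESM alphabet's `all_toks` list. The sketch below does the same without importing `esm`, using a hard-coded token list that is assumed to match the fair-esm `ESM-1b` ordering (in practice, read it from `Alphabet.from_architecture("ESM-1b").all_toks`). The `skip_special` flag is a hypothetical addition for dropping `<cls>`, `<eos>` and similar tokens.

```python
import torch

# Assumed token order of the fair-esm "ESM-1b" alphabet (also used by ESM-2)
ESM1B_TOKS = (
    ["<cls>", "<pad>", "<eos>", "<unk>"]
    + list("LAGVSERTIDPKQNFYMHWCXBUZO.-")
    + ["<null_1>", "<mask>"]
)


def detokenize(sequence, alphabet=ESM1B_TOKS, skip_special=False):
    out = []
    for seq_tokens in sequence:
        # int() turns 0-d tensors into plain Python indices
        toks = [alphabet[int(i)] for i in seq_tokens]
        if skip_special:
            toks = [t for t in toks if not t.startswith("<")]
        out.append("".join(toks))
    return out


tokens = torch.tensor([[0, 20, 4, 5, 2]])
print(detokenize(tokens))  # ['<cls>MLA<eos>']
print(detokenize(tokens, skip_special=True))  # ['MLA']
```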

class ImageEmbedding(nn.Module):