abc committed on

Commit 3249d87 · 1 Parent(s): 74be2a5

Upload 55 files

- .gitattributes +1 -0

- .github/workflows/typos.yml +21 -0

- .gitignore +7 -0

- LICENSE.md +201 -0

- README-ja.md +147 -0

- README.md +230 -0

- append_module.py +378 -56

- bitsandbytes_windows/cextension.py +54 -0

- bitsandbytes_windows/libbitsandbytes_cpu.dll +0 -0

- bitsandbytes_windows/libbitsandbytes_cuda116.dll +3 -0

- bitsandbytes_windows/main.py +166 -0

- config_README-ja.md +279 -0

- fine_tune.py +50 -45

- fine_tune_README_ja.md +140 -0

- finetune/blip/blip.py +240 -0

- finetune/blip/med.py +955 -0

- finetune/blip/med_config.json +22 -0

- finetune/blip/vit.py +305 -0

- finetune/clean_captions_and_tags.py +184 -0

- finetune/hypernetwork_nai.py +96 -0

- finetune/make_captions.py +162 -0

- finetune/make_captions_by_git.py +145 -0

- finetune/merge_captions_to_metadata.py +67 -0

- finetune/merge_dd_tags_to_metadata.py +62 -0

- finetune/prepare_buckets_latents.py +261 -0

- finetune/tag_images_by_wd14_tagger.py +200 -0

- gen_img_diffusers.py +234 -55

- library/model_util.py +5 -1

- library/train_util.py +853 -229

- networks/check_lora_weights.py +1 -1

- networks/extract_lora_from_models.py +44 -25

- networks/lora.py +191 -30

- networks/merge_lora.py +11 -5

- networks/resize_lora.py +187 -50

- networks/svd_merge_lora.py +40 -18

- requirements.txt +2 -0

- tools/canny.py +24 -0

- tools/original_control_net.py +320 -0

- train_README-ja.md +936 -0

- train_db.py +47 -45

- train_db_README-ja.md +167 -0

- train_network.py +248 -175

- train_network_README-ja.md +269 -0

- train_network_opt.py +324 -373

- train_textual_inversion.py +72 -58

- train_ti_README-ja.md +105 -0

.gitattributes

ADDED

@@ -0,0 +1 @@

```txt
bitsandbytes_windows/libbitsandbytes_cuda116.dll filter=lfs diff=lfs merge=lfs -text
```
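The single rule above routes the CUDA DLL through Git LFS. In the gitattributes format, a line is a path pattern followed by attributes: `attr=value` sets a value, a bare `attr` sets it, `-attr` unsets it and `!attr` returns it to unspecified. The following is a minimal sketch of reading such a line. `parse_gitattributes_line` is a hypothetical helper, not part of Git or this repository, and it ignores quoted patterns and macro attributes.

```python
def parse_gitattributes_line(line):
    """Split a .gitattributes line into (pattern, {attribute: state}).

    States: a string for attr=value, True for set, False for unset (-attr),
    None for unspecified (!attr). Quoting and macros are not handled.
    """
    stripped = line.strip()
    # Blank lines and comments carry no rule.
    if not stripped or stripped.startswith("#"):
        return None
    pattern, *attrs = stripped.split()
    states = {}
    for attr in attrs:
        if attr.startswith("-"):
            states[attr[1:]] = False      # explicitly unset
        elif attr.startswith("!"):
            states[attr[1:]] = None       # back to unspecified
        elif "=" in attr:
            name, value = attr.split("=", 1)
            states[name] = value          # set to a value
        else:
            states[attr] = True           # set
    return pattern, states


rule = "bitsandbytes_windows/libbitsandbytes_cuda116.dll filter=lfs diff=lfs merge=lfs -text"
print(parse_gitattributes_line(rule))
```

Here `-text` unsets the `text` attribute, so Git performs no end-of-line conversion on the binary DLL. The `filter`, `diff` and `merge` values all hand the file to LFS.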

.github/workflows/typos.yml

ADDED

@@ -0,0 +1,21 @@

```yaml
---
# yamllint disable rule:line-length
name: Typos

on: # yamllint disable-line rule:truthy
  push:
  pull_request:
    types:
      - opened
      - synchronize
      - reopened

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v3

      - name: typos-action
        uses: crate-ci/typos@…
```

.gitignore

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

logs

|

| 2 |

+

__pycache__

|

| 3 |

+

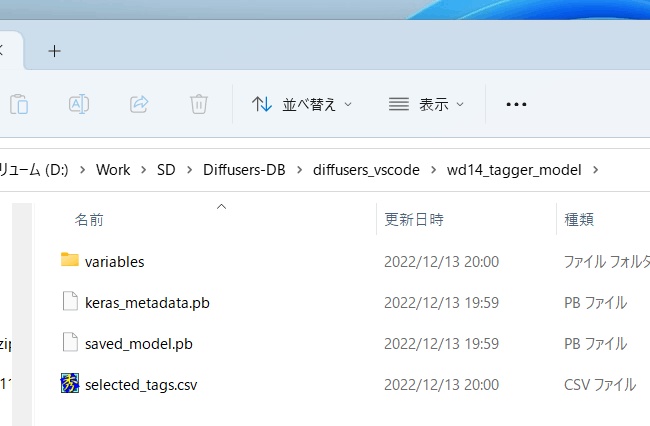

wd14_tagger_model

|

| 4 |

+

venv

|

| 5 |

+

*.egg-info

|

| 6 |

+

build

|

| 7 |

+

.vscode

|
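Every pattern in this .gitignore is a plain name or glob without a slash. Git matches such a pattern against a path component at any depth, and a matching directory excludes everything beneath it. The sketch below relies on that property only. It does not implement full gitignore rules: anchored paths, `!` negation, trailing-slash directory patterns and `**` are not handled. `is_ignored` is a hypothetical helper for checking which paths the file would exclude.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns copied from the .gitignore above (all slash-free).
IGNORE_PATTERNS = ["logs", "__pycache__", "wd14_tagger_model", "venv",
                   "*.egg-info", "build", ".vscode"]


def is_ignored(path, patterns=IGNORE_PATTERNS):
    """Return True if any component of `path` matches a slash-free pattern.

    Matching is case-sensitive, as in Git with core.ignorecase=false.
    """
    parts = PurePosixPath(path).parts
    return any(fnmatchcase(part, pat) for part in parts for pat in patterns)


for p in ["venv/Lib/site-packages/torch/__init__.py",
          "library/__pycache__/train_util.cpython-310.pyc",
          "sd_scripts.egg-info/PKG-INFO",
          "library/train_util.py"]:
    print(p, is_ignored(p))
```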

LICENSE.md

ADDED

@@ -0,0 +1,201 @@

```txt
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [2022] [kohya-ss]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
```
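The appendix asks for the boilerplate notice to be placed in the comment syntax of each file's format. For the Python scripts in this commit, that means `#` comments. `license_header` below is a hypothetical helper, not part of the repository. It renders the notice with the bracketed fields filled in, and with the brackets themselves dropped as the appendix instructs.

```python
def license_header(year, owner, comment_prefix="#"):
    """Render the Apache-2.0 boilerplate notice as comment lines.

    `year` and `owner` replace the bracketed fields of the appendix notice;
    `comment_prefix` selects the comment syntax (e.g. "#" or "//").
    """
    notice = [
        f"Copyright {year} {owner}",
        "",
        'Licensed under the Apache License, Version 2.0 (the "License");',
        "you may not use this file except in compliance with the License.",
        "You may obtain a copy of the License at",
        "",
        "http://www.apache.org/licenses/LICENSE-2.0",
        "",
        "Unless required by applicable law or agreed to in writing, software",
        'distributed under the License is distributed on an "AS IS" BASIS,',
        "WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.",
        "See the License for the specific language governing permissions and",
        "limitations under the License.",
    ]
    # rstrip() turns blank notice lines into a bare prefix with no trailing space.
    return "\n".join(f"{comment_prefix} {line}".rstrip() for line in notice) + "\n"


print(license_header("2022", "kohya-ss"))
```

The result is meant to be pasted at the top of a source file, ahead of the module docstring or imports.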

README-ja.md

ADDED

@@ -0,0 +1,147 @@

## About this repository

This repository contains scripts for Stable Diffusion training, image generation, and other tasks.

[README in English](./README.md) ← update information is available here

Features that make the scripts easier to use, such as a GUI and PowerShell scripts, are provided in [bmaltais's repository](https://github.com/bmaltais/kohya_ss) (in English), so please take a look at it as well. Thanks to bmaltais.

The following scripts are included.

* DreamBooth, with support for training both the U-Net and the Text Encoder
* Fine-tuning, likewise
* Image generation
* Model conversion (Stable Diffusion ckpt/safetensors to and from Diffusers)

## Usage

Guides are available in this repository and on note.com; please refer to them (they may all be moved here in the future).

* [Training, common guide](./train_README-ja.md) : data preparation, options, etc.
* [Dataset config](./config_README-ja.md)
* [DreamBooth training](./train_db_README-ja.md)
* [Fine-tuning guide](./fine_tune_README_ja.md):
* [LoRA training](./train_network_README-ja.md)
* [Textual Inversion training](./train_ti_README-ja.md)
* note.com [Image generation script](https://note.com/kohya_ss/n/n2693183a798e)
* note.com [Model conversion script](https://note.com/kohya_ss/n/n374f316fe4ad)

## Programs required on Windows

Python 3.10.6 and Git are required.

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe
- git: https://git-scm.com/download/win

If you use PowerShell, change the security settings as follows so that venv can be used.
(Be aware that this allows scripts in general to run, not only venv.)

- Open PowerShell as administrator.
- Enter `Set-ExecutionPolicy Unrestricted` and answer Y.
- Close the administrator PowerShell.

## Installation on Windows

The example below installs the PyTorch 1.12.1 / CUDA 11.6 build. If you use the CUDA 11.3 build or PyTorch 1.13, modify the commands as appropriate.

(If the `python -m venv ...` line only prints "python", change `python` to `py`, as in `py -m venv ...`.)

Open a regular (non-administrator) PowerShell and run the following in order.

```powershell
git clone https://github.com/kohya-ss/sd-scripts.git
cd sd-scripts

python -m venv venv
.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116
pip install --upgrade -r requirements.txt
pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py
cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config
```

<!--
pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117
pip install --use-pep517 --upgrade -r requirements.txt
pip install -U -I --no-deps xformers==0.0.16
-->

In the Command Prompt, the commands are as follows.

```bat
git clone https://github.com/kohya-ss/sd-scripts.git
cd sd-scripts

python -m venv venv
.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116
pip install --upgrade -r requirements.txt
pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

copy /y .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
copy /y .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py
copy /y .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config
```
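The PowerShell `cp` commands and the Command Prompt `copy /y` commands perform the same three copies. Each takes files from `bitsandbytes_windows` and places them into the bitsandbytes package installed in the venv. The sketch below does those steps with Python's `shutil`, so it does not depend on which shell you use. It assumes you run it from the `sd-scripts` directory and that the venv has the Windows layout `venv\Lib\site-packages` used in the commands. `patch_bitsandbytes` is a hypothetical name. Like `cp`, it does not create missing folders, so bitsandbytes must already be installed.

```python
import shutil
from pathlib import Path


def patch_bitsandbytes(repo_root=".", venv_dir="venv"):
    """Copy the Windows bitsandbytes files into the venv, overwriting existing ones.

    Returns the list of destination paths that were written.
    """
    root = Path(repo_root)
    src = root / "bitsandbytes_windows"
    dst = root / venv_dir / "Lib" / "site-packages" / "bitsandbytes"
    copied = []
    # Same as: cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
    for dll in sorted(src.glob("*.dll")):
        copied.append(shutil.copy2(dll, dst / dll.name))
    # cextension.py goes to the package root, main.py into cuda_setup/.
    copied.append(shutil.copy2(src / "cextension.py", dst / "cextension.py"))
    copied.append(shutil.copy2(src / "main.py", dst / "cuda_setup" / "main.py"))
    return [Path(p) for p in copied]
```

Usage, from the repository root with the venv already populated: `patch_bitsandbytes()`.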

(Note: The instructions were changed to ``python -m venv venv`` because it seems safer than ``python -m venv --system-site-packages venv``. If packages are installed in the global Python, the latter causes various problems.)
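The two commands in the note differ only in whether the environment can see the global interpreter's packages. Python's standard `venv` module provides the same switch as the `system_site_packages` argument of `venv.create()`, and records the choice in the environment's `pyvenv.cfg`. The sketch below creates a venv the way `python -m venv venv` does and reads that setting back. `make_isolated_env` is a hypothetical helper. `with_pip=False` is used only to keep the sketch fast; the command-line `python -m venv` also installs pip.

```python
import tempfile
import venv
from pathlib import Path


def make_isolated_env(env_dir):
    """Create a venv without access to global site-packages; return pyvenv.cfg settings."""
    venv.create(env_dir, system_site_packages=False, with_pip=False)
    settings = {}
    # pyvenv.cfg consists of "key = value" lines written by the venv module.
    for line in (Path(env_dir) / "pyvenv.cfg").read_text().splitlines():
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


with tempfile.TemporaryDirectory() as tmp:
    cfg = make_isolated_env(Path(tmp) / "venv")
    print(cfg["include-system-site-packages"])  # → false
```

With `system_site_packages=True`, which is equivalent to `--system-site-packages`, the same key records `true`. In that case packages from the global Python can leak into the environment.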

Answer the accelerate config questions as follows. (If you train with bf16, answer bf16 to the last question.)

※Since version 0.15.0, pressing the cursor keys to make a selection crashes in a Japanese-language environment (...). You can make selections with the number keys 0, 1, 2, ... instead, so please use those.

```txt
- This machine
- No distributed training
- NO
- NO
- NO
- all
- fp16
```

※In some cases, the error ``ValueError: fp16 mixed precision requires a GPU`` may appear. In that case, answer "0" to the sixth question (``What GPU(s) (by id) should be used for training on this machine as a comma-separated list? [all]:``). (The GPU with id `0` will be used.)

### About the PyTorch and xformers versions

Training may not work well with other versions. Unless you have a particular reason, please use the specified versions.

## Upgrade

When a new release is available, you can update with the following commands.

```powershell
cd sd-scripts
git pull
.\venv\Scripts\activate
pip install --use-pep517 --upgrade -r requirements.txt
```

If the commands succeed, you can use the new version.

## Acknowledgments

The LoRA implementation is based on [cloneofsimo's repository](https://github.com/cloneofsimo/lora). Many thanks.

The extension to Conv2d 3x3 was first released by [cloneofsimo](https://github.com/cloneofsimo/lora), and KohakuBlueleaf demonstrated its effectiveness with [LoCon](https://github.com/KohakuBlueleaf/LoCon). Deep thanks to KohakuBlueleaf.

## License

The scripts are licensed under ASL 2.0 (as is the code derived from Diffusers and cloneofsimo's repository), but some parts contain code under other licenses.

[Memory Efficient Attention Pytorch](https://github.com/lucidrains/memory-efficient-attention-pytorch): MIT

[bitsandbytes](https://github.com/TimDettmers/bitsandbytes): MIT

[BLIP](https://github.com/salesforce/BLIP): BSD-3-Clause

README.md

ADDED

@@ -0,0 +1,230 @@

This repository contains training, generation and utility scripts for Stable Diffusion.

[__Change History__](#change-history) has been moved to the bottom of the page.
(Japanese) The change history has been moved to the [end of the page](#change-history).

[Japanese README](./README-ja.md)

For easier use (GUI, PowerShell scripts, etc.), please visit [the repository maintained by bmaltais](https://github.com/bmaltais/kohya_ss). Thanks to @bmaltais!

This repository contains the scripts for:

* DreamBooth training, including U-Net and Text Encoder
* Fine-tuning (native training), including U-Net and Text Encoder
* LoRA training
* Textual Inversion training
* Image generation
* Model conversion (supports 1.x and 2.x, Stable Diffusion ckpt/safetensors and Diffusers)

__Stable Diffusion web UI now seems to support LoRA trained by ``sd-scripts``.__ (SD 1.x based only) Thank you for the great work!

| 21 |

+

## About requirements.txt

|

| 22 |

+

|

| 23 |

+

These files do not contain requirements for PyTorch. Because the versions of them depend on your environment. Please install PyTorch at first (see installation guide below.)

|

| 24 |

+

|

| 25 |

+

The scripts are tested with PyTorch 1.12.1 and 1.13.0, Diffusers 0.10.2.

|

| 26 |

+

|

| 27 |

+

## Links to how-to-use documents

|

| 28 |

+

|

| 29 |

+

All documents are in Japanese currently.

|

| 30 |

+

|

| 31 |

+

* [Training guide - common](./train_README-ja.md) : data preparation, options etc...

|

| 32 |

+

* [Dataset config](./config_README-ja.md)

|

| 33 |

+

* [DreamBooth training guide](./train_db_README-ja.md)

|

| 34 |

+

* [Step by Step fine-tuning guide](./fine_tune_README_ja.md):

|

| 35 |

+

* [training LoRA](./train_network_README-ja.md)

|

| 36 |

+

* [training Textual Inversion](./train_ti_README-ja.md)

|

| 37 |

+

* note.com [Image generation](https://note.com/kohya_ss/n/n2693183a798e)

|

| 38 |

+

* note.com [Model conversion](https://note.com/kohya_ss/n/n374f316fe4ad)

|

| 39 |

+

|

| 40 |

+

## Windows Required Dependencies

|

| 41 |

+

|

| 42 |

+

Python 3.10.6 and Git:

|

| 43 |

+

|

| 44 |

+

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe

|

| 45 |

+

- git: https://git-scm.com/download/win

|

| 46 |

+

|

| 47 |

+

Give unrestricted script access to powershell so venv can work:

|

| 48 |

+

|

| 49 |

+

- Open an administrator powershell window

|

| 50 |

+

- Type `Set-ExecutionPolicy Unrestricted` and answer A

|

| 51 |

+

- Close admin powershell window

## Windows Installation

Open a regular PowerShell terminal and type the following:

```powershell
git clone https://github.com/kohya-ss/sd-scripts.git
cd sd-scripts

python -m venv venv
.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116
pip install --upgrade -r requirements.txt
pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py
cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config
```

Update: `python -m venv venv` seems to be safer than `python -m venv --system-site-packages venv`, because some users have packages installed in their global Python environment.

Answers to accelerate config:

```txt
- This machine
- No distributed training
- NO
- NO
- NO
- all
- fp16
```

Note: Some users report that `ValueError: fp16 mixed precision requires a GPU` occurs during training. In this case, answer `0` to the 6th question:

`What GPU(s) (by id) should be used for training on this machine as a comma-separated list? [all]:`

(A single GPU with id `0` will be used.)

### About PyTorch and xformers

Other versions of PyTorch and xformers seem to have problems with training. Unless you have a specific reason to do otherwise, install the specified versions.
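Because the README asks for the exact versions used in the install commands, it can help to compare what is actually installed in the venv against those pins, for example after you install other packages into the same environment. Below is a minimal sketch that uses the standard-library `importlib.metadata`. The pin table is copied from the install commands above, and the helper names are hypothetical. pip may normalize the version string of a dev wheel differently, so treat a mismatch as a reason to check rather than as a hard error.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions taken from the install commands in this README.
PINNED = {
    "torch": "1.12.1+cu116",
    "torchvision": "0.13.1+cu116",
    "xformers": "0.0.14.dev0",
}


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


def find_mismatches(installed, pinned=PINNED):
    """Return {name: (installed, expected)} for every package not at its pin."""
    return {
        name: (installed.get(name), expected)
        for name, expected in pinned.items()
        if installed.get(name) != expected
    }


if __name__ == "__main__":
    problems = find_mismatches(installed_versions(PINNED))
    for name, (found, expected) in problems.items():
        print(f"{name}: found {found}, expected {expected}")
    if not problems:
        print("All pinned versions match.")
```

Run it inside the activated venv (`.\venv\Scripts\activate`) so that it inspects the right `site-packages`.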

## Upgrade

When a new release comes out, you can upgrade your repo with the following commands:

```powershell
cd sd-scripts
git pull
.\venv\Scripts\activate
pip install --use-pep517 --upgrade -r requirements.txt
```

Once the commands have completed successfully, you should be ready to use the new version.

## Credits

The implementation for LoRA is based on [cloneofsimo's repo](https://github.com/cloneofsimo/lora). Thank you for the great work!

The LoRA expansion to Conv2d 3x3 was initially released by cloneofsimo, and its effectiveness was demonstrated in [LoCon](https://github.com/KohakuBlueleaf/LoCon) by KohakuBlueleaf. Thank you so much, KohakuBlueleaf!

## License

The majority of the scripts are licensed under ASL 2.0 (including code from Diffusers, cloneofsimo's repo and LoCon). However, portions of the project are available under separate license terms:

[Memory Efficient Attention Pytorch](https://github.com/lucidrains/memory-efficient-attention-pytorch): MIT

[bitsandbytes](https://github.com/TimDettmers/bitsandbytes): MIT

[BLIP](https://github.com/salesforce/BLIP): BSD-3-Clause

## Change History

- 11 Mar. 2023, 2023/3/11:
  - Fix `svd_merge_lora.py` causing a device-related error.

- 10 Mar. 2023, 2023/3/10: release v0.5.1
  - Fix the LoRA modules in the model so that they match previous versions (before v0.5.0) when Conv2d-3x3 is disabled (no `conv_dim` arg, the default).
    - Conv2d layers with kernel size 1x1 in ResNet modules were accidentally included in v0.5.0.
    - Models trained with v0.5.0 should still work with the Web UI's built-in LoRA and the Additional Networks extension.
  - Fix an issue where the dim (rank) of a LoRA module was limited to the in/out dimensions of the target Linear/Conv2d (when dim > 320).
  - `resize_lora.py` now has a `dynamic resizing` feature, so each LoRA module can have a different rank (dim) after resizing. Thanks to mgz-dev for this great work!
    - The appropriate rank is selected automatically based on the complexity of each module, using an algorithm specified in the command line arguments. For details: https://github.com/kohya-ss/sd-scripts/pull/243
  - Multi-GPU training is finally supported in `train_network.py`. Thanks to ddPn08 for solving this long-standing issue!
  - Datasets using the fine-tuning method (with a metadata JSON file) now work without images if `.npz` files exist. Thanks to rvhfxb!
  - `train_network.py` now works even if the current directory is not the directory containing the script. Thanks to mio2333!
  - Fix `extract_lora_from_models.py` and `svd_merge_lora.py` not working with higher ranks (>320).

- 9 Mar. 2023, 2023/3/9: release v0.5.0
  - There may be problems due to major changes. If you would not be able to revert to the previous version when problems occur, please hold off on updating for a while.
  - Minimum metadata (module name, dim, alpha and network_args) is now recorded even with `--no_metadata`, issue https://github.com/kohya-ss/sd-scripts/issues/254
  - `train_network.py` supports LoRA for Conv2d-3x3 (the target range is extended to Conv2d layers with a kernel size other than 1x1).
    - The specification is the same as the current version of [LoCon](https://github.com/KohakuBlueleaf/LoCon). __Thank you very much KohakuBlueleaf for your help!__
    - LoCon will be enhanced in the future. Compatibility with those future versions is not guaranteed.
    - Specify the `--network_args` option like this: `--network_args "conv_dim=4" "conv_alpha=1"`
    - [Additional Networks extension](https://github.com/kohya-ss/sd-webui-additional-networks) version 0.5.0 or later is required to use 'LoRA for Conv2d-3x3' in Stable Diffusion web UI.
    - __Stable Diffusion web UI's built-in LoRA does not currently support 'LoRA for Conv2d-3x3'. Consider carefully whether or not to use it.__
  - The merging and extracting scripts also support LoRA for Conv2d-3x3.
  - Free CUDA memory after sample generation to reduce VRAM usage, issue https://github.com/kohya-ss/sd-scripts/issues/260
  - Empty captions no longer cause an error, issue https://github.com/kohya-ss/sd-scripts/issues/258
  - Fix sample generation crashing in Textual Inversion training when using templates, or when height/width is not divisible by 8.
  - Update documents (Japanese only).

- Sample image generation:

  A prompt file might look like this, for example:

  ```
  # prompt 1
  masterpiece, best quality, 1girl, in white shirts, upper body, looking at viewer, simple background --n low quality, worst quality, bad anatomy,bad composition, poor, low effort --w 768 --h 768 --d 1 --l 7.5 --s 28

  # prompt 2
  masterpiece, best quality, 1boy, in business suit, standing at street, looking back --n low quality, worst quality, bad anatomy,bad composition, poor, low effort --w 576 --h 832 --d 2 --l 5.5 --s 40
  ```

  Lines beginning with `#` are comments. Options for the generated image are written after the prompt as two hyphens followed by a lowercase letter, such as `--n`. The following options are available:

  * `--n` Negative prompt, up to the next option.
  * `--w` Width of the generated image.
  * `--h` Height of the generated image.
  * `--d` Seed of the generated image.
  * `--l` CFG scale of the generated image.
  * `--s` Number of steps in the generation.

  Prompt weighting such as `( )` and `[ ]` does not work.
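The prompt-file format described above can be parsed in a few lines. This is a minimal sketch under a few assumptions: options are separated from the prompt by ` --`, and each option is a single lowercase letter followed by whitespace and its value. The function `parse_prompt_line` and the returned key names are hypothetical. The parsing inside sd-scripts may differ, for example in how it handles malformed values.

```python
import re

# One-letter option -> (result key, converter). Key names are illustrative.
OPTIONS = {
    "n": ("negative_prompt", str),
    "w": ("width", int),
    "h": ("height", int),
    "d": ("seed", int),
    "l": ("scale", float),
    "s": ("steps", int),
}


def parse_prompt_line(line):
    """Parse one prompt-file line; return None for blank lines and comments."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    # Everything before the first " --" is the prompt itself.
    parts = line.split(" --")
    result = {"prompt": parts[0].strip()}
    for part in parts[1:]:
        match = re.match(r"([a-z])\s+(.*)", part.strip())
        if match is None or match.group(1) not in OPTIONS:
            continue  # skip unknown or malformed options
        key, convert = OPTIONS[match.group(1)]
        result[key] = convert(match.group(2).strip())
    return result
```

For the `# prompt 2` line above, this sketch yields width 576, height 832, seed 2, CFG scale 5.5 and 40 steps. Weighting syntax such as `( )` stays in the prompt as plain text, which matches the note that weighting does not work.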

Please read [Releases](https://github.com/kohya-ss/sd-scripts/releases) for recent updates.
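The v0.5.0 change-history entries pass the Conv2d-3x3 settings as `--network_args "conv_dim=4" "conv_alpha=1"`. The sketch below shows one way to turn such `key=value` strings into a dictionary, and it estimates what a rank-`conv_dim` LoRA pair adds to a 3x3 convolution. It makes two assumptions for illustration. First, it assumes a LoCon-style layout: a 3x3 "down" convolution from `in_ch` to `rank` channels, followed by a 1x1 "up" convolution to `out_ch`, with no bias. Second, it assumes a branch scale of `alpha / rank`. The helper names are hypothetical and are not functions in `networks/lora.py`.

```python
def parse_network_args(args):
    """Turn ["conv_dim=4", "conv_alpha=1"] into {"conv_dim": "4", "conv_alpha": "1"}.

    Values stay strings; callers convert them as needed.
    """
    kwargs = {}
    for arg in args:
        key, sep, value = arg.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {arg!r}")
        kwargs[key.strip()] = value.strip()
    return kwargs


def conv3x3_lora_params(in_ch, out_ch, rank, kernel=3):
    """Parameters of a down (kernel x kernel) + up (1x1) LoRA pair, no bias."""
    down = in_ch * rank * kernel * kernel
    up = rank * out_ch
    return down + up


def lora_scale(alpha, rank):
    """Multiplier on the LoRA branch output (assumed to be alpha / rank)."""
    return alpha / rank


if __name__ == "__main__":
    kw = parse_network_args(["conv_dim=4", "conv_alpha=1"])
    rank, alpha = int(kw["conv_dim"]), float(kw["conv_alpha"])
    print(conv3x3_lora_params(320, 320, rank), lora_scale(alpha, rank))
```

Under these assumptions, `conv_dim=4` on a hypothetical 320-to-320 channel 3x3 convolution adds 12,800 parameters (320 x 4 x 9 + 4 x 320). The full 3x3 kernel has 921,600 parameters, and the branch output is scaled by 1/4 = 0.25.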

append_module.py

CHANGED

@@ -2,7 +2,19 @@ import argparse

import json
import shutil
import time
-from typing import
from accelerate import Accelerator
from torch.autograd.function import Function
import glob

@@ -28,6 +40,7 @@ import safetensors.torch

import library.model_util as model_util
import library.train_util as train_util

#============================================================================================================
#AdafactorSchedule modified so that initial_lr can provisionally be applied per layer

@@ -115,6 +128,124 @@ def make_bucket_resolutions_fix(max_reso, min_reso, min_size=256, max_size=1024,

return area_size_resos_list, area_size_list

#============================================================================================================
#from train_util
#============================================================================================================
class BucketManager_append(train_util.BucketManager):

@@ -179,7 +310,7 @@ class BucketManager_append(train_util.BucketManager):

bucket_size_id_list.append(bucket_size_id + i + 1)
_min_error = 1000.
_min_id = bucket_size_id
-for now_size_id in
self.predefined_aspect_ratios = self.predefined_aspect_ratios_list[now_size_id]
ar_errors = self.predefined_aspect_ratios - aspect_ratio
ar_error = np.abs(ar_errors).min()

@@ -253,13 +384,13 @@ class BucketManager_append(train_util.BucketManager):

return reso, resized_size, ar_error

class DreamBoothDataset(train_util.DreamBoothDataset):
-def __init__(self,
print("use append DreamBoothDataset")
self.min_resolution = min_resolution
self.area_step = area_step
-super().__init__(
-
-
def make_buckets(self):
'''
Must be called even when bucketing is not used (creates a single bucket)

@@ -352,40 +483,50 @@ class DreamBoothDataset(train_util.DreamBoothDataset):

self.shuffle_buckets()
self._length = len(self.buckets_indices)

-
-
-
-
-
-
-
-
-
-
-
-
-
-dots.append(".npz")
-for ext in dots:
-if base == '*':
-img_paths.extend(glob.glob(os.path.join(glob.escape(directory), base + ext)))
-else:
-img_paths.extend(glob.glob(glob.escape(os.path.join(directory, base + ext))))
-return img_paths
-
#============================================================================================================
#networks.lora
#============================================================================================================
-from networks.lora import LoRANetwork
-def replace_prepare_optimizer_params(networks):
-def prepare_optimizer_params(self, text_encoder_lr, unet_lr,
-
def enumerate_params(loras, lora_name=None):
params = []
for lora in loras:
if lora_name is not None:
-
-
else:
params.extend(lora.parameters())
return params

@@ -393,6 +534,7 @@ def replace_prepare_optimizer_params(networks):

self.requires_grad_(True)
all_params = []
ret_scheduler_lr = []

if loranames is not None:
textencoder_names = [None]

@@ -405,37 +547,181 @@ def replace_prepare_optimizer_params(networks):

if self.text_encoder_loras:
for textencoder_name in textencoder_names:
param_data = {'params': enumerate_params(self.text_encoder_loras, lora_name=textencoder_name)}
if text_encoder_lr is not None:
param_data['lr'] = text_encoder_lr
-
-
all_params.append(param_data)

if self.unet_loras:
for unet_name in unet_names:
param_data = {'params': enumerate_params(self.unet_loras, lora_name=unet_name)}
if unet_lr is not None:
param_data['lr'] = unet_lr
-
-
all_params.append(param_data)

-return all_params, ret_scheduler_lr
-
-